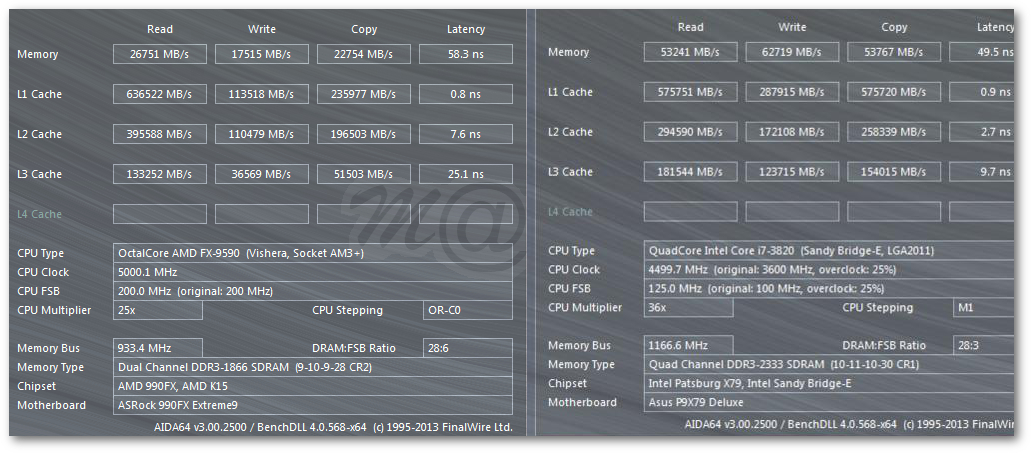

Ignoring decode, predecode, execution, scheduling and retirement... AMD seems to have a lot of sound designs, but they are usually held back by their poor cache and memory performance. If AMD could improve that, I think it would bring them much closer to where they need to be.

I'm also worried about their chipset. Rumors say ASMedia is designing it, but rumors also say there have been delays/issues. Not only does AMD have to execute their CPU well, but they also have to execute their chipset well. This is an area where I think AMD could provide an advantage over Intel at the same price point, at least in the mainstream market.

There's a lot riding on GlobalFoundries' 14nm process. So far it doesn't seem to be that great, but hopefully it'll mature a bit between now and the Zen launch. I can only wonder what Zen on IBM's (now GF's) 22nm FDSOI would look like...

Fetch / Predictors -> Cache -> Memory

In my view, this is where the AMD Zen uarch will make or break. I don't doubt the raw execution power; the question is how effective the local trace caches are at relieving bottlenecks at every stage.

L0/L1/L2 are crucial for desktop/mobile.

L3/memory are crucial for server/HPC.

As for the process, I expect that is why Lisa Su has delayed mass availability to Q1 2017, which I believe is a good move for competitiveness.

Forbes: "She's expecting even bigger gains when the company's newest line of high-end computer chips, dubbed Zen, goes on sale next year. 'It's a nice way for us to really increase our reach,' says the CEO, who is more fond of understatement than bold pronouncements."

(Opinions are my own)