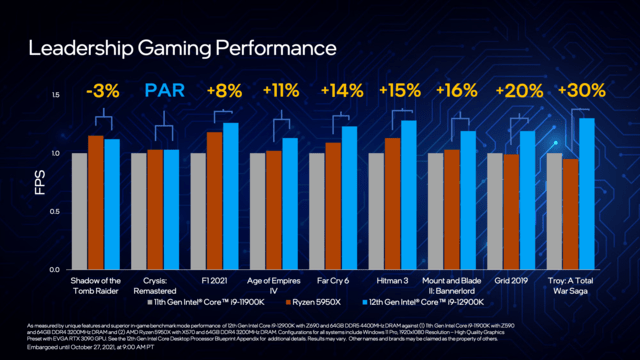

Those gaming scores are interesting.

Questions though.

DDR4 or DDR5? JEDEC spec or tighter timings/higher speeds?

W11? Before or after the L3 cache issue with Zen 3 was fixed?

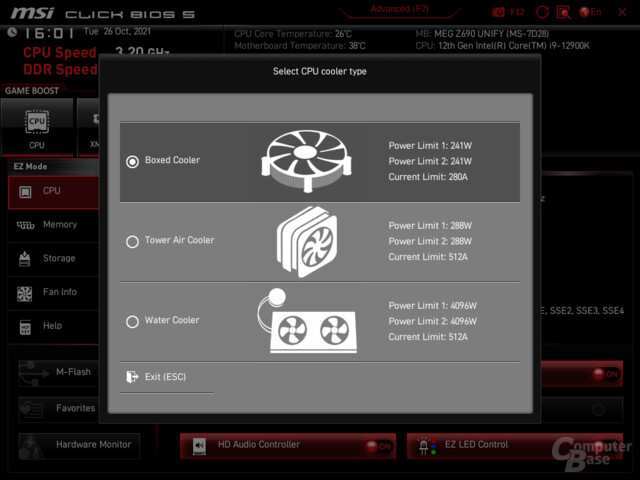

TDP and tau settings? Intel spec or motherboard spec?

I foresee a lot of variance in the results come review day. AnandTech, who use JEDEC specs and Intel TDP/tau specs, may show considerably worse results than HUB, who will likely run above-spec RAM and let the motherboard dictate power and tau limits. GN, who will likely run above-spec RAM but stick to Intel-spec power and tau limits, will be an interesting middle ground.

On top of that, there's W10 vs W11 testing: will reviewers stick to a single OS for reviews, or will they choose the best-performing one for each platform?

Also this:

E-cores disabled or enabled?