tamz_msc

Diamond Member

- Jan 5, 2017


LOL, you guys discussing "Mark PC" YT benchmark videos are in for a surprise. That channel is fake.

What are your preferred values for those settings, and how much performance do you lose to keep systems stable and reliable over a long period of time?

Intel XTU? Is it able to override whatever is set in the BIOS, meaning does it change the values in the CPU's firmware, or does it run at Windows startup to set the values?

The Intel utility indicated that in all-core workloads I was power-limited and throttled down to about 3.4 GHz after exhausting PL2.
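For anyone unfamiliar with "exhausting PL2": on Intel desktop chips, PL1 is the sustained power limit, PL2 the short-term turbo limit, and Tau the time window over which a running average of package power is compared against PL1. Below is a minimal Python sketch of that behavior, assuming a simplified exponentially weighted moving average; real firmware is more involved, the function and its parameters are hypothetical (not an XTU or BIOS API), and it models power only, not the resulting clock speed.

```python
import math
from typing import List, Sequence


def simulate_power_limits(
    demand_w: Sequence[float],
    pl1_w: float,
    pl2_w: float,
    tau_s: float,
    dt_s: float = 1.0,
) -> List[float]:
    """Return the package power granted at each time step (hypothetical model).

    Simplified assumption: power may rise up to PL2 while an exponentially
    weighted moving average of granted power (time constant tau_s) is below
    PL1; once that average reaches PL1, power is clamped to PL1.
    """
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # EWMA weight for one step of dt_s
    running_avg = 0.0
    granted = []
    for want in demand_w:
        limit = pl2_w if running_avg < pl1_w else pl1_w
        power = min(want, limit)
        granted.append(power)
        running_avg += alpha * (power - running_avg)  # update the moving average
    return granted


if __name__ == "__main__":
    # Illustrative inputs only: a constant all-core load asking for more than PL1.
    trace = simulate_power_limits([200.0] * 120, pl1_w=125.0, pl2_w=200.0, tau_s=56.0)
    boost_seconds = sum(1 for p in trace if p > 125.0)
    print(f"ran above PL1 for {boost_seconds} s, then held at {trace[-1]:.0f} W")
```

In this toy model, a sustained load runs at PL2 until the running average catches up with PL1 and then stays pinned at PL1, which is the point where clocks drop in an all-core workload.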


XTU does not modify the BIOS. It runs in Windows, and yes, it applies the settings at startup if you want the changes to stick, but XTU itself doesn't have to run, just XTUservice.exe, which uses very few resources.

I don't know about 5 GHz, but how about 4.7 GHz?

So, anyone with a 5+ GHz Zen 3 to compare? Based on some people's posts, it must be super efficient at this clock speed.

www.kitguru.net


Questions:

1. Do you think that Golden Cove will have higher IPC (throughput) than Zen 3?

2. At lower frequencies (~3500-4000 MHz), do you think Golden Cove will have similar efficiency? Meaning, will they do approximately the same amount of work using the same number of joules?

3. If you think #2 will be true, do you think that at some frequency beyond 4000 MHz, Zen 3 will scale more efficiently with frequency than Golden Cove from an energy consumption point of view? Meaning, will Golden Cove's energy consumption begin to increase at a faster rate than Zen 3's as frequency scales beyond 4000+ MHz?

If you believe #3 is false, i.e. they will scale approximately the same in power as frequency increases, yet Zen 3 tops out at a lower frequency (and power) than Golden Cove, is it fair to say Golden Cove is less efficient than Zen 3 because it can reach higher frequencies at the expense of huge power draw?
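Questions 2 and 3 come down to energy per unit of work rather than peak power. A minimal Python sketch, assuming you have measured average package power and runtime for the same fixed workload on each chip (the helper names are hypothetical):

```python
def energy_joules(avg_power_w: float, runtime_s: float) -> float:
    """Energy used by one run of a workload: average power (W) x runtime (s)."""
    return avg_power_w * runtime_s


def work_per_joule(work_units: float, avg_power_w: float, runtime_s: float) -> float:
    """Efficiency as work completed per joule; higher is better."""
    return work_units / energy_joules(avg_power_w, runtime_s)


if __name__ == "__main__":
    # Illustrative numbers only: chip B draws 1.5x the power but finishes in 2/3 the time.
    a = work_per_joule(1.0, avg_power_w=100.0, runtime_s=60.0)
    b = work_per_joule(1.0, avg_power_w=150.0, runtime_s=40.0)
    print(f"A: {a:.6f} tasks/J, B: {b:.6f} tasks/J")  # equal: both spend 6000 J
```

This is why a chip that draws more power is not automatically less efficient: what matters is power multiplied by the time it takes to finish the same job.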

The expectations here seem very low, so I'd be surprised if Alder Lake does not exceed that low bar. The benchmarks coming out of China look rather promising. Power efficiency does not look promising for the desktop chips, but who cares about that on a desktop? Not me...

Here's hoping that Alder Lake outperforms expectations. Zen 3 will win on power consumption regardless. I want AMD to be like AMD in the recent past: the price/performance leader. I want a 5800X, and my strike price is $250-$275. If Alder Lake is really good on performance, AMD will do what they do best: cheap CPUs. I got my 3600 for $172 and thought that was a decent value. I just picked up a B450 simply for one last upgrade until DDR5. The cost savings (B450) go to a 5800X, or the equivalent if AMD comes out with a Zen 3 refresh CPU. I got the P31 1TB NVMe drive, so I have no need for PCIe 4.0.

I will sit back for the next 2 or 3 years watching the DDR5 market.

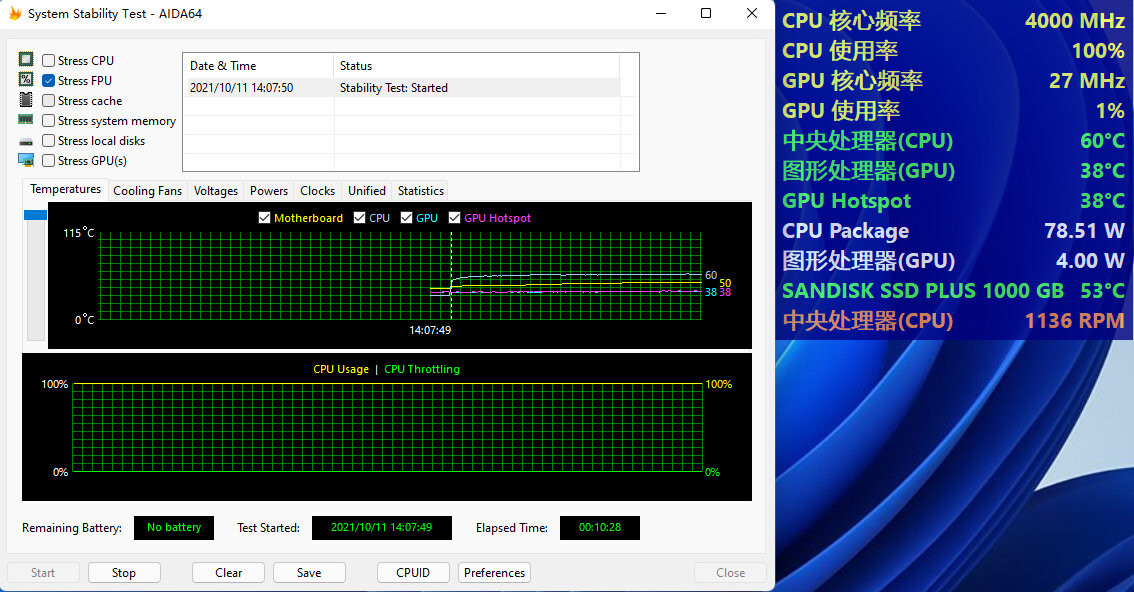

At those frequencies, core for core, I'm expecting GC to be more power-efficient. Package power of 78.5 W running AIDA Stress FPU at 6x4 GHz is pretty good (my undervolted 8-core Tiger Lake at 3.4 GHz needs 56 W for Stress FPU and 49 W for CB R20).

2. At lower frequencies (~3500-4000 MHz), do you think Golden Cove will have similar efficiency? Meaning, will they do approximately the same amount of work using the same number of joules?
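The package-power figures in that reply can be normalized per core for a rough side-by-side. This is a sketch, not an efficiency verdict: it assumes "6x4 GHz" means six cores at 4 GHz, package power also includes uncore, the chips run at different clocks, and none of these numbers measure work done per clock.

```python
# Figures quoted in the post: (package watts, active cores, GHz).
# Assumption: "6x4 GHz" is read as six cores running at 4 GHz.
READINGS = {
    "Golden Cove, AIDA Stress FPU": (78.5, 6, 4.0),
    "Tiger Lake (undervolted), AIDA Stress FPU": (56.0, 8, 3.4),
    "Tiger Lake (undervolted), CB R20": (49.0, 8, 3.4),
}


def watts_per_core(package_w: float, cores: int) -> float:
    """Package power split evenly across active cores (includes an uncore share)."""
    return package_w / cores


def watts_per_core_ghz(package_w: float, cores: int, ghz: float) -> float:
    """Package power per core per GHz of clock speed."""
    return package_w / (cores * ghz)


if __name__ == "__main__":
    for name, (watts, cores, ghz) in READINGS.items():
        print(
            f"{name}: {watts_per_core(watts, cores):.2f} W/core, "
            f"{watts_per_core_ghz(watts, cores, ghz):.2f} W per core-GHz"
        )
```

Dynamic power grows faster than linearly with clock speed, since higher clocks need higher voltage, so figures taken at 4 GHz and 3.4 GHz are not directly comparable.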

Yes. It's clearly a wider architecture.

No.

I think that, just like with Tiger Lake, Zen will win on perf/watt against Golden Cove in all frequency ranges, but because Intel optimizes for higher clocks, the gap might shrink.

In the end, Golden Cove is designed for higher frequencies, so comparing against Zen 3 running at 5.3 GHz makes no sense, since Zen 3 shouldn't reach that high anyway. Its power draw would blow up because it's way outside its design target, just like LN2-cooled chips needing 1.6 V to set overclocking records. I bet you could get a Gracemont core to 5 GHz too. It just wouldn't make sense, since that's way outside its design frequency range: not only would it require ridiculous voltage to get there, it would also be less efficient...

Yes, Intel has designed for higher frequencies, but I would assume they would rather have more cores able to perform the same work at lower frequencies. They simply cannot do this because of the size of the GC cores and the ring bus design. I would consider Comet Lake for sure, and Rocket Lake in most cases, to be a loss to Zen 3, since they have equal or lower performance in both single- and multi-threaded workloads while using more power. If AL can take back the single-thread lead while giving similar multi-thread performance, it will at least offer some advantages in exchange for the higher power consumption.

I find your answer contradictory. In simple terms, what would prevent Zen 3 from running all cores at 5 GHz, from the 5600X to the 5950X? Short answer: POWER!

That means Zen 3 will simply stop scaling with power at a certain point beyond 4 GHz, while GC will blow past 5 GHz with ease. So how can you claim better efficiency for Zen 3 "at all frequencies"?

As you've aptly stated, Intel's willingness to tap into higher wattage for higher frequencies is deliberate. It is simply a strategy of fighting many 'slower' cores with fewer cores. Operating at such high frequencies comes with a certain sacrifice in efficiency. If we understand this going forward, we won't make blanket statements when we know very well that Zen 3 can't even run at 5.3 GHz without exotic cooling.

If we look at the power comparison between Zen 3 and Tiger Lake by Anandtech, I believe WC (Willow Cove) maintained linear efficiency scaling beyond 90 W and showed no sign of waning. Zen 3, on the other hand, could only maintain linear scaling up to around 70-75 W iirc, so by around the 90 W mark it had gradually dipped to the same level as WC. Meaning, from that point forward, WC would demonstrate better efficiency than Zen 3.
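That argument reads two things off a score-vs-power-limit chart: where each chip stops scaling linearly, and where their efficiency curves meet. A minimal sketch of both, assuming you have (power limit in W, benchmark score) pairs for each chip measured at the same power limits; no data from the chart is reproduced here, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (power limit in W, benchmark score)


def marginal_gain(samples: Sequence[Sample]) -> List[Tuple[float, float]]:
    """Score gained per extra watt between consecutive power limits.

    A roughly constant value means near-linear scaling; a falling value
    means the chip is running out of headroom.
    """
    gains = []
    for (p0, s0), (p1, s1) in zip(samples, samples[1:]):
        gains.append((p1, (s1 - s0) / (p1 - p0)))
    return gains


def first_crossover(chip_a: Sequence[Sample], chip_b: Sequence[Sample]) -> Optional[float]:
    """First power limit at which chip A's score per watt is no better than chip B's.

    Both sequences must be sorted by power and sampled at the same limits.
    Returns None if chip A stays ahead at every sampled limit.
    """
    for (pa, sa), (pb, sb) in zip(chip_a, chip_b):
        if pa != pb:
            raise ValueError("chips must be sampled at the same power limits")
        if sa / pa <= sb / pb:
            return pa
    return None
```

At equal power limits, comparing score per watt reduces to comparing scores, so the crossover is simply where the two curves meet; `marginal_gain` is what shows one curve flattening while the other keeps climbing.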

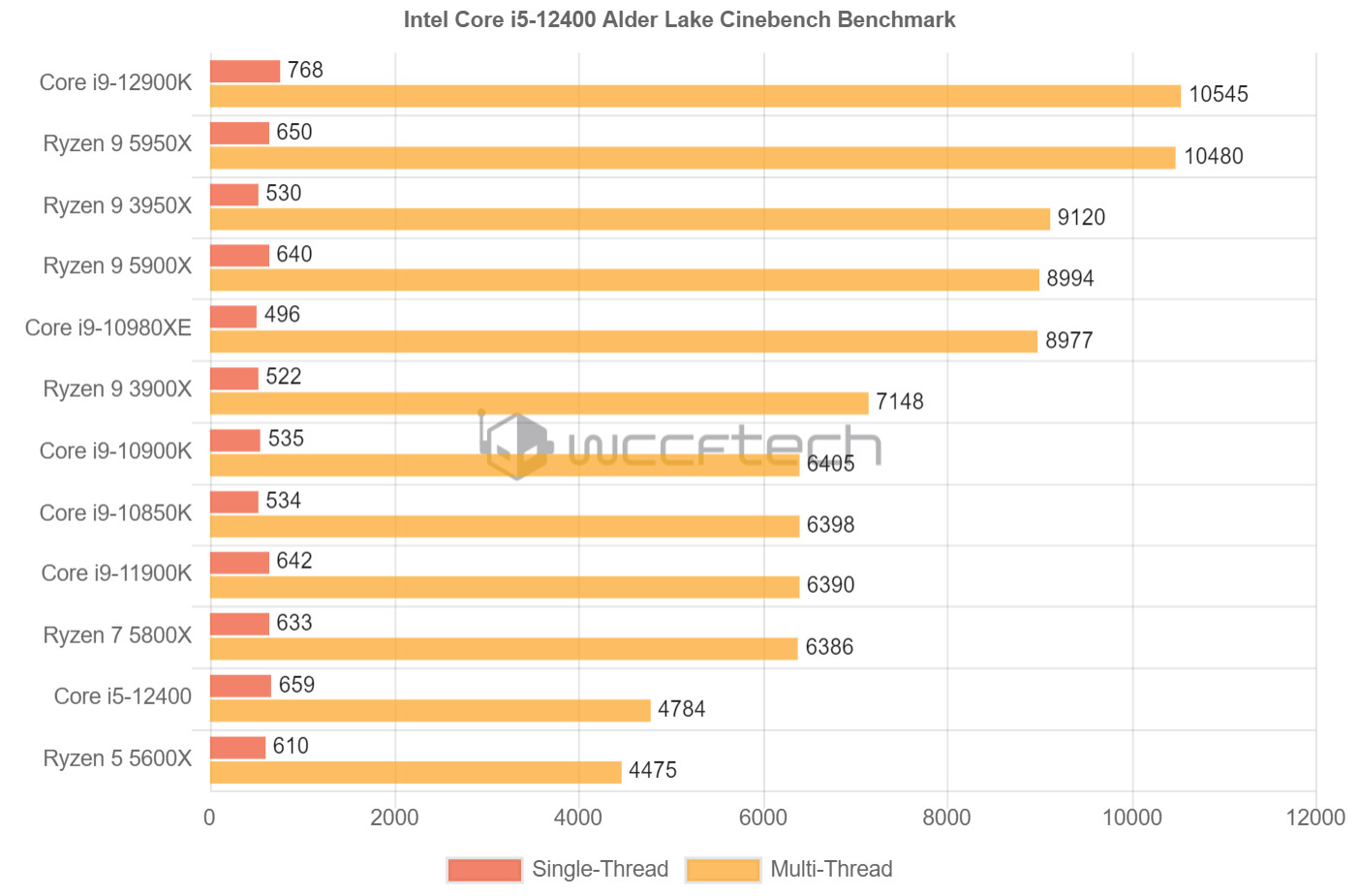

As @Accord99 has indicated, the GC chip best suited to demonstrate the efficiency of the GC architecture is the i5 12400, with 6C/12T just like the 5600X. Preliminary results show it beating the 5600X with the same cores and threads, clocked a tad lower, and using less energy to do so. So, I'm looking forward to all the reviews and praying Intel throws a surprise 12400 into the mix just to highlight this point. In any case, the 12600K is not as aggressively clocked as the 12900K, so maybe we'll see some glimpses of GC efficiency with the less aggressively clocked chips.


Edit:

The power scaling analysis was done by TechSpot, not Anandtech: https://www.techspot.com/review/2262-intel-core-i7-11800h/

That gaming performance looks quite... interesting for Intel's own benchmarks. It will probably be even worse in third-party reviews.

Looks good. People who said Intel would keep its pricing structure similar to before were right; I was expecting something else. Can't wait for in-depth reviews.

ADL-S pricing leaks?

i9 12900K - $589

i9 12900KF - $564

i7 12700K - $409

i7 12700KF - $384

i5 12600K - $289

i5 12600KF - $264

Intel 12th Gen Core "Alder Lake-S" final specifications and pricing leak ahead of launch - VideoCardz.com

Intel Alder Lake will be more expensive than Rocket Lake We finally have the full specifications and pricing of the upcoming desktop series. Intel Core i9-12900K featuring 16 cores (8 Performance and 8 Efficient) and 24 threads will carry a boost clock up to 5.2 GHz, there is no Thermal...videocardz.com
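Taking the leaked, unofficial prices above at face value, the KF variants (the F suffix means no integrated graphics) come in $25 below their K counterparts in every tier. A small sketch of that arithmetic, including the saving as a share of the K price; the dictionary and helper name are hypothetical.

```python
# Leaked, unofficial launch prices in USD, as listed above.
LEAKED_USD = {
    "i9 12900K": 589, "i9 12900KF": 564,
    "i7 12700K": 409, "i7 12700KF": 384,
    "i5 12600K": 289, "i5 12600KF": 264,
}


def kf_discount(prices: dict, k_model: str) -> tuple:
    """Return (dollar saving, percent saving) of the KF part versus the K part."""
    k_price = prices[k_model]
    kf_price = prices[k_model + "F"]
    saving = k_price - kf_price
    return saving, 100.0 * saving / k_price


if __name__ == "__main__":
    for model in ("i9 12900K", "i7 12700K", "i5 12600K"):
        usd, pct = kf_discount(LEAKED_USD, model)
        print(f"{model}F saves ${usd} ({pct:.1f}% of the {model} price)")
```

A flat dollar gap means the relative saving grows as you move down the stack.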

