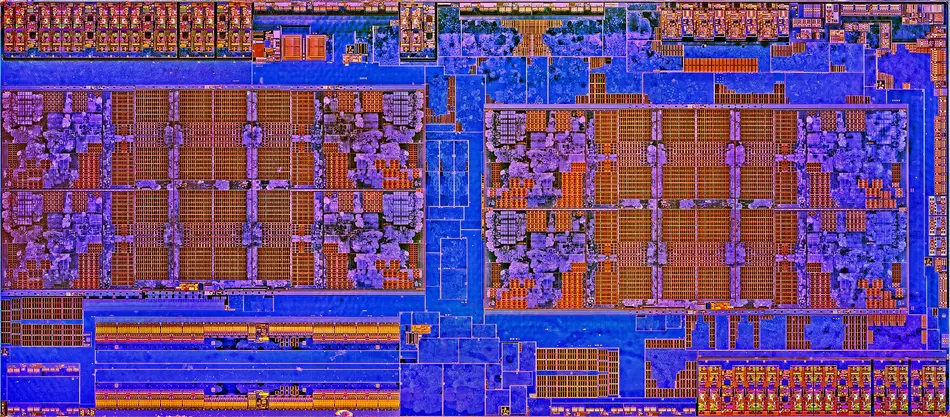

This is the direct quote from the Software Optimization Guide for the AMD Zen5 Microarchitecture published in August 2024, revision 1.00:

So I took it to mean that the setup is asymmetrical, since they underline only the first slot, not any slot. Of course, I might have read it too literally, but in that case I find the wording confusing. Still, it would be a waste to put in more "complex" decoders if only the first slot does the "complex" decoding, unless this is being muxed for some purpose.

That isn't a split between simple and complex instructions; complex instructions can be quite short too. There's really no point in making the decode fetch matrix wider to allow decoding those overly long instructions simultaneously, since there isn't the fetch bandwidth or mop extraction bandwidth to support those kinds of instruction combinations anyway.