DrMrLordX, Lifer (joined Apr 27, 2000)

AMD has always prioritized mobile over desktop with its APUs

Maybe from a design perspective, but not from an availability perspective. It was much easier to get Kaveri on desktop than on mobile.


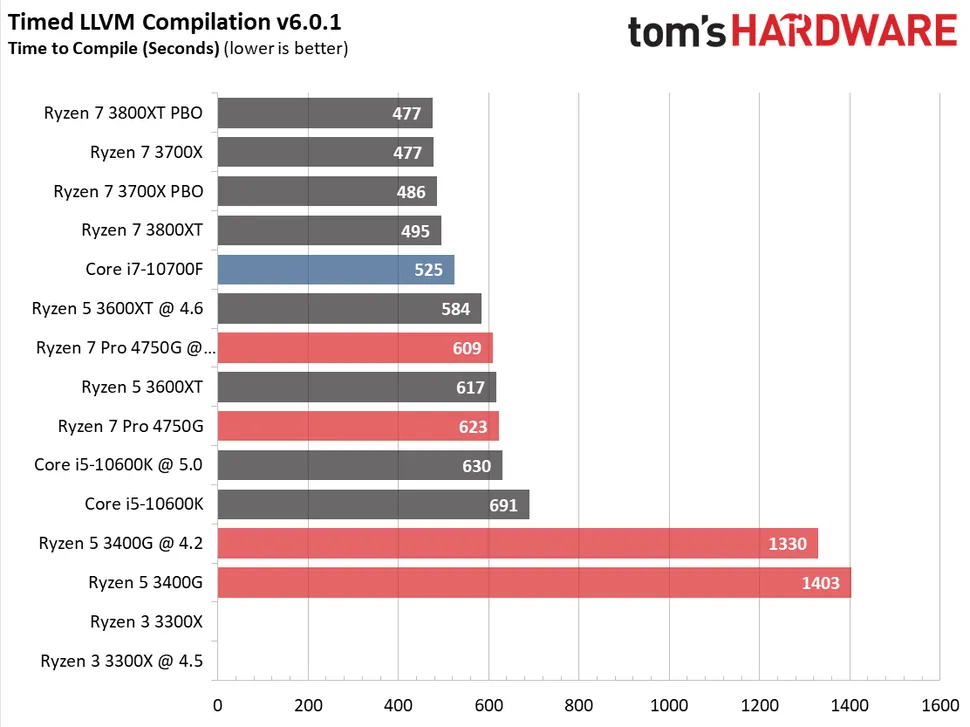

I think no one is arguing that the 4750G is better than the 3400G in all aspects. Since there was no Ryzen 7 on Picasso, there is really nothing to compare it with. That doesn't mean it makes sense at the $309 price point, outside some niche use cases.

OK, let's just forget the fact that you get four more CPU cores that are better than the ones in the 3400G. In MT, that Renoir was a full 225% faster than the 3400G. I know you are focused on iGPU performance, but there are other things to consider. If they had more fab capacity, maybe we'd see a cheaper 4C, 8CU chip with high iGPU clocks. I have a feeling that with the next-gen consoles around the corner, they don't have much room for a SKU like that.

The cache has to affect more than gaming performance; they don't put cache in there just for games. Benchmarks are a bad way to test this. Calling the Zen 2 cache "gaming cache" was a bad marketing move.

Lower performance than Zen 2, despite being Zen 2. I get it, you meant gaming performance. I don't expect a 4300G to be similar iGPU-wise to a 2400G. Zen 3 CPUs are near, but Zen 3 APUs? If anything, Cezanne looks to be a loser with 8 Vega (still!?) CUs. Other, faster 4/8 CPUs, but with a crap iGPU.

Maybe 2-3 years ago the 4300G could have been considered something else, but in late 2020, with faster 4/8 CPUs at the $100-130 mark, the 3200G has to be replaced with a 4/8 APU with faster iGPU performance, and the 4350G is just that. Unless you want to keep having 4/4 parts at $100 in 2021, there is no way to argue this.

The 4300G has SMT, whereas the 3200G doesn't. It's also Zen 2 vs Zen+. That is a generational upgrade. Minimal upgrade to the iGPU vs the 3200G? Well, I haven't seen any trustworthy reviews, but I'll concede that.

Yeah, the market always tends to fix that. Remember the $500+ FX-9370? How long did that last, a week? As long as there are other options, that is a non-issue; the problem with APUs is that there are no other options.

That "new product" rule you describe isn't exactly true either. Sticking with AMD, they raised prices considerably with K7, K8, and dual-core K8. They also raised the price a bit with the FX-8150 vs Thuban. That didn't go over well. And more recently they raised prices by a wide margin with Zen vs FX. This is AMD's best laptop chip ever and has gained them considerable market share. They are going to charge a premium for it.

I think no one is arguing that the 4750G is better than the 3400G in all aspects. Since there was no Ryzen 7 on Picasso, there is really nothing to compare it with. That doesn't mean it makes sense at the $309 price point, outside some niche use cases.

The cache has to affect more than gaming performance; they don't put cache in there just for games. Benchmarks are a bad way to test this. Calling the Zen 2 cache "gaming cache" was a bad marketing move.

Maybe 2-3 years ago the 4300G could have been considered something else, but in late 2020, with faster 4/8 CPUs at the $100-130 mark, the 3200G has to be replaced with a 4/8 APU with faster iGPU performance, and the 4350G is just that. Unless you want to keep having 4/4 parts at $100 in 2021, there is no way to argue this.

Yeah, the market always tends to fix that. Remember the $500+ FX-9370? How long did that last, a week? As long as there are other options, that is a non-issue; the problem with APUs is that there are no other options.

Maybe it would be better not to launch Renoir on desktop at all and use the entire supply for mobile. If they are doing it, it's because they are producing more than they can sell in mobile; they are not doing it to lose money, you can be sure of that. And to be fair, since desktop Renoir is OEM-only, this is, at least for now, true.

And BTW, they raised prices, but finding instances where the new product was slower in some way than the older one at the same price is far more difficult. The only instance I remember is the first FX generation, which was in some cases slower than Thuban. And AMD paid dearly for that.

Outside of gaming and things like 7-Zip, I haven't seen much difference. I was against the "GameCache" marketing from the beginning. However, Zen's L3 is still a victim cache, so it doesn't benefit some tasks.

This is AMD's best laptop chip ever and has gained them considerable market share. They are going to charge a premium for it.

When will Zen 2 APUs be released to retail? I'm building an APU-based rig right away, and right now I'm forced to buy a B450 motherboard to go with a Ryzen 3 3200G, with plans to upgrade to a Ryzen 5 4600G in the future.

I'm not sure what you're seeing, but the graphs tell me that, for a ~40% increase in RAM bandwidth, most of the games show around a 20%+ increase in fps. The review also leaves the iGPU at stock speeds. With overclocking, the performance increases would have been even more pronounced.
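
As a rough sanity check on the "~40% more bandwidth" figure: theoretical peak DDR4 bandwidth is just the transfer rate times 8 bytes per 64-bit channel times the number of channels. The sketch below assumes a dual-channel setup and, purely as an illustration, pairs DDR4-3200 with the DDR4-4533 kit from the 1Usmus results; the exact kits in the review being discussed aren't stated here, and `peak_bandwidth_gbs` / `relative_gain` are hypothetical helper names.

```python
# Rough peak-bandwidth arithmetic for DDR4 (theoretical; ignores real-world efficiency).
# Assumption: dual-channel DDR4 with a 64-bit (8-byte) bus per channel.

def peak_bandwidth_gbs(mt_per_s: float, channels: int = 2, bytes_per_transfer: int = 8) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


def relative_gain(new: float, old: float) -> float:
    """Fractional increase of `new` over `old` (0.25 means +25%)."""
    return new / old - 1.0


if __name__ == "__main__":
    base = peak_bandwidth_gbs(3200)  # DDR4-3200
    fast = peak_bandwidth_gbs(4533)  # DDR4-4533 (illustrative pairing, not from the review)
    gain = relative_gain(fast, base)
    print(f"{base:.1f} GB/s -> {fast:.1f} GB/s (+{gain:.1%})")
    # prints: 51.2 GB/s -> 72.5 GB/s (+41.7%)
```

This only covers the theoretical peak; actual iGPU scaling also depends on latency, FCLK and GPU clocks, which is one reason fps doesn't rise 1:1 with bandwidth.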

I'm going to repeat myself here... anything over DDR4-3200 becomes very expensive and harder to get. A DDR4-4000 kit could be as much as 2x the price of a 3200 kit. Do you really think it's worth spending that much? I don't even want to know how much a 4533/4600 kit costs... Do you think that's worth it to gain 10 fps on average?

This is good news: you can tune Renoir much better than other Ryzens.

This 1Usmus review of the Renoir 4650G on Guru3D is quite interesting, particularly the dGPU gaming results.

Essentially, a highly tuned Renoir (DDR4-4533 CL16, 1:1) can almost match a highly tuned 3600X (DDR4-3800 CL15, 1:1) and often beats it significantly in 1% lows and minimums (see Witcher 3, which also loves CPU bandwidth, but also Battlefield).

Average FPS:

And 1% lows/minimums:

Latency:

While this might be "meh" as far as Renoir itself goes (nobody is going to use such memory kits on it), IMO this bodes very well for Vermeer (Ryzen 4xxx).

Due to L3 unification, Vermeer essentially doubles the L3 size available to games again, and if it can run similar FCLKs of 2166+ MHz (DDR4-4333) and latencies of ~55 ns, gaming performance should make a significant jump.
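
For reference, running "1:1" on Zen 2 means FCLK matches the actual memory clock, which is half the DDR4 transfer rating (DDR4-4333 is a ~2166 MHz clock). A minimal sketch of that arithmetic for the configurations mentioned in this post; `memclk_mhz` and `fclk_mhz_coupled` are hypothetical helper names, and the sketch assumes Vermeer keeps the same coupled-mode relationship.

```python
# FCLK needed to run memory 1:1 (coupled) on Zen 2-style parts.
# DDR = double data rate: a "DDR4-3800" kit does 3800 MT/s on a 1900 MHz clock.

def memclk_mhz(ddr_rating: float) -> float:
    """Actual memory clock in MHz for a DDR4-<rating> kit."""
    return ddr_rating / 2


def fclk_mhz_coupled(ddr_rating: float) -> float:
    """In 1:1 mode FCLK = UCLK = MEMCLK, so FCLK is half the DDR rating."""
    return memclk_mhz(ddr_rating)


configs = {
    "3600X, DDR4-3800 CL15": 3800,
    "Renoir 4650G, DDR4-4533 CL16": 4533,
    "Hoped-for Vermeer target, DDR4-4333": 4333,
}

for label, rating in configs.items():
    print(f"{label}: FCLK {fclk_mhz_coupled(rating):.1f} MHz")
# prints 1900.0, 2266.5 and 2166.5 MHz respectively
```

The 4533 case shows why the tuned Renoir result is notable: a 2266 MHz FCLK is well above what the 3600X was run at in that comparison.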

One has to bear in mind, though, that the chiplet design will add a bit more latency (hopefully ≤5 ns) and possibly limit FCLK as well. Hopefully not too much.

If you're spending over $100 extra on high-speed RAM when you have the OPTION of getting a PCIe video card, you're doing it wrong. Full stop.

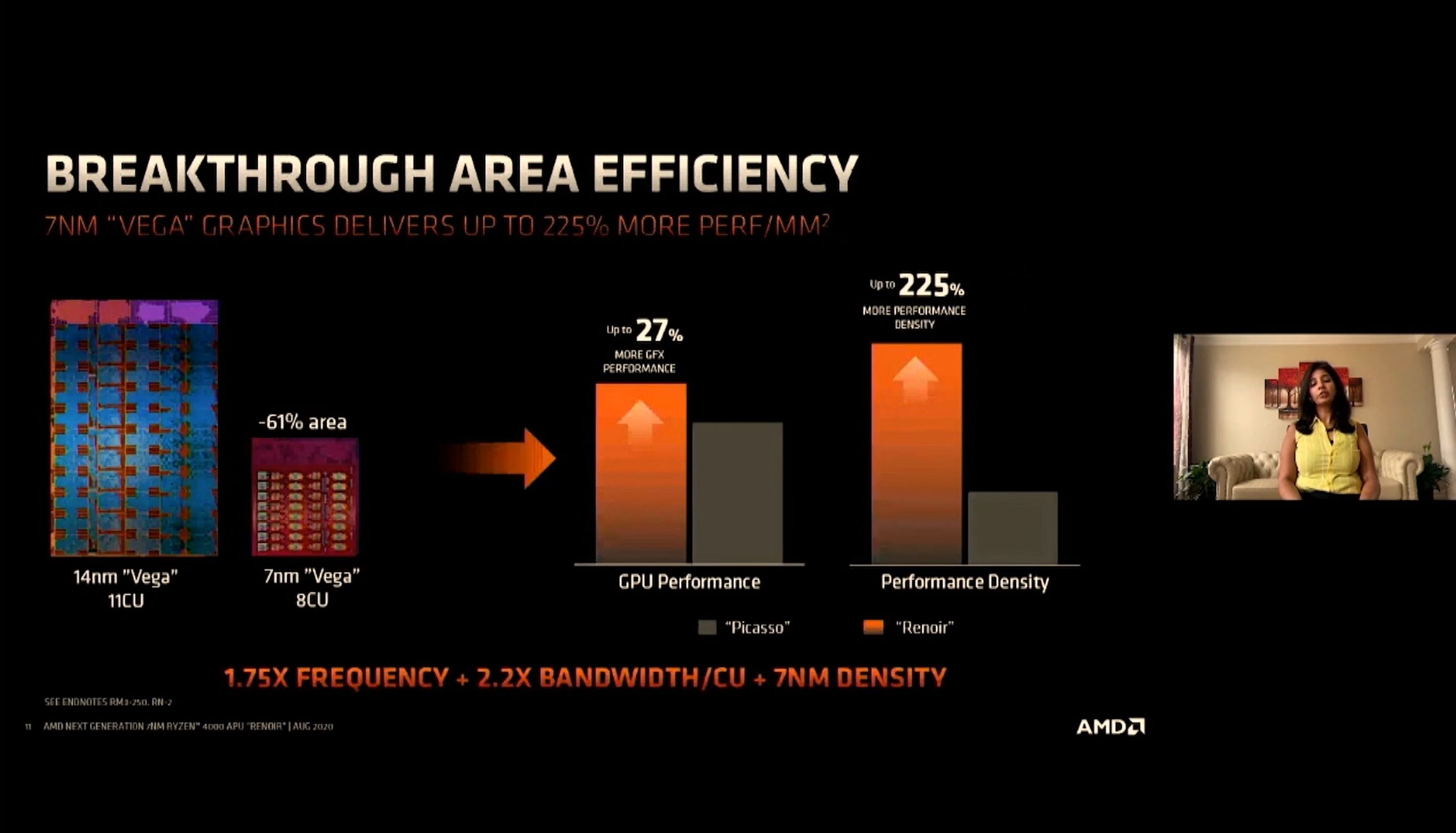

03:29PM EDT - Q: 8 cores in this size, trade-offs? A: We wanted to provide the 8-core perf, and as we analyzed with 7nm, if we took care we could enable 8 cores. Lots of content creation takes advantage of 8 cores. These come in handy with high-perf applications. On the GPU, we were also careful with the perf/watt and perf/mm² we can drive. We figured out in 7nm we could get higher frequency, so we balanced resources, and that's why we went for 8 CUs. Vega with mem bandwidth gives better UX in 15W.

When will Zen 2 APUs be released to retail? I'm building an APU-based rig right away, and right now I'm forced to buy a B450 motherboard to go with a Ryzen 3 3200G, with plans to upgrade to a Ryzen 5 4600G in the future.

There is probably also another reason: Vega was already a proven architecture for mobile use (they had already managed to cut power substantially in Picasso), while RDNA1 still needed more optimization when Renoir/Cezanne were on the drawing board. It makes a lot of sense to cut costs when a given level of GPU performance would have been held back anyway by the limited bandwidth of DDR4 systems. Meanwhile, this has given AMD time to optimize the RDNA2 architecture further so it can be used in future APU iterations.

I am a firm believer that we will never see a 4000G DIY release. Instead AMD will release 5000G APUs with Zen 3.