BallaTheFeared

It's going to take a while before other sites pick this up, they've been developing it for over a year.

Plus more, see: http://www.anandtech.com/show/6446/nvidia-launches-tesla-k20-k20x-gk110-arrives-at-last/3

Bandwidth to those register files has in turn been doubled, allowing GK110 to read from those register files faster than ever before. As for the L2 cache, it has received a very similar treatment. GK110 uses an L2 cache up to 1.5MB, twice as big as GF110; and that L2 cache bandwidth has also been doubled.

PCGH includes these settings in their benchmarks to account for REAL-WORLD gameplay where the card heats itself up first and then the ambient after a few minutes.

For our benchmarks, GPU Boost 2.0 means an important limitation. We usually test on an open test bench, where the graphics card gets enough cool air at room temperature: ideal conditions, if you will. But inside enclosures, significantly different conditions often prevail; especially in summer, even well-ventilated enclosures reach significantly higher values than our roughly 22 °C.

Since temperature plays a key role for the GTX Titan, we put a lot of effort into testing: we recorded the clock speeds achieved with 28 °C inlet air for each benchmark game separately at every resolution, and enforced them constantly for the benchmark runs using Nvidia Inspector. There is one more point to add:

Current benchmark sequences are 30- to 60-second gameplay snippets, usually preceded by a loading operation. GPU Boost 2.0 can "gain momentum" there, as it were: the GPU cools down during the idle loading phase and then runs part of the test at higher clock speeds. That does not match what the player experiences in everyday use, because longer play sessions occur in which the temperature keeps rising and the clock sinks accordingly. Such a "standard test" would therefore hardly do justice to our claim of delivering meaningful game benchmarks.

In summary, we ran the GTX Titan through our course in about four settings to cover every meaningful scenario:

• Standard method, with the clock artificially limited to the boost rate of 876 MHz "guaranteed" by Nvidia. We have handled it this way since the GTX 670. These values also form the basis of our tests ("@ 876 MHz")

• Free boost development on our open test bench with enough cooler air ("dyn. Boost")

• Clock set individually per game to the minimum rate the warmed-up board reached with 28 °C inlet air. This corresponds to operation inside a case at summer temperatures ("28 °C")

How long is an average benchmark run for most review sites? 1 minute long? When loading up a benchmark, the card is usually cooled off a good deal already. At the very beginning, the card is likely to be running about 80MHz higher than a few minutes afterwards.

In our game testing we noticed three different primary levels of clock speed our video card liked to hover between. We tested this in a demanding game, Far Cry 3. At first, our video card started out at 1019MHz actual clock speed in-game at 1.162v. As the game went on, and the GPU heated up, the clock speed dropped to 967MHz and 1.125v. After 20 minutes of gaming, the GPU clock speed settled at 941MHz and 1.087v. Therefore, our real-world starting point for clock speed was actually 941MHz at 81c max.
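To put the HardOCP clock figures quoted above in proportion, here is a small Python sketch of the drop between the cold start and the settled state. The variable names are made up for illustration, and a lower clock does not translate one-to-one into lost fps, so treat this as a rough guide only.

```python
# Clock speeds HardOCP reported for Far Cry 3 (MHz): cold start, warming up, settled.
clocks_mhz = [1019, 967, 941]

start = clocks_mhz[0]
for clock in clocks_mhz[1:]:
    drop_mhz = start - clock
    drop_pct = 100.0 * drop_mhz / start
    print(f"{start} -> {clock} MHz: {drop_mhz} MHz lower ({drop_pct:.1f}%)")

# Drop from the cold-start clock to the clock after 20 minutes of gaming.
settled_drop_mhz = start - clocks_mhz[-1]
settled_drop_pct = 100.0 * settled_drop_mhz / start
```

The settled clock ends up 78 MHz below the starting clock, which lines up with the "about 80MHz" estimate.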

This explains Computerbase.de's relatively low scores for Titan (default, not "MAX") compared to most other review sites.

Moreover, before each test run we "warmed up" the graphics card for a few minutes so that the temperature rises to a realistic level and the GPU Boost clock speeds adjust to it. We do this because at lower temperatures the GTX Titan clocks higher and thus delivers better FPS values, which can no longer be reproduced after a few minutes.

We had to take the time to observe in detail the behavior of the GTX Titan in each game and at each resolution to ensure our performance measurements were taken in representative conditions.

Here are 2 examples, with Anno 2070 and Battlefield 3: a quick test, a test with the temperature stabilized after 5 minutes, and the same stabilized test but with two 120mm fans positioned around the card:

Anno 2070: 75 fps -> 63 fps -> 68 fps

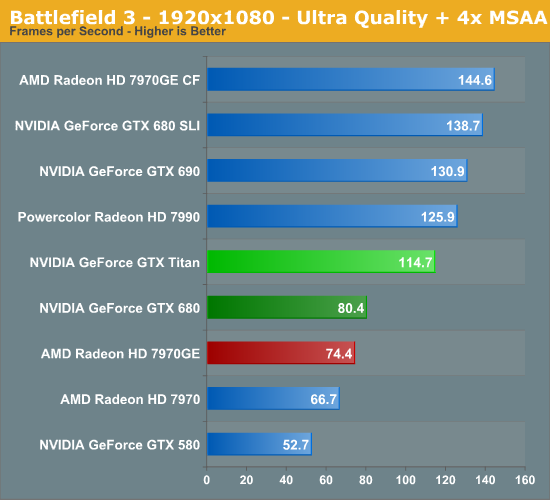

Battlefield 3: 115 fps -> 107 fps -> 114 fps
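The Hardware.fr numbers above can be turned into percentages relative to the quick (cold) run. This is only a sketch: the dictionary layout and function name are assumptions for illustration, and the three values per game are taken in the order the quote lists them (quick test, stabilized after 5 minutes, stabilized with two 120mm fans).

```python
# fps per game: (quick test, stabilized after 5 minutes, stabilized with two 120mm fans)
results_fps = {
    "Anno 2070": (75, 63, 68),
    "Battlefield 3": (115, 107, 114),
}

def pct_change(baseline, value):
    """Percentage change of value relative to baseline."""
    return 100.0 * (value - baseline) / baseline

changes = {}
for game, (quick, warm, warm_with_fans) in results_fps.items():
    changes[game] = (
        round(pct_change(quick, warm), 1),
        round(pct_change(quick, warm_with_fans), 1),
    )
    print(f"{game}: warm {changes[game][0]}%, warm with fans {changes[game][1]}%")
```

In these two examples the warmed-up card loses roughly 7% to 16% against the quick run, and the extra fans recover most of it in Battlefield 3 but only part of it in Anno 2070.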

When the GeForce GTX Titan is kept very cold (the "1006 MHz Sample" line), much colder than normal, it shows a lead of 40% to nearly 50% over the GeForce GTX 680, with the largest gains at extreme resolutions.

...

Roughly, if you have a well cooled case, the result is likely to be between the two examples tested. Without efficient cooling, performance will instead be close to the least efficient example, while watercooling should allow the GeForce GTX Titan to remain almost permanently at its maximum frequency.

Them and their boost, and now boost 2, along with TDP limits, and then the card is hard limited to 265w: none of this is good for overclocking, and it even skews the results. Very interesting find!

If Titanic's real scores are all artificially boosted by 5-10%, it looks worse with each detail uncovered *for the price*.

It will be fun to see the NV PR/shills try to spin this.

You're probably doing all the spinning this thread needs. Thanks.

So the Titan in real world scenarios is only roughly 20-25% faster than the 7970ghz edition if I'm reading that post correctly.

Didn't we run into this same problem with the gtx680 reviews where a hot card would perform worse in benchmarks? I thought other sites reported this back then.

A dozen or so posts from now, it'll be down to 15-20 and 10-15 isn't too much further. You can do it!! Watch.

High-Larious.

Well, judging from this chart (using the same data as the above bar chart) below:

We continue to see tiny gaps between frames from our single-GPU cards, though the GeForce GTX 690 consecutive frame time difference more than triples, on average. However, the latencies are still so small, and the frame rates so high, that we would still consider this a good result.

I think you guys are missing the point of the titan. And no, with boost 2 it's very overclock friendly: it doesn't limit the power to the card, it in fact unlocks it at the user's discretion and at the user's own risk.

The point of titan:

Titan is a single gpu, when clocked properly it is effectively giving the power of two gtx 670's (slightly lower clock speed however) because it has exactly double the cuda cores, on a single gpu.

Tri-SLI is the most you can use and get your $ worth for performance increase.

Yes the titan is expensive, but this is because it is the ONLY sli option that will give you the full complement of SIX 670's worth of performance. With a 690 you're gonna put in two of those, and you'll get a 75% scale approximately. With a titan, you put in 2 and you get 100% scale, you put in three and you still get that 100% scale.

You can overclock it but it's my understanding that you have a hard limit of 265w. It throttles once you hit that limit. Someone may release a hacked bios for the card eventually.

***PROPOSAL*** for further investigation into real-world performance of Titan, WITHOUT allowing GPU Boost 2.0 to interfere with preliminary benchmarking when the card is cool for the first 1-2 minutes:

(Google translated: http://translate.google.com/transla...Tests/Test-Geforce-GTX-Titan-1056659/&act=url )

PCGH includes these settings in their benchmarks to account for REAL-WORLD gameplay where the card heats itself up first and then the ambient after a few minutes.

Also, from HardOCP:

How long is an average benchmark run for most review sites? 1 minute long? When loading up a benchmark, the card is usually cooled off a good deal already. At the very beginning, the card is likely to be running about 80MHz higher than a few minutes afterwards.

The question is, how much does that really affect the benchmark scoring?

PCGH's benches show a 5-10% difference in average fps between "dynamic boost" (open-air rig) and "28 degrees Celsius" at 2560x1600 (except for Skyrim, which shows a 19% difference). Sometimes, the 28C test is slower than the 876MHz result, and sometimes it is faster.

Computerbase.de is saying the same thing (translated):

This explains Computerbase.de's relatively low scores for Titan (default, not "MAX") compared to most other review sites.

The same goes for Hardware.fr (translated page explaining such) and its "relatively" low scores for Titan.

As to the PCGH tests, the differences can be astounding, upwards of 10% (averaging 5-10% for these few games). The benchmark videos can be viewed at PCGH to judge how long each run actually is, keeping in mind how much GPU Boost 2.0 could potentially affect the entire result, with a large "boost impact" coming from the first 30-60 seconds especially.

Let's request American reviewers to also look into this, and to try to account for it. ~342-343 Voodoopower (after excluding PCGH, CB.de, HW.fr, TPU's CPU-bottlenecked benches at 25x16, etc..) then reduced by 5-10% would be a drastically reduced:

~311-327 Voodoopower
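For anyone checking the arithmetic: the ~311-327 range appears to come from dividing out a 5-10% boost gain rather than subtracting 5-10% from the score. That reading is an assumption, not something stated in the post, and the function name below is made up for illustration. A quick Python sketch comparing both readings:

```python
def strip_boost_gain(score, gain_pct):
    """Divide out an assumed boost-related gain (in percent) from a score."""
    return score / (1.0 + gain_pct / 100.0)

# Reading 1 (assumed): the cold-card score is 5-10% above the warm-card score.
low = strip_boost_gain(342, 10)
high = strip_boost_gain(343, 5)
print(f"divide out the gain: ~{round(low)}-{round(high)}")

# Reading 2: simply take 5-10% off the score.
alt_low = 342 * (1 - 0.10)
alt_high = 343 * (1 - 0.05)
print(f"subtract the percentage: ~{round(alt_low)}-{round(alt_high)}")
```

Either way the adjusted figure lands in the same neighborhood; the two readings differ by only a few points.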

What do y'all think about this? It's something serious reviewers need to beware of, in the future. Would AnandTech look into this?

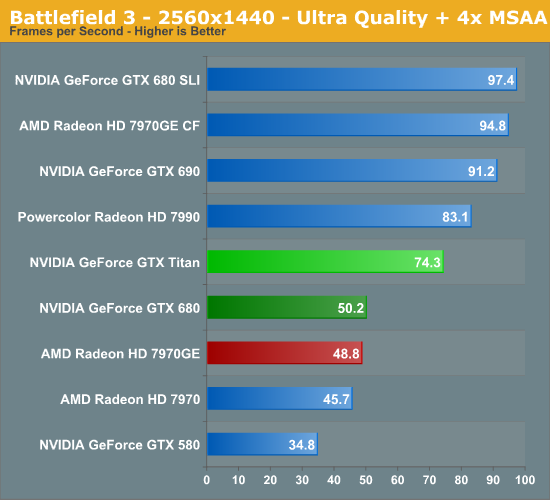

Wow.

AMD and NVIDIA have gone back and forth in this game over the past year, and as of late NVIDIA has held a very slight edge with the GTX 680. That means Titan has ample opportunity to push well past the 7970GE, besting AMD’s single-GPU contender by 52% at 2560. Even the GTX 680 is left well behind, with Titan clearing it by 48%.

That actually makes a lot of sense because the average performance gain of Titan over the 680 and 7970 varies widely between review sites. Some of that is normal because the games and settings tested are different but it seems like the swing with Titan is greater than average.

So the best case results we've seen in reviews are probably representative of someone living in Alaska with all the windows open or someone with liquid cooling. Otherwise performance drops off after 5-10 minutes of gaming.

Yeah, 2x 660 Ti specs, pretty much.

I think you guys are missing the point of the titan. And no, with boost 2 it's very overclock friendly: it doesn't limit the power to the card, it in fact unlocks it at the user's discretion and at the user's own risk.

The point of titan:

Titan is a single gpu, when clocked properly it is effectively giving the power of two gtx 670's (slightly lower clock speed however) because it has exactly double the cuda cores, on a single gpu.

I think you guys are missing the point of the titan. And no, with boost 2 it's very overclock friendly: it doesn't limit the power to the card, it in fact unlocks it at the user's discretion and at the user's own risk.

First and foremost, Titan still has a hard TDP limit, just like GTX 680 cards. Titan cannot and will not cross this limit, as it's built into the firmware of the card and essentially enforced by NVIDIA through their agreements with their partners. This TDP limit is 106% of Titan's base TDP of 250W, or 265W. No matter what you throw at Titan or how you cool it, it will not let itself pull more than 265W sustained.
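The 265W figure is just the quoted 106% cap applied to the 250W base TDP. A minimal Python check, with constant and function names chosen here purely for illustration:

```python
BASE_TDP_W = 250            # Titan's base TDP, per the quote above
MAX_POWER_TARGET_PCT = 106  # hard cap as a percentage of base TDP, per the quote above

def power_limit_watts(base_tdp_w, target_pct):
    """Sustained power limit implied by a percentage power target."""
    return base_tdp_w * target_pct / 100

limit_w = power_limit_watts(BASE_TDP_W, MAX_POWER_TARGET_PCT)
headroom_w = limit_w - BASE_TDP_W
print(f"limit: {limit_w:.0f} W, headroom over base TDP: {headroom_w:.0f} W")
```

So the cap leaves only 15W of sustained headroom over stock, whatever the cooling.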

And then there are posters that tell us they run two and three cards stacked-multi-gpu, and run higher o/c's than reported from tech sites, supposedly for 24/7 gaming. You know those people are dreaming.

Uhhh. Not sure who that is in reference to...

Here at Anands, Ryan noted that Nvidia is again leading in the AAA game of the year.

Battlefield 3

Wow.

I say leave it alone, the fan is what is causing the reduced clocks, it's set at stupid low noise levels.

Unless we're taking into account fan noise on reference cards vs performance, I don't think in the overall scheme of things it matters.