Starting with HD 5000 makes no sense. It's heavily bandwidth limited and heavily TDP limited. If you scaled EUs further while keeping the same bandwidth your improvement would be close to nothing. I don't think you'd need 220 EUs to get 7790 performance, I think you'd need infinite EUs. But that doesn't mean that Gen can't scale if you also scale the bandwidth reasonably.

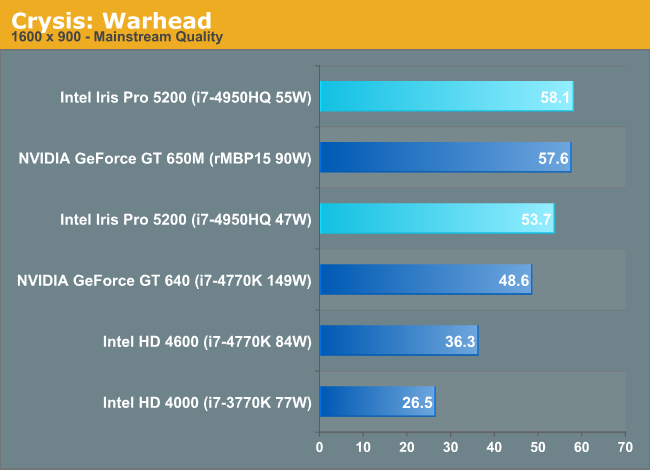

HD 5000 scores 1080 in 3DMark 2011. Iris Pro 5200 scores 2364 to 2505 (at 47W and 55W respectively). They're the same GPU; the only difference is that one has a big cache bolted onto it. There's a much larger than expected die area difference that isn't really explained by said cache, and without proper die photos we won't really be able to get a good idea of what's going on there. The GPU in these cases is going to use maybe 30-40W of that TDP.

So why start with HD5000 instead of 5200 unless you want to make it look impossible for Intel? HD7790 is around 5968 in 3DMark 2011. TDP is 85W. Therefore, doubling Iris Pro 5200's GPU resources (while giving it more bandwidth) and keeping the clocks a little higher, which is what I and others have been saying, will give you similar performance. Based on what we know so far I don't see a good reason why Intel couldn't do this with a dual-core Haswell CPU at somewhere very vaguely around 350mm^2 (give or take several dozen mm^2), if they really wanted to.
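To put rough numbers on that, here is a minimal back-of-the-envelope sketch using only the 3DMark 2011 scores quoted above. It assumes the score scales linearly with both GPU resources and clock speed, and that the added bandwidth removes the memory bottleneck. Both are simplifying assumptions, not measured behaviour, and the function name `required_clock_factor` is made up for illustration.

```python
# 3DMark 2011 scores as quoted in the post above.
IRIS_PRO_5200 = {"47W": 2364, "55W": 2505}
HD_7790 = 5968


def required_clock_factor(base_score, target_score, resource_factor=2.0):
    """Clock multiplier needed to reach target_score.

    ASSUMPTION: score scales linearly with GPU resources and with clock,
    and bandwidth is scaled enough that it is not the limit.
    """
    return target_score / (base_score * resource_factor)


for label, score in IRIS_PRO_5200.items():
    gap = HD_7790 / score  # how far behind the HD 7790 the part is today
    clock = required_clock_factor(score, HD_7790)  # after doubling resources
    print(f"{label}: gap {gap:.2f}x, clock factor after doubling {clock:.2f}x")
```

Under those assumptions the gap to the HD 7790 is about 2.4x to 2.5x, so a doubled Iris Pro 5200 would need clocks roughly 1.19x to 1.26x higher to land at a similar score. Real scaling is rarely perfectly linear, so treat this as a ballpark only.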

Not saying this would beat AMD at all, just that they could come at least sort of in the same ballpark.

By all means, Exophase, come on. 80 EUs is an unrealistic stretch too. Consoles play real games and need consistent performance while keeping programming costs down. We are in the real world, where there is no 3DMark. You need to go to 100-120 EUs to get just reasonable power numbers and programming cost, and then you are already at what is borderline technically possible, or at least technically reasonable, imho. It just doesn't make technical sense in my world. And it is 100% economically impossible looking at the alternatives from NV or AMD; this is not a perhaps (or an ROI bracket, for that matter).

Then there is the question of the GDDR5 controller, if that is chosen. It has taken AMD and NV years to get an efficient controller (just look at the gains from Kepler when NV fixed the memory issue), so I think it's fair to assume that if Intel were to choose, and were able, to build a GDDR5 controller, it wouldn't have the necessary efficiency, leaving the eDRAM as the only viable option. And doubling the Iris Pro RAM here is also a stretch, leaving 3 or 4 times the size as the possible solution (edit: saw silverforce beat me to it). GDDR5 is expensive, but that is beside the point if you can't produce this much eDRAM with good enough yield to call it technically possible.

At the same time, two Haswell cores just don't cut it. They don't; it's not a perhaps. The simple reason is that the new consoles are built as heavy multitasking entertainment machines. That is a requirement, leaving 4 cores as the only option, because you need human beings to program for the consoles and get paid doing it.

Add the RAM and EU size, in a consumer product intended for millions and millions of installations, and I don't think we in this forum would normally call that a technically viable solution. It looks more like a GF ppt in my world. I think there must be a limit to what we call technically possible, rather than a definition that includes any theoretical, far-out possibility.