nicalandia

posted on the wrong thread

> Windows is "notorious" for being hard to achieve good battery life here. It's because (...) it's a real time OS.

Windows is not a real time OS.

0.95 V @ 3.3 GHz: with 1 E-core running Linpack, package power is 9 W.

1.25 V @ 4 GHz: with 1 E-core running Linpack, package power is 20 W.
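As a rough sanity check on those two data points, the first-order CMOS dynamic power model (power roughly proportional to f·V²) can be applied to them. This is only a sketch under a loud assumption: it treats the whole package power as dynamic core power and ignores leakage and uncore. The function name is made up for illustration.

```python
def scale_dynamic_power(p_ref_w, f_ref_ghz, v_ref, f_new_ghz, v_new):
    """Scale a reference power using the first-order model P ~ f * V^2.

    Assumption: all of the measured package power behaves like dynamic
    power (no leakage, no fixed uncore share).
    """
    return p_ref_w * (f_new_ghz / f_ref_ghz) * (v_new / v_ref) ** 2


# Reference point from the post: 20 W at 4 GHz and 1.25 V.
predicted_w = scale_dynamic_power(20.0, 4.0, 1.25, 3.3, 0.95)
print(f"predicted at 3.3 GHz / 0.95 V: {predicted_w:.2f} W (measured: 9 W)")
```

The model lands at about 9.5 W, close to the measured 9 W, so the drop is roughly what the voltage and frequency change alone would predict.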

> But we must consider Gracemont might be way out of its ideal frequency range. At 3.3 GHz and a 25% reduction in voltage, assuming nothing else affects it, we end up with 2.7 W, while still being 60% faster in this particular benchmark.

> The packages in the CNET photos were almost certainly the MTL-M (U9) 2+8+2 configuration, but MTL-P (P28/H45) will most likely use a 6+8 CPU tile.
>
> During the Intel Accelerated event, Intel showed off a test wafer of Meteor Lake compute tiles that measure 4.8 mm x 7.9 mm. The Meteor Lake test chips that CNET photographed during their Fab 42 tour contain a top tile that also measures 4.8 mm x 7.9 mm, which strikes me as being somewhat beyond coincidental. Not locating the SoC tile in between the CPU and GPU tiles seems like a bold strategy, as it would make interconnect routing a nightmare. So I think @wild_cracks and @Locuza_ might need to reassess.

I highly doubt Meteor Lake will have only 2C8c and 6C8c CPU tiles when this kind of configuration is already present in Alder Lake. Let's not forget Raptor Lake will be released before Meteor Lake, too. I think it's highly likely that they will increase either P-cores or E-cores, or both.

Will feature an updated compute tile with 8/32 config for the high end enthusiast products

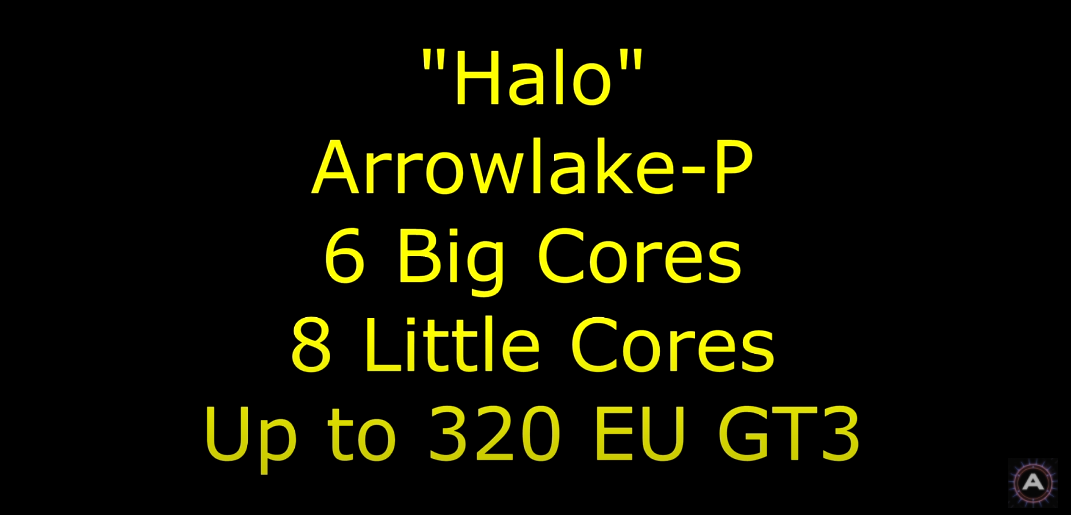

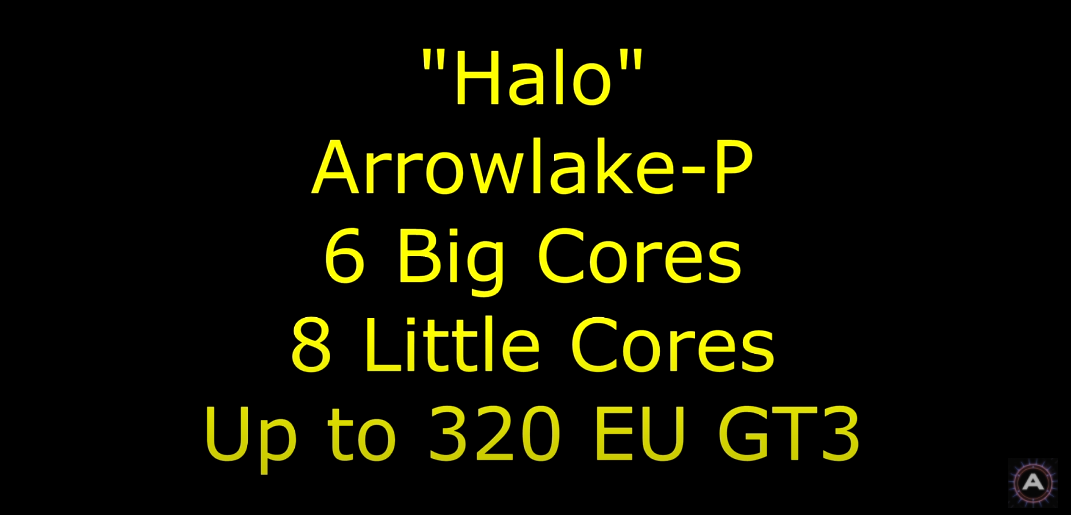

It is said that the mobile version of the Arrow Lake would feature 6 big cores and 8 little cores.

It is out of its ideal frequency range: the V/f plot we have for Gracemont shows good scaling up to 3 GHz, after which it moves to another slope to scale up to 4 GHz.

However, keep in mind the same applies to Golden Cove: based on its V/f curve, this core works efficiently up to ~3.6 GHz.

- From 2 GHz to 3 GHz, the voltage delta is ~130 mV, or 17%.

- From 3 GHz to 4 GHz, the voltage delta is ~350 mV, or 37%.

Here's the 12700K running 3.6 GHz P-core / 3 GHz E-core / 3 GHz bus; it scores just under 18K in CB23 while staying under 75 W. The CPU is not undervolted, but the motherboard's AC/DC Loadline parameters are set to favorable values (on Auto my board overvolts the CPU like crazy). RAM is overclocked, but I can't be bothered to undo that as well, not for CB testing anyway.

- From 2.6 GHz to 3.6 GHz, the voltage delta is ~100 mV, or 12%.

- From 3.6 GHz to 4.6 GHz, the voltage delta is ~250 mV, or 27%.
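To put the voltage deltas above into power terms, here is a small sketch that again assumes the first-order model P ~ f·V², which ignores leakage and anything outside the cores. The helper name is hypothetical, and the percentages are the approximate ones quoted in the lists. Judging by the knee points named in the text, the 2 to 4 GHz steps appear to be Gracemont's and the 2.6 to 4.6 GHz steps Golden Cove's.

```python
def step_power_ratio(f_low_ghz, f_high_ghz, voltage_increase):
    """Relative power after a frequency step, assuming P ~ f * V^2.

    voltage_increase is the fractional voltage delta, e.g. 0.17 for +17%.
    """
    return (f_high_ghz / f_low_ghz) * (1.0 + voltage_increase) ** 2


# (from GHz, to GHz, fractional voltage delta) as quoted in the lists.
steps = [
    (2.0, 3.0, 0.17),
    (3.0, 4.0, 0.37),
    (2.6, 3.6, 0.12),
    (3.6, 4.6, 0.27),
]

for f_low, f_high, dv in steps:
    power = step_power_ratio(f_low, f_high, dv)
    clock = f_high / f_low
    print(f"{f_low} -> {f_high} GHz: clock x{clock:.2f}, power x{power:.2f}")
```

Under this model the 2 to 3 GHz step costs about 2.05x the power for 1.5x the clock, while the 3 to 4 GHz step costs about 2.5x the power for only 1.33x the clock, which is the efficiency cliff the posts describe.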

> I'm not expecting a core count increase for Meteor Lake. If the Reddit leak is accurate, even Arrow Lake stays at 8 P-cores, although with 32 E-cores.

I'm curious what people would think of 4+16 for the P die.

It clearly refers to a desktop tile; mobile Arrow Lake may not get this.

Intel Arrow Lake-P GPU rumored to feature 320 Execution Units - VideoCardz.com

Maybe 6+8 refers to ARL-P 28W and not the higher end H series. I think 6+16 is the next logical step for ARL-H.

> I'm curious what people would think of 4+16 for the P die.

If the idea is still to use that die for gaming laptops, then I don't think it's a good idea. Games are starting to scale past 4c w/HT now; that's essentially the absolute minimum spec needed right now, and even then some games see significant performance loss with 4c8t vs 6c12t.

> If the idea is still to use that die for gaming laptops, then I don't think it's a good idea. Games are starting to scale past 4c w/HT now; that's essentially the absolute minimum spec needed right now, and even then some games see significant performance loss with 4c8t vs 6c12t.
>
> For thin and light laptops, it's absolutely fine. Or rather, probably better than 6+8 would be. Just not for gaming laptops.

In a gaming context, I'm curious how many threads have to be "as fast as possible". Right now, GRT is written off for performance sensitive threads because of the gap with GLC of what? Around 2/3 GLC's performance? How does the tradeoff change as that gap shrinks? Would make a good study for anyone with ADL and a lot of time on their hands.

| CPU/GPU | Configuration | Time (min.sec) | Seconds | Time per photo (s) | Score | Rank |
|---|---|---|---|---|---|---|
| 12700K | 8+4 | 2.01 | 121 | 30.25 | 8.26 | 100% |
| 12700K | 8+0 | 2.08 | 128 | 32 | 7.81 | 95% |
| 12700K | 7+0 | 2.21 | 141 | 35.25 | 7.09 | 86% |
| 12700K | 6+0 | 2.42 | 162 | 40.5 | 6.17 | 75% |
| 12700K | 5+0 | 3.02 | 182 | 45.5 | 5.49 | 66% |
| 12700K | 4+0 | 3.36 | 216 | 54 | 4.63 | 56% |
| 12700K | 770 iGPU o/c 1800 | 3.44 | 224 | 56 | 4.46 | 54% |
| 12700K | 3+0 | 4.38 | 278 | 69.5 | 3.60 | 44% |
| 12700K | 770 iGPU (stock auto) | 5.10 | 310 | 77.5 | 3.23 | 39% |
| 12700K | 2+0 | 6.41 | 401 | 100.25 | 2.49 | 30% |
| Surface Laptop 2 | 620 iGPU | 7.46 | 466 | 116.5 | 2.15 | 26% |
| Surface Laptop 2 | 8250U | 10.35 | 635 | 158.75 | 1.57 | 19% |
| 12700K | 0+4 | 11.00 | 660 | 165 | 1.52 | 18% |
| 12700K | 0+1 | 13.10 | 790 | 197.5 | 1.27 | 15% |
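For anyone wanting to add their own rows, the derived columns in the table can be reproduced from the min.sec time alone. The sketch below assumes relationships inferred from the numbers rather than stated by the poster: a batch of 4 photos, score = 1000 / seconds, and rank relative to the 8+4 run at 121 s. The function names are made up for illustration.

```python
def to_seconds(min_sec):
    """Convert a 'minutes.seconds' string such as '2.01' into total seconds."""
    minutes, seconds = min_sec.split(".")
    return int(minutes) * 60 + int(seconds)


def derive_row(min_sec, baseline_s=121, photos=4):
    """Rebuild the derived columns from the raw time.

    Assumptions (inferred from the table, not confirmed by the poster):
    4 photos per run, score = 1000 / seconds, rank relative to 8+4.
    """
    total = to_seconds(min_sec)
    return {
        "seconds": total,
        "per_photo": total / photos,
        "score": round(1000 / total, 2),
        "rank_pct": round(100 * baseline_s / total),
    }


for config, time_str in [("8+4", "2.01"), ("4+0", "3.36"), ("0+4", "11.00")]:
    print(config, derive_row(time_str))
```

The times are passed as strings on purpose, so that a value like 5.10 is not silently read as 5.1.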

> This is a really interesting question. Since we can shut off P cores in the BIOS it would be easy to test games 8+0, 6+0, 4+0, 4+2, 4+4.
>
> I'd do it except for the fact that I don't have a discrete GPU so I don't think there would be much use. Also I don't game so I don't have any games on my system.

You'd also need to lock frequencies for each core type and vary them to adjust the performance gap. Sadly, I don't have Alder Lake, so can't do it even if I had the time.

The E core clusters may be heavily L2 throughput bound in these tests.

> Maybe that's the reason, but I'm not sure. Having a 12900 to test scaling beyond that would be interesting. There is only 2 MB of L2 for the efficiency cores, so it's possible that if all four cores are trying to run a heavy workload the cache is getting thrashed really badly. Having the 0+3 and 0+2 results as well might be enlightening. If those had better performance, that would certainly be the case.
>
> I looked up the information on wikichip and something really stood out to me. I'm not sure if it's just a typo, but the 12700K is listed as having 1 MB of L3 cache for the efficiency cores. The 12900K lists 6 MB of L3 cache for the efficiency cores, which makes me think it's a typo, but if it weren't, that would further suggest the cache being the culprit.

That's down to how the cache is being cut down in the CPUs: the i9 has 30 MB, the i7 25 MB, and the i5 20 MB.

The really interesting part to me is how little difference it makes going from 1 efficiency core to 4 of them. What's the bottleneck that's holding them back so badly?

I'm also curious how it performs in a 1+0 configuration. We can probably extrapolate, but the efficiency core doesn't seem as though it would be that much worse.
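To put numbers on both points, here is a quick sketch using the Seconds column of the table. The 1+0 figure is purely an extrapolation that assumes perfectly linear scaling down from the 2+0 result, which is an assumption and not a measurement. The helper names are illustrative.

```python
# Run times in seconds, taken from the table.
seconds = {"2+0": 401, "4+0": 216, "8+0": 128, "0+1": 790, "0+4": 660}


def speedup(slow_cfg, fast_cfg):
    """How many times faster fast_cfg finished than slow_cfg."""
    return seconds[slow_cfg] / seconds[fast_cfg]


def parallel_efficiency(slow_cfg, fast_cfg, core_ratio):
    """Speedup divided by the increase in core count (1.0 = perfect scaling)."""
    return speedup(slow_cfg, fast_cfg) / core_ratio


e_eff = parallel_efficiency("0+1", "0+4", 4)  # 1 -> 4 E-cores
p_eff = parallel_efficiency("2+0", "4+0", 2)  # 2 -> 4 P-cores

# Hypothetical 1+0 time, ASSUMING linear scaling from the 2+0 run.
est_1p0_s = 2 * seconds["2+0"]

print(f"E-cores 1 -> 4: speedup x{speedup('0+1', '0+4'):.2f}, efficiency {e_eff:.0%}")
print(f"P-cores 2 -> 4: speedup x{speedup('2+0', '4+0'):.2f}, efficiency {p_eff:.0%}")
print(f"estimated 1+0: {est_1p0_s} s vs measured 0+1: {seconds['0+1']} s")
```

Four E-cores finish only about 1.2x faster than one, while doubling P-cores from 2 to 4 gives about 1.86x. Under the linear assumption the 1+0 estimate comes out close to the measured 0+1 time, but real scaling is rarely perfectly linear, so treat it as a rough guess only.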

> The really interesting part to me is how little difference it makes going from 1 efficiency core to 4 of them. What's the bottleneck that's holding them back so badly?
>
> I'm also curious how it performs in a 1+0 configuration. We can probably extrapolate, but the efficiency core doesn't seem as though it would be that much worse.

I'd say any L2 sensitive task

“H2 20” is a huge red flag since product qualifications are planned down to the week. Even at Intel, where qualification takes 4 or 5 quarters (longer than everywhere else), not even saying which quarter they plan on finishing qual means they expect delays.

Or you can just call me an AMD fanboy LOL.

Show examples from AMD/NV? My two cents, a half year window is acceptable when a product is in early to mid pre-silicon development. Not so much when first silicon has already arrived back.

However, like I said, a half year window is just FUD and likely CYA.

> The whole point of the post-silicon schedule is a high confidence time plan based on prior experience to achieve qualification, which enables production. Which is precisely why it is down to the work-week.

@dmens, I was thinking about what you said about how stating in January an H2 launch for the same year is a huge red flag. I'm wondering what your thoughts are on this Anandtech post in January about an H2 launch for the same year: https://www.anandtech.com/show/17152/amd-cpus-in-2022-ces

Although I don't know if they need Gracemont for that, even a low powered one. They used a downclocked Silvermont core for their last manufactured LTE modem. Perhaps the performance is needed to reduce context switching latencies when it needs to wake up the main cores again?