GeForce Titan coming end of February



- Status

- Not open for further replies.

railven

Diamond Member

- Mar 25, 2010


AMD should just come out with a real 7990 GHz for 650 GBP and it will own everything for less than this Titan costs

Including your electricity bill. I kid, I kid, haha.

But woof is Tahiti a pig! Glad mine can reach decent clocks at stock volts.


Mine's OK, but my PSU couldn't cope with two of them.

imaheadcase

Diamond Member

- May 9, 2005


It's really dumb for people to talk about price vs. performance or power consumption on a card that is $1000. If you care about those things, you are in the wrong thread.

wand3r3r

Diamond Member

- May 16, 2008


Perhaps we should look at this more like one of Intel's "Extreme" CPUs (although Intel normally offers almost the same thing for a lot less).

In terms of price/perf it's bad, but pure single-GPU performance looks good, and even performance per watt is looking quite nice. As much as cheaper dual-GPU solutions might get the same framerate in many cases, it's just not the same: single-GPU performance is more consistent, less problematic, and... harder to improve. I guess that's where the prices come from.

But yes, it's a little disappointing for the price and performance, and the whole GF110 to GK104 thing...

I would certainly choose this card over a dual-GPU solution for the same price.

Sure, why doesn't AMD just rename the 8970 as the AMD Ulyses and call it the halo card for $900. Oh wait, is it just NV that should do this?

I was really hoping for a $900 MSRP. Still curious about overclocking performance; I wonder if it'll close the gap with a 690 with the new temperature-target overclocking.

Since quantities are not super limited, I would have preferred to see NVIDIA launch Geforce Titan with an MSRP of ~ $849 USD. NVIDIA would have avoided much of the negative press and ridicule from people who point out that 7970 GHz Ed. Crossfire and GTX 680 SLI are both cheaper and faster in comparison (albeit at significantly higher power consumption and higher noise), and they would have avoided pricing Geforce Titan at the same price point as the faster GTX 690. In my opinion, adding an extra $150 USD to the MSRP is not worth all the negativity associated with a $999 USD MSRP for a single GPU product. I realize that NVIDIA is trying to offer much more double precision performance per dollar vs. the Tesla K20 variants, but the feature set/reliability/support is not the same as Tesla either, and I feel it would have been better to position Geforce Titan as a very high end gaming card at $849 USD, with full double precision performance de-emphasized and reserved for higher margin Tesla variants.


railven

Diamond Member

- Mar 25, 2010


Mine's OK, but my PSU couldn't cope with two of them.

I'm in that boat

VulgarDisplay

Diamond Member

- Apr 3, 2009


Since quantities are not super limited, I would have preferred to see NVIDIA launch Geforce Titan with an MSRP of ~ $849 USD. NVIDIA would have avoided much of the negative press and ridicule from people who point out that 7970 GHz Ed. Crossfire and GTX 680 SLI are both cheaper and faster in comparison (albeit at significantly higher power consumption and higher noise), and they would have avoided pricing Geforce Titan at the same price point as the faster GTX 690. In my opinion, adding an extra $150 USD to the MSRP is not worth all the negativity associated with a $999 USD MSRP. I realize that NVIDIA is trying to offer much more double precision performance per dollar vs. the Tesla K20 variants, but the feature set/reliability/support is not the same as Tesla either, and I feel it would have been better to position Geforce Titan as a very high end gaming card at $849 USD with full double precision performance de-emphasized and reserved for Tesla variants.

Buy two aftermarket 670s or 7950s instead. They're cheaper, you can probably get quieter ones, and they'd still be faster. Unfortunately they will still use a bit more power.

Tweak155

Lifer

- Sep 23, 2003


I'm in that boat. Was thinking about jumping on them crazy bundles, but figured a new PSU would add to the cost, so it sort of deflated my interest.

Errrr what? According to your sig you have an 850w, right? Should be plenty.

Nintendesert

Diamond Member

- Mar 28, 2010


I'm gonna SLI my 680 with used ones people are going to dump cheap in the hopes of getting a Titanic!

railven

Diamond Member

- Mar 25, 2010


Errrr what? According to your sig you have an 850w, right? Should be plenty.

2xGPU

Last post on this, since I don't want to steer the thread further off course, but this guy's Kill A Watt meter made me think twice (and yes, I acknowledge he has more hardware than me). Even at 750-775W I wouldn't feel comfortable, considering my PSU is already 4 years old!

SirPauly

Diamond Member

- Apr 28, 2009


But wait: nVidia is no competition because they are a smartphone company.

Roy Taylor said: their attempted transition into being a smartphone company.

Tweak155

Lifer

- Sep 23, 2003


Last post on this, since I don't want to steer the thread further off course, but this guy's Kill A Watt meter made me think twice (and yes, I acknowledge he has more hardware than me). Even at 750-775W I wouldn't feel comfortable, considering my PSU is already 4 years old!

It has gotta die some time

I really doubt you'll see any issues, but yeah, at 4 years old there's no way to know for sure.

railven

Diamond Member

- Mar 25, 2010


It has gotta die some time

I really doubt you'll see any issues, but yeah, at 4 years old there's no way to know for sure.

For the price of another card, a new 1kw PSU, and Ramen noodle lunches for 2 weeks I can get a GTX Titan! :awe:

RussianSensation

Elite Member

- Sep 5, 2003


And you suffer from the delusion that you can compare FPS across single and multi GPU solutions just like that...point your finger at yourself.

If you look beyond his walls of red herrings... his logic is flawed, false, or he is lying.

The comparison I made specifically discussed taking a GTX660Ti and doubling it. No SLI was even discussed. Go re-read the thread before attacking me for the nth time. All I did was try to extrapolate to get us an estimate of where the Titan might land. I provided 2 different cases too. Next time please take more time to carefully read what is being discussed.

So he uses bad math too....thx updating list next time

GTX660Ti boosts to 980MHz on average. Case 2 has Titan with a 915MHz base and boost to 975MHz (some of the rumoured specs for the Asus card were 915/975). I rounded away the 5MHz differential to make it 200% of a GTX660Ti (= 100%). I'm not trying to ace a math test; it's a quick estimate. If you want to be exact and do 975 / 980MHz x 2 x 100% (GTX660Ti), knock yourself out. You have a calculator.
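For anyone who does want the exact figure, here is a minimal sketch of that estimate. It assumes, as the post does, that performance scales linearly with shader count and clock (real games rarely scale this cleanly). The doubling matches Titan's 2688 CUDA cores versus the GTX 660 Ti's 1344; the clocks are the ones quoted above, and the function name is just illustrative.

```python
# Quick scaling estimate: Titan ~= a GTX 660 Ti doubled, adjusted for boost clock.
# Assumption: performance scales linearly with shader count and clock speed.

GTX660TI_BOOST_MHZ = 980  # average observed boost clock quoted in the post
TITAN_BOOST_MHZ = 975     # rumoured Asus boost clock quoted in the post
SHADER_MULTIPLIER = 2     # 2688 CUDA cores vs. 1344 on the GTX 660 Ti


def estimate_relative_perf(base_pct: float = 100.0) -> float:
    """Estimated Titan performance, as a percentage of a GTX 660 Ti (= base_pct)."""
    clock_ratio = TITAN_BOOST_MHZ / GTX660TI_BOOST_MHZ
    return clock_ratio * SHADER_MULTIPLIER * base_pct


print(f"Exact: {estimate_relative_perf():.1f}% vs. rounded: 200%")
```

The 5MHz difference only moves the estimate by about one percentage point, so rounding to 200% doesn't change the conclusion.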

Hehe... call an ambulance... RS needs to revise his posts now ^^

Right, because nearly every single site that started to leak specs/pictures and details of the Titan discussed the limited availability of 10,000 units. Since every rumour discussed before an official launch is considered in a forum thread dedicated to the pre-launch of a new product, discussing that aspect was not biased. You aren't a lawyer in real life by any chance, are you? You sound like a really fun person. If you don't like discussions/rumours before an official product launch, then don't participate and just wait until everything is revealed officially.

----

Nice card, sexy look, high-quality components. 1176MHz early overclocking results on stock voltage are impressive. With more voltage and better cooling, this is going to be an awesome product for overclockers on water. For some buyers, the 1/3rd-rate double precision is going to be a great feature: a card with K20X-class DP at a fraction of the price. This is more proof than ever that NV will make large-die GPUs because they need to service the Tesla/Quadro markets (big-die strategy), and that compute performance is becoming more and more important in many applications outside of games; NV is capitalizing on this early. I expect Maxwell to take it to the next level. NV projects GFLOPS/watt will improve 3x from Kepler, from 5 GFLOPS/watt to 15 GFLOPS/watt. That means we could end up with a GPU having 14 TFLOPS of single precision in a 250W power envelope on 20nm. Exciting times ahead!
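One way to arrive at the ~14 TFLOPS figure is sketched below. It assumes only the 3x perf/W ratio carries over, applied to GTX Titan's single-precision peak at its 250 W TDP; the Titan specs used (2688 CUDA cores, 837 MHz base clock, 250 W TDP) come from NVIDIA's published numbers rather than the post, and 2 FLOPs per core per clock counts one fused multiply-add.

```python
# Back-of-envelope extrapolation: 3x perf/W applied to Titan's SP throughput.
# Assumptions: peak SP = cores * 2 FLOPs/clock (FMA) * base clock, and the
# projected Kepler -> Maxwell perf/W gain is a flat 3x at the same 250 W.

TITAN_CUDA_CORES = 2688
TITAN_BASE_CLOCK_GHZ = 0.837
FLOPS_PER_CORE_PER_CLOCK = 2   # one fused multiply-add counts as 2 FLOPs
TITAN_TDP_W = 250
PERF_PER_WATT_GAIN = 15 / 5    # 5 -> 15 GFLOPS/watt projection from the post


def sp_tflops(cores: int, clock_ghz: float) -> float:
    """Peak single-precision TFLOPS at the given clock."""
    return cores * FLOPS_PER_CORE_PER_CLOCK * clock_ghz / 1000


titan_tflops = sp_tflops(TITAN_CUDA_CORES, TITAN_BASE_CLOCK_GHZ)
titan_gflops_per_w = titan_tflops * 1000 / TITAN_TDP_W
future_tflops = titan_gflops_per_w * PERF_PER_WATT_GAIN * TITAN_TDP_W / 1000

print(f"Titan SP peak: {titan_tflops:.2f} TFLOPS ({titan_gflops_per_w:.1f} GFLOPS/W)")
print(f"With 3x perf/W at {TITAN_TDP_W} W: {future_tflops:.1f} TFLOPS")
```

This lands at about 13.5 TFLOPS, consistent with the "14 TFLOPS" estimate; boost clocks would push both numbers slightly higher.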

For double precision, to unlock full performance you must open the NVIDIA Control Panel and navigate to “Manage 3D Settings”. In the Global Settings box you will find an option titled “CUDA – Double Precision”, but... GeForce GTX Titan runs at reduced clock speeds when full double precision is enabled. Still a great option if you are working on CUDA applications.

Good to know NV publicly confirms what I said earlier: a card's maximum power consumption goes up in real-world applications other than games that happen to use more of the GPU's functional units, such as computing projects, raytracing, rendering, compiling, etc. This is why NV/AMD can't just look at average power consumption in videocards when setting GPU clocks. Not crippling this card's DP to 1/24th like GK104 is great news for some people!
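To put the 1/3 vs. 1/24 difference in numbers, here is a small sketch of peak double-precision throughput at base clock. The core counts and base clocks (GTX Titan: 2688 cores at 837 MHz; GTX 680: 1536 cores at 1006 MHz) are taken from NVIDIA's published specs, not from the post. Because Titan drops its clocks when full DP is enabled, its figure here is an upper bound.

```python
# Peak double-precision GFLOPS at base clock for the two DP:SP ratios discussed.
# Assumption: peak = cores * 2 FLOPs per clock (FMA) * clock * DP:SP ratio.
# Titan's real DP clock is lower with full DP enabled, so its value is an upper bound.
from fractions import Fraction


def peak_gflops(cores: int, clock_mhz: int, dp_ratio: Fraction = Fraction(1)) -> float:
    """Peak GFLOPS, scaled by the DP:SP rate (Fraction(1) means single precision)."""
    return float(cores * 2 * clock_mhz * dp_ratio) / 1000


titan_dp = peak_gflops(2688, 837, Fraction(1, 3))     # GTX Titan (GK110), 1/3 rate
gtx680_dp = peak_gflops(1536, 1006, Fraction(1, 24))  # GTX 680 (GK104), 1/24 rate

print(f"GTX Titan DP: {titan_dp:.0f} GFLOPS, GTX 680 DP: {gtx680_dp:.0f} GFLOPS")
print(f"Ratio: {titan_dp / gtx680_dp:.1f}x")
```

Using `Fraction` keeps the ratio arithmetic exact until the final conversion, which makes the comparison easy to check by hand.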

AMD fucked up in the HPC market, and this is what they get: a GPU for the consumer market that they don't really have an answer for.

AMD never had a real presence in the HPC market. NV created this market themselves starting with G80. GCN is AMD's first real effort to be taken seriously. NV has a 6-year head start. It's not exactly unexpected for NV to continue being ahead: more financial/engineering resources, CUDA infrastructure in place, and sticky customers they've been working with for more than half a decade all mean NV has already been making a lot of $ in this segment, which fuels their desire to grow this revenue stream. This is why NV continues to make 551mm² die chips. AMD doesn't have this luxury.


Phynaz

Lifer

- Mar 13, 2006


NO BENCHES?!

Fuck's sake, NVidia.

If Intel is gonna play the same game with Haswell, I'm gonna have to start rooting for bloody ARM -_-

Nvidia probably has something to hide. It's the same thing AMD did when Bulldozer launched.

Get the hype machine turned up before the truth emerges.

Grooveriding

Diamond Member

- Dec 25, 2008


Nvidia probably has something to hide. It's the same thing AMD did when Bulldozer launched.

Get the hype machine turned up before the truth emerges.

We've seen nvidia's slides leaked, which always amount to best case scenario situations. Per those slides on average 40% over GTX 680.

In actual impartial reviewers' hands we will probably see it sit about 35% faster than a 680, averaged across the board on current games. Less than the hype it was spun up to, and even more expensive than rumoured....

RussianSensation

Elite Member

- Sep 5, 2003


Other than the more mature 28nm node, there is another possible reason why the Titan is so much more efficient than Tahiti XT:

"Happily for NVIDIA they have made some interesting design decisions about the double precision units and keeping them from affecting TDPs when running single precision applications and games" ~ PCPerspective

Very innovative. I've never seen any GPU do this.


96Firebird

Diamond Member

- Nov 8, 2010


Last post on this, since I don't want to steer the thread further off course, but this guy's Kill A Watt meter made me think twice (and yes, I acknowledge he has more hardware than me). Even at 750-775W I wouldn't feel comfortable, considering my PSU is already 4 years old!

To be fair, that is from the wall. Your PSU is (was) capable of outputting 850W continuously, which means it would pull ~1060W from the wall at full load (assuming 80% efficiency). You should have plenty of room left, as (depending on PSU efficiency) the system in the picture is actually supplying only ~620-700W to the components.
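The wall-vs-DC arithmetic above can be sketched like this. It assumes a single fixed efficiency figure, whereas real PSU efficiency varies with load (it is usually best around mid-load); the 80% and 90% values are just the illustrative range used in this post.

```python
# Wall (AC) draw vs. DC output for a PSU at a fixed, assumed efficiency.
# Assumption: efficiency is constant across the load range, which real units only approximate.


def wall_draw_w(dc_load_w: float, efficiency: float) -> float:
    """AC power pulled from the wall to deliver dc_load_w to the components."""
    return dc_load_w / efficiency


def dc_output_w(wall_w: float, efficiency: float) -> float:
    """DC power actually reaching the components for a given wall reading."""
    return wall_w * efficiency


print(f"850 W DC at 80% efficiency: ~{wall_draw_w(850, 0.80):.0f} W from the wall")
for eff in (0.80, 0.90):
    print(f"775 W at the wall, {eff:.0%} efficient: ~{dc_output_w(775, eff):.0f} W DC")
```

The key point is that a meter at the wall always reads higher than what the PSU is actually delivering, so a 775W wall reading is well inside an 850W unit's rating.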

HisDivineOrder

Member

- Jun 24, 2012


Even though I think it's overpriced by a little (given it's a $1k GPU, I think $100-200 qualifies as "a little"), I am looking forward to the benchmarks of a Tri-SLI system with these cards. Not because I'll buy three or even just one of this card. Ha, I wish. No, I just want to see what's possible for the 1%'ers out there.

I think it's going to be a good halo product. Given the sea of console ports and given that I don't care about Eyefinity or 3D gaming, I don't think I'm the target audience for this thing. And I think by the time game ports from the next gen consoles show up and trash my 670, nVidia will have a new halo product that trashes them.

Granted, I don't care that much about AA, so I was probably never the target audience for any halo product in the last five years at least. Consider me grateful that GPUs have gotten to the point where $300-ish products can run 2560x1600 easily on cards that don't function like room heaters. That's all I wanted and it became true last year.

Everything else to me is just gravy.


Golgatha

Lifer

- Jul 18, 2003


Shhhhh. Nobody else here seems to have taken Econ 101.

Moreover, they all know better than a company pushing $4b in revenue.

That said, I agree that this card should be priced at $800 to differentiate it from the 690. But I'm not going to pretend I know more than nVidia. The market will decide who's right in the end.

Personally I'd say $650 with 3GB of RAM. I am interested in entertaining a purchase at this price point. I do like the thermals and the all metal construction. It's a very nice card, it's just not worth $1,000 to me personally. Also, the extra 3GB of RAM does almost nothing for gamers, it's only needed for compute projects. Seems like a strange duck to market to gamers IMO.

Tweak155

Lifer

- Sep 23, 2003


It's really dumb for people to talk about price vs. performance or power consumption on a card that is $1000. If you care about those things, you are in the wrong thread.

Not really. If the card provided a 200%+ performance increase (over the 680), it would be a valid discussion and a valid reason to opt for the card.

It's really dumb for people to talk about price vs. performance or power consumption on a card that is $1000. If you care about those things, you are in the wrong thread.

No. OK?

6+8 pin and TDP/cooling combo is what is going to limit overclocking on Titan

If Titan had 280W power consumption at full load, you wouldn't be able to OC it much.
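The 6+8-pin limit comes down to simple arithmetic: within the PCI Express spec, the x16 slot supplies up to 75W, a 6-pin connector 75W and an 8-pin connector 150W. The sketch below shows how much in-spec headroom that leaves at Titan's rated 250W TDP versus the hypothetical 280W above. It only models the spec budget; in practice the board's power target and cooling set the real ceiling, and some cards do draw beyond spec.

```python
# In-spec board power budget for a card fed by the slot plus 6-pin + 8-pin connectors.
PCIE_SLOT_W = 75   # x16 slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector


def in_spec_budget_w(*aux_connectors_w: int) -> int:
    """Total board power available within spec: slot plus auxiliary connectors."""
    return PCIE_SLOT_W + sum(aux_connectors_w)


budget = in_spec_budget_w(SIX_PIN_W, EIGHT_PIN_W)

for load_w in (250, 280):  # Titan's rated TDP vs. the hypothetical 280 W load
    headroom = budget - load_w
    print(f"{load_w} W load: {headroom} W of in-spec headroom ({headroom / load_w:.0%})")
```

At 280W there would be only about 7% headroom left for overclocking within spec, versus about 20% at 250W.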

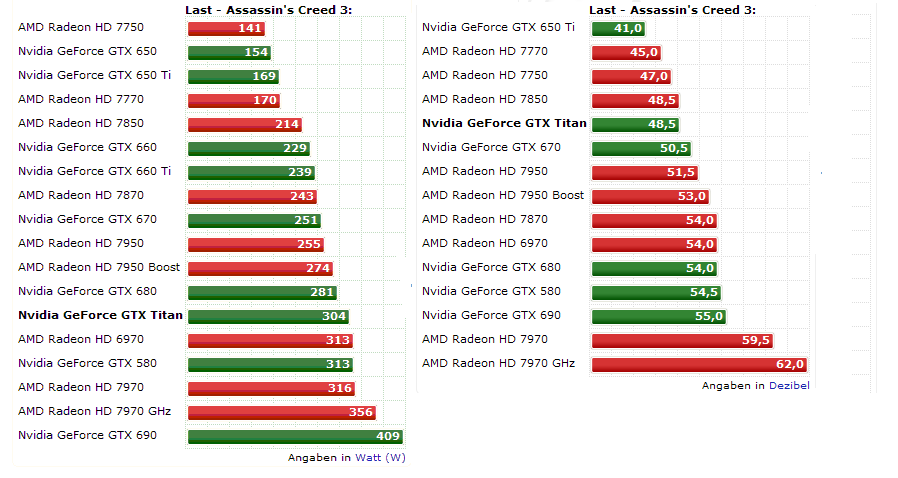

Entire system power consumption and loudness in AC3



AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.