Deus Ex: Mankind Divided system specs revealed

Page 5 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.


Red Hawk

Diamond Member

- Jan 1, 2011

- 3,266

- 169

- 106

FYI, the game benchmarks right now should probably be ignored altogether as they are not using final release code. Also, ultra quality uses 4x MSAA by default, so that has a lot to do with why there is such a large performance difference between ultra and high. And from what I've been reading, global illumination is the other massive performance drain.

Yep, not using final release code, and not using drivers optimized for the game. Not much point to GameGPU's benchmarks other than showing why the DirectX 12 renderer was delayed, because it seems to perform even worse than the DX11 renderer, and that sure isn't the way it's supposed to work.

MSAA? Bleh. MSAA is such a performance hit in games these days and doesn't even eliminate a lot of jaggies anymore. It's good that more games are using temporal antialiasing, the best implementation of which seems to be TSSAA as used in Doom. Although...looking at the options menu screenshots that were posted, it looks like temporal antialiasing is on there, but I don't see MSAA.

Yep, not using final release code, and not using drivers optimized for the game. Not much point to GameGPU's benchmarks other than showing why the DirectX 12 renderer was delayed, because it seems to perform even worse than the DX11 renderer, and that sure isn't the way it's supposed to work.

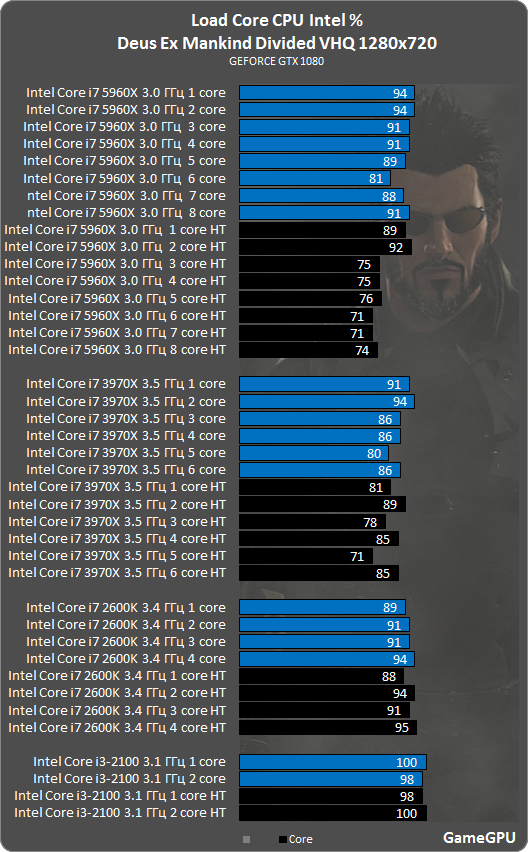

Not using final code, but they did use the game-optimized drivers. It seems like the game is suffering from some sort of CPU threading issue, because the game is using way too much of the CPU. I mean, just look at it. If a 5960X is loaded to this degree, they really screwed up.

MSAA? Bleh. MSAA is such a performance hit in games these days and doesn't even eliminate a lot of jaggies anymore. It's good that more games are using temporal antialiasing, the best implementation of which seems to be TSSAA as used in Doom. Although...looking at the options menu screenshots that were posted, it looks like temporal antialiasing is on there, but I don't see MSAA.

Yeah, MSAA is a massive hit, especially in games that have a lot of foliage. But NVidia does offer MFAA, which boosts the performance of MSAA considerably. I will test it when the game finally ships.

Way too much or just well threaded?

Still waiting for my key. Grrr.

It could be both. A well threaded game doesn't necessarily mean an optimized one. Based on a lot of things I've read from developers over the years, there are diminishing returns the more threads you try to address.
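The diminishing-returns point can be illustrated with Amdahl's law, which is one standard model and not necessarily what those developers had in mind. A minimal Python sketch, assuming a hypothetical workload where 80% of the frame work can run in parallel (the 0.8 figure and the function name are made up for illustration):

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Ideal speedup predicted by Amdahl's law.

    parallel_fraction: share of the work that can be spread across threads (0..1).
    threads: number of threads the work is spread over (>= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if threads < 1:
        raise ValueError("threads must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / threads)


if __name__ == "__main__":
    # Assumed, illustrative value: 80% of the work is parallelizable.
    for n in (1, 2, 4, 8, 16):
        print(f"{n:2d} threads -> {amdahl_speedup(0.8, n):.2f}x")
```

Under that assumption the speedup can never exceed 5x no matter how many threads are used, and each extra thread adds less than the one before it.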

Yep, not using final release code, and not using drivers optimized for the game. Not much point to GameGPU's benchmarks other than showing why the DirectX 12 renderer was delayed, because it seems to perform even worse than the DX11 renderer, and that sure isn't the way it's supposed to work.

MSAA? Bleh. MSAA is such a performance hit in games these days and doesn't even eliminate a lot of jaggies anymore. It's good that more games are using temporal antialiasing, the best implementation of which seems to be TSSAA as used in Doom. Although...looking at the options menu screenshots that were posted, it looks like temporal antialiasing is on there, but I don't see MSAA.

I think this game is using clustered forward shading, so MSAA wouldn't necessarily be a huge performance hit. It still only helps with geometry and transparencies though.

Red Hawk

Diamond Member

- Jan 1, 2011

- 3,266

- 169

- 106

On a separate note, the Human Revolution Director's Cut is still kinda broken. I have both the original release of the game and the DC installed. The DC hitches all over the place, while the original release is smooth. Which is a shame, because otherwise the DC has several gameplay advantages over the original release.

The game seems to run well when MSAA is not used:

Rig and settings used:

Settings Used:

• Preset: CUSTOM

• Texture Quality: ULTRA

• Texture Filtering: 16x ANISOTROPIC

• Shadow Quality: High

• Contact Hardening Shadows: ULTRA

• Temporal Anti-Aliasing: ON

• Motion Blur: OFF

• Depth of Field: OFF

• Bloom: ON

• Volumetric Lighting: Ultra

• Subsurface Scattering: ON

• Cloth Physics: ON

• Ambient Occlusion: VERY HIGH

• Tessellation: ON

• Parallax Occlusion Mapping: HIGH

• Screenspace Reflections: ULTRA

• Sharpen: ON

• Chromatic Aberration: ON

• Level of Detail: VERY HIGH

PC Specs:

– Microsoft Windows 10 Pro

– Intel Core i7 6700k @ 4.2GHz (Turbo)

– NZXT Kraken X61 106.1 CFM Liquid CPU Cooler

– G.Skill Ripjaws V Series 32GB (2 x 16GB) DDR4-3200 Memory

– EVGA GeForce GTX 1080 FTW 8GB GDDR5X

I'm not terribly worried about using MSAA, I've found that in a lot of modern titles it's not the gold standard it used to be. It doesn't seem to do anything for the "shimmering" effect you get on some textures. Some of the post process AA options are starting to look pretty impressive. They cost a fraction of what MSAA does, work better at reducing those "shimmers", and work nearly as well on the textures MSAA is good at smoothing out, while not blurring the entire scene as badly as FXAA used to.

MSAA doesn't touch textures, unless they're transparent (and only sometimes for those).

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

Yep, not using final release code, and not using drivers optimized for the game. Not much point to GameGPU's benchmarks other than showing why the DirectX 12 renderer was delayed, because it seems to perform even worse than the DX11 renderer, and that sure isn't the way it's supposed to work.

MSAA? Bleh. MSAA is such a performance hit in games these days and doesn't even eliminate a lot of jaggies anymore. It's good that more games are using temporal antialiasing, the best implementation of which seems to be TSSAA as used in Doom. Although...looking at the options menu screenshots that were posted, it looks like temporal antialiasing is on there, but I don't see MSAA.

True, but in many games MSAA is still the best option unless one manually forces SSAA. In many games the post-processing AA filters blur the image to the point where you start to wonder why you spent the extra $ on a 2560x1440, 3440x1440 or a 4K monitor in the first place. PCGamesHardware and GameGPU agree that MSAA provides the best IQ in Deus Ex MD, as the alternatives blur the game:

PCGamesHardware:

"The game's MSAA is very expensive: not only does the frame rate drop significantly when switching to the 2×, 4×, and 8× modes, but above all the additional buffers need abundant graphics memory. In return, MSAA smooths the numerous polygon edges better than the additionally available FXAA and Temporal AA modes. The latter two cause a slight blurring of the image, which the developers try to compensate for with a sharpening filter that can also be switched on and off. This post-processing effect is a matter of taste; we find, however, that the sharpening is exaggerated, even with TAA active."

http://www.pcgameshardware.de/Deus-.../Specials/Benchmarks-Test-DirectX-12-1204575/

PCGamesHardware is also of the opinion that the game's global illumination (part of the engine) is what puts extra demand on GPUs:

"The generally high system requirements could be at least partly due to the global illumination, because it apparently cannot be turned off."

They are also saying that 4GB of VRAM isn't enough for 1080p Full HD with MSAA in this title!

"In addition, the amount of video memory has to be considered, because 4 GiB already came up short in our test at Full HD."

This shouldn't be surprising as I consider any 2016 $200+ GPU with less than 4GB of VRAM DOA.
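As a rough sketch of why MSAA eats video memory: a multisampled render target stores one value per sample, so its size grows linearly with the sample count. The Python below assumes 4 bytes per sample and a hypothetical number of render targets; the game's actual buffer layout is not known from these articles, so this only shows the scaling, not real usage.

```python
BYTES_PER_MIB = 1024 ** 2


def msaa_targets_mib(width: int, height: int, samples: int,
                     bytes_per_sample: int = 4, targets: int = 1) -> float:
    """Approximate size in MiB of `targets` multisampled render targets.

    bytes_per_sample and targets are illustrative assumptions, not values
    taken from the game or from the benchmark articles.
    """
    total_bytes = width * height * samples * bytes_per_sample * targets
    return total_bytes / BYTES_PER_MIB


if __name__ == "__main__":
    # Full HD, with an assumed two targets (for example color plus depth).
    for samples in (1, 2, 4, 8):
        size = msaa_targets_mib(1920, 1080, samples, targets=2)
        print(f"{samples}x: {size:.1f} MiB")
```

Whatever the real number of buffers is, going from no MSAA to 4x multiplies the footprint of every multisampled target by four, and 8x by eight.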

Since MSAA produces such a massive performance hit in this game at this time, PCGamesHardware chose to use temporal AA (TAA), DX11 mode ["Therefore, we are forgoing measurements under DX12 for the time being, until the implementation has matured"], and a custom benchmark in Prague.

"For the first graphics card benchmarks we set up a game scene in Prague. We rely on the game's "Ultra" preset and the performance-friendly temporal antialiasing, which is activated automatically with the "Ultra" graphics preset. Deus Ex: Mankind Divided incidentally also offers a built-in benchmark. Since these, however, rarely have anything to do with real workloads, we forgo such measurements, convenient and reproducible though they are."

Under DX11, the GTX1060 comes last, behind Hawaii's R9 290/390, and the RX 480 leads by almost 11.5%. Pretty amazing that a cut-down Hawaii 290/390 is consistently beating the GTX780Ti and GTX970, and now it's beating the GTX1060 in DEMD. Remarkably, this is only a 1GHz 390 going against a 1.85GHz 1060, but many 390s can overclock to 1150-1200MHz.

Under DX11, Fury X's performance looks terrible. Hopefully the DX12 patch brings proper Async Compute that allows AMD cards to shine as this seems to be the most demanding next gen title of 2016.

Most people on this forum aren't talking about it, but the RX 480 only has 32 ROPs at ~1.3GHz and yet it's beating both the 48 ROP GTX1060 at 1.85GHz and the 64 ROP R9 390 at 1GHz in a next gen title! Good stuff from GCN 4.0. In the past ROPs were one of the biggest bottlenecks in games.
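To put numbers on that paper comparison, the usual back-of-the-envelope formula is peak pixel fill-rate ≈ ROP count × core clock. A minimal Python sketch using only the approximate ROP counts and clocks quoted above; these are theoretical peaks that ignore memory bandwidth and every other limit, and the function name is just for illustration:

```python
def peak_fill_rate_gpixels(rops: int, clock_ghz: float) -> float:
    """Theoretical peak pixel fill-rate in gigapixels per second."""
    return rops * clock_ghz


# (ROPs, approximate clock in GHz) as quoted in the post, not measured values.
CARDS = {
    "RX 480": (32, 1.3),
    "GTX 1060": (48, 1.85),
    "R9 390": (64, 1.0),
}

if __name__ == "__main__":
    baseline = peak_fill_rate_gpixels(*CARDS["RX 480"])
    for name, (rops, clock) in CARDS.items():
        rate = peak_fill_rate_gpixels(rops, clock)
        print(f"{name}: {rate:.1f} GP/s ({rate / baseline:.2f}x the RX 480)")
```

On these figures the RX 480 has well under half the paper fill-rate of the GTX 1060 and roughly two thirds of the R9 390's, which is what makes its benchmark lead stand out.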

A couple side notes:

1. I think the game should have been delayed until DX12 was incorporated into the title. If DX12 accelerates performance, why release the game in a less-than-optimal DX11 state? If DX12 does not accelerate performance, why incorporate it in the first place? Seems like there is an inconsistency there.

2. If the game is getting a DX12 patch, that means the backbone of the game is still DX11. This suggests it'll be a while before we start to see "true" DX12 games, in the same manner it took a while to transition from DX9 to 10 or 10 to 11. It's nice to see that some developers are at least trying to add DX12 to improve CPU multi-threading and GPU utilization (hopefully the DX12 patch has improved performance).

I don't think adding in DX12 later really matters much. Look at Doom. It was OGL initially and Vulkan was patched in later which worked wonders for AMD hardware.

Also from everything I'm reading, this game runs rather well UNTIL MSAA is enabled. I don't think that alone is worth delaying the whole game personally.

Azix

Golden Member

- Apr 18, 2014

- 1,438

- 67

- 91

Did they say anything about the usage of the Fury X? Percentage. Wish they had more cards in the chart.

I'd guess the 390 is suffering from bottlenecks related to CPU/drawcalls. The game has a lot of objects from what I saw. Expect Grenada at least to be faster in DX12. Fury X much faster if not limited by VRAM.

So dropping contact hardening shadows, ambient occlusion, and volumetric lighting to high will up the FPS seeing as there is no option to gut global illumination? Ironic that 1080p is still putting up a fight, even "next-gen" GPUs will melt with settings upped. And that 3GB 1060 will be about as useful as a GTX 960 2GB.

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

ROPs are not a major bottleneck in modern games. Or at least they shouldn't be the primary bottleneck.

If by past you mean perhaps first generation 360/ps3 ports with much lower shader complexity, then you might be right.

I never said they are the primary bottleneck, just one of them. Of course GPUs need to be balanced, but you still missed my point entirely.

3 years ago with Kepler, $600+ GPUs such as the GTX780/780Ti/OG Titan had 48 ROPs and AMD bumped that up to 64 ROPs with the R9 290/290X. 2 years ago NV bumped the ROP count to 56/64 on the GTX970/980. This generation NV increased ROPs by 50%, from 32 to 48, by moving from the 960 to the 1060. The RX 480 is just an R9 285/380 successor but AMD chose to keep ROPs at 32. The RX 480's peak pixel fill-rate is only 65% of the GTX970's and less than half of the GTX980's, and yet it beats the 970 and is barely behind the 980. In your view, did all of the GPUs that preceded the RX 480 have excessive/useless amounts of ROPs then?

Synthetic pixel fill-rate for RX 480 has it even below the GTX960!

Yet, in the higher resolutions where pixel fill-rate is even more important, RX 480 is close to 2X faster than the GTX960. Normally we should NOT see a scenario where the Polaris 10 GPU is so unbalanced on paper and yet is performing so well. Its paper specs are completely underwhelming.

Did they say anything about the usage of the Fury X? Percentage. Wish they had more cards in the chart.

I'd guess the 390 is suffering from bottlenecks related to CPU/drawcalls. The game has a lot of objects from what I saw. Expect Grenada at least to be faster in DX12. Fury X much faster if not limited by VRAM.

Since we can't compare final DX11 vs. DX12 results yet, it's hard to tell if AMD simply didn't optimize DX11 drivers much knowing its cards may perform way better under DX12 + Async OR the DX11 API / drawcall bottleneck is so severe, that AMD cannot overcome it with Fury X under DX11 mode.

So dropping contact hardening shadows, ambient occlusion, and volumetric lighting to high will up the FPS seeing as there is no option to gut global illumination? Ironic that 1080p is still putting up a fight, even "next-gen" GPUs will melt with settings upped. And that 3GB 1060 will be about as useful as a GTX 960 2GB.

In YouTube videos, this game looked awful, like a 4-5 year old title. Per GameGPU screens though, it's a beautiful looking game. The difference between all of the YouTube footage and still screenshots is the most mind-blowing one I've seen in any PC game released in the last 5 years. It's the complete opposite of The Witcher 3, which looked incredible and the end result was a downgrade.

Before the developer removed the option to run the game under DX12, GameGPU was able to bench R9 295X2 and it ended up trading blows with GTX 980 SLI / GTX1080, which is pretty awesome as R9 295X2 cost barely more than a single GTX980 when they were competing with each other in the US.

I don't think adding in DX12 later really matters much. Look at Doom. It was OGL initially and Vulkan was patched in later which worked wonders for AMD hardware.

Also from everything I'm reading, this game runs rather well UNTIL MSAA is enabled. I don't think that alone is worth delaying the whole game personally.

Anecdotal, but it seems a lot of gamers buy these AAA titles on launch date. It's possible that by the time DX12 patch and AMD/NV DX12 optimized drivers come out, these gamers would have beaten the game. Also, some gamers may choose to upgrade specifically for this title and they will use DX11 performance as a point of reference, which may not accurately reflect the final user experience under DX12. My biggest issue is the game was delayed another 6 months already and they still haven't finished the DX12 patch? Sounds like it wasn't a priority which could mean it'll be another half-baked DX12 title from Nixxes, aka DX12 Rise of the Tomb Raider.

brianmanahan

Lifer

- Sep 2, 2006

- 24,696

- 6,054

- 136

got my 1070 installed, i think it'll have a better chance than a 7950 at playing this game with decent framerate

I never said they are the primary bottleneck, just one of them. Of course GPUs need to be balanced, but you still missed my point entirely.

Yet, in the higher resolutions where pixel fill-rate is even more important, RX 480 is close to 2X faster than the GTX960. Normally we should NOT see a scenario where the Polaris 10 GPU is so unbalanced on paper and yet is performing so well. Its paper specs are completely underwhelming.

I think increasing resolution only marginally increases the relative ROP load. Remember, shader invocations also increase proportionately to resolution. Additionally, modern games use lots of compute shaders, which don't use ROPs at all, meaning the processing increase from resolution can shift bottlenecks even farther from ROPs as resolution goes up.

I think the main reason Radeons perform relatively better at higher resolutions is that increasing resolution adds a lot of ALU load without increasing geometry load, and they have a higher ratio of ALU:geometry performance than GeForces.
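A toy model of that argument, sketched in Python: per-pixel (ALU) work scales with the pixel count while geometry work stays fixed, so the per-pixel share of the frame grows with resolution. The millisecond figures are invented purely for illustration and do not come from any benchmark of this game.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_scale(resolution: str, base: str = "1080p") -> float:
    """Pixel count of `resolution` relative to `base`."""
    width, height = RESOLUTIONS[resolution]
    base_width, base_height = RESOLUTIONS[base]
    return (width * height) / (base_width * base_height)


def per_pixel_share(geometry_ms: float, pixel_ms_at_base: float, scale: float) -> float:
    """Fraction of frame time spent on per-pixel work in this simple model."""
    pixel_ms = pixel_ms_at_base * scale
    return pixel_ms / (geometry_ms + pixel_ms)


if __name__ == "__main__":
    # Assumed costs at 1080p: 4 ms of geometry work, 8 ms of per-pixel work.
    for name in RESOLUTIONS:
        scale = pixel_scale(name)
        share = per_pixel_share(4.0, 8.0, scale)
        print(f"{name}: {scale:.2f}x pixels, {share:.0%} of frame is per-pixel work")
```

In this model a card with relatively more ALU throughput than geometry throughput gains ground as resolution rises, because the part of the frame it is good at keeps growing.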

got my 1070 installed, i think it'll have a better chance than a 7950 at playing this game with decent framerate

1070 is a significantly faster card, 7950 is not even in the same league.

Red Hawk

Diamond Member

- Jan 1, 2011

- 3,266

- 169

- 106

In YouTube videos, this game looked awful, like a 4-5 year old title. Per GameGPU screens though, it's a beautiful looking game. The difference between all of the YouTube footage and still screenshots is the most mind-blowing one I've seen in any PC game released in the last 5 years. It's the complete opposite of The Witcher 3, which looked incredible and the end result was a downgrade.

A possible explanation is that YouTube's compression algorithms distorted how the gameplay footage looks.

I'm not terribly worried about using MSAA, I've found that in a lot of modern titles it's not the gold standard it used to be. It doesn't seem to do anything for the "shimmering" effect you get on some textures. Some of the post process AA options are starting to look pretty impressive. They cost a fraction of what MSAA does, work better at reducing those "shimmers", and work nearly as well on the textures MSAA is good at smoothing out, while not blurring the entire scene as badly as FXAA used to.

I think the main reason MSAA has such a massive performance hit in Deus Ex MD is that the game is exceptionally detailed, with lots of surfaces and edges. MSAA, if I remember correctly, applies anti-aliasing to everything on screen. So the more objects you have on screen (like foliage), the more bandwidth is used.

Of course NVidia cards from Maxwell on can use MFAA to take some of the sting out of using MSAA.

That said, I agree about some of the post process AA techniques being used today. The most notable is Doom's 8xTSSAA, which is as close to perfect as I've ever seen. No noticeable blur, but it annihilates shimmering and gets rid of about 90% of the aliasing.

I think increasing resolution only marginally increases the relative ROP load. Remember, shader invocations also increase proportionately to resolution.

Agreed, relative ROP load should be about the same at higher resolutions. There should be 2 effects at higher resolution with respect to shader and ROP load:

1) More compute resources are available for fragment shading, as vertex shading is constant

2) ROPs become more efficient as triangles get larger.

tviceman

Diamond Member

The RX 480 putting a serious beating on the GTX 1060 and the R9 390. Looks like AMD is putting in the special "optimization" sauce for their newer cards like Nvidia did with Maxwell over Kepler.

I expect just about every game coming out that is sponsored by Nvidia or AMD to have the same polarizing results like this, at least until a few driver releases after the game releases. Recommending a card now, more than ever, will be based on the specific games played.

ThatBuzzkiller

Golden Member

- Nov 14, 2014

- 1,120

- 260

- 136

The RX 480 putting a serious beating on the GTX 1060 and the R9 390. Looks like AMD is putting in the special "optimization" sauce for their newer cards like Nvidia did with Maxwell over Kepler.

I expect just about every game coming out that is sponsored by Nvidia or AMD to have the same polarizing results like this, at least until a few driver releases after the game releases. Recommending a card now, more than ever, will be based on the specific games played.

You forget that the RX 480 has higher geometry performance than the R9 390 ...
