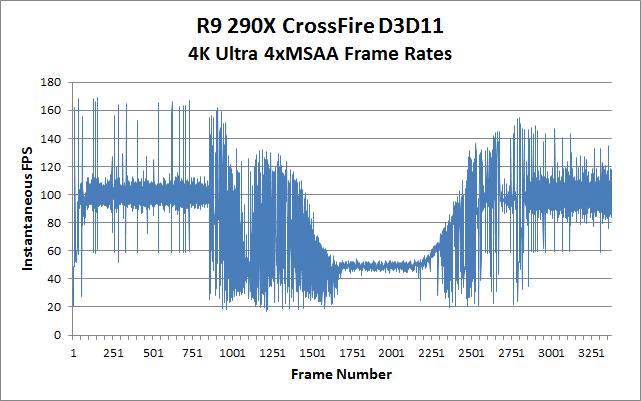

It's not a joke metric: if your minimum stays above what you consider reasonable, you will get good performance. But that isn't to say a lower minimum rules out very good performance, as long as that minimum is only hit very rarely or at a moment when it doesn't matter.

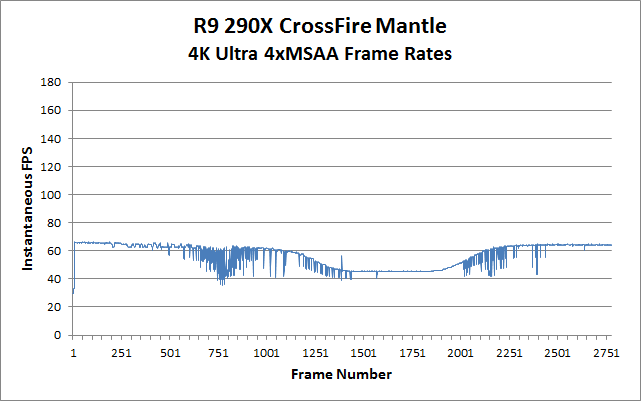

Rarely? How about once?

That image explains quite clearly why minimum fps is a far less meaningful metric than people here are led to believe.
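To put rough numbers on it, here's a quick sketch with made-up frame times (not taken from the screenshots): 999 smooth frames at about 60 fps plus a single 100 ms hitch. The one bad frame sets the "minimum fps" on its own, while the average and the count of slow frames show the run was basically smooth.

```python
# Hypothetical frame times in milliseconds: 999 frames at ~60 fps (16.7 ms)
# plus ONE 100 ms hitch, e.g. a one-off stutter. Numbers are illustrative only.
frame_times_ms = [16.7] * 999 + [100.0]

def fps(ms):
    """Convert a single frame time in ms to an instantaneous fps value."""
    return 1000.0 / ms

# Minimum fps is just the slowest single frame.
min_fps = fps(max(frame_times_ms))

# Average fps = frames rendered / total seconds elapsed.
avg_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)

# How many frames actually dropped below 30 fps?
slow_frames = sum(1 for t in frame_times_ms if fps(t) < 30)

print(f"min fps: {min_fps:.1f}")      # min fps: 10.0
print(f"avg fps: {avg_fps:.1f}")      # avg fps: 59.6
print(f"frames below 30 fps: {slow_frames} of {len(frame_times_ms)}")
```

A "10 fps minimum" sounds terrible, but here it comes from 1 frame out of 1000. That's why the minimum alone tells you very little without knowing how often it's hit.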