- Mar 1, 2020

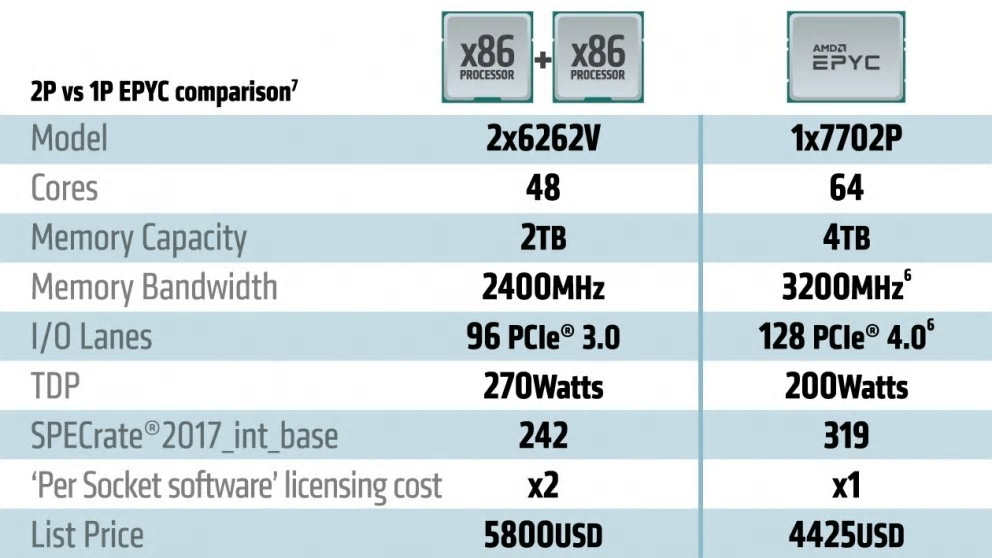

Say you're thinking of upgrading your servers and your goal is fast access to SQL Server data (a database with lots of tables and join-heavy queries, rendering pages for a web app). In this case your RAM ceiling will be 128GB.

While I appreciate the raw power and value of Epyc compared with Xeon, it seems to me that for SQL Server and web apps, the slower Xeon CPU paired with Storage Class Memory (SCM) is the clear performance winner. I'm not an expert in this realm. I looked to TPC-E for guidance but didn't really find any, so given these parameters I'd like feedback from anyone with knowledge in this particular area.

Thoughts, anyone?
