[VC]AMD Fiji XT spotted at Zauba



No one is arguing the definition of performance per watt. What we are saying is that performance per watt is SKU-specific. A general statement that one architecture is X% better in performance per watt is ONLY true if ALL SKUs on that new architecture are AT LEAST X% better in perf/watt than their predecessors on the previous generation. It's that simple.

If you say Maxwell overall provides 2X the perf/watt of Kepler, then for this statement to be true, BOTH GM204 and GM200 MUST provide at least 2X the perf/watt of GK104 and GK110, respectively.

OK, now with that out of the way, please explain how NV's 2X perf/watt marketing statement holds up if GM200 is rumoured to be only 40-60% faster than Titan Black? Do you get it now? They should have said the Maxwell architecture offers "up to 2X the perf/watt" of Kepler. That way you cover all your bases.
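The argument boils down to taking the minimum across SKUs instead of the maximum. A minimal Python sketch, assuming (hypothetically) that GM200 lands at roughly Titan Black's power while being ~50% faster, the midpoint of the 40-60% rumour; all ratios are placeholders, not measurements:

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Perf/watt of the new SKU relative to the old one (new / old)."""
    return perf_ratio / power_ratio


sku_gains = {
    # Taking the 2X marketing claim at face value for GM204 vs GK104.
    "GM204 vs GK104": 2.0,
    # Hypothetical: ~50% faster than GK110 (Titan Black) at roughly the same power.
    "GM200 vs GK110": perf_per_watt_gain(1.5, 1.0),
}

at_least = min(sku_gains.values())  # what an unqualified "2X" architecture claim needs
up_to = max(sku_gains.values())     # what an "up to 2X" claim promises

print(f"at least {at_least:.1f}x, up to {up_to:.1f}x")
```

Under these assumptions the honest architecture-wide wording is "at least 1.5x, up to 2.0x".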

It's a typical practice Apple uses: they advertise something as 2X faster, and then you see 1-2 apps out of 1000 running 2X faster on their new GPU/CPU. So in reality it's not 2X faster but up to 2X faster. That's why I hate marketing and their entire discipline; it's full of BS designed to get the average consumer, who has no time to do research or understand the terminology, to keep buying newer products.

hahaha

that's the most ridiculous...

I mean, I can't stop chuckling long enough

So, seriously

Are you for real?

What the heck are you talking about? You're not making any sense at all.

Nvidia marketed the GTX 980 as having twice the performance per watt of the GTX 680. They were obviously talking about the reference designs. It was a general statement explaining the progress from their past cards to their new cards. I am sure you know how these things work.

When a car company says "number one car sold in America" in its marketing, this (too) is just a general statement. Surely it can and will fall apart when you break it down to specific trims, body styles, packages and such. Heck, what exactly do they mean by that at all? That it is the number one car sold every second of every day? Every single day of the month? Or are they going by a specific data set they gathered and chose to market on? Seriously, that is how it works. It doesn't mean that the vehicle will forever remain in the number one slot, or that every model thereafter will take that number one position. It is marketing a specific data set relevant only at that time.

It's hard for me to believe you don't already know this.

General statement? That is all marketing is. Pick any stinking product and I will rip it apart for you. Let's talk about AMD, Intel, or Nvidia if you like. Of course you picked Nvidia to trash on, lol. But please, I challenge you to look at the marketing of any product from these other companies. Yet I doubt you will. I seriously don't think you would. It's much more fun having blinders on and going after the same one, over and over. It really has no place at all in this thread.

Sorry for continuing down the off-topic path. That is all I have to say about it.


Nvidia marketed the GTX 980 as having twice the performance per watt of the GTX 680.

And that's a deliberately flawed comparison, as they know that the memory controller will consume about the same in both cases, while the 980 has more execution units...

Looking at the TechReport review and using a case that favours the 980, we can clearly compute that the 980 has 65% better perf/watt than the reference 290X in Crysis 3 at 1440p, and 40% better than the 780. In 4K games, where the 290X performs better, the 65% perf/watt advantage shrinks notably. Now take the OEM 980 cards and perf/watt will decrease even further. Indeed, one has to wonder why no reference cards are sold: the perf/watt of a product that doesn't exist is used as the reference for real designs that are as power hungry as previous products...

http://techreport.com/review/27067/nvidia-geforce-gtx-980-and-970-graphics-cards-reviewed/8
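For reference, a perf/watt comparison like this is just a ratio of frames per watt. The sketch below uses made-up fps and wattage values (not TechReport's data) and also shows why it matters whether the watts are card-only or whole-system: a fixed platform draw added to both sides shrinks the apparent gap. `PLATFORM_W` is a hypothetical value.

```python
def perf_per_watt_advantage(fps_a: float, watts_a: float, fps_b: float, watts_b: float) -> float:
    """Percent by which card A's frames-per-watt exceeds card B's."""
    return ((fps_a / watts_a) / (fps_b / watts_b) - 1.0) * 100.0


PLATFORM_W = 100.0  # hypothetical non-GPU system draw (CPU, board, drives)

# Hypothetical card-only readings: card A 60 fps at 150 W, card B 50 fps at 200 W.
card_only = perf_per_watt_advantage(60.0, 150.0, 50.0, 200.0)
# The same cards measured at the wall, with the platform draw added to both.
system = perf_per_watt_advantage(60.0, 150.0 + PLATFORM_W, 50.0, 200.0 + PLATFORM_W)

print(f"card-only: {card_only:.0f}%, system: {system:.0f}%")
```

With these placeholder numbers the same pair of cards shows a 60% advantage card-only but only 44% at the wall.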

Edit: In the game below, the 980 has 33-35% better perf/watt than the 290X at the two more demanding settings, so much for those extraordinary perf/watt improvements...

http://forums.anandtech.com/showthread.php?t=2403455


96Firebird

Diamond Member

- Nov 8, 2010


now we can take OEMS 980 cards and the perf/watt will decrease even further, indeed one has to wonder why there s no reference cards sold, that is, perf/watt of a product that doesnt exist is used as reference for real designs that are as power hungry as previous products..

I'm so confused by this broken sentence... Are you saying there is no reference 980?

Abwx - that is system power, NOT GPU power.

I accounted for everything, as my numbers suggest. Also, the 680 is the least efficient of the Nvidia cards; actually, it's less efficient overall than a 290X Tri-X.

I'm so confused by this broken sentence... Are you saying there is no reference 980?

Where are they?

All we see are overclocked OEM cards.


I accounted for everything, as my numbers suggest. Also, the 680 is the least efficient of the Nvidia cards; actually, it's less efficient overall than a 290X Tri-X.

I'm not sure how you did.

Using card-only power (average) and Metro:

66% more efficient than the 680, 52% more efficient than the 770 (likely the 770 is squeezing out more performance thanks to better drivers). It's not twice as efficient, and I have no clue where Nvidia is getting that from, but it's more than 40%.

Is there a clock-to-clock comparison in there anywhere? If not, a highly overclocked card [980] on a refined process compared to underclocked cards nerfed by a lack of VRAM [3GB] doesn't mean a lot to me, IMO.

And that's a deliberately flawed comparison, as they know that the memory controller will consume about the same in both cases, while the 980 has more execution units...

Looking at the TechReport review and using a case that favours the 980, we can clearly compute that the 980 has 65% better perf/watt than the reference 290X in Crysis 3 at 1440p, and 40% better than the 780. In 4K games, where the 290X performs better, the 65% perf/watt advantage shrinks notably. Now take the OEM 980 cards and perf/watt will decrease even further. Indeed, one has to wonder why no reference cards are sold: the perf/watt of a product that doesn't exist is used as the reference for real designs that are as power hungry as previous products...

http://techreport.com/review/27067/nvidia-geforce-gtx-980-and-970-graphics-cards-reviewed/8

Edit: In the game below, the 980 has 33-35% better perf/watt than the 290X at the two more demanding settings, so much for those extraordinary perf/watt improvements...

http://forums.anandtech.com/showthread.php?t=2403455

What was the boost clock of the GTX 980 vs the 780? lol. No clocks, no benchmarks = useless, unless you drink the NV Kool-Aid.


I'm not sure how you did.

Using card-only power (average) and Metro:

66% more efficient than the 680, 52% more efficient than the 770 (likely the 770 is squeezing out more performance thanks to better drivers). It's not twice as efficient, and I have no clue where Nvidia is getting that from, but it's more than 40%.

I explained above that these cards are not adequate comparisons: they have 1536 EUs while the 980 has 2048. When you increase the EU count, you don't increase the memory controller size. Hence, if the power consumption split for the 680 is 40%/60% between the MC and the EUs, increasing the EU count (and performance) by 33% takes you to 120% of the TDP (the extra 33% of the 60% EU share adds 20 points). So the same card with more EUs is 133/120 = 1.108, i.e. 10.8% more efficient, even before talking about the uarch...

If the real improvement is, let's say, 40%, then starting from this basis inflates the number to 1.4 x 1.108 = 1.55, i.e. 55%. Here's how you build nice marketing numbers.
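The arithmetic can be sketched in Python. It keeps the post's simplifying assumptions (performance scales linearly with EU count, memory-controller power stays fixed, a 40/60 MC/EU power split) and its hypothetical 40% architectural gain; none of these are measured values.

```python
def efficiency_gain_from_more_units(mc_share: float, eu_share: float, eu_scale: float) -> float:
    """Relative perf/watt after scaling only the EU array.

    Assumes perf scales linearly with EU count and MC power stays fixed.
    """
    power = mc_share + eu_share * eu_scale  # relative to the original TDP
    perf = eu_scale                         # relative to the original performance
    return perf / power


eu_scale = 2048 / 1536  # GTX 980 vs GTX 680 EU (shader) count, i.e. 4/3
structural = efficiency_gain_from_more_units(0.4, 0.6, eu_scale)
combined = 1.4 * structural  # stacked on the hypothetical 40% uarch improvement

print(f"structural: {structural:.3f}, combined: {combined:.2f}")
```

With the exact 4/3 EU ratio the structural gain comes out at about 11.1% rather than 10.8% (the post rounds the increase to 33%), so the combined figure is roughly 1.56 instead of 1.55; the point being made is unchanged.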

I explained above that these cards are not adequate comparisons: they have 1536 EUs while the 980 has 2048. When you increase the EU count, you don't increase the memory controller size. Hence, if the power consumption split for the 680 is 40%/60% between the MC and the EUs, increasing the EU count (and performance) by 33% takes you to 120% of the TDP (the extra 33% of the 60% EU share adds 20 points). So the same card with more EUs is 133/120 = 1.108, i.e. 10.8% more efficient, even before talking about the uarch...

If the real improvement is, let's say, 40%, then starting from this basis inflates the number to 1.4 x 1.108 = 1.55, i.e. 55%. Here's how you build nice marketing numbers.

Not sure why you are splitting hairs. Nvidia has new compression technology with Maxwell, which is precisely why they can get by with > 780 Ti performance on 2/3 the bandwidth, and that is part of their efficiency gain. Sure, you could build a theoretical 770 with 224 GB/sec and 2048 shaders, but the efficiency gain would be minimal (the 770 is already tapped out in terms of bandwidth and scales poorly; it would gain maybe 10% performance from 33% more shaders). Nvidia never stated per shader; they stated per entire chip.
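The "2/3 the bandwidth" figure follows from the memory configurations: both cards use 7 Gbps GDDR5, but the GTX 780 Ti has a 384-bit bus while the GTX 980 (like the 770) has a 256-bit bus. A quick check in Python, using the standard peak-bandwidth formula:

```python
def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


gtx_780_ti = bandwidth_gb_s(7.0, 384)
gtx_980 = bandwidth_gb_s(7.0, 256)

print(gtx_980, gtx_780_ti, round(gtx_980 / gtx_780_ti, 3))
```

This is peak theoretical bandwidth only; it says nothing about how much Maxwell's compression recovers in practice.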

Also Kepler and Maxwell shaders are not comparable.

Simply put: if AMD builds its 390X with 4096 shaders and HBM and says it's 70% more efficient, are you saying this is untrue because the HBM must be removed to compare efficiency?

Efficiency is the sum of all parts of the GPU. Building really efficient shaders in a design that requires a ton of bandwidth doesn't make the chip more efficient unless total chip consumption drops (and vice versa).

This is the same obtuseness as when someone previously tried to compare perf/watt/area. It's not entirely wrong, but it's really weird logic.

3DVagabond

Lifer

- Aug 10, 2009


Came here expecting to see if any new information was known about the new AMD cards. Was treated to multiple pages of useless efficiency arguments. Good job all

What, an AMD thread getting totally derailed to talk about GM204 efficiency? Who would have thought that could happen?

Bateluer

Lifer

- Jun 23, 2001


Came here expecting to see if any new information was known about the new AMD cards. Was treated to multiple pages of useless efficiency arguments. Good job all

You expected better?

Nearly everyone in this thread is an armchair engineer blowing smoke from their buttocks.

You expected better?

Nearly everyone in this thread is an armchair engineer blowing smoke from their buttocks.

:thumbsup:

You expected better?

Nearly everyone in this thread is an armchair engineer blowing smoke from their buttocks.

:thumbsup: Laughed my arse off when I read that, thanks!!

NIGELG

Senior member

- Nov 4, 2009


It's like some of them have no life... I just want information, not stupid speculation.

You expected better?

Nearly everyone in this thread is an armchair engineer blowing smoke from their buttocks.

RussianSensation

Elite Member

- Sep 5, 2003


66% more efficient than the 680, 52% more efficient than the 770 (likely the 770 is squeezing out more performance thanks to better drivers). It's not twice as efficient, and I have no clue where Nvidia is getting that from, but it's more than 40%.

Exactly. Even NV owners such as tviceman and toyota have already calculated that GM204 is about 70% more efficient per watt in average gaming performance than GK104, but when this is pointed out, people just ignore facts and mathematics.

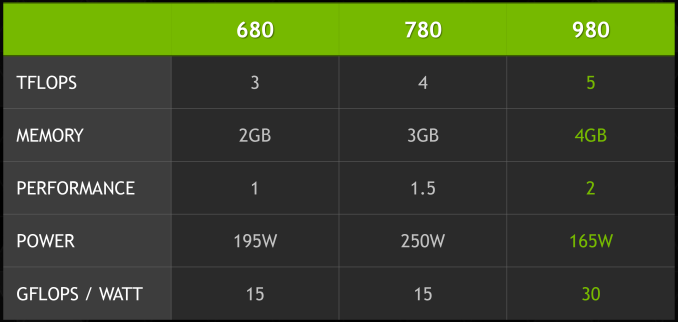

NV's measurement of efficiency was tied to GFLOPs/watt, not gaming performance/watt, but as usual marketing won over logic and facts! NV also used 195W as indicative of GK104's power usage and 165W for the 980. This is crazy misleading, since they used the lowest possible power usage of the reference 980 despite 99% of after-market 980s using much more than 165W at max load, and they used the higher figure for the 680 when a reference 680 uses less than 195W.
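To see how much the choice of power figures moves the headline number, here is a small Python sketch. The 195W and 165W values are the ones NV used according to the post; the performance ratio and the alternative wattages are hypothetical placeholders, not measurements.

```python
def perf_per_watt_ratio(perf_ratio: float, new_w: float, old_w: float) -> float:
    """New card's perf/watt relative to the old card's."""
    return perf_ratio * old_w / new_w


PERF_RATIO = 1.6  # hypothetical GTX 980 vs GTX 680 gaming performance ratio

# Power figures NV used (per the post): 165W for the 980, 195W for GK104.
marketing = perf_per_watt_ratio(PERF_RATIO, new_w=165.0, old_w=195.0)
# Hypothetical alternative figures: a 980 drawing more than 165W, a 680 less than 195W.
alternative = perf_per_watt_ratio(PERF_RATIO, new_w=185.0, old_w=175.0)

print(f"with NV's figures: {marketing:.2f}x, with the alternative: {alternative:.2f}x")
```

The same assumed performance ratio yields roughly 1.89x or 1.51x depending only on which wattages go into the denominator.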

---

Anyways, back to Fiji. The interesting part is that Fiji XT is supposed to be R9 380/380X, while Bermuda XT is R9 390/390X.

R9 390 series = Bermuda XT

http://www.pc-specs.com/gpu/AMD/R-300_Series/Radeon_R9_390/2264

"Finally the performance of the card in OpenGL is approximately 50% higher than the R9 290x (63.6 vs 42.4 GB / s) so it seems that AMD will offer a good performance increase with Pirate Islands."

http://www.profesionalreview.com/2014/11/12/primeros-detalles-de-la-radeon-r9-390x/

"The bleeding edge R9 390X is next – and will be based on Bermuda. You can expect the GPU to be targeting both the 980 Ti and the Titan X."

http://www.redgamingtech.com/amd-r9-380x-february-r9-390x-370x-announced/

"Previously there was a news that AMD Radeon 300 series would feature the Bermuda core and on R9 390X and R9 390 Bermuda XT would fuse with them. But last week the news from Zauba send everyone in the new void of thinking, as according to their latest entry AMD Next Generation of Radeon R9 300 Series will feature the Fiji XT GPU. Previous rumors claimed that, Bermuda GPU would be the part of AMD Next Generation of Radeon R9 300 Series."

http://tech4gamers.com/amd-r9-radeo...ns-4gb-3d-stacked-hbm-4096-stream-processors/

Right now, it's difficult to say whether Fiji XT is R9 380/380X and Bermuda XT is R9 390/390X, whether the codenames are now reversed, whether AMD will hold back the real Bermuda XT for the R9 490/490X, or whether someone incorrectly assumed that the Fiji XT that leaked is the R9 390 series when in fact it's only the R9 380 series.

If you guys look at AMD's strategy, their next gen mid-range cards roughly equal their last gen flagship cards in performance (similar to NV):

1. HD6950 (2nd fastest) < HD7850 (2nd fastest mid-range); HD6970 (fastest last gen) < HD7870 (next gen top mid-range)

2. HD7950 (2nd fastest high-end) < HD7950 V2 ~ R9 280 (2nd fastest mid-range); HD7970/7970Ghz (fastest last gen) ~ R9 280X (next gen top mid-range)

3. HD7970 was about 25-30% faster than HD7870 (2nd tier level card of that gen)

4. R9 290X was about 24-30% faster than HD7970Ghz/R9 280X (2nd tier card of that gen)

Based on the above, we are starting to see some trends:

Trend #1 - AMD's next gen mid-range is about as fast as last gen flagship. Therefore, we can estimate that R9 380/380X will be about as fast as R9 290/290X.

Trend #2 - AMD's flagship card tends to be about 25-30% faster than its comparable mid-range card of the same generation. Therefore, we can estimate that if R9 380/380X ~ R9 290/290X, then R9 390/390X will be at least 25-30% faster.

However, the rumours suggest that the R9 380X is aimed at GTX 970/980 cards. For that to be true, the R9 390/390X should be much more than 25-30% faster than the R9 290/290X: the 980 is 20% faster than the R9 290X, so for the R9 380X to compete with a 980 it has to be faster than the R9 290X, and applying another 25-30% on top of the R9 380X puts us well beyond 30% over the 290X.

"The R9 380X (based on the Pirate Islands architecture) is said to be aimed squarely at Nvidia’s recently released Nvidia Maxwell GTX 970 and 980."

http://www.redgamingtech.com/amd-r9-380x-february-r9-390x-370x-announced/

Connecting these dots, it's possible that the R9 390X will actually be more than 30% faster than the R9 290X.
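The two trends and the rumour can be combined in a few lines of Python. The ratios are the post's own estimates (a 25-30% flagship-over-mid-range gap, the 980 about 20% ahead of the 290X), normalised to R9 290X = 1.00; this is a sketch of the reasoning, not a prediction.

```python
R9_290X = 1.00                          # baseline
GTX_980 = 1.20                          # "980 is 20% faster than R9 290X"
FLAGSHIP_OVER_MIDRANGE = (1.25, 1.30)   # Trend #2


def project_flagship(midrange: float) -> tuple:
    """Flagship range implied by a given mid-range (x80X) performance level."""
    return tuple(midrange * k for k in FLAGSHIP_OVER_MIDRANGE)


trend_only = project_flagship(R9_290X)  # Trend #1: 380X ~ 290X
rumour = project_flagship(GTX_980)      # rumour: 380X competes with the 980

print("trend only:", [f"{x:.2f}" for x in trend_only])
print("rumour:    ", [f"{x:.2f}" for x in rumour])
```

If the rumour holds, the same 25-30% step lands the 390X at roughly 1.50-1.56x a 290X instead of 1.25-1.30x.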


NostaSeronx

Diamond Member

- Sep 18, 2011


I have heard the R9 370X, which is also the R9 M390X, will be the one competing with the GM204 SKUs (GTX 980/970/980M/970M).

There are two more SKUs above it: a big die and an even bigger die.


RussianSensation

Elite Member

- Sep 5, 2003


I have heard the R9 370X, which is also the R9 M390X, will be the one competing with the GM204 SKUs (GTX 980/970/980M/970M).

There are two more SKUs above it: a big die and an even bigger die.

I find it very hard to believe that AMD's 3rd-tier card will be competing with the GTX 970/980. The 270/270X series launched as $179-199 cards.

Unless AMD managed to go 20nm + HBM and make very large 20nm dies, I can't possibly believe that the 370X will compete with a 980. Right now a 290X is 67% faster than a 270X at 1080p. If the R9 370X is competing with the 970/980, are you suggesting that the R9 390X will be 50-70% faster than a 980/370X?

I think if the 390X beat the 980 by 25%, that would be a major accomplishment, because:

1. The 980 only beat the 290X by 20-22% after 11 months, so the R9 390X would accomplish a similar feat in nearly half the time if it launches by March 2015 with 20-25% more performance than the 980.

2. 25% over the 980 would make the 390X 51% faster than a 290X (1.21 for the 980 x 1.25 for the 390X). That's very impressive.

3. Looking at this graph:

http://www.computerbase.de/2014-09/geforce-gtx-980-970-test-sli-nvidia/6/

If 390X is 25% faster than a 980:

780Ti = 100%

980 = 105%

390X = 131% (25% over 980)

GM200 = 150% (if 50% faster than 780Ti)

If this pans out, GM200 would beat the 390X by only 15%. If a $549 390X beats the 980 by 25% in just 6 months, that would be pretty epic.
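The relative-performance chain can be checked with a few multiplications. The inputs are the post's figures (980 = 1.21x a 290X, 980 = 105% of a 780 Ti on the ComputerBase chart) plus its two hypothetical targets (390X = 980 + 25%, GM200 = 780 Ti + 50%):

```python
# Post's figures
R_980_VS_290X = 1.21
PERF_780TI = 1.00
PERF_980 = 1.05

# Hypothetical targets from the post
R_390X_VS_980 = 1.25
R_GM200_VS_780TI = 1.50

r_390x_vs_290x = R_980_VS_290X * R_390X_VS_980   # point 2
perf_390x = PERF_980 * R_390X_VS_980             # on the 780 Ti = 100% scale
perf_gm200 = PERF_780TI * R_GM200_VS_780TI
gm200_lead = perf_gm200 / perf_390x - 1.0

print(f"390X vs 290X: {r_390x_vs_290x:.4f}x")
print(f"390X: {perf_390x:.2%}, GM200: {perf_gm200:.0%}, GM200 lead: {gm200_lead:.1%}")
```

The exact ratio 1.50/1.3125 is about 1.143, so GM200's lead works out to roughly 14%; using the rounded 131% gives about 14.5%, which the post rounds to 15%.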

I have heard the R9 370X, which is also the R9 M390X, will be the one competing with the GM204 SKUs (GTX 980/970/980M/970M).

There are two more SKUs above it: a big die and an even bigger die.

Right... having a bajillion different chips across several architectures and process nodes. That makes soooo much sense.

BTW you say SKU, but you don't really mean that?

And what happened with 20nm? Pretty much everyone now agrees AMD will be 28nm this round.

NostaSeronx

Diamond Member

- Sep 18, 2011


FDSOI and FinFETs are on time.

And what happened with 20nm?

Go for more efficiency on the current gen. Jump to either 20-nm FDSOI (14FD) (re-use of 20-nm LPM/SOC plus high performance) or 20-nm FinFET (14LPP) (drop everything for lower life expectancy plus low power). Reap the extra efficiency benefits from either jump.



AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.