I said that there are benchmarks where Cortex-A15 performs near the perf/MHz of Bobcat, to make a case for a 2.5GHz A15 being reasonable. Don't distort it into something else. I gave two benches; here are the links:

Bobcat 1.6GHz vs Exynos 5250 7-cpu:

http://www.7-cpu.com/

Bobcat 1.6GHz vs Exynos 5 Octa Geekbench:

http://browser.primatelabs.com/geekbench2/compare/1950660/1159110 (look at the single threaded tests only, ignore total scores)

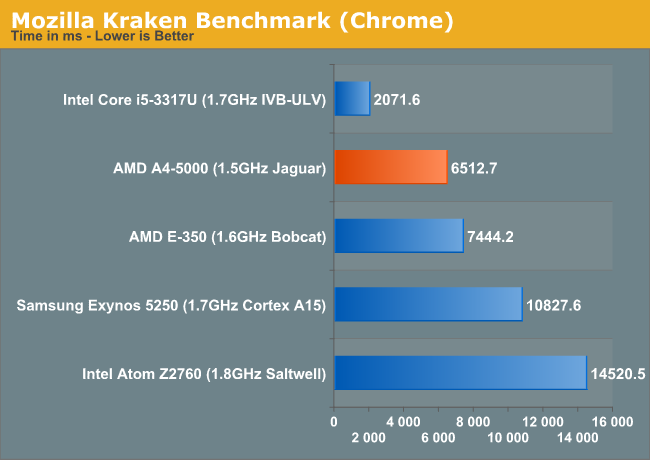

On 7-CPU, the A4-5000 wins by approx. 50-60% with 4 threads. That is at a lower clockspeed for the A4 as well. Extrapolate to 8 threads, easy conclusion: Jaguar is immensely faster.

On Geekbench: Bobcat wins in every single-threaded test at 1.6GHz. Jaguar adds between 10-20% IPC boost from Bobcat. Extrapolate to 8 threads, ARM again loses by a large margin.
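For anyone who wants to check the per-clock argument themselves, here is a rough sketch of the arithmetic: divide a single-threaded score by clock speed, then apply the 10-20% IPC range to Bobcat to get a Jaguar estimate at the same clock. The score in it is a made-up placeholder, not a real Geekbench or 7-CPU number, and the function names are mine; plug in the figures from the links above.

```python
# Sketch only: the score below is a hypothetical placeholder, not a benchmark result.

def per_clock(score, clock_mhz):
    """Normalize a benchmark score by clock speed (score per MHz)."""
    return score / clock_mhz


def with_ipc_uplift(score, uplift):
    """Project a score at the same clock after a fractional IPC gain."""
    return score * (1.0 + uplift)


if __name__ == "__main__":
    bobcat_mhz = 1600.0    # 1.6GHz, as stated in the post
    bobcat_score = 1000.0  # hypothetical single-threaded score

    print(f"Bobcat per-MHz: {per_clock(bobcat_score, bobcat_mhz):.3f}")
    for uplift in (0.10, 0.20):  # the 10-20% Jaguar IPC range from the post
        projected = with_ipc_uplift(bobcat_score, uplift)
        print(f"Jaguar at +{uplift:.0%} IPC: {projected:.0f} "
              f"({per_clock(projected, bobcat_mhz):.3f} per MHz)")
```

Note that this only covers single-threaded per-clock performance. Going from there to 8 threads assumes the workload scales roughly linearly with core count, which is an assumption and not something either benchmark link shows.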

It's 1.5W to 2W per core, that is what I said. That's clearly evident in AT's power analysis of Exynos 5250. Exynos 5 Octa would be lower. We don't have much idea what power consumption of a Cortex-A15 is like on TSMC's 28nm, HPM or HP.

We're not talking about desktops or servers. We're talking about consoles. Whatever desktop or server processors AMD made has nothing to do with a Jaguar vs Cortex-A15 comparison.

1.5 to 2W, essentially the same power consumption as Jaguar, for worse performance. No thanks.

Desktop, server, game console, fax machine, call it whatever you want, ARM has never made a processor which is at the performance level that an 8C Jaguar is expected to be at.

Because using a dynamic power optimized process means your idle power consumption gets a lot worse, making it a very bad choice for phones and tablets. So far A15 has only been released in a small handful of phones and tablets.

But we're talking about what options there'd be if someone tried to make a console SoC with this. Something we'll probably never have the answer to because a variety of other things precluded this from being the right console design for Sony or MS. Maybe someone else will make a device that targets performance instead of low clock characteristics.[/QUOTE]

So, what you're saying is that ARM could make a processor with worse power consumption, to compete against a processor that already has better power consumption. Lol, that's excellent logic. They're definitely going to make their situation BETTER with that.

Look at press releases that have announced 2.5-3GHz+ spins of Cortex-A9 on performance optimized processes with GF or TSMC. Do you think ARM jumped through hoops and over the moon to achieve that? For a press release? More likely it was the foundries pimping pretty normal existing designs. ARM says that it can run at 2.5GHz on 28nm, do you think they're making it up? They could very well already have a hard-macro that does this. They had one for Cortex-A9, it did 2GHz on 40nm back when everyone else was stuck at around 1.5GHz max. It wasn't used in an SoC because it wasn't a good fit for what people wanted the SoCs for, although NuFront was doing one that I don't think ever got released.

Once again. A lack of released SoC doesn't mean it's a huge engineering challenge to do it now. Maybe it is. But saying that they would necessarily have to go through that would be speculating on YOUR part.

Ok, fine, sure they can MAKE 2.5GHz ARM processors. Sure, they could make billions! Oh, wait, they consume more power, and still aren't very fast...

Even assuming ARM could easily make a 2.5GHz A15 core, the power consumption numbers make it look absolutely atrocious in comparison to Jaguar.

More for less.

That's what we should be discussing, but when people insist on making comparisons using power consumption numbers for Exynos 5250 that include the GPU running at full tilt... well, you figure it out.

The numbers don't look much better when you compare CPU+GPU for Kabini. A4-5000 draws 15W at full CPU and GPU load. A4-5000 performs over TWICE as fast as Exynos 5250 in GPU based benchmarks. Look at 3DMark: over double the CPU score, over triple the GPU score. That's better performance/watt for damn sure.
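To put a number on that, here is a quick sketch of the break-even math, using only the 15W figure and the "over double" / "over triple" ratios from this post. It works out how little power the slower chip would have to draw under the same load to match on performance/watt. The function names are just for illustration, and the ratios are treated as exactly 2x and 3x, which is a simplification.

```python
def break_even_watts(reference_watts, perf_ratio):
    """Power the slower chip must stay under to match the faster chip's perf/watt.

    perf_ratio is (faster chip score) / (slower chip score).
    """
    return reference_watts / perf_ratio


def relative_perf_per_watt(perf_ratio, reference_watts, other_watts):
    """Perf/watt of the faster chip divided by perf/watt of the slower one."""
    return perf_ratio * other_watts / reference_watts


if __name__ == "__main__":
    a4_watts = 15.0  # A4-5000 at full CPU and GPU load, as stated in the post
    for label, ratio in (("CPU score, 2x", 2.0), ("GPU score, 3x", 3.0)):
        limit = break_even_watts(a4_watts, ratio)
        print(f"{label}: slower chip must draw under {limit:.1f}W to match")
```

A relative_perf_per_watt result above 1.0 means the faster chip is also the more efficient one for whatever measured wattage you plug in for the other SoC.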