The biggest issue with those little fans when running distributed computing is that they will be running 24x7x365. My experience with those little fans is that they start making grinding noises within 3 months and fail completely within 6-9 months. I find that a passive cooling case is going to be the longer-lived solution, and worst case you can always point a 120mm fan at it.

The Flirc case temp depends on ambient, of course, but even in a room with a fairly high ambient temp (80°F) I've not seen a Pi 4 in one thermally throttle.

My temp testing with a Flirc case with a stock Pi 4 inside, in a 68°F basement:

w/ plastic top on: 58°C

w/ plastic top off: 55°C

w/ plastic top off + heatsink sitting on top of case: 51°C

w/ plastic top off + heatsink sitting on top of case + 60mm fan blowing on it: 35°C
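For anyone who wants to repeat this kind of test, here is a rough Python sketch of how the readings could be taken. It is not the script used for the numbers above. It assumes Raspberry Pi OS, where the SoC temperature is exposed in millidegrees Celsius at `/sys/class/thermal/thermal_zone0/temp`, and it decodes the bitmask printed by `vcgencmd get_throttled` so you can tell whether the Pi has throttled. The function names are made up for illustration.

```python
from __future__ import annotations

from pathlib import Path

# Usual sysfs location of the SoC temperature on Raspberry Pi OS (assumption).
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")

# Bit meanings of the value reported by `vcgencmd get_throttled`.
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def parse_millidegrees(raw: str) -> float:
    """Convert a sysfs reading such as '58000\\n' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def decode_throttled(output: str) -> list[str]:
    """Decode a line such as 'throttled=0x50005' into readable flags."""
    value = int(output.strip().split("=")[1], 16)
    return [name for bit, name in sorted(THROTTLE_FLAGS.items())
            if value & (1 << bit)]


def read_soc_temp_c(path: Path = THERMAL_ZONE) -> float:
    """Read the current SoC temperature in degrees Celsius."""
    return parse_millidegrees(path.read_text())


if __name__ == "__main__":
    if THERMAL_ZONE.exists():
        print(f"SoC temperature: {read_soc_temp_c():.1f} C")
    else:
        print("No thermal zone found; probably not running on a Pi.")
```

Run it in a loop (or from cron) while BOINC is crunching and the temperature should settle after several minutes. To check for throttling, pass the output of `vcgencmd get_throttled` to `decode_throttled`; an empty list means no flags are set.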

That one is one I hadn't seen before. Very interesting, if not terribly cost-effective for a whole cluster of BOINC boxes.

Another completely over the top option:

CooliPi 4B support site

www.coolipi.com

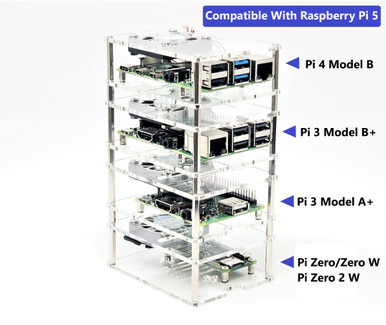

The multi-layer setups are probably the ideal option, but I'm still concerned about the longevity of the little fans with that setup. I'm leaning toward buying one of these to try out:

Amazon.com: GeeekPi New Raspberry Pi Cluster Case, Raspberry Pi Rack Case Stackable Case with Cooling Fan 120mm RGB LED 5V Fan for Raspberry Pi 4B/3B+/3B/2B/B+ and Jetson Nano (4-Layers): Computers & Accessories

www.amazon.com

Excellent article that I've seen before. It was part of my motivation to store all the BOINC project data on my NAS. I'll make sure to include this link in the first post.

Yep, Micro Center is definitely the best place to buy a one-off Pi, and I've gotten several that way over the last 4 months. Micro Center seems to discourage buying multiple at once, though (the website shows a price increase for buying more than 1).