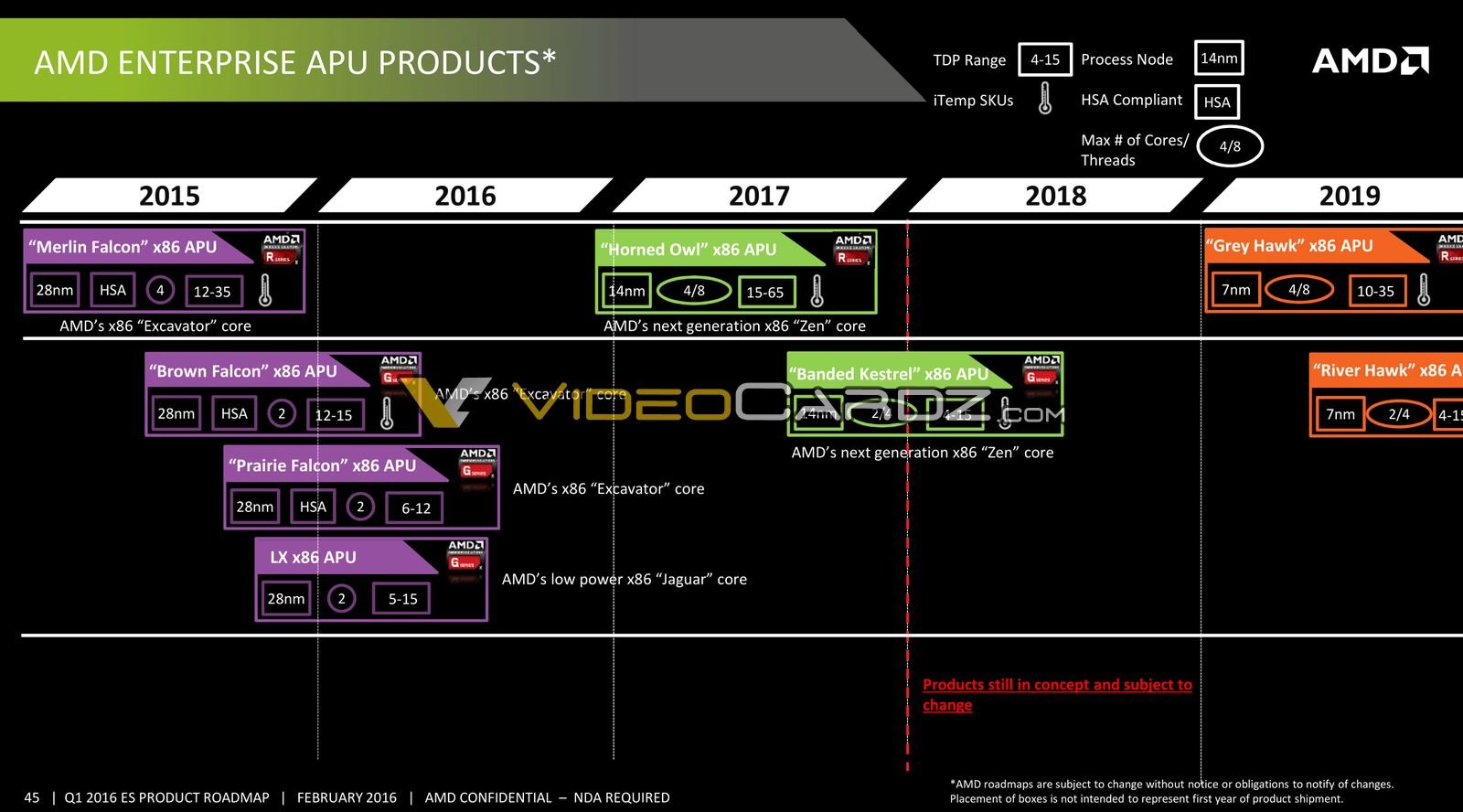

Just to clarify, Banded Kestrel was the embedded name for Stoney Ridge, right (XV + GCN3)?

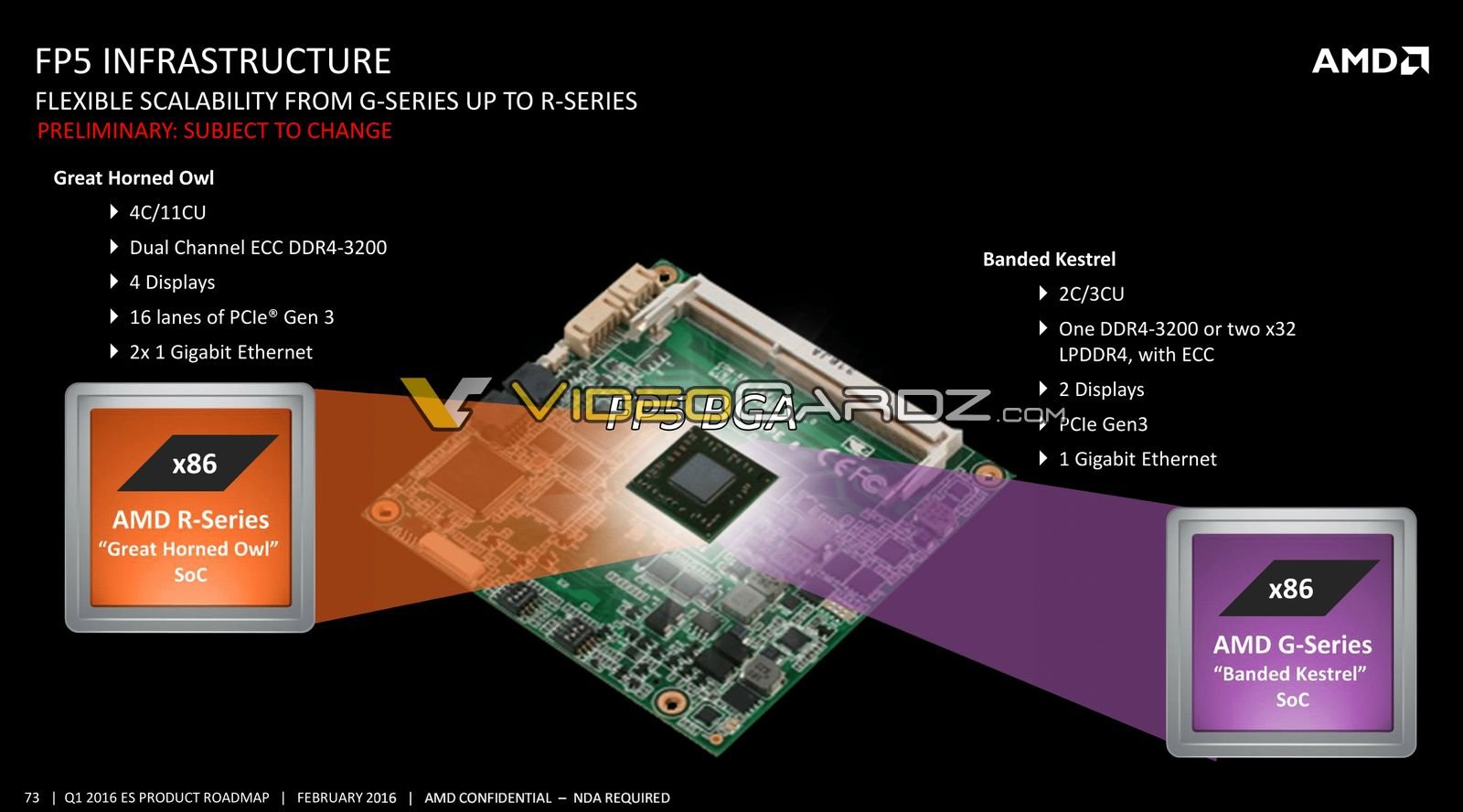

No, Banded Kestrel was an early internal name for the 2c4t counterpart to the 4c8t Raven Ridge (originally Great Horned Owl), so Zen 1 with Vega 10 plus VCN 1.0. It ended up launching very late for some reason (likely the Raven Ridge dies were cheap enough to be used for cut-down chips like the Athlon APUs), so the only product officially using it right now is the R1000 embedded series.

Huh, I thought Intel used to use 6T cells (back, oh, 20+ years ago). Are 8T cells more stable at higher frequencies (or lower drive currents)?

That would be too much I think; was doing a ballpark area guess late at night.

So 9*4*1M= 36M

Did you mean to say L2? Because that's 4M bits, or 512 kilobytes. Your original post said L3.

No, I mean L3. 300M estimate sounds large.

But I'll look closer at the die shots of the CCX again and get back later tonight. It might also be the case that the density of transistors is far from uniform and doesn't relate well to area.

300M is not an estimate, it's a hard lower bound. They can't fit bits into wishes, they need 8 transistors per cell. (8 bits per byte) * 9 transistors per bit * 4 million ~= 300M. On top of that they need more transistors for redundancy, tags, comparators, etc.
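As a rough sanity check of the arithmetic in this exchange, here is a small Python sketch. The function name and the binary-size assumption (1 MiB = 2**20 bytes) are illustrative and not from the posts. The post above mentions both "8 transistors per cell" and "9 transistors per bit", so both readings are computed; tags, redundancy and comparators are ignored, which makes either figure a lower bound.

```python
# Back-of-envelope transistor count for a cache data array.
# Assumptions (not from the thread): binary sizes, data array only.

MIB = 2**20  # bytes in one MiB (assumed binary sizing)


def data_array_transistors(size_bytes, bits_per_byte, transistors_per_bit):
    """Transistors needed just to store the data bits."""
    return size_bytes * bits_per_byte * transistors_per_bit


l3_bytes = 4 * MIB

# Figures exactly as written in the post: 8 bits/byte * 9 transistors/bit.
as_posted = data_array_transistors(l3_bytes, 8, 9)

# Alternative reading: plain 8T cells, 8 bits/byte.
plain_8t = data_array_transistors(l3_bytes, 8, 8)

# The earlier "9*4*1M = 36M" figure, read as 9 transistors per bit,
# only covers 4M bits, i.e. 512 KiB.
bits_for_36m = (36 * MIB) // 9
kib_for_36m = bits_for_36m // 8 // 1024

print(f"as posted: {as_posted / 1e6:.0f}M transistors")
print(f"plain 8T:  {plain_8t / 1e6:.0f}M transistors")
print(f"36M transistors cover {kib_for_36m} KiB")
```

Either way, the data array for 4 MiB alone lands in the high hundreds of millions of transistors, roughly an order of magnitude above the 36M figure.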

That is definitely true. SRAM is generally the densest kind of structure, and cache is packed to be as dense as possible because signal delay is a substantial part of latency, so density is not just cost, it's also speed.

Each core is capable of four-way simultaneous multithreading (SMT). The POWER7 has approximately 1.2 billion transistors and is 567 mm² large, fabricated on a 45 nm process. A notable difference from POWER6 is that the POWER7 executes instructions out-of-order instead of in-order.

WTH, who is talking about copying Power or Intel? Are you even following this discussion?

And I don't think you correctly interpret the market if you really think people are happy to suddenly decrease the number of cores again just because SMT2 were to be replaced by SMT4.

You are just repeating me.

There is zero indication AMD would ever sell a 4c/16t CPU as their new 8c/16t chips.

"Nobody did it that way before so it won't happen."

Let's agree to disagree.

Possibly, but there is still Dali which was meant to be the value APU option, which would imply monolithic/small to me.

I think Renoir will be chiplet still, it's more a question of how that will be configured.

Of course they could both be monolithic but that seems doubtful to me.

They used to, but everyone's moved back to 8T cells with smaller processes. I don't know the precise reason why. L3 moved to 8T cells last, the inner caches switched first, so that might suggest speed as the reason?

@DrMrLordX

Not interested in continuing the previous discussion in general, just this one point:

You appear to have a fixation on SMT (and extending it from 2 to 4 threads) costing a lot of transistors and as such being expensive (to the point of the number of cores needing to be reduced as a result, which sounds insane to me). Where did you get that idea?

You won't be wrong if it does pop up down the line... I can't help thinking of Soft Machines and their tech. Some of the concepts seem quite promising to both increase single thread performance and reduce power/computation. AMD was one of the main investors until Intel bought the company and it went dark.

Zen 3 or Zen 4?

https://www.anandtech.com/print/10025/examining-soft-machines-architecture-visc-ipc

Nice discussion here guys.

Let's look at SMT4 from another point of view.

Here are the known facts:

- ILP is a real limitation. A CPU can process on average 2 instructions/cycle for most code.

- But an average IPC number is too simplified. For more detail, look at the Gaussian distribution curve of IPC. For example, it might show that 2 IPC can be found in 60% of code, 3 IPC in 35%, 4 IPC in 20%, 6 IPC in 10%, 8 IPC in 5% of code. Every algorithm has a slightly different distribution, but let's assume an average distribution across typical code.

- Fact is that 2 ALUs per thread is kind of a sweet spot in terms of utilization. This is the idea behind the Bulldozer uarch and why they made this "smart" move to a 2xALU design from the 3xALU K10.

- Fact is that Intel kept the same utilization of 2 ALUs/thread via a different strategy: by adopting SMT2 for 4 ALUs.

- Fact is that 4 ALUs + SMT2 is much more powerful in single thread, because it covers a larger share of the cases in that distribution. The Bulldozer designers ignored this important factor and it was a bad mistake, turning a smart move into a "smart" move.

Now if you are an AMD CPU architect you can simply do the same successful trick:

- A) Move from 2ALU+2ALU Bulldozer cores to 4ALU + SMT2, creating the Zen core. Same sweet spot of 2 ALUs/thread, same number of ALUs, but much more single thread power and better utilization. It was a fusion/merge of 2 cores into one wider core while taking ILP into account.

- B) Do the core fusion again: merge 2 Zen cores into one wider Zen3 core (from 4ALU + 4ALU with SMT2 to 8ALU + SMT4). This would gain more single core performance from the distribution of code ILP (much more in future with compiler optimization) and even more efficiency than SMT2.

- C) 6ALU + SMT4 also makes sense, since we know the A12 Vortex core already has 6 ALUs. It's the more conservative approach. Keep in mind that Jim Keller started the Zen design at approximately the same time as Apple started their A11. Engineers talk to each other, especially when Jim is an ex-Apple engineer.

I would bet on option C) because it is easier to develop. However both B and C are feasible.
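To make the "2 ALUs per thread" ratio from the list above concrete, here is a minimal Python sketch. The dictionary labels and the helper name are illustrative assumptions; the ALU and thread counts are the ones given in the post.

```python
# ALUs available per hardware thread for the configurations named above.
# (alus, threads) pairs are taken from the post; labels are illustrative.
configs = {
    "Bulldozer core (2 ALU, 1 thread)": (2, 1),
    "Intel 4 ALU + SMT2": (4, 2),
    "Zen 4 ALU + SMT2 (option A)": (4, 2),
    "8 ALU + SMT4 (option B)": (8, 4),
    "6 ALU + SMT4 (option C)": (6, 4),
}


def alus_per_thread(alus, threads):
    """Integer ALUs per hardware thread when all threads are active."""
    return alus / threads


ratios = {name: alus_per_thread(*cfg) for name, cfg in configs.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f} ALUs/thread")
```

Note that under these numbers option C works out to 1.5 ALUs per thread with all four threads active, slightly below the 2 ALUs/thread sweet spot that options A and B keep.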

VISC is very interesting. But. These ideas are already built as OoO+SMT2 in modern RISC x86 CPUs. No need to double it in a more complicated way as VISC - that's why it was never adopted or licensed IMHO. We are just stuck at SMT2 now. Hopefully not for long.

That is really insightful.

My thinking is why not shoot for the very lowest hanging fruit of between 1 and 2 IPC (closer towards 1) with a pair of light duty non-speculative threads. 6 ALU with current Zen2's 3 AGU seems a good fit with that, and then do SMT4 for Zen4, either with the same pipes or going even wider to 8 (option B).

It's possible, I agree. But. Partly because AMD is not developing its own compiler - Intel does optimization for its own 4ALU+SMT2 design. AMD wasn't able to push optimizations for their Bulldozer arch with more cores (it's more a programmer issue than a compiler one) and the same will happen when they aim for 8ALU+SMT2. Even 6ALU+SMT2 is very thin ice for them, as most code is Intel friendly. SMT4 is not so complicated to implement and eliminates the risks of wide cores (by keeping the ratio at 2 ALUs/thread). And it can be disabled in BIOS or controlled by the OS. 90% of the work is developing the very complex wide core. SMT4 will increase demand for front-end throughput. It's just much easier to develop such a complex core from scratch. So Zen3 is the first good chance to see such a wide SMT4 core - just because of the quite good combination of Jim Keller and development from scratch since 2012. Maybe it was canceled together with the wide server ARM core. Who knows.

Always be careful with that: "No need to double it in more complicated way as VISC - that's why it was never adopted or licensed IMHO."

1. Sure, SMT4 would be complicated. Adding more hardware threads is just like widening the core - it's a case of diminishing returns. Adding more SMT threads without making architectural improvements and allocating more resources (e.g., more decode, registers and cache) will result in a significant fall-off in SMT yields at higher thread counts. (I can't find the damn graph from the '90s, but in simulations on early Alpha CPU architectures (21064 or 21164), performance scaling was hyperbolic, with the optimal xtor cost per thread being reached at 4 threads (SMT4).) AMD certainly can use Dr. Joel Emer's approach and keep resource use to a minimum, as a reasonable trade-off of using few resources (xtors) to keep die size, and hence cost, down.

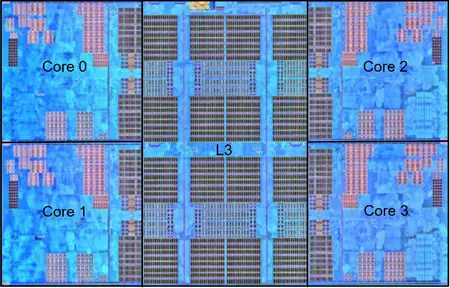

In order to keep performance up while adding more threading, proportionally more redundant resources will need to be added than were needed for SMT2. This will need to be done while maintaining high clocks and staying within the target design's power budget. Verification (simulation) and validation (silicon) will increase design time just like widening the core. Actually, more so, because the added registers, circuit blocks, widened resources, etc. span the whole CPU in order to maintain good performance across more threads. See the figure below from the AnandTech Zen 1 deep dive.

2. I would be shocked to see SMT4 in Zen3. I really expect more cores to be the most likely outcome (96?). I just don't see the need for more hardware threading at this point in Zen's development. POWER and SPARC went with more hardware SMT to keep die size and power in check (relative to prior designs), rather than scaling core counts. With AMD's chiplet strategy, that need simply doesn't exist yet.

View attachment 10178

I think you are too pessimistic. There are two separate things: SMT vs. wide core.

- You can implement 4-way SMT for the Zen2 core, sharing its 4 ALUs and 2 FPUs. Intel stated that HT cost a 5% transistor increase. It's not so difficult to implement... however you will get very little gain (better utilization by 2-5%?) and performance per thread will drop to half (well, it can be switched back to SMT2 or switched off). The overall gain is very small compared to the effort.

- To make SMT4 reasonable you need a wider core. It depends on what AMD is aiming for. AMD would need to at least double the FPU, from 2xFPU up to 4xFPU. This is the minimal configuration for SMT4 IMHO.

- When AMD is going to touch all parts of the CPU for the SMT4 implementation anyway, it is more effective to redesign it completely to increase throughput everywhere (front-end, back-end) and create a new arch for the next decade. AMD has had enough time to do it since Keller started in 2012. IMHO Zen1+2 was just fast food to fill the time gap before the big dinner (a new architecture from scratch for the next decade?): Zen takes its shared FPU, front-end and AGUs from Bulldozer, and only the ALU back-end is completely new, moving from 2+2ALU to 4ALU+SMT2. They needed something fast to avoid bankruptcy. I can see there was a time opportunity and real reasons to plan it this way. Maybe I'm too optimistic.

"There's no reason to go wider SMT unless you want to game per-core licensing like you're IBM. Just throw more cores. Oh, and every core xtor ever should go towards more ST, period."

Throwing more cores is inefficient. Imagine a situation where one front-end decoder is idle because its core is running from the uop cache, while a second one is 100% utilized (and is the bottleneck). With a separate front-end for each core, you cannot use the free resources of the other core. You can save a lot of transistors and increase performance via a shared front-end like Bulldozer did.

Huh? Yeah, cloud providers will just love this - not. Running massive numbers of VM instances is a clear target for EPYC systems and more cores is the way to go.

Oh it is very, very efficient, particularly in the current scale-out world.

"Minimal Zen3 config: Zen2 + Doubled Zen2 FPU to AVX-512 + SMT4 (+20% transistors -> +20% performance)"

Oh no, it's not this, and definitely not what your fantasies about it are.

That would make Zen3 more like Zen2+, which AMD has said is not happening.

Personally I think an increase in the number of cores won't happen with Zen 3, simply because that step will lack the density increase (TSMC's 7nm+ won't offer much there; the next big increase comes with 5nm, which Zen 4 will likely use), lack the memory bandwidth (Zen 3 will still use DDR4, Zen 4 will use DDR5, requiring a new platform), and the current topology makes it tricky to add more cores without adding bottlenecks (currently, with 64 cores, each 8-core CCD is linked to one RAM channel, and each pair of CCDs to one dual-channel IMC on the IOD).

I believe Zen 3 will focus on internal changes in core and uncore that will then allow Zen 4 to massively scale out with a different package topology, similar to how it happened with Zen to Zen 2.
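The bandwidth argument above can be put into numbers with a small Python sketch. The function and its parameter names are hypothetical. The 64-core, 8-cores-per-CCD, one-channel-per-CCD layout is the one described in the post, and the 96-core case is only the count floated earlier in the thread, assumed here to sit on the same eight DDR4 channels.

```python
# Cores sharing each memory channel under the topology described above.
# Assumption: the platform keeps 8 memory channels, two per IMC.

def topology(total_cores, cores_per_ccd=8, memory_channels=8):
    """Return CCD count, IMC count and cores per memory channel."""
    return {
        "ccds": total_cores // cores_per_ccd,
        "imcs": memory_channels // 2,  # one dual-channel IMC per channel pair
        "cores_per_channel": total_cores / memory_channels,
    }


current = topology(64)       # layout as described in the post
hypothetical = topology(96)  # more cores on the same DDR4 platform

print(current)
print(hypothetical)
```

Under these assumptions, going from 64 to 96 cores on the same platform raises the load from 8 to 12 cores per memory channel, which is the kind of bottleneck the post is pointing at.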