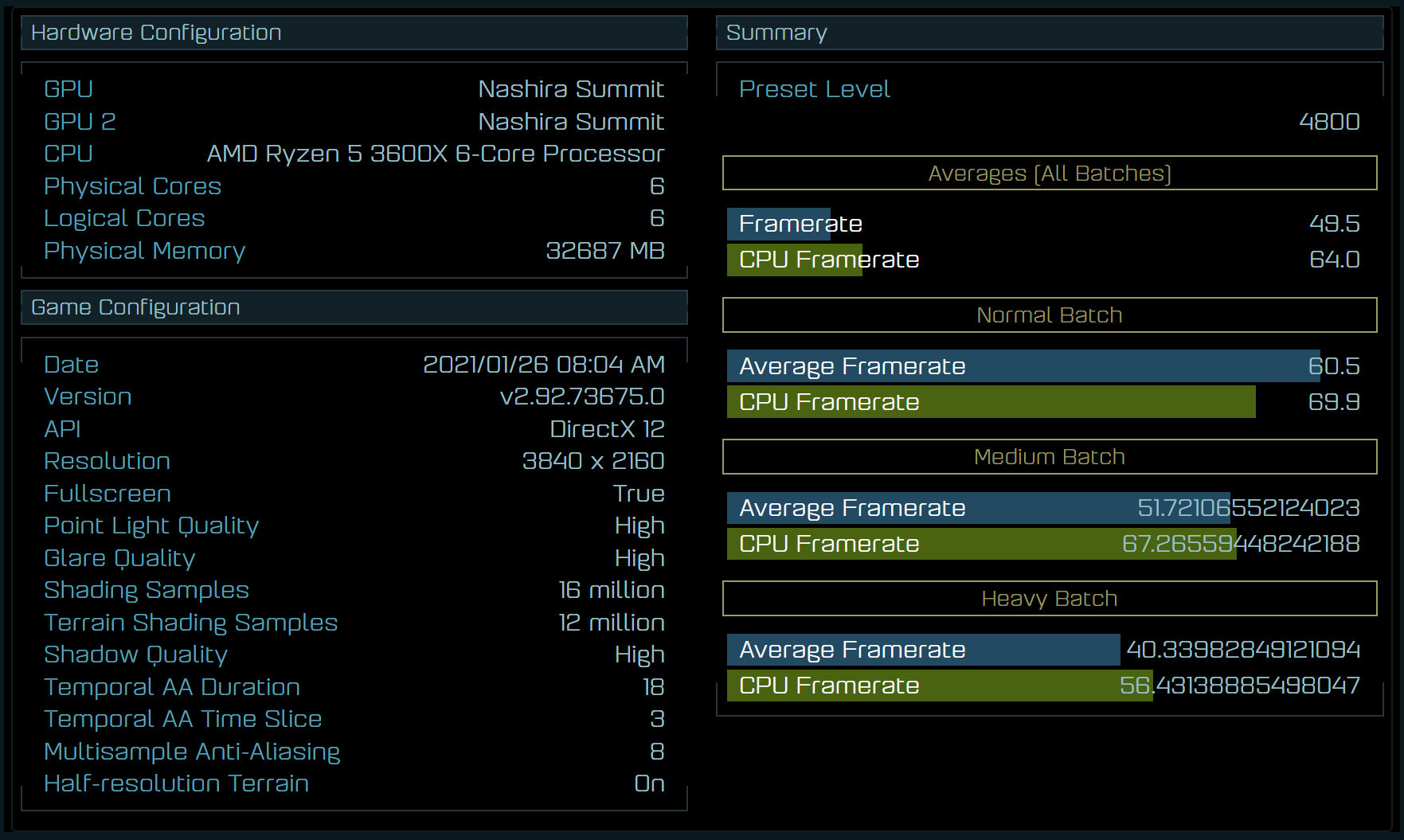

AMD did share hit-rate figures for Infinity Cache at various resolutions, so we can at least use what they showed us as the basis for an argument, even if some cases will fall outside the typical results.

I added a few horizontal lines to make the resolutions a little easier to compare. The bottom line marks the hit rate where the 4K curve crosses 128 MB of Infinity Cache. The top line marks the hit rate where the 1440p curve crosses 96 MB of Infinity Cache.

96 MB of Infinity Cache has a better hit rate at 1440p than 128 MB does at 4K, so even with 40 CUs, the Navi 22 cards should perform reasonably well at 1440p. TPU puts the 5700 XT at 87 FPS on average across 22 games in their 1440p tests, so that's a good baseline for where the 6700 XT should land. Even without Infinity Cache, just using the faster GDDR6 memory that Navi 21 uses would by itself give Navi 22 about 85% of Navi 10's bandwidth, despite only having 75% of the bus width. The 64 MB of Infinity Cache in Navi 23 should have a better hit rate than the bigger cards do at their respective resolutions, and the graph shows reasonable growth up to about 64 MB, which is where the curves start to taper off.
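For anyone who wants to check the 85% figure, here's a quick sketch of the arithmetic. It assumes 14 Gbps GDDR6 on the 5700 XT and 16 Gbps on Navi 21 cards (those data rates aren't from AMD's slide), and it only computes theoretical peak bandwidth, not what games actually see.

```python
# Theoretical peak GDDR6 bandwidth in GB/s:
#   (bus width in bits / 8 bits per byte) * per-pin data rate in Gbps.
# Assumed data rates: 14 Gbps for the 5700 XT (Navi 10), 16 Gbps as on Navi 21 cards.

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits / 8 * data_rate_gbps

navi10 = peak_bandwidth_gbs(256, 14.0)  # 256-bit bus at 14 Gbps
navi22 = peak_bandwidth_gbs(192, 16.0)  # 192-bit bus, assuming Navi 21's faster memory

ratio = navi22 / navi10
print(f"Navi 10: {navi10:.0f} GB/s, Navi 22: {navi22:.0f} GB/s, ratio: {ratio:.1%}")
# Navi 10: 448 GB/s, Navi 22: 384 GB/s, ratio: 85.7%
```

The bus width shrinks to 75% of Navi 10's, while the faster memory claws back roughly 14%, which is how you end up at about 86% of the bandwidth.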

I don't know that this necessarily makes the setup they've used overkill for Navi 22, though. Navi 10 had a 256-bit memory bus, while Navi 22's is only 192 bits wide, so AMD clearly needs enough Infinity Cache to compensate for the difference. To make up for it through memory clock speed alone, they'd need VRAM clocked 33% faster than what the 5700 XT uses. Navi 21 does use faster memory, but it's only about 14% faster (16 Gbps versus 14 Gbps), which isn't enough to close that gap on its own. AMD could also be stuck using the older, slower VRAM that Navi 10 used due to supply constraints, but either way they need something to pick up some of the slack.
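Here's a rough sketch of how big that gap is. It assumes 14 Gbps memory on the 5700 XT and 16 Gbps on Navi 21, and it uses a deliberately simple model where every cache hit is one DRAM access avoided. It ignores any difference in how much bandwidth the two GPUs actually demand, so treat the hit-rate number as a loose floor, not a prediction.

```python
# How fast would Navi 22's memory need to be to match Navi 10's bandwidth on a
# 192-bit bus, and how much traffic would a cache need to absorb instead?
# Assumptions: 14 Gbps on the 5700 XT, 16 Gbps on Navi 21, and a simple model
# where each cache hit removes one DRAM access.

NAVI10_BUS, NAVI22_BUS = 256, 192      # bus widths in bits
NAVI10_RATE, NAVI21_RATE = 14.0, 16.0  # per-pin data rates in Gbps

required_rate = NAVI10_RATE * NAVI10_BUS / NAVI22_BUS  # data rate needed on 192 bits
required_speedup = required_rate / NAVI10_RATE - 1     # speedup over the 5700 XT's VRAM
available_speedup = NAVI21_RATE / NAVI10_RATE - 1      # speedup Navi 21's VRAM offers

# With Navi 21's memory, DRAM alone supplies this fraction of Navi 10's bandwidth:
dram_fraction = (NAVI22_BUS * NAVI21_RATE) / (NAVI10_BUS * NAVI10_RATE)
# If a fraction h of requests hit the cache, DRAM only serves (1 - h) of the
# traffic, so matching Navi 10 requires (1 - h) <= dram_fraction.
min_hit_rate = 1 - dram_fraction

print(f"required: {required_rate:.2f} Gbps (+{required_speedup:.0%}), "
      f"available: +{available_speedup:.0%}, minimum hit rate: {min_hit_rate:.0%}")
# required: 18.67 Gbps (+33%), available: +14%, minimum hit rate: 14%
```

Even a modest hit rate covers the shortfall in this toy model; the hit rates on AMD's graph are far above that, which is why the cache can do much more than just break even.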

The additional memory capacity is likely a result of the new consoles moving to 16 GB of shared memory. They obviously split that between the CPU and GPU, but 10 to 12 GB of VRAM is going to become the new norm over time. If someone buys one of these cards intending to hold on to it for five years, I suspect that's when we'll see a lot of titles where 8 GB isn't enough, particularly at resolutions above 1080p. If you think of Navi 22 as a 1080p card, then yes, the extra 32 MB of Infinity Cache doesn't get you much over what you'd get with only 64 MB. But these cards are going to be positioned for 1440p, and if their clock speeds turn out as good as Navi 21's, I think they could also serve as entry-level 4K cards, much like the 3060 Ti can manage an acceptable average frame rate in many titles at that resolution.