TL;DR: a 10% performance hit in single-threaded performance under Windows 8.1 on a Magny-Cours 2-module CPU is found when three factors are allowed to coincide: thread migration across modules is allowed, the OS timer is at a high resolution, and core parking is allowed.

I joined just to share a test result of mine, which might interest some in this discussion. I ran tests on a virtual Windows 8.1 instance with 8 virtual processors, all pinned 1-1 to the 8 cores on a single Opteron 6140 (Magny-cours) die. The reason for the test, and why I bring it up in this thread, is that Magny-cours has a couple of key similarities with Ryzen when it comes to CPU topology:

- Each Magny-cours die consists of two ”modules” which share 4-6 (4 on the 6140) cores and an L3 cache

- L1+2 are private per core, L3 is shared per module and is a victim-cache of L2

Thus, the invisible (to Windows) module boundary that exists between the CCX of a Ryzen chip is also present in Magny-Cours, and the cache works similarly. I reasoned that if the performance differences we see for some loads between 2CCX vs 1CCX on Ryzen are really related to issues with inter-CCX communication or the fact that the L3 is a victim cache, the same phenomenon should be replicable on Magny-Cours. If, on the other hand, performance issues has to do with some other idiosyncracy with Ryzen which we have not yet quantified, Magny-Cours should not be affected. Additionally, I considered the following factors:

- OS timer setting – AMD has mentioned that Win10’s habit of setting a high (~1ms) OS timer freq for games might hamper performance. As Win7 typically runs everything on the default timer setting (15.625ms), this could explain the small but stable performance delta for some loads between Win7 and Win10, which remains regardless of SMT.

- Power plan – thanks to Kromaatikse’s investigations, we know that scheduling issues on Ryzen interacts with or are driven by power settings, presumably core parking.

- Topology visible to OS – thanks to KVM/libvirt, I can present the Magny-cours die either as one processor with 8 cores, or as two processors on two sockets with 4 cores each, the latter implicitly telling Windows which cores are sharing L3 and go together.

In order to sort out how these factors together impact performance Magny-cours, I combined them in a factorial experiment with 8 conditions, described below, with performance in the syntetic Wprime benchmark as the dependent measure.

Test bed:

- Supermicro H8DG6, 2x Opteron 6140 (8x2.6GHz per CPU), 32Gb ram

- Ubuntu 16.10 4.4.0-64 generic (VMhost), Windows 8.1 (VM guest)

- ”Node interleaving” enabled in BIOS settings (NUMA topology flattened out and hidden from OS, in order to mimick Ryzen as closely as possible).

- All 8 cores on the second CPU MCM are dedicated to Windows (1-1 pinning, removed from linux scheduler with isolcpus)

Windows 8.1 sees: 8x Magny-Cours cores (host-passthrough), topology according to the two conditions described above, 14Gb ram

Experiment 1.

Factorial structure:

OS timer {15.625ms | .05ms} * Power setting {Balanced | High performance} * libvirt CPU topology {sockets=1,cores=8,threads=1 | sockets=2,cores=4,threads=1}, yielding 8 conditions

Dependent variable: Wprime single-threaded 32M test running time (shorter is better)

OS timer is set with the program TimerTool, power plan is set and monitored with ParkControl, and CPU topology is set by the libvirt <topology=...> XML tag in the VM config file.

Here are the results. I ran three tests in each condition in order to quantify variability, the median of each condition is

highlighted in the table given by the middle rows. The % column is the penalty of running the OS timer at 0.5ms rather than 15.265ms.

Code:

sockets=2,cores=4,threads=1 sockets=1,cores=8,threads=1

(aware of modularity) (unaware of modularity)

15.625ms 0.5ms %penalty 15.625ms 0.5ms %penalty

hi perf 66.36 66.38 0% 64.35 64.9 1%

66.84 66.67 0% 64.74 65.35 1%

67.4 66.9 -1% 65.57 65.89 1%

balanced 68.83 69.01 0% 66.08 73.58 11%

69 69.03 0% 66.11 73.81 12%

69.87 69.45 -1% 66.13 74.59 13%

(sorry for the hacky display, I tried to do bbcode tables but they seem unsupported).

We see that only when all factors combine together in a specific way a substantial performance regression due to the OS timer (~12%) can be seen. Interestingly, making Windows aware of the dual-module structure of the physical processor die removes the regression, perhaps through scheduling differences. Perhaps less surprisingly, the regression disappears almost entirely in high performance power mode (regardless of CPU topology). This suggests that it is the unparking of cores that is responsible for the regression, rather than overhead due to starting over with a cold cache on a new 4core-module (at least for this load).

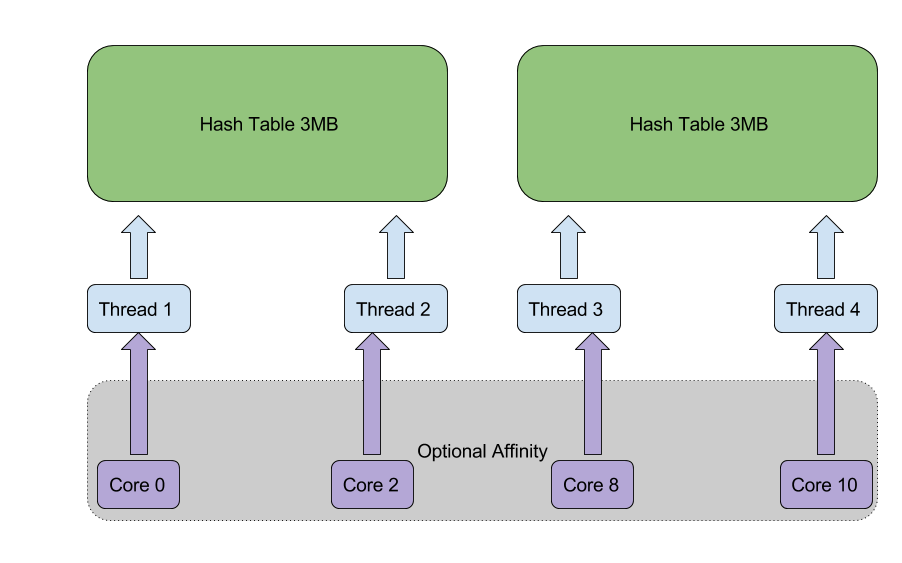

Then I ran a second experiment just to verify that the beneficial effect of making windows aware of the topology actually reflects more optimised scheduling, rather than some other aspect which we have not measured. This was achieved through letting Windows see only one socket with 8 cores, but setting CPU affinity of the worker thread manually to a single CPU core (setting affinity to all 4 cores of one module would have been a cleaner condition, but I realised that half-way through testing).

Experiment 2.

Factorial structure:

OS-timer {15.625ms | 0.5ms} x Thread Affinity {TA set | TA not set}

Factors from exp1 held constant: topology=1socket,8cores,1threads, powerplan=”Balanced”

Dependent variable: Wprime single-threaded 1024M test running time (shorter is better)

Code:

sockets=1,cores=8,threads=1

(unaware of modularity)

15.625ms 0.5ms %penalty

balanced, TA not set 2168.88 2394.9 10%

balanced, TA set 2061.27 2068.93 0%

I chose the longer Wprime test in order to increase the SNR, as I set affinity manually in each run (within the first 15s of the total 33-35 minute running time). As we see, setting the thread affinity of the process also removes the regression incurred by a higher OS timer resolution. So the effect of topology indeed seems to reflect whether threads are scheduled across modules or not. This is actually quite reasonable, as multi-socket multi-core systems where around before the introduction of NUMA, and Windows versions since NT should know what to do with such a system. CPU cores sharing a socket on a non-NUMA system would typically share a private LLC without sharing a private memory controller, why a dual-socket spoof would be better than a NUMA-spoof in order to make Windows aware of Ryzen/Magny-cours modularity. It remains to show, however, whether Windows’ default behaviour in such a case is optimal for Ryzen.

The OS timer seems the most important factor in this test. This could explain the win7-win10 performance deltas seen in some games, as win10 reportedly increases the timer resolution in games, and also shows lower game performance (also after SMT has been turned off) – this correlational hypothesis remains to be tested on Ryzen, suggestedly by overriding the OS timer setting. The timer aspect might amplify several other factors having to do with power state changes and maladaptive scheduling decisions – at higher timer resolution, whatever mischief Windows is doing it will do more often. Indeed, higher OS timer resolution should always lead to some more overhead (we see that on Linux too), but 10-12% is clearly over the top.

The evidence that the power plan is crucial for whether the performance regression appears is consistent with Kromaatsike’s theory on core parking adversely interacting with thread scheduling. This is also consistent with AMD’s current focus on power management settings rather than the Windows scheduler. This part is probably not surprising for readers of this thread, though I am still at loss on what exactly is happening with power management here. It should be emphasised, however, that the problem only appears if the scheduler is allowed to schedule across the Magny-Cours module boundary, so the modularity of the processor die does matter (so it should for Ryzen).

The bottom line

Why I think this experiment is interesting is that the hypotheses I test were all generated from looking at evidence and theory regarding Ryzen’s performance, and the hypothesis that these three aspects may interact was originally formulated for Ryzen. The evidence that Magny-Cours behaves as I expect Ryzen to behave indicates that we are indeed on the right track regarding suspecting the dual-CCX design and the victim design of the L3, rather than any other so far unmeasured idiosyncracy of Ryzen.

It would thus be interesting to see all or parts of this experiment replicated on Ryzen under Windows 8 or 10. Allegedly, there are relevant differences between Ryzen and Magny-Cours, for example the communication protocol between modules (direct HyperT links vs IF bus) and the presence of an L3 snoop-filter in Magny-Cours. I expect these differences to load on the magnitude of the effects, rather than on their presence. A final replication which I would like to see is on a dual-socket multi-core Intel system with ”node interleaving” enabled, in which a Windows VM gets a set of cores originating from two different physical processors. This would tell whether the phenomenon discussed here is ultimately an AMD issue or a basic architectural and/or Windows issue.