Ryzen: Strictly technical

Page 40


- Status

- Not open for further replies.

lolfail9001

Golden Member

- Sep 9, 2016


So, basically, it still looks SMT aware to me; disabling core parking just significantly increases the number of jumps between cores.

Full core parking, using my tweaks:

https://www.dropbox.com/s/hle0l0reegxoumr/FullParking.png

Core parking a la Win7, with all physical cores unparked:

https://www.dropbox.com/s/v9q6w9bfeknu89v/CoreOverride.png

Core parking disabled:

https://www.dropbox.com/s/gmp2x7dd45ug3e3/NoParking.png

This is on Steamroller - Windows treats CMT the same way as SMT/HT.

Anyway, re: how to multithread games

I'm pretty sure the optimal approach, since there clearly seem to be DX12 and Vulkan issues tied to their reliance on the shared cache and interconnect, is to run those on the other CCX and "only" use its 8 threads for the actual graphics API calls. The graphics driver should also run on just one CCX, and somehow on that same one, even though it is a separate application.

This might seem suboptimal, but the remaining CCX wouldn't sit there doing nothing. Your main loop, I/O handlers, sound, and so on still run on it, and it can be preparing the next draw calls to be sent to the graphics CCX.

That, then, would be your only cross-CCX traffic: going from your draw-call preparation to the actual draw calls. That should be very little, since you would run your physics and transforms on the "multithread" CCX.

But even then... I'm not totally sure.

It seems you want your heavy single-threaded tasks on one CCX without using SMT, so you don't want to load anything more onto it.

Then on the other CCX you want to run the tasks that are cheap to parallelize but suffer from cross-CCX issues: physics, object transforms, DX12/Vulkan... The only single-threaded work there would be the main graphics API thread, your render loop, and, if needed, the main dispatchers for those heavily parallel tasks.

But... it's what happens on your main threads that triggers your object transforms.

So on top of that, you need a very tightly optimized way for your inputs and game systems on one side to request the transformations that happen on the render side.

In my mind, that's probably the roughly optimal setup.

As far as I'm aware, this is similar to how many games are set up: 3-8 main threads, which can spawn one, two, or three dozen more for their highly parallel tasks, and those get stacked on top of the heavier single-threaded tasks.

I think what a lot of people miss comes from the traditional, intuitive view of how multithreading works: you have, say, a main thread, a render thread, sound, and I/O, and people think these are separate systems you can split up easily.

But... that's not really the case. There's a performance penalty to splitting those up. Clock for clock (or, maybe more aptly, watt for watt), things run worse when split up like this; they tend to rely on L3 to make it work a bit better, but it's still not quite as good.

On top of that, people tend to think physics is another thing that can be put on one thread.

But no: physics is often something that runs more efficiently when split across many threads and using SMT. Compare Cinebench, where the 1800X gets a single-threaded score of 162 but a multi-core score of 1624. That is 10 times higher, not the 8 times a layman might expect from 8 cores.
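The Cinebench ratio in the post can be reproduced with two lines of arithmetic. This is a minimal sketch using only the two quoted scores; reading the excess over 8× as an SMT gain assumes ideal per-core scaling and ignores the difference between single-core and all-core boost clocks.

```c
/* Multi-threaded score divided by single-threaded score (1624 / 162 in the post). */
double mt_scaling(double st_score, double mt_score) {
    return mt_score / st_score;
}

/* Scaling per physical core. A value above 1.0 means more throughput than one
   single-threaded score per core, which is the headroom SMT has to account for
   under the ideal-scaling assumption stated above. */
double per_core_uplift(double st_score, double mt_score, int cores) {
    return mt_scaling(st_score, mt_score) / (double)cores;
}
```

With the post's figures, mt_scaling(162, 1624) is about 10.02 and per_core_uplift(162, 1624, 8) is about 1.25, i.e. roughly 25% beyond one thread per core.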

So this is why I think the optimal layout is to keep your main thread, and the other things that need to stay "close" to it, on one CCX, and put the things that benefit from parallelism on the other.

Oh, and one more thing: you can't benefit from SMT as much if something uneven is blocking it, but on the other CCX, with almost none of that going on, you're free to write everything in a way that benefits from SMT.
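One way to express the "main thread on one CCX, parallel work on the other" layout is as per-CCX affinity masks. This is an illustrative sketch: the helper `ccx_mask` is hypothetical, and it assumes a Ryzen 7 style part (two CCXs of four SMT-capable cores) whose cores are numbered CCX by CCX, with SMT siblings either adjacent (2k, 2k+1, as Windows typically enumerates them) or offset by the core count (k, k + total_cores, as Linux typically does). The real topology should be checked before relying on either layout.

```c
#include <stdint.h>

/* Hypothetical helper: bitmask of the logical CPUs that belong to one CCX.
   Assumes physical cores ccx*cores_per_ccx .. (ccx+1)*cores_per_ccx-1 sit on that
   CCX, and that each core has exactly one SMT sibling. */
uint64_t ccx_mask(int ccx, int cores_per_ccx, int total_cores, int siblings_adjacent) {
    uint64_t mask = 0;
    for (int c = ccx * cores_per_ccx; c < (ccx + 1) * cores_per_ccx; c++) {
        if (siblings_adjacent) {
            /* Siblings numbered next to each other: core c -> CPUs 2c and 2c+1. */
            mask |= UINT64_C(1) << (2 * c);
            mask |= UINT64_C(1) << (2 * c + 1);
        } else {
            /* Siblings in a second bank: core c -> CPUs c and c + total_cores. */
            mask |= UINT64_C(1) << c;
            mask |= UINT64_C(1) << (c + total_cores);
        }
    }
    return mask;
}
```

On Linux, the set bits of such a mask could be copied into a cpu_set_t with CPU_SET and passed to sched_setaffinity; on Windows, SetThreadAffinityMask takes a mask in this one-bit-per-logical-processor form. Whether pinning like this actually helps a given engine is exactly the open question raised above.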



This is what I was going over in my post above yours.

We get about 45ns or so within cores. We get 98ns or so within RAM. 45 ns (from within the CCX) + 98 ns (from the memory latency) = 143 ns, which is about what PC Perspective is getting.

No, that can't be it, because it would take 98ns to write to memory from the pinging core.

But when it comes to reading... say it starts to read instantly. Wouldn't it try to read from memory at the same time as it looks in its L3 cache? It should catch the value a moment after it was written.

If Ryzen had a latency of 142ns every time it read from memory, that would mean it checks the L3 cache first and then memory, every time, and that the true memory latency is 98-42ns, since the 98ns figure would already include the L3 lookup that happens before the memory access. I don't believe it's that fast; I believe the two are attempted simultaneously...

I'm explaining this very poorly...

Basically, if the MMU checks L3 before even trying to read memory, that would mean the real memory latency is 98-42 = 56ns.

So the write should have taken 56ns, and the read on the other core should have taken 98ns instead of 142ns. You're missing 44ns.

None of that can be true as far as I can see, but I think I'm still wording this poorly.
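The arithmetic in the post above can be written out as a small check. The inputs are the post's figures (98ns memory latency, 42ns assumed L3 lookup, 142ns observed), and the serial-lookup model is the poster's hypothesis, not documented Zen behaviour; the struct and function names are illustrative.

```c
/* Result of testing the "L3 first, then DRAM" hypothesis against an observed figure. */
struct serial_check {
    double dram_only;     /* memory latency with the L3 lookup subtracted (ns) */
    double expected_read; /* L3 lookup followed by the DRAM access (ns) */
    double unexplained;   /* observed latency minus the expected read (ns) */
};

struct serial_check check_serial_model(double mem_ns, double l3_ns, double observed_ns) {
    struct serial_check r;
    r.dram_only = mem_ns - l3_ns;             /* 98 - 42 = 56 in the post */
    r.expected_read = l3_ns + r.dram_only;    /* lookup + DRAM = 98 */
    r.unexplained = observed_ns - r.expected_read; /* 142 - 98 = 44 */
    return r;
}
```

The expected read collapses back to the plain memory latency, which is why the serial model leaves 44ns of the 142ns figure unaccounted for.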

Kromaatikse

Member

- Mar 4, 2017


My point is that *core parking* is SMT aware, but the core-parking algorithm is *not* part of the scheduler.

Kromaatikse

Member

- Mar 4, 2017



I don't think the 142ns figure is from a single cross-CCX access. I think it might be a more complex operation, such as a semaphore, which requires multiple accesses. That's why we need the code performing this test, so we know what it's actually measuring.

Agreed.

But in addition, whatever does account for that latency, I don't think it's purely to blame for some games performing terribly (especially DX12/Vulkan).

I have a feeling there's something besides just the cross-CCX bandwidth that's getting overloaded when a workload crosses between the CCXs too much.

DX12 and Vulkan (two cases where we see Ryzen performance seriously drop) likely rely on the L3 cache, sure, but I'd think the 22Gb/s is enough for their purposes if it were only down to that.

lolfail9001

Golden Member

- Sep 9, 2016


Fair enough.

And my point is that I see no convincing evidence in your screenshots that the scheduler is not SMT/CMT aware because, well, with a single-threaded load there is no conflict between scheduling 2 threads on the same physical core to be seen.

looncraz

Senior member

- Sep 12, 2011


I will wait for some time to be certain. I would like the 1400X instead of a possible 1400, although if I can believe the news, the next one down the line will be the 1300.

When I buy, I want the hardware to be stable with a mature BIOS.

I will go for a Gigabyte motherboard again. I have had good experiences with Gigabyte, and for Ryzen, Gigabyte seems to have been a good choice for a stable board since launch.

I would also like a different custom cooler, and I still have to do thorough research on which memory is best.

I will also be including an NVMe drive, and that is going to be the most expensive part of the whole new system: a Samsung 960 Pro 512GB.

I will be upgrading from an A10-6700 CPU, so it will be a big win in the end.

I can reuse the RX480 card that I have, and use the A10-6700 as a home theater system or backup PC.

There is no 1400X

Ryzen 5 1400 : 4/8 @ 3.2~3.4: $169

Ryzen 5 1500X : 4/8 @ 3.5~3.7: $189

There's no gap to place a 1400X.

imported_jjj

Senior member

- Feb 14, 2009



There is, as the 1400 has 50MHz of XFR while the 1500X has 200MHz, so there's a 450MHz gap.
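The 450MHz figure comes from adding each chip's XFR headroom to its boost clock, using the clocks quoted above (3.4GHz + 50MHz for the 1400, 3.7GHz + 200MHz for the 1500X). A minimal sketch of that arithmetic, with a hypothetical helper name:

```c
/* Highest single-core clock in MHz: rated boost clock plus XFR headroom. */
int peak_mhz(int boost_mhz, int xfr_mhz) {
    return boost_mhz + xfr_mhz;
}
```

peak_mhz(3400, 50) gives 3450 and peak_mhz(3700, 200) gives 3900, a 450MHz difference between the two parts' peak clocks.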

William Gaatjes

Lifer

- May 11, 2008



Yeah, I found that out as well while reading the AnandTech site.

My previous information came from wccftech.

What does the X stand for?

The 1400 seems to have XFR as well.

It just seems artificially limited.

looncraz

Senior member

- Sep 12, 2011


I might have an answer for you.

Memory latency is 98 ns on Ryzen.

https://www.techpowerup.com/231268/...yzed-improvements-improveable-ccx-compromises

Let's think about this.

We get about 45ns or so within cores. We get 98ns or so within RAM. 45 ns (from within the CCX) + 98 ns (from the memory latency) = the 143 ns, which is about what PC Perspective is getting.

So it looks like it is going to DRAM. An L4 cache might be helpful here.

For a comparison, here's Skylake: https://techreport.com/review/31179/intel-core-i7-7700k-kaby-lake-cpu-reviewed/4

About 45ns, which is less than half of AMD's 98 ns.

If AMD could get memory latency down to Skylake levels, that would be awesome, because that would be about the same speed as the 5960X in terms of CCX + memory latency.

We expect a penalty in quad channel latency, but AMD's memory controller is slow - slower than even Bulldozer it seems.

I love the logic, but I think the memory latency figures for Ryzen are (at least partly) inclusive of the CCX and DF latencies. Otherwise, increasing core frequency shouldn't reduce it much (if at all), but I saw a 10ns drop, and 9GB/s more bandwidth, going from a core clock of 3GHz to 3.8GHz, without touching memory settings.

How would a program isolate just the IMC-to-RAM latency? This is something I've never explored (creating benchmarks at all, actually, is something I've never had cause to do outside of critical program code).

Just benchmarking memcpy() performance and the average time for accesses to return (using the TSC for timing) is all that comes to mind: calculate how long it takes, from a single core, to access some memory address you haven't dirtied.
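Timing untouched memory naively runs into the hardware prefetchers, so a common approach (a swapped-in technique, not something the post specifies) is a pointer chase: link a buffer much larger than L3 into one random cycle and time a chain of dependent loads. The sketch below assumes a POSIX system and uses clock_gettime instead of the TSC. Building the chain does write to the buffer, and the result still includes the core, cache and fabric path plus any TLB misses, so it bounds the IMC-to-RAM latency rather than isolating it.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <stdlib.h>
#include <time.h>

/* Small xorshift PRNG so the shuffle does not depend on RAND_MAX. */
static uint64_t xorshift64(uint64_t *state) {
    uint64_t x = *state;
    x ^= x << 13;
    x ^= x >> 7;
    x ^= x << 17;
    return *state = x;
}

/* Sattolo's algorithm: turns next[] into one cycle through all n slots, so every
   load depends on the previous one and the prefetcher cannot predict the next address. */
static void build_cycle(size_t *next, size_t n, uint64_t seed) {
    uint64_t state = seed ? seed : 0x9E3779B97F4A7C15ULL;
    for (size_t i = 0; i < n; i++)
        next[i] = i;
    if (n < 2)
        return;
    for (size_t i = n - 1; i > 0; i--) {
        size_t j = (size_t)(xorshift64(&state) % i); /* j < i, unlike Fisher-Yates */
        size_t tmp = next[i];
        next[i] = next[j];
        next[j] = tmp;
    }
}

/* Average nanoseconds per dependent load over `steps` hops through n slots.
   Returns -1.0 on bad arguments or allocation failure. */
double chase_ns(size_t n, size_t steps, uint64_t seed) {
    if (n == 0 || steps == 0)
        return -1.0;
    size_t *next = malloc(n * sizeof *next);
    if (next == NULL)
        return -1.0;
    build_cycle(next, n, seed);

    struct timespec t0, t1;
    volatile size_t sink;
    size_t p = 0;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    for (size_t i = 0; i < steps; i++)
        p = next[p];
    sink = p; /* volatile store: the chase must finish before the second timestamp */
    clock_gettime(CLOCK_MONOTONIC, &t1);
    (void)sink;
    free(next);

    double ns = (double)(t1.tv_sec - t0.tv_sec) * 1e9 + (double)(t1.tv_nsec - t0.tv_nsec);
    return ns / (double)steps;
}
```

For example, chase_ns((size_t)1 << 25, 20000000, 1) walks a 256 MiB buffer on a 64-bit system; shrinking n until the buffer fits in L2 or L3 shows those cache levels instead.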

How Intel systems can show latencies of 19ns is beyond me. There's some black magic going on there... that's lower than the time it takes to get data into a core from memory.

tamz_msc

Diamond Member

- Jan 5, 2017


I think it all leads back to how AIDA64 benchmarks the cache.

looncraz

Senior member

- Sep 12, 2011



The accurate way to test it would be to hold a spin-lock in one thread to protect a structure that simply stores the TSC value, then set that TSC value and release the spin-lock from another thread. The release would need to happen at an interval MUCH greater than the largest possible latency, so something like 1ms should be fine. Real-time thread priorities must be used.

The reader thread would keep an array of (rdtsc - ping->tsc) results. The rdtsc instruction takes about 60 cycles, the subtraction would be just about free, and the results would need to be stored on the stack, which sets some requirements for the operation (I like requirements; they keep the code simple).

Using semaphores or sockets would be a sure way to end up including other variables into the results.
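The spin-lock method described above can be sketched in C11 with pthreads. Two substitutions are assumptions of this sketch rather than the post's exact method: CLOCK_MONOTONIC via clock_gettime stands in for rdtsc (portable and consistent across cores, but with some overhead of its own), and an atomic sequence number with release/acquire ordering plays the role of the lock release. Pinning each thread to a chosen core (e.g. pthread_setaffinity_np on Linux) and real-time priorities, both of which the post calls for, are left out; the names `run_pings` and `struct ping` are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdatomic.h>
#include <stdint.h>
#include <time.h>

/* Monotonic clock in ns, standing in for rdtsc so the sketch stays portable. */
static uint64_t now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000u + (uint64_t)ts.tv_nsec;
}

/* Shared ping structure: the writer publishes a timestamp, the reader consumes it. */
struct ping {
    _Atomic uint64_t stamp; /* timestamp taken just before the ping is published */
    atomic_int seq;         /* ping number; the release store here is the "unlock" */
    atomic_int ack;         /* last ping number the reader has consumed */
};

struct reader_job {
    struct ping *p;
    int n;
    uint64_t *out;          /* out[i] = latency of ping i+1 in ns */
};

static void *reader(void *arg) {
    struct reader_job *j = arg;
    for (int i = 1; i <= j->n; i++) {
        /* Spin until ping i becomes visible; this is the cross-core transfer. */
        while (atomic_load_explicit(&j->p->seq, memory_order_acquire) != i)
            ;
        uint64_t t = now_ns();
        j->out[i - 1] = t - atomic_load_explicit(&j->p->stamp, memory_order_relaxed);
        atomic_store_explicit(&j->p->ack, i, memory_order_release);
    }
    return NULL;
}

/* Sends n pings spaced gap_ns apart (gap_ns must be below 1e9). Returns 0 on success. */
int run_pings(int n, long gap_ns, uint64_t *out) {
    struct ping p;
    atomic_init(&p.stamp, 0);
    atomic_init(&p.seq, 0);
    atomic_init(&p.ack, 0);
    struct reader_job job = { &p, n, out };
    pthread_t th;
    if (pthread_create(&th, NULL, reader, &job) != 0)
        return -1;
    struct timespec gap = { 0, gap_ns };
    for (int i = 1; i <= n; i++) {
        nanosleep(&gap, NULL); /* keep pings far apart relative to the latency */
        atomic_store_explicit(&p.stamp, now_ns(), memory_order_relaxed);
        atomic_store_explicit(&p.seq, i, memory_order_release);
        while (atomic_load_explicit(&p.ack, memory_order_acquire) != i)
            ; /* wait for the reader so pings never overlap */
    }
    return pthread_join(th, NULL) == 0 ? 0 : -1;
}
```

Calling run_pings(1000, 1000000L, lat) matches the post's 1ms spacing. Looking at the distribution of lat[] rather than one average, and comparing runs with both threads on one CCX against runs split across CCXs, is what would separate intra-CCX from inter-CCX latency.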

XFR was AMD's biggest mistake.

They should have just labeled them as 3.45GHz and 3.9GHz "Precision Boost". Instead they make them look lower clocked and spread this lie that the chips overclock themselves as far as your cooling allows.

"X" just stands for "it's better xD"

Elixer

Lifer

- May 7, 2002


Isn't the Infinity Fabric that ties the CCX units together based on SeaMicro's Freedom Fabric?

That interconnect (SeaMicro's Freedom Fabric) was supposed to have over a terabit/sec of bandwidth and under 6 µs latency per node.

Obviously something is getting in the way here and slowing the transfer rate, assuming PCPer's graph is correct, but, again, we need the darn source to see what they are actually doing.

In fact, I would be curious to see that same code run on a Linux 4.11+ kernel, and see what it shows there as well.


DisEnchantment

Golden Member

- Mar 3, 2017


New AIDA version

https://www.aida64.com/downloads/ZT...64&utm_medium=update&utm_campaign=betaproduct

What does this K17.1 support indicate?

Code:

AMD K15.6, K15.7, K16.6, K17, K17.1 PM2 fan sensor support

Is this Raven Ridge or new Ryzen silicon?

imported_jjj

Senior member

- Feb 14, 2009


The GMI links are a key part of this too, and 4 dies playing well together is important.

So is there any chance all data goes through some GMI-related "filter", so that they get similar latency on die and in package?

imported_jjj

Senior member

- Feb 14, 2009


The whole problem with Ryzen is that "coherent" data fabric. It runs at half the RAM speed.

Why do folks complain about this? It's pretty much the default way to go about it, as it scales with memory bandwidth needs. Running it faster to reduce latency can be an upside, but it is less efficient.
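To put numbers on "half the RAM speed": DDR transfers data twice per clock, so the fabric clock on these parts equals the memory clock, half the MT/s rating. The sketch multiplies that clock by a per-cycle width; using the 32B/cycle figure cited elsewhere in this thread as the width of one link is an assumption, and the result is a theoretical peak, not measured throughput.

```c
/* Fabric clock in MHz when it runs at the memory clock (half the DDR transfer rate). */
double fabric_mhz(double ddr_mts) {
    return ddr_mts / 2.0;
}

/* Theoretical peak in GB/s for a link moving bytes_per_cycle on every fabric clock. */
double fabric_peak_gbs(double ddr_mts, double bytes_per_cycle) {
    return fabric_mhz(ddr_mts) * 1e6 * bytes_per_cycle / 1e9;
}
```

For DDR4-2666 this gives a 1333MHz fabric clock and about 42.7GB/s; DDR4-3200 gives 1600MHz and 51.2GB/s, which is the scaling with memory bandwidth described in the reply.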

coffeemonster

Senior member

- Apr 18, 2015


I really think they should have made a 1400X with the 1500X clocks (4/8 @ 3.5~3.7: $189), and had the 1500X be the true scaled-down 1800X gamer quad @ 3.6~4.0: $209 or so.

CrazyElf

Member

- May 28, 2013


Has anyone tried ECC on Ryzen? Asrock's boards should support X370. No idea about the other vendors.

AIDA64 wasn't giving correct numbers for L2 and L3, I know, but they said that L1 and RAM were ok.

From what I understood, it was to be incorporated into the next version of AIDA64? Do you have any more information?

True enough. Right now we are working with fixed memory multipliers and locked timings. No idea what Ryzen's memory controller is capable of once fully unlocked.

Hope it's good as it could really make a difference.

Not sure either.

An interesting question - what is the weighting?

Some of the time, the memory won't even need to be accesses because the data will be in one of the cores' L3 of the other CCX (Or L2 even? Due to L3 being a victim cache?).

I guess the answer might be:

Weighted average inter-CCX latency = latency within CCX + (% of time data is in L3 of other CCX x amount of time to access other CCX + % of time you need to get data from DRAM x time it takes to access DRAM) + probability of cache miss x average time of cache miss

I'm thinking that:

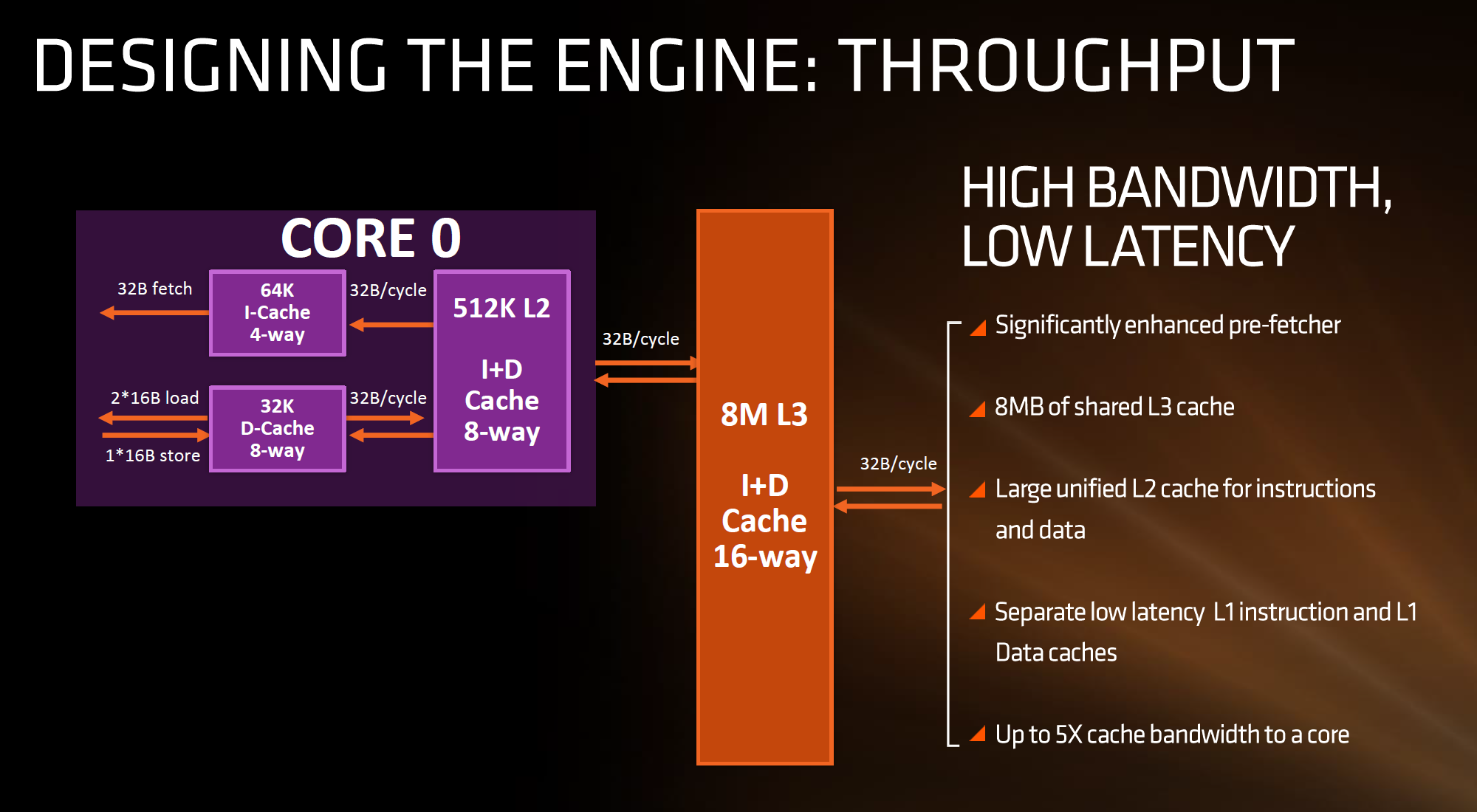

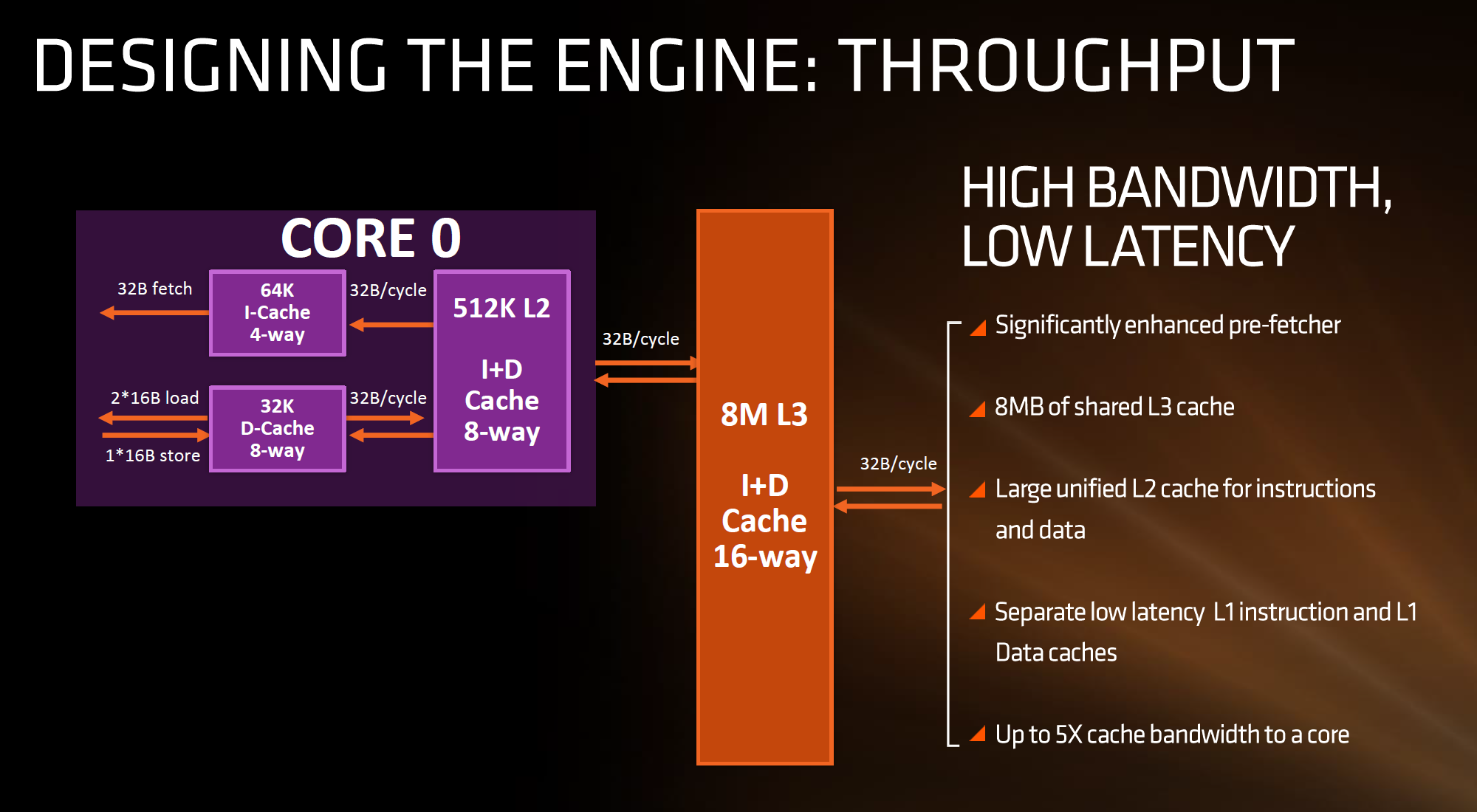

1. Overclocking core might reduce latency within CCX (since they're all tied together, CPU register, L1, L2, and L3)

2. Overclocking RAM might reduce latency in all areas where there is 32B/cycle

So that would mean all the caches and the data fabric.

Then the average latency between the cores would be (and this is assuming you are getting data out of a core):

So overall average latency between cores is, including both within and outside of the CCX:

3/7 x average latency within CCX + 4/7 x probability of going to L3 in other CCX x time to access other CCX L3 cache + 4/7 x probability of not finding data in other CCX x time it takes to go to DRAM + probability of cache miss inside CCX x time penalty for cache miss

I wonder what this is going to look like for Naples. How would they get 8 CCXs to talk to each other? I don't think that mesh is practical for 8 CCXs, so a bi-directional ring might be necessary. We will know by next quarter.

I suggest you read that particular page of the Hardware.fr review. They had AIDA64 engineers design a benchmark to test sequential L3 data access for different block sizes for a more detailed analysis. This is not incorporated in the software release of AIDA64, even in the beta that officially enabled support for Ryzen. Performance is comparable to a 6900K, even a bit faster, up to the ~6MB mark.

AIDA64 wasn't giving correct numbers for L2 and L3, I know, but they said that L1 and RAM were ok.

From what I understood, it was to be incorporated into the next version of AIDA64? Do you have any more information?

Skylake and Kaby were using 3866MHz CL18 in those TR tests. Ryzen would get to about 60ns with such RAM settings - may be achievable after the May update- and right now it gets to as low as 70ns with 3200 CL14 but there is no access to secondary timings yet. After all is said and done and BIOS settles, 60+ns might be doable with 3200MHz DRAM, close enough to Broadwell-E.

True enough. Right now we are working with fixed memory multipliers and locked timings. No idea what Ryzen's memory controller is capable of once fully unlocked.

Hope it's good as it could really make a difference.

I love the logic, but I think the memory latency figures for Ryzen are (at lest partly) inclusive of the CCX and DF latencies. Otherwise increasing core frequency shouldn't reduce it much (if at all) - but I saw 10ns drop going from a core clock of 3GHz to 3.8Ghz and 9GB/s more bandwidth - without touching memory settings.

How would a program work to isolate just the IMC to RAM latency? This is something I've never explored (well, creating benchmarks at all, actually, is something I've never had cause to do outside of critical program code).

Just benchmarking memcpy() performance and the average time for accesses to return (using the TSC for timing) is all that comes to mind. Calculate how long it takes to access some memory address you haven't dirtied from a single core.

How Intel systems can show latencies of 19ns is beyond me. There's some black magic going on there... that's lower than the time it takes to get data into a core from memory.

Not sure either.

An interesting question - what is the weighting?

Some of the time, the memory won't even need to be accesses because the data will be in one of the cores' L3 of the other CCX (Or L2 even? Due to L3 being a victim cache?).

I guess the answer might be:

Weighted average inter-CCX latency = latency within CCX + (% of time data is in L3 of other CCX x amount of time to access other CCX + % of time you need to get data from DRAM x time it takes to access DRAM) + probability of cache miss x average time of cache miss

I'm thinking that:

1. Overclocking core might reduce latency within CCX (since they're all tied together, CPU register, L1, L2, and L3)

2. Overclocking RAM might reduce latency in all areas where there is 32B/cycle

So that would mean all the caches and the data fabric.

Then the average latency between the cores would be (and this is assuming you are getting data out of a core):

- 3/7 times (there are 3 other cores in the CCX and 7 other on die), you will have the data within your own CCX

- 4/7 times you need to go to the other CCX, and in some cases out of that 4/7, find the information in the L3 cache of one of the cores in the other CCX

- 4/7 times you need to go outside your CCX, but in other cases of the 4/7, have to go to DRAM because the data is not in the other CCX's cores' L3 cache at all.

- I guess then there is the cache misses within the CCX as well

So overall average latency between cores is, including both within and outside of the CCX:

3/7 x average latency within CCX + 4/7 x probability of going to L3 in other CCX x time to access other CCX L3 cache + 4/7 x probability of not finding data in other CCX x time it takes to go to DRAM + probability of cache miss inside CCX x time penalty for cache miss

I wonder what this is going to look like for Naples. How would they get 8 CCXs to talk to each other? I don't think that mesh is practical for 8 CCXs, so a bi-directional ring might be necessary. We will know by next quarter.

Last edited:
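The overall-average formula above translates directly into code. Every probability and latency in it is an input that would have to be measured; the struct fields and function name are hypothetical, and the 3/7 and 4/7 weights assume the 8-core, two-CCX part discussed in the post. The "not found in the other CCX" probability is taken as the complement of the remote-L3 hit probability, following the bullet list.

```c
/* Inputs to the weighted model; all values are placeholders to be measured. */
struct ccx_model {
    double t_local;     /* average latency to a core in the same CCX (ns) */
    double p_remote_l3; /* given a remote-CCX access, probability the data is in its L3 */
    double t_remote_l3; /* time to reach the other CCX's L3 (ns) */
    double t_dram;      /* time to go to DRAM (ns) */
    double p_miss;      /* probability of a cache miss inside the CCX */
    double t_miss;      /* penalty for such a miss (ns) */
};

/* Average core-to-core latency for an 8-core, two-CCX die. */
double avg_core_to_core_ns(const struct ccx_model *m) {
    const double same = 3.0 / 7.0;  /* 3 of the 7 other cores share the CCX */
    const double other = 4.0 / 7.0; /* 4 of the 7 other cores are on the other CCX */
    return same * m->t_local
         + other * m->p_remote_l3 * m->t_remote_l3
         + other * (1.0 - m->p_remote_l3) * m->t_dram
         + m->p_miss * m->t_miss;
}
```

Plugging in placeholder values (45ns local, a 50/50 split between a 100ns remote L3 and 140ns DRAM, no extra misses) gives about 87.9ns; those numbers are illustrative, not measurements.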

CataclysmZA

Junior Member

- Mar 15, 2017



When a request or ping is made to the L3 in a different CCX, both the L3 cache in that second CCX and the unified IMC are pinged/strobed/checked at the same time.

If the average inter-CCX latency of 140ns is correct, then it can only be that the two latency values are being reported together, regardless of whether any data is retrieved in the next clock cycle.
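The two readings of the 140ns figure can be contrasted using the thread's numbers (about 45ns intra-CCX and 98ns memory latency). This sketches the arithmetic only, with hypothetical function names: if the request had to wait for both the remote L3 and the IMC to answer, a parallel probe would cost the slower of the two, while a figure that reports both latencies together would be their sum.

```c
/* Parallel probe: both paths start together, so the slower one bounds the result. */
double parallel_probe_ns(double l3_ns, double dram_ns) {
    return l3_ns > dram_ns ? l3_ns : dram_ns;
}

/* Summed report: both latencies end up counted in one figure. */
double summed_report_ns(double l3_ns, double dram_ns) {
    return l3_ns + dram_ns;
}
```

With 45ns and 98ns, the parallel reading gives 98ns while the summed reading gives 143ns, which is the one close to the ~140ns average being discussed.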

Elixer

Lifer

- May 7, 2002


https://forums.anandtech.com/thread...but-mainboards-need-to-enable-it-and.2500613/

In short, yes, ECC works on the right board/BIOS.

looncraz

Senior member

- Sep 12, 2011



Exactly this. XFR would have been great if it also allowed one thread to hit something like 4.2GHz, a clock rate no one can achieve with ambient cooling and tolerable voltages.

Now, if XFR were something we could manually adjust as part of overclocking... that'd be insanely awesome. I know my 1700X can do 4.1GHz on two cores, but it can't handle 3.9GHz on all cores at the same voltage.

A 3.8GHz all-core clock with a 4.1GHz dual-core turbo would make that thing an absolute beast. Instead I'm stuck at a fixed 3.8GHz, missing out on a good 8% of single-threaded performance potential.
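The "good 8%" follows from the ratio of the two clocks. This minimal sketch (hypothetical helper name) assumes single-threaded performance scales linearly with frequency, which is an idealization; memory-bound code gains less.

```c
/* Percentage single-thread gain from raising the clock, assuming linear scaling. */
double clock_uplift_pct(double base_ghz, double boost_ghz) {
    return (boost_ghz / base_ghz - 1.0) * 100.0;
}
```

clock_uplift_pct(3.8, 4.1) comes out at about 7.9%, matching the post's estimate.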



AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.