Here is what Igor Pavlov writes about that:

"

About 7-Zip / LZMA speed for AMD Ryzen R7.

Decompression speed is OK at Ryzen.

Compression speed in fast mode with small dictionary probably is OK also.

Compression speed with big dictionary is not good. Compression with big dictionary uses big amount of memory and it needs low memory access latency.

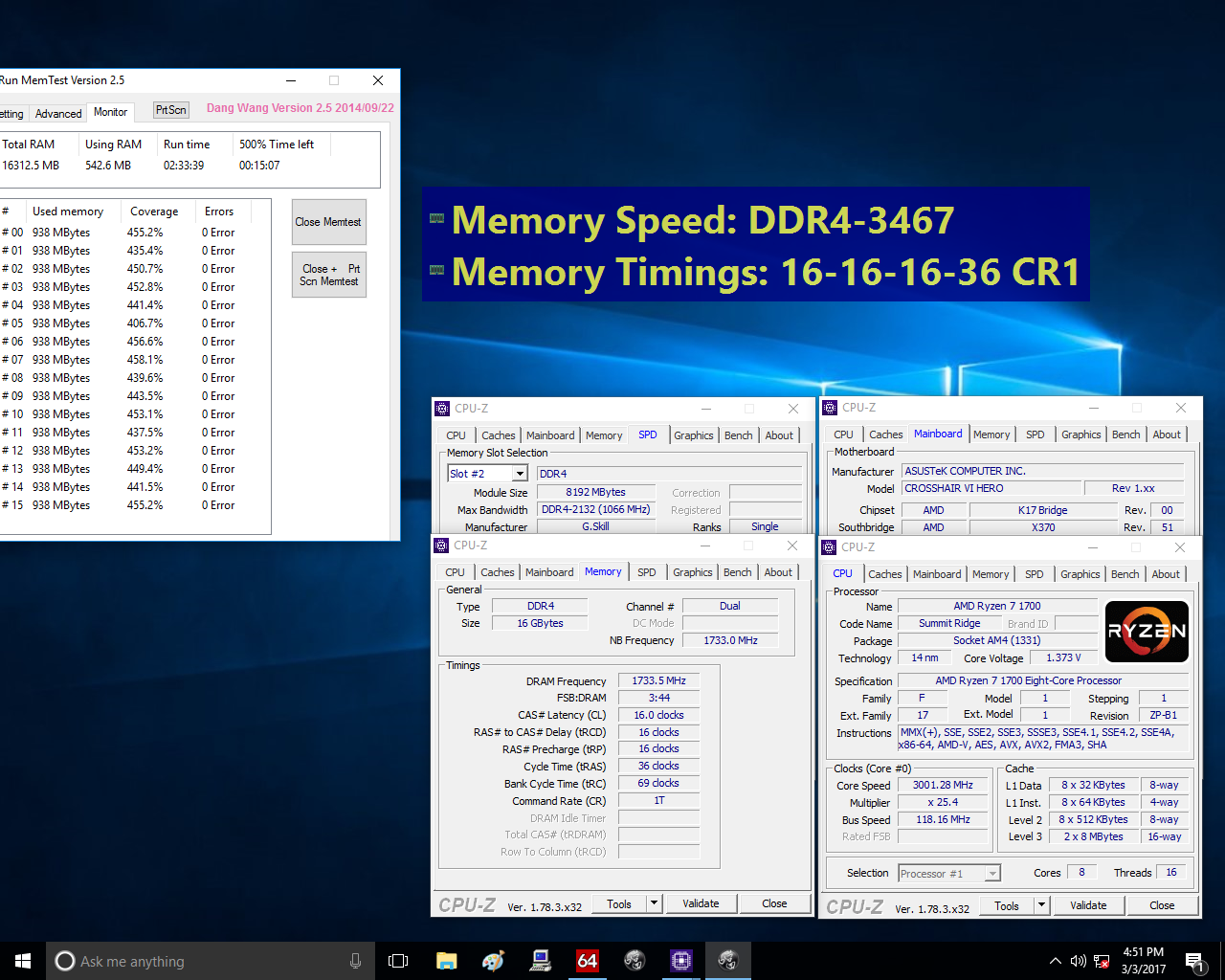

And memory access latency is BAD for Ryzen R7.

Look the following review with memory tests:

http://www.hardware.fr/articles/956-22/retour-sous-systeme-memoire.html

Also maybe shared cache in Intel CPUs is better than two separated caches in Ryzen CPUs for multithreaded LZMA compressing. Probably AMD will ask Microsoft to improve thread scheduling to reduce thread walking from one CCX to another CCX. Maybe such fixed thread scheduling can help slightly in some cases, but I'm not sure that it will help for 7-Zip compression.

Probably special thread scheduler that can be embedded to 7-Zip program will help, but it's difficult to develop it. Some versions of Windows don't like when program changes thread affinity. So such feature requires big development tests with different versions of Windows and different types of CPUs. It can be difficult.

But any improvement for memory latency will help for compression speed. I suppose it's difficult for AMD to reduce memory latency in current Ryzens. I hope they will try to fix it in next Ryzen revisions, if they will have strong understanding how memory latency is important for some programs.

I didn't contact with AMD about Ryzen."

I am not sure that multi-threading is the main benefit here; it may rather be that RAR5 archives are simply faster to decompress overall. This is true even if you decompress them with 7-Zip, which uses only a single core to decompress RAR5.

I did a quick comparison, compressing a bunch of PDF files into a solid archive of 4.7 GB (7z) and 4.61 GB (RAR5), which is about an 80% compression ratio. Settings: Best/Ultra compression, 64 MB dictionary size, 4 GB solid blocks. Decompression times:

| Archive | Decompressed with 7-Zip | Decompressed with WinRAR |
|---|---|---|
| 7Z | 1:50 min | 1:54 min |
| RAR5 | 0:32 min | 0:22 min |

Yes, multi-threaded decompression with WinRAR cuts the decompression time by 31%, but even with single-threaded decompression the RAR5 archive takes only 29% of the time that the 7Z archive takes. Interestingly, WinRAR loads a second core when decompressing the 7Z archive, yet it still takes longer than 7-Zip.
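To double-check the two percentages, here is a small Python sketch that recomputes them from the measured times above (converted by hand from m:ss to seconds). "31%" means 31% less wall-clock time; expressed as throughput, the same numbers correspond to roughly 1.45× (32/22).

```python
# Decompression times from the comparison above, converted from m:ss to seconds.
times = {
    ("7Z", "7-Zip"): 1 * 60 + 50,
    ("7Z", "WinRAR"): 1 * 60 + 54,
    ("RAR5", "7-Zip"): 32,
    ("RAR5", "WinRAR"): 22,
}


def percent_of(a: float, b: float) -> float:
    """Return a as a percentage of b."""
    return 100.0 * a / b


# Same RAR5 archive: WinRAR (multi-threaded) vs 7-Zip (single-threaded).
mt_saving = 100.0 - percent_of(times[("RAR5", "WinRAR")], times[("RAR5", "7-Zip")])

# Both archives decompressed with 7-Zip: RAR5 time as a share of 7Z time.
rar5_share = percent_of(times[("RAR5", "7-Zip")], times[("7Z", "7-Zip")])

print(f"RAR5, WinRAR vs 7-Zip: {mt_saving:.0f}% less time")
print(f"Via 7-Zip, RAR5 takes {rar5_share:.0f}% of the 7Z time")
```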

All that being said, if a 4.7 GB archive takes less than 2 minutes to decompress, then you really have to do these chores regularly for the time difference to matter.

What I take from this is that 7-Zip decompression should perhaps be run with its affinity manually set to a single CCX (core complex).
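One way to try this on Windows is cmd's built-in `start /affinity <hex mask>`. Below is a minimal Python sketch, assuming an 8-core/16-thread Ryzen 7 with two CCXs of 4 cores each, and that Windows numbers SMT siblings adjacently so logical processors 0-7 belong to CCX 0 and 8-15 to CCX 1. That numbering is an assumption; verify your own topology (e.g. with Sysinternals Coreinfo) before relying on it. `ccx_affinity_mask` and `extract_on_ccx` are hypothetical helper names, and `7z.exe` is assumed to be on `PATH`.

```python
import subprocess

# ASSUMPTION: 2 CCXs x 4 cores x 2 SMT threads, with logical processors 0-7
# on CCX 0 and 8-15 on CCX 1. Check the real topology on your machine.
LOGICAL_PER_CCX = 8


def ccx_affinity_mask(ccx: int, logical_per_ccx: int = LOGICAL_PER_CCX) -> int:
    """Bitmask with one bit set for each logical processor of the given CCX."""
    first = ccx * logical_per_ccx
    return ((1 << logical_per_ccx) - 1) << first


def extract_on_ccx(archive: str, ccx: int = 0) -> int:
    """Hypothetical helper: run `7z x <archive>` restricted to one CCX (Windows only).

    Uses cmd's `start /wait /affinity <hex>`; returns the exit code reported by cmd.exe.
    """
    mask = format(ccx_affinity_mask(ccx), "X")  # start /affinity expects a hex mask, no 0x
    cmd = ["cmd", "/c", "start", "/wait", "/affinity", mask, "7z.exe", "x", archive]
    return subprocess.run(cmd).returncode
```

For example, `extract_on_ccx("archive.7z", ccx=1)` would run the extraction on logical processors 8-15 (mask `FF00`) under the topology assumption above.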