[Rumor, Tweaktown] AMD to launch next-gen Navi graphics cards at E3

- Status

- Not open for further replies.

Glo.

Diamond Member

- Apr 25, 2015

- 5,930

- 4,991

- 136

AMD are not being upfront with the information, to say the least, and it certainly does not explain an 8+6-pin OEM 5700XT when 3/4s of a 40CU Vega at sane clocks should be a ~150W card, if even that.

And 225W power delivery for a 120W GTX 1660 Ti is explained perfectly well by its power draw?

The GTX 1660 Ti can work perfectly well with just a 6-pin connector. And yet NO GTX 1660 Ti has anything less than an 8-pin connector and 225W power delivery.

It's also funny that you claim that I have said anything, when I just pointed out what AMD has said, in the context of everybody claiming, before we have seen any review of a Navi GPU, that it is worse than the RTX 2070 in efficiency. It might be, but it also might be better in efficiency than the RTX 2070. It's as simple as that.

Here you have it folks, straight from the top. Navi is, in the main, the gaming half of AMD's graphics lines, with some light compute. Should settle some arguments here.

PCWatch: Instinct is based on 7nm, so how about changing to the Navi architecture and performance?

Forrest Norrod: There's going to be some overlap between the two. I think Lisa alluded to this earlier, where GCN and Vega will stick around for some parts and some applications, but Navi is really our new gaming architecture so I don't want to go beyond that. You'll see us have parts for both gaming applications and non-gaming applications.

https://www.anandtech.com/show/14568/an-interview-with-amds-forrest-norrod-naples-rome-milan-genoa

insertcarehere

Senior member

- Jan 17, 2013

- 712

- 701

- 136

You see that sane clocks caveat you included? AMD's track record with that in shipping cards has been pretty awful recently.

Exactly. On a hypothetical 5700XT, a 23% power reduction over some 40CU Vega 64 (if that's actually the case) while matching/beating RTX 2070 performance would be spent as extra thermal budget to push up clocks and performance to make an RTX 2080 rival. 40CU is a full Navi die and AMD's shot at a mainstream gaming GPU in 2019; they aren't going to hold back on performance if it means having to sell the GPU at lower price points.

and it certainly does not explain a 8+6pin OEM 5700XT when 3/4s of a 40CU Vega at sane clocks should be a ~150w card, if even that.

The size and number of PCIe power connectors has long been a staple of marketing fiction.

insertcarehere

Senior member

- Jan 17, 2013

- 712

- 701

- 136

And 225W power delivery for 120W GTX 1660 Ti is explained perfectly well by its power draw?

The GTX 1660 Ti can work perfectly well with just a 6-pin connector. And yet NO GTX 1660 Ti has anything less than an 8-pin connector and 225W power delivery.

That 225W power delivery is also used by the reference GTX 1080/1070, RX 580/480, and RTX 2060/2070, and yet AMD thinks it isn't enough for the 5700XT and decides that sharing power delivery with the 1080 Ti and 2080 is necessary out of the box on a reference card. Maybe they wanted an absolute shedload of headroom, but this would be the very first time that a reference card is built to such overkill levels out of the box (i.e. 300W delivery for a card that may draw half that, by your claims).
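For anyone following the 225W vs 300W figures: they fall out of the nominal PCIe limits of 75W from the slot, 75W per 6-pin, and 150W per 8-pin connector. A minimal Python sketch of that sum follows; the function and variable names are made up for illustration, and it says nothing about what a card actually draws.

```python
# Nominal PCIe power limits in watts (slot plus auxiliary connectors).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}

def board_power_budget(connectors):
    """Return the nominal maximum power delivery for a card, in watts.

    `connectors` is a list of auxiliary connector names, e.g. ["8-pin", "6-pin"].
    This is a delivery ceiling, not a measurement of actual power draw.
    """
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)

single_8pin = board_power_budget(["8-pin"])              # slot + one 8-pin
eight_plus_six = board_power_budget(["8-pin", "6-pin"])  # slot + 8-pin + 6-pin
print(single_8pin, eight_plus_six)  # 225 300
```

So a single 8-pin card tops out at a nominal 225W and an 8+6-pin card at 300W, which is the gap being argued about here.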

It's also funny that you claim that I have said anything, when I just pointed out what AMD has said, in the context of everybody claiming, before we have seen any review of a Navi GPU, that it is worse than the RTX 2070 in efficiency. It might be, but it also might be better in efficiency than the RTX 2070. It's as simple as that.

You said

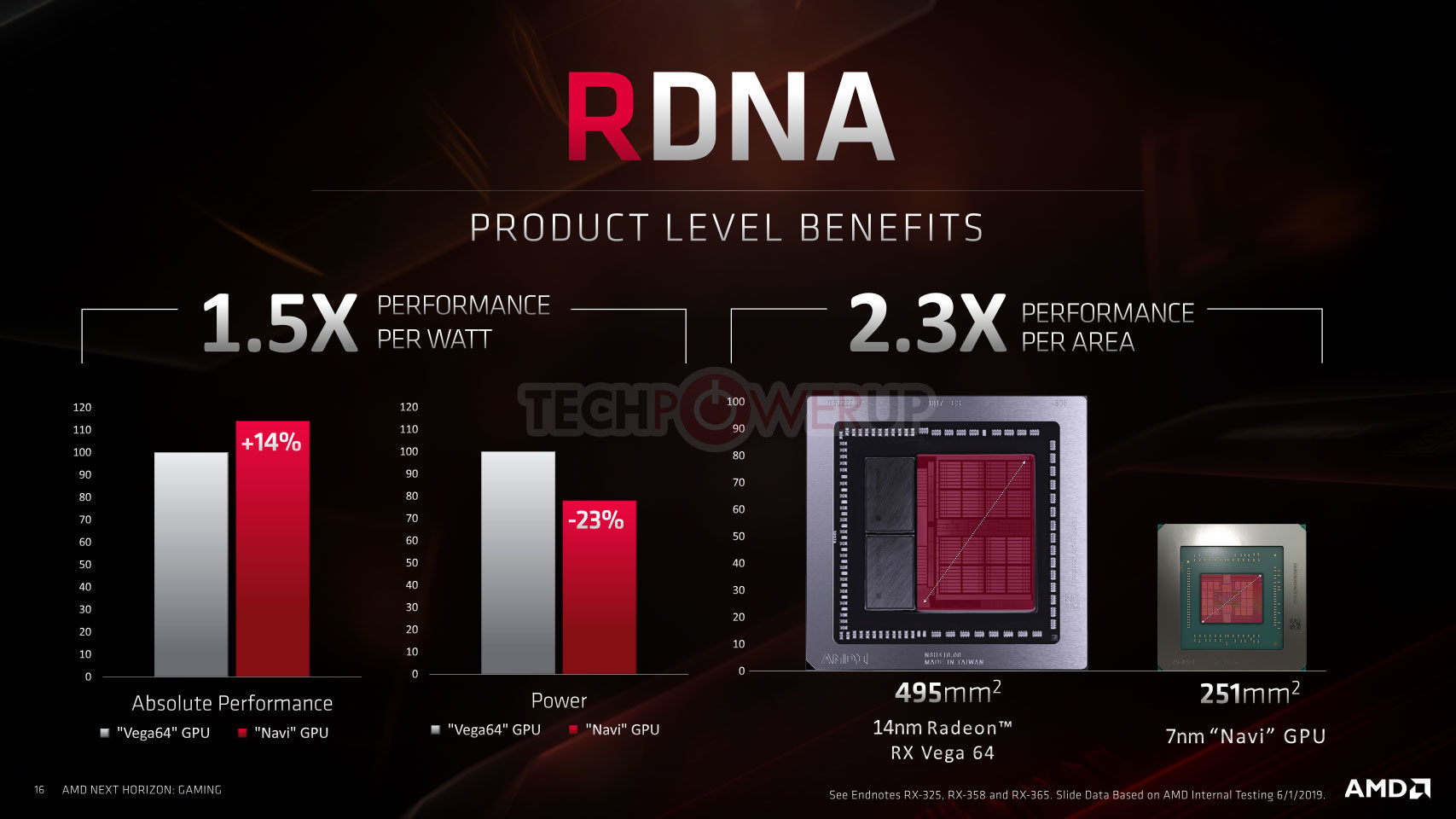

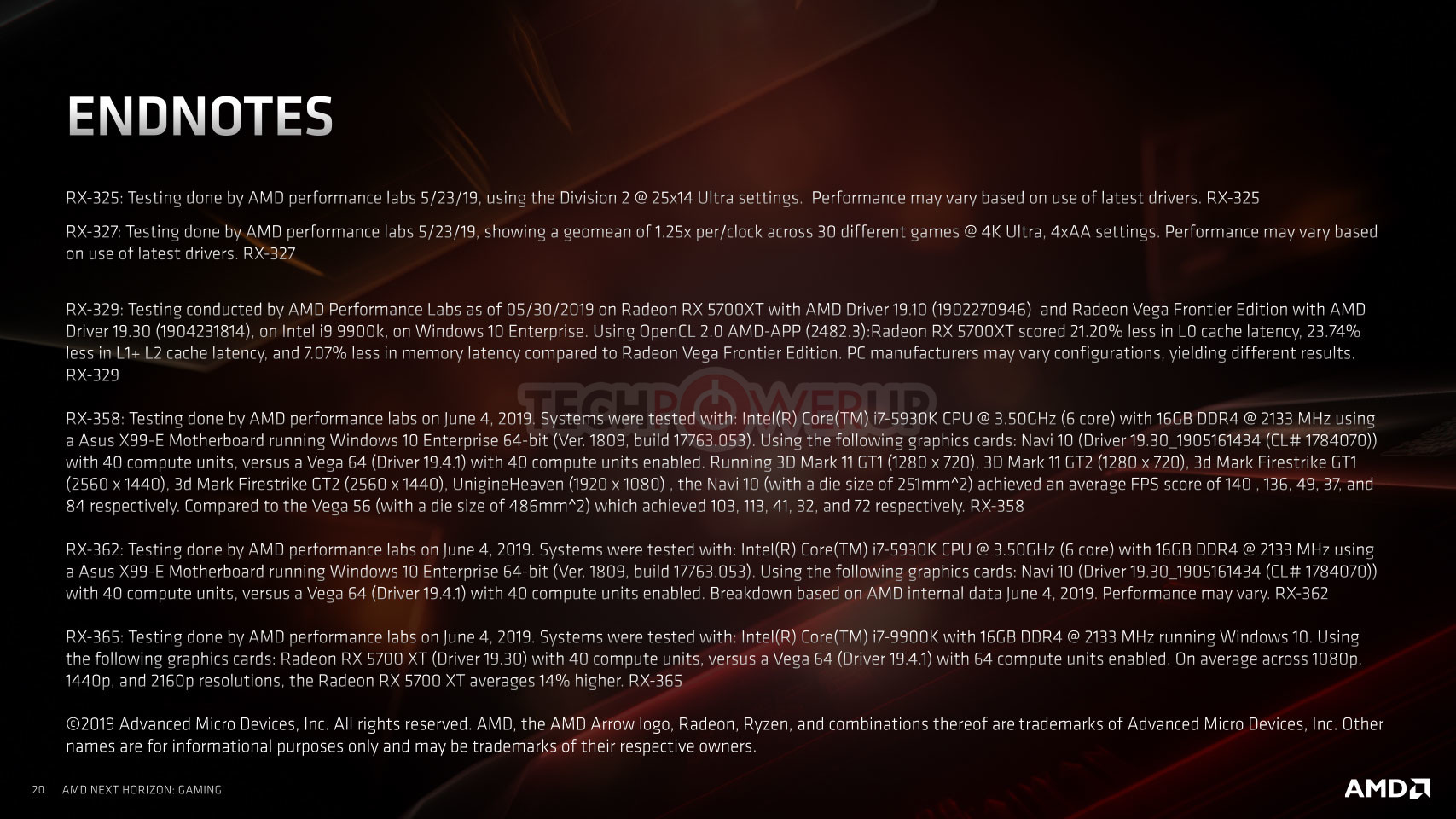

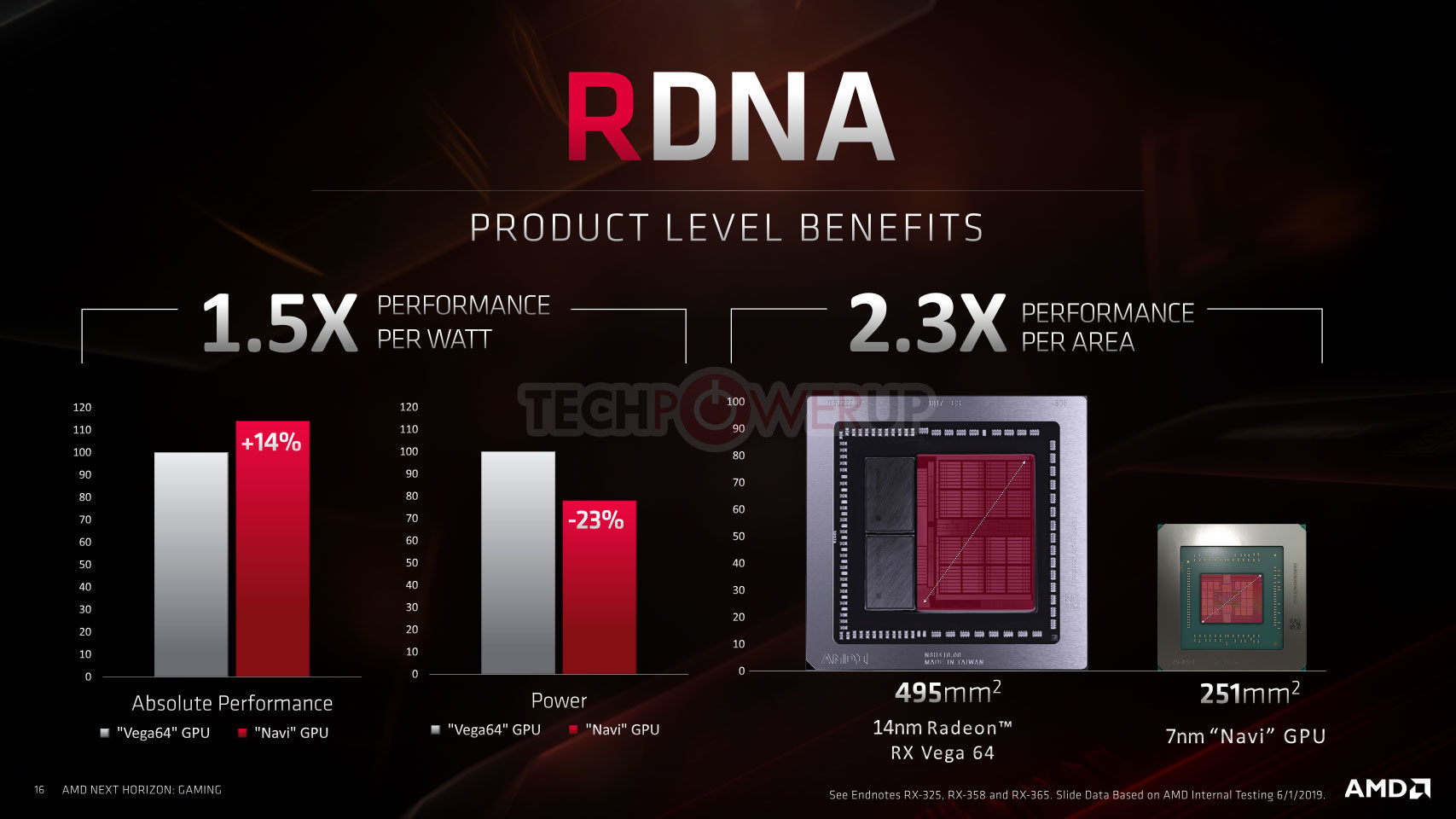

40 CU Navi GPU was 14% faster than Vega 64 with 64 CU's. And at the same time (i.e clocks), 40 CU Navi chip used 23% less power than Vega64 cut down to 40 CU's.

When frankly AMD themselves don't say it, and their own 225W TBP spec for the 5700XT (same as the RX 590) also contradicts that claim. I could end up eating crow if AMD actually built an RTX 2070-killer that draws power at Polaris 10 levels, but nothing that is being shown now implies this is likely.

Here you have it folks, straight from the top. Navi is, in the main, the gaming half of AMD's graphics lines, with some light compute. Should settle some arguments here.

PCWatch: Instinct is based on 7nm, so how about changing to the Navi architecture and performance?

Forrest Norrod: There's going to be some overlap between the two. I think Lisa alluded to this earlier, where GCN and Vega will stick around for some parts and some applications, but Navi is really our new gaming architecture so I don't want to go beyond that. You'll see us have parts for both gaming applications and non-gaming applications.

https://www.anandtech.com/show/14568/an-interview-with-amds-forrest-norrod-naples-rome-milan-genoa

You misread the meaning in that - all it means is that a high end compute part to replace the current Vega line will not be forthcoming anytime soon.
Forrest is not a uArch engineer, he is their datacenter/enterprise strategy guy.

So he is not going to say "sure RDNA compute cards are forthcoming" when they are not right around the corner - they don't want to cannibalise their MI50/MI60 sales before they have a viable replacement waiting in the wings.

Everything about the RDNA uArch that has been released implies a greater efficiency at compute overall, while retaining some level of compatibility with code written for Southern Islands (Tahiti/GCN1) derivative uArchs (Wave64), which is what the console vendors wanted for backwards compatibility.

Even without a chiplet package that can benefit games without Crossfire/mGPU, they can still make an MCM package like Naples that runs at a lower frequency with more chips for compute purposes.

Edit: They already do parts just for business with different performance: the Radeon VII does not manage half-rate FP64, while the MI60 does, despite being the same chip.

Glo.

Diamond Member

- Apr 25, 2015

- 5,930

- 4,991

- 136

A 225w power delivery that is also used by: GTX 1080/70, RX580/480, RTX 2060/70, and yet AMD thinks it isn't enough for the 5700XT and decides that sharing power delivery with 1080ti and 2080 is necessary out of the box as a reference card. Maybe they wanted an absolute shedload of headroom, but this would be the very first time that a reference card is built to such overkill levels out of the box (i.e 300w delivery for a card that may draw half that, with your claims).

You do realize that the GTX 1660 Ti uses LESS power than the GTX 1060? And still uses an 8-pin connector versus 6-pin connectors on ALL of the GTX 1060 GPUs?

You said

When frankly AMD themselves don't say it, and their own 225W TBP spec for the 5700XT (same as the RX 590) also contradicts that claim. I could end up eating crow if AMD actually built an RTX 2070-killer that draws power at Polaris 10 levels, but nothing that is being shown now implies this is likely.

How do you explain it? Why does Nvidia spec it for 225W power delivery when it is a 120W GPU? Why do Nvidia partners give it overkill power delivery when it does not need it?

AMD does not say what I have said? And you think I made it up, or took it straight from the ********* footnotes of AMD's presentation?

Using the following Graphics cards: Radeon 5700 XT(!) with 40 Units, versus Vega 64 with 64 Units. Radeon 5700 XT was faster on average 14% across various games in 3 resolutions: 1080p, 1440p, 4K.

Using the following graphics cards: Navi 10, with 40 Compute units, versus Vega 64, with 40 CU's enabled, Navi GPU was 23% more efficient.

Performance may Vary.

alexruiz

Platinum Member

- Sep 21, 2001

- 2,836

- 556

- 126

A 225w power delivery that is also used by: GTX 1080/70, RX580/480, RTX 2060/70,

Most of the RTX 2070s are 8 + 6 pins power delivery, and a few are 8 + 8

Edit: The GTX 1660, all of them seem to be 8 pins

Glo.

Diamond Member

- Apr 25, 2015

- 5,930

- 4,991

- 136

Edit: The GTX 1660, all of them seem to be 8 pins

They do not seem. They ARE 8-pin connector GPUs. ALL of the GTX 1660 and 1660 Ti.

insertcarehere

Senior member

- Jan 17, 2013

- 712

- 701

- 136

AMD does not say what I have said? And you think I made it up, or took it straight from the ********* footnotes of AMD's presentation?

Using the following Graphics cards: Radeon 5700 XT(!) with 40 Units, versus Vega 64 with 64 Units. Radeon 5700 XT was faster on average 14% across various games in 3 resolutions: 1080p, 1440p, 4K.

Using the following graphics cards: Navi 10, with 40 Compute units, versus Vega 64, with 40 CU's enabled, Navi GPU was 23% more efficient.

Performance may Vary.

Nice. Not only can that exact statement not be found anywhere in the endnotes, but even if it could, it doesn't actually support your claim of:

40 CU Navi GPU was 14% faster than Vega 64 with 64 CU's. And at the same time, 40 CU Navi chip used 23% less power than Vega64 cut down to 40 CU's.

I am sure that a 40CU Navi GPU can be clocked to be 14% faster than a fully enabled Vega 64, or that it can clock to use 23% less power than a 40 CU Vega 64 (and be significantly more efficient than Vega at that clock). But you are saying it can do both at the same clocks, that's a very different statement.

Most of the RTX 2070s are 8 + 6 pins power delivery, and a few are 8 + 8

Edit: The GTX 1660, all of them seem to be 8 pins

Aftermarket cards with bigger boost clocks, better cooling and OCs in mind have beefier power delivery, in other news water is wet. The reference design is what matters for an apples-to-apples comparison, and that is a single 8-pin for the RTX 2070. (Hint: The GTX Turings have no reference cards so the partners get to decide for themselves, which is normally going to lead to beefier power deliveries than reference designs, sharing design elements with their RTX boards)

AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

I am sure that a 40CU Navi GPU can be clocked to be 14% faster than a fully enabled Vega 64, or that it can clock to use 23% less power than a 40 CU Vega 64 (and be significantly more efficient than Vega at that clock). But you are saying it can do both at the same clocks, that's a very different statement.

From what I can see, the performance/watt graph corresponds only to RX 365 in the end notes.

So, a 40CU NAVI 10 is 14% faster than a 64CU VEGA 10 at 23% less power.

If you do the math the actual output is 1.48x higher perf/watt
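For what it's worth, the 1.48x figure is just relative performance divided by relative power. A quick Python sketch of that arithmetic, assuming (as this reading of the slide does) that the 14% and the 23% come from the same comparison, which is exactly what is being disputed here; the function name is only illustrative.

```python
def relative_perf_per_watt(perf_ratio, power_ratio):
    """Perf/watt of card A relative to card B, given A/B performance and A/B power."""
    return perf_ratio / power_ratio

# Assumption: +14% performance and -23% power both apply to the same pair of cards.
perf_ratio = 1.14          # 14% faster
power_ratio = 1.0 - 0.23   # 23% less power

ratio = relative_perf_per_watt(perf_ratio, power_ratio)
print(f"{ratio:.2f}x")  # 1.48x
```

If the two numbers actually come from different test setups, the division is not meaningful and the 1.48x does not hold.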

Guys, read Again the Footnotes. Slowly. Especially the one: RX-358. It talks about Vega 64 running 40 CU. In relation to the power comparison between Vega and Navi.

Not sure how much I would trust that - surely the Vega 10 chip was power optimised for 2 specific configurations, the 64 and 56 CU SKUs, during design, and in the BIOS since.

I have some doubt that cutting it down to 40 CUs would show the chip at its ideal perf/watt.

railven

Diamond Member

- Mar 25, 2010

- 6,604

- 561

- 126

I think it's weird that they would test any Vega product with only 40CU enabled.

I've just decided to wait until release. The last few pages of this thread haven't had anything useful. I'll catch you all after July 7th unless we actually get something worth digesting or the mods do some moderation (and if I get an infraction for this, I wouldn't be surprised).

And if you were versed on the rules, you know you cannot call the mods out.

There is only one venue for posting moderator complaints. Moderator Discussion forum.

Here, brush up on the rules.

https://forums.anandtech.com/threads/anandtech-forum-guidelines.60552/

Particularly numbers 12, 13.

esquared

Anandtech Forum Director

DrMrLordX

Lifer

- Apr 27, 2000

- 23,224

- 13,303

- 136

RX 358 is about performance per mm2.

That might be interesting for internal benchmarking purposes, but I doubt the public (read: buyers) really wants data like that. You show me a commercial Vega64 product, like a reference card, and then you pit it against a reference 5700XT and show me the performance and power draw numbers. With all the board power oddities and other crap thrown in. That's what I want to see. I can't buy a 40CU Vega10 so why do I care about it?

guskline

Diamond Member

- Apr 17, 2006

- 5,338

- 476

- 126

Glo.

Diamond Member

- Apr 25, 2015

- 5,930

- 4,991

- 136

RX 358 is about performance per mm2.

The same slide is talking about power draw compared to Vega 64. Where in the same slide did AMD compare the power draw of the Navi GPU to Vega 56?

Nowhere. Because RX 358 talks about BOTH: the comparison of performance/watt and of performance/area. And the comparison for power is between the Navi GPU and a Vega 64 GPU that has been cut down to 40 CUs.

coercitiv

Diamond Member

- Jan 24, 2014

- 7,486

- 17,891

- 136

Friendly reminder that VII offers up to 40% higher perf/watt over Vega 64.

Guys, read Again the Footnotes. Slowly. Especially the one: RX-358. It talks about Vega 64 running 40 CU. In relation to the power comparison between Vega and Navi.

Why should I care about that contrived nonsense, involving a Vega SKU that never existed? I prefer to base my analysis on the hard numbers given elsewhere in the presentation. The "typical board power" for the RX 5700 XT is specifically listed as 225W. And if we can take the performance numbers provided at face value, the RX 5700 XT averages about 11% higher performance than the RTX 2070. We know from independent testing that (regardless of what Nvidia claims) the actual TBP of RTX 2070 Founders Edition is 200W. That means that if perf/watt of Navi was equivalent to Turing, then RX 5700 XT should be a 222W card. Since it's actually a few watts higher, this means that Navi has slightly worse perf/watt than Turing. If they were on the same node, that negligible difference would be fine, but Navi can barely keep up in perf/watt despite a full node advantage. That's the problem.
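The arithmetic behind the 222W figure, as a small Python sketch. The inputs are the numbers quoted in this post (225W typical board power, about 11% faster, 200W measured for the RTX 2070 Founders Edition), taken at face value; the names are only illustrative.

```python
def iso_efficiency_power(baseline_power_w, perf_ratio):
    """Power a card could draw while matching the baseline's perf/watt."""
    return baseline_power_w * perf_ratio

# Figures as quoted in the post above, not independently verified here.
rtx2070_tbp_w = 200.0    # measured TBP of RTX 2070 Founders Edition
rx5700xt_tbp_w = 225.0   # AMD's listed typical board power
perf_ratio = 1.11        # RX 5700 XT performance relative to RTX 2070

break_even_w = iso_efficiency_power(rtx2070_tbp_w, perf_ratio)
# Navi perf/watt relative to Turing: below 1.0 means slightly worse.
efficiency_ratio = perf_ratio / (rx5700xt_tbp_w / rtx2070_tbp_w)
print(f"{break_even_w:.0f} W, {efficiency_ratio:.3f}")  # 222 W, 0.987
```

On those inputs Navi comes out roughly 1 to 2% behind in perf/watt, so the conclusion is sensitive to whether the real board power is 225W or a few watts lower.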

IntelUser2000

Elite Member

- Oct 14, 2003

- 8,686

- 3,787

- 136

Glo.

Diamond Member

- Apr 25, 2015

- 5,930

- 4,991

- 136

Why should I care about that contrived nonsense, involving a Vega SKU that never existed? I prefer to base my analysis on the hard numbers given elsewhere in the presentation. The "typical board power" for the RX 5700 XT is specifically listed as 225W. And if we can take the performance numbers provided at face value, the RX 5700 XT averages about 11% higher performance than the RTX 2070. We know from independent testing that (regardless of what Nvidia claims) the actual TBP of RTX 2070 Founders Edition is 200W. That means that if perf/watt of Navi was equivalent to Turing, then RX 5700 XT should be a 222W card. Since it's actually a few watts higher, this means that Navi has slightly worse perf/watt than Turing. If they were on the same node, that negligible difference would be fine, but Navi can barely keep up in perf/watt despite a full node advantage. That's the problem.

Do you KNOW that 225W is the actual power draw of the 5700 XT? What if it is 215W? What if it is 217W?

coercitiv

Diamond Member

- Jan 24, 2014

- 7,486

- 17,891

- 136
