You note similar die sizes; the thing is, the 270X and the RX 480 do not have anywhere near the same transistor count, as they are on different nodes. The RX 480 has double the transistors of the 270X and is much closer to Hawaii. That makes sense, as they have nearly the same performance, and Hawaii production ended because the RX 480 took its place. You mention they cannot be compared because of pricing, but pricing changes, and to this day there has never been a replacement for Hawaii. We had Polaris, which matched its performance, and then a year later we had Vega, but its pricing was way above what the 390 sold for.

Not even sure why this has to be argued. When AMD claimed twice the perf/watt, they specifically referenced the 390, since the two had the same performance envelope but Polaris used almost half the power.

AMD itself compared Polaris 10 to the 270X, and that comparison is where their claimed 2.8x performance/watt increase came from. It has the same die size, fills the same market segment, and targets similar power consumption; it's the right comparison. The only problem with AMD's comparison is that they used TDP: they didn't actually measure the power consumption of the card under load, which is hard to excuse when review outlets are able to do it. Of course, this works out in their favor, because the 270X had a 180W TDP even though the card itself only consumes 110W at full load. They said the RX 470 had a 110W TDP (later changed to 120W), and it actually consumes 120W. There's a whole lot wrong with this: the 270X consumes 10W less than the 470, not 70W more, and once again, AMD is the one who made this comparison. I linked the relevant slide in my post above.

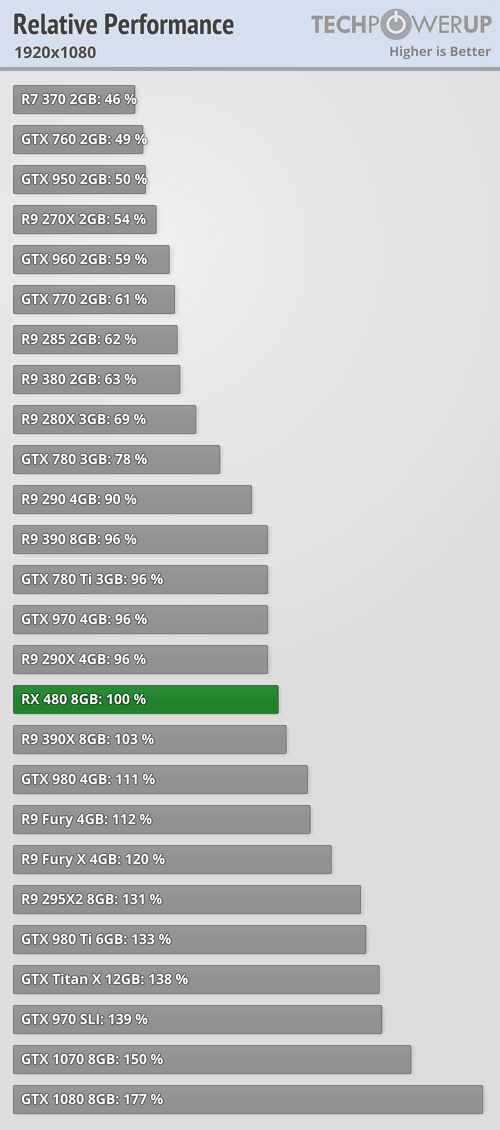
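To put rough numbers on how much the TDP swap matters, here's a quick back-of-the-envelope sketch in Python. It assumes (my assumption, not something AMD published) that the 2.8x figure was simply the performance ratio multiplied by the TDP ratio (180W / 110W); the helper name `perf_per_watt_ratio` is made up for illustration. The power figures are the ones from the paragraph above.

```python
# Hypothetical helper: perf/watt of a new card relative to an old card,
# with the old card's performance normalized to 1.0.
def perf_per_watt_ratio(perf_ratio: float, new_power_w: float, old_power_w: float) -> float:
    """Return (perf_ratio / new_power_w) / (1.0 / old_power_w)."""
    return perf_ratio * old_power_w / new_power_w

CLAIMED_RATIO = 2.8                          # AMD's headline RX 470 vs 270X perf/watt claim
TDP_270X, TDP_470 = 180.0, 110.0             # rated TDPs as stated in the post
MEASURED_270X, MEASURED_470 = 110.0, 120.0   # full-load power as stated in the post

# Assumption: the 2.8x claim = performance ratio * (TDP_270X / TDP_470),
# so back out the performance ratio it implies.
implied_perf_ratio = CLAIMED_RATIO * TDP_470 / TDP_270X

# Same performance ratio, two different power baselines.
tdp_based = perf_per_watt_ratio(implied_perf_ratio, TDP_470, TDP_270X)
measured = perf_per_watt_ratio(implied_perf_ratio, MEASURED_470, MEASURED_270X)

print(f"implied performance ratio: {implied_perf_ratio:.2f}x")
print(f"perf/W using TDP:          {tdp_based:.2f}x")
print(f"perf/W using measured:     {measured:.2f}x")
```

Under that assumption the implied performance gain is about 1.71x, and just swapping the TDPs for measured load power drops the perf/watt gain from 2.80x to roughly 1.57x, without touching the performance side at all.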

As for the 390, well, the RX 480's performance/watt advantage is only 70%. It gets a bit better with the reference 470 (an 87% advantage), though aftermarket 470s are less efficient. Either way, that's still not double, and it's certainly not 2.8x, which was the number AMD showed us most prominently.

I know it's kind of lame to relitigate this nearly three years later, but the fact is, Polaris was about 70% more efficient on average than the previous gen, about 100% at best (compared to the 280-class cards) and about 35% at worst. AMD's claimed 180% increase was, most charitably, an enormous stretch and, less leniently, an outright lie, and it's not something they've ever retracted or corrected.

Their use of a 6-pin power connector on the RX 480 was also a terrible idea. They wanted a smaller connector than the 970's, so they compromised the actual operation of their product (mildly, perhaps, but it absolutely would've been more stable with an 8-pin) just so they could say that, even though it means nothing: the 970 actually consumes slightly less power than the 480.

I just don't like any of what they did; at every turn they tried to hide what Polaris actually was from us. It wasn't honest and it wasn't consumer-friendly. All they had to do was say it had 1.7x better efficiency (or even 2x, if they explicitly compared it to the 280X) and put an 8-pin connector on the 480, and everything would have been perfectly fine. The RX 470 was actually quite great, but instead they lied to us. I'm not going to forget that in a hurry.

The closest you can get is the reference 470 vs. the reference 280X: a 2.15x improvement, but still not 2.8x, not a comparison AMD made, and not the right comparison, neither by performance (390) nor by market segment/die size/power target (270X).