OK, here we go. I don't have any new AAA titles to test, so this doesn't represent modern AAA gaming in any way. Also, average FPS doesn't tell the whole story. With the 10100F (and PCIe 3.0) I had issues in some games, with FPS dropping to single digits for a moment every now and then. This is a clear PCIe bandwidth issue. For example, in RDR2, when Arthur runs out of the store and shoots the first guard, FPS drops to nothing at that moment, every time. That doesn't happen on the same system with an 11400. And I know it's PCIe and not the CPU because it also happens with the 4700S, where I did some tests with the 6500 XT a few weeks ago. Assassin's Creed Origins is another game that is severely affected by PCIe bandwidth, even though VRAM should be enough.
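To show why a few single-digit dips barely move the average but wreck the lows, here's a rough Python sketch. It assumes you have a list of per-frame times in milliseconds and defines "X% low" as the FPS of the mean frame time across the slowest X% of frames. Capture tools don't all use the same definition (some use a percentile frame time instead), so this is an illustration, not how any particular tool computes its numbers. The `fps_stats` helper and the sample capture are made up for the example.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, 1% low, 0.1% low) for a list of frame times in ms.

    Hypothetical helper: "X% low" here means the FPS of the mean frame time
    over the slowest X% of frames; real capture tools may define it differently.
    """
    n = len(frame_times_ms)
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = n / total_seconds

    # Longest (slowest) frames first.
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low(fraction):
        # Take at least one frame, average its time, convert ms/frame to FPS.
        k = max(1, int(n * fraction))
        mean_ms = sum(slowest_first[:k]) / k
        return 1000.0 / mean_ms

    return average_fps, low(0.01), low(0.001)


# Made-up capture: 6000 smooth frames at 16 ms plus six 100 ms hitches.
frames = [16.0] * 6000 + [100.0] * 6
avg, low_1, low_01 = fps_stats(frames)
print(f"avg {avg:.1f} FPS, 1% low {low_1:.1f} FPS, 0.1% low {low_01:.1f} FPS")
# prints "avg 62.2 FPS, 1% low 41.0 FPS, 0.1% low 10.0 FPS"
```

Six hitches out of about 6000 frames pull the average down only from 62.5 to about 62.2 FPS, but they drag the 0.1% low to 10 FPS. That's the same kind of pattern as the 10100F Witcher 3 run (61.7 FPS average, 9.9 FPS 0.1% low).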

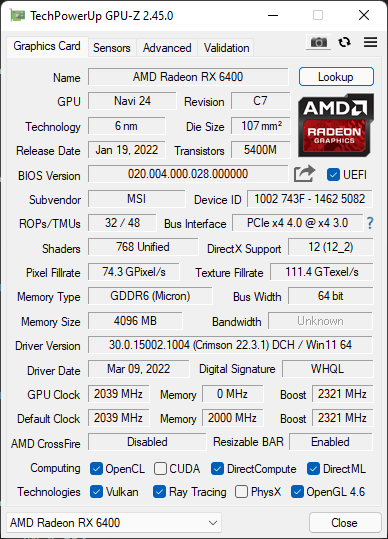

i3-10100F/16GB/MSI RX 6400/ASUS H510M-E

AC: Origins 1080p Very High

Cyberpunk 2077 1080p Medium

Far Cry 5 1080p Ultra

RDR2 1080p High (I didn't enable the advanced graphics settings)

Total War: Warhammer 1080p Ultra

Witcher 3 1080p Ultra HairWorks off

Average framerate : 61.7 FPS

Minimum framerate : 54.6 FPS

Maximum framerate : 67.1 FPS

1% low framerate : 43.2 FPS

0.1% low framerate : 9.9 FPS

Shadow of the Tomb Raider 1080p Highest

Metro Exodus 1080p High

GTA V 1080p Ultra MSAA x4

Average framerate : 65.0 FPS

Minimum framerate : 40.9 FPS

Maximum framerate : 94.5 FPS

1% low framerate : 41.0 FPS

0.1% low framerate : 40.4 FPS

i5-11400/16GB/MSI RX 6400/ASUS H510M-E

AC: Origins 1080p Very High

Cyberpunk 2077 1080p Medium

Far Cry 5 1080p Ultra

RDR2 1080p High (I didn't enable the advanced graphics settings)

Total War: Warhammer 1080p Ultra

Witcher 3 1080p Ultra HairWorks off

Average framerate : 65.1 FPS

Minimum framerate : 62.8 FPS

Maximum framerate : 68.6 FPS

1% low framerate : 54.6 FPS

0.1% low framerate : 47.8 FPS

Shadow of the Tomb Raider 1080p Highest

Metro Exodus 1080p High

GTA V 1080p Ultra MSAA x4

Average framerate : 65.1 FPS

Minimum framerate : 40.8 FPS

Maximum framerate : 99.2 FPS

1% low framerate : 40.9 FPS

0.1% low framerate : 40.5 FPS

Is there any way for me to be sure this thing has the full 16MB of Infinity Cache?

www.techpowerup.com
