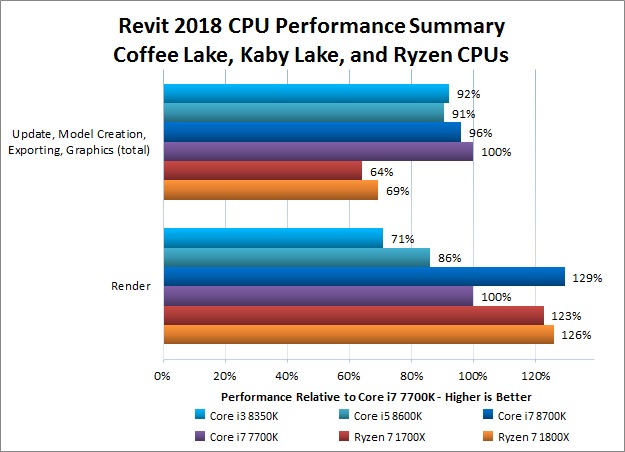

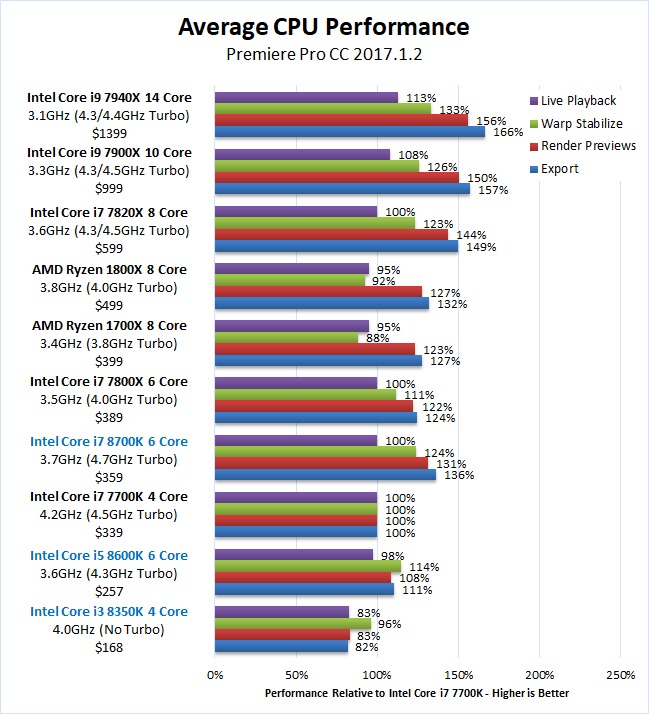

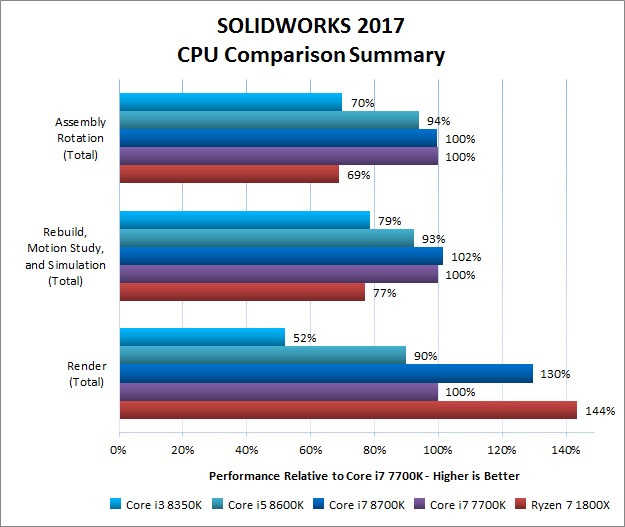

We are looking to replace 18 workstations that run a combination of Lightroom, SolidWorks, and Revit, and possibly Premiere Pro. All of the systems currently run Xeon E3-1225 v3s (Haswell, quad-core). My first thought was a Threadripper 1920X, a Ryzen 7 1800X, or Skylake-X (SKL-X). It's a pain in the ass finding reliable real-world benchmarks for these applications. I went through Puget Systems' articles last week and noticed that the AMD chips aren't doing well in the applications we will be using. What is causing the poor performance? Is it application optimization, or something else?

https://www.pugetsystems.com/all_articles.php