PCIe 3.0 could be crippling AMD's RX 5500 XT performance

While the bandwidth provided by PCIe 3.0 is sufficient for most GPUs with adequate VRAM, the 4GB Radeon RX 5500 XT appears to struggle, possibly because of its PCIe x8 limitation.

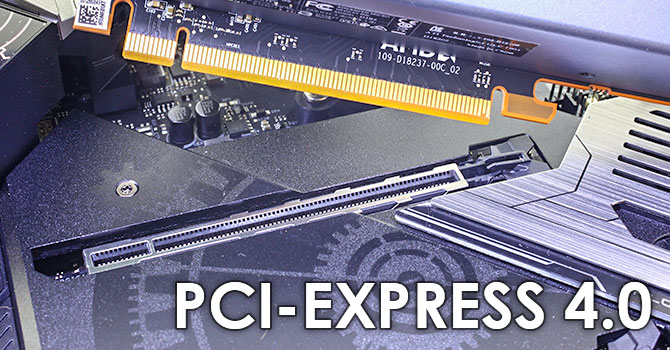

PCI-E 3.0 vs. PCI-E 4.0: What does PCI-Express 4.0 really bring with an RX 5500 XT?

What advantages does PCI-Express 4.0 really offer? PCGH tested an RX 5500 XT and the results are impressive!

PCGH is the source of the benchmarks, but since it's not in English, I'm linking a write-up on the findings as well.

This story popped up in my feed. I'm not too sure what to think of it yet and don't have much free time to really dig into it, but it's very interesting if true. Could it be a driver issue? I don't know. It seems like PCIe 3.0 x8 shouldn't be this restrictive, but maybe it is.
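
For a rough sense of scale, here is a quick back-of-the-envelope sketch of the theoretical link bandwidth. It assumes the published per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b encoding; the function name `pcie_bandwidth_gbs` is just something I made up for illustration. These are peak one-direction numbers, not measured throughput, so they can't tell us whether the driver is involved.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s.

    Assumes 128b/130b line encoding, which PCIe 3.0 and 4.0 both use.
    """
    encoding_efficiency = 128 / 130
    # GT/s per lane * efficiency gives usable Gbit/s per lane;
    # multiply by lane count and divide by 8 bits per byte for GB/s.
    return gt_per_s * encoding_efficiency * lanes / 8


gen3_x8 = pcie_bandwidth_gbs(8.0, 8)    # RX 5500 XT on a PCIe 3.0 board
gen4_x8 = pcie_bandwidth_gbs(16.0, 8)   # RX 5500 XT on a PCIe 4.0 board
gen3_x16 = pcie_bandwidth_gbs(8.0, 16)  # a full x16 card on PCIe 3.0

print(f"PCIe 3.0 x8:  {gen3_x8:.2f} GB/s")
print(f"PCIe 4.0 x8:  {gen4_x8:.2f} GB/s")
print(f"PCIe 3.0 x16: {gen3_x16:.2f} GB/s")
```

So on paper the x8 card gets about 7.88 GB/s on PCIe 3.0 versus about 15.75 GB/s on PCIe 4.0, which is the same as a full x16 card would get on PCIe 3.0. Whether that halving actually explains the benchmark gap is a separate question.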