- Mar 20, 2000

Quit being dicks. You two are talking past each other and even have large swathes of agreement, but because for some reason you see each other as an opponent who must be destroyed, you're incapable of seeing it.

AT Moderator ElFenix

Seriously, get a clue. I actually own the hardware and many of these games, so I speak from firsthand experience. I've gained as much as 150% or more with Doom on Vulkan over OpenGL in the game's most CPU-limited area, and the benchmarks will back up what I am saying.

Anyway, this is my last post addressing you because it's obvious you're just a troll.

Get your tone in order, especially when you are wrong on so many levels.

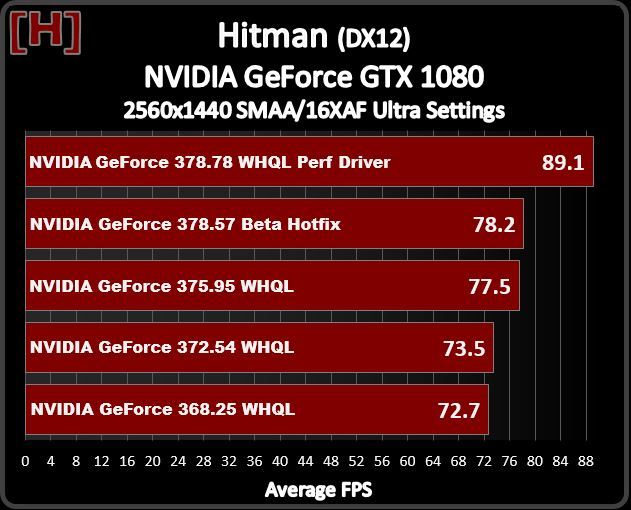

I own a GTX 1060 6GB and a GTX 1080, but I'm not going by my personal numbers (which actually confirm what I'm saying); I go by tests. There is literally a new thread right now about a Hitman DX11 vs. DX12 test in actual gameplay. In it you can see that AMD's card is on average about 5fps faster in DX12, while the Nvidia 1060 has about the same average but significantly lower frame rates in more complex scenes in DX12.

Again, check duderandom88, testinggames, artis, etc. on YouTube for gameplay tests, and you can see the 1060 6GB losing to the RX 580 8GB in pretty much all DX12 games. More importantly, Nvidia loses performance in most games when going from DX11 to DX12.

OpenGL was a crappy API. It has always performed terribly, usually 30%-50% worse than Microsoft's alternatives like DX11 or DX10. So of course you are going to gain performance going from OpenGL to Vulkan on Nvidia; you will even gain performance going from OpenGL to Vulkan on Intel integrated graphics.

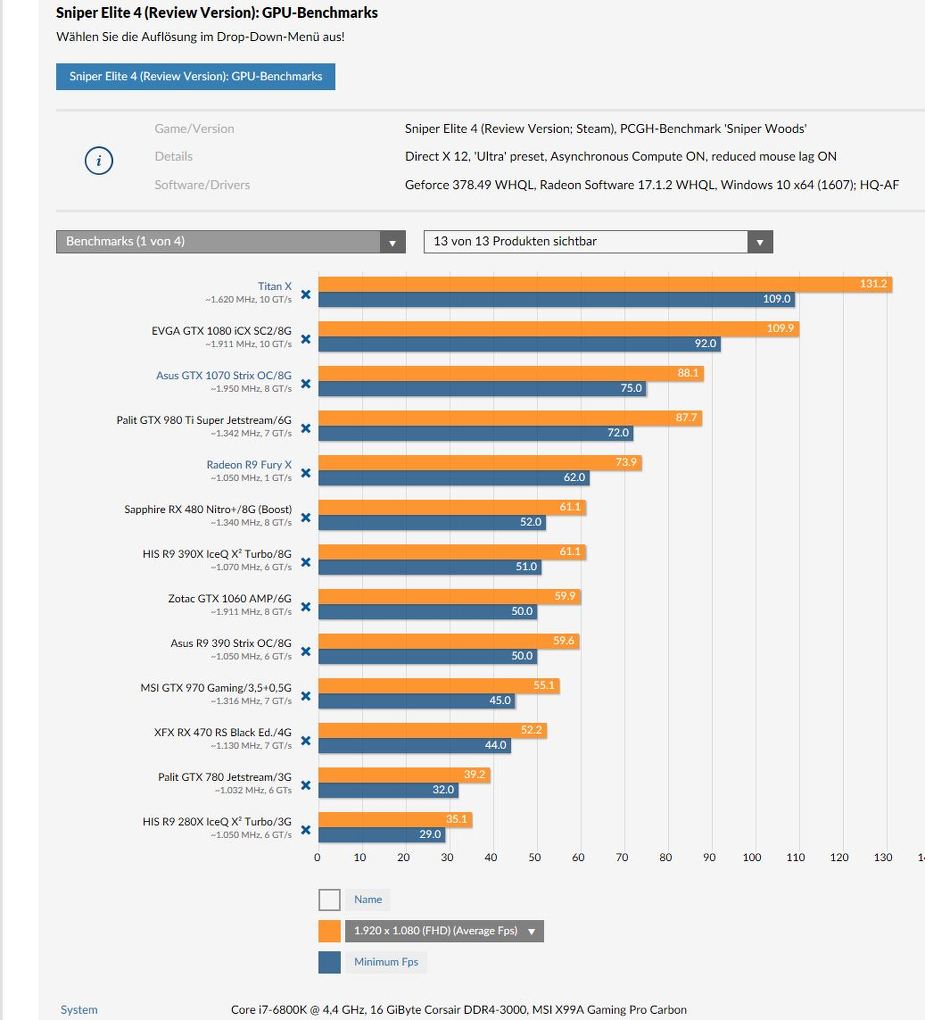

The facts are that Nvidia gains 1-4fps on AVERAGE in SE4 with DX12, sometimes up to 8-9fps more but sometimes that much less, while AMD gains 15-20fps in DX12 over DX11.

Hitman is a wash in gameplay, with about the same performance on Nvidia as in DX11. In every other game I've seen and tested, like Tom Clancy, Warhammer, Deus Ex, ROTTR, Battlefield 1, etc., Nvidia cards lose performance going from DX11 to DX12.