Krteq

Golden Member

- May 22, 2015

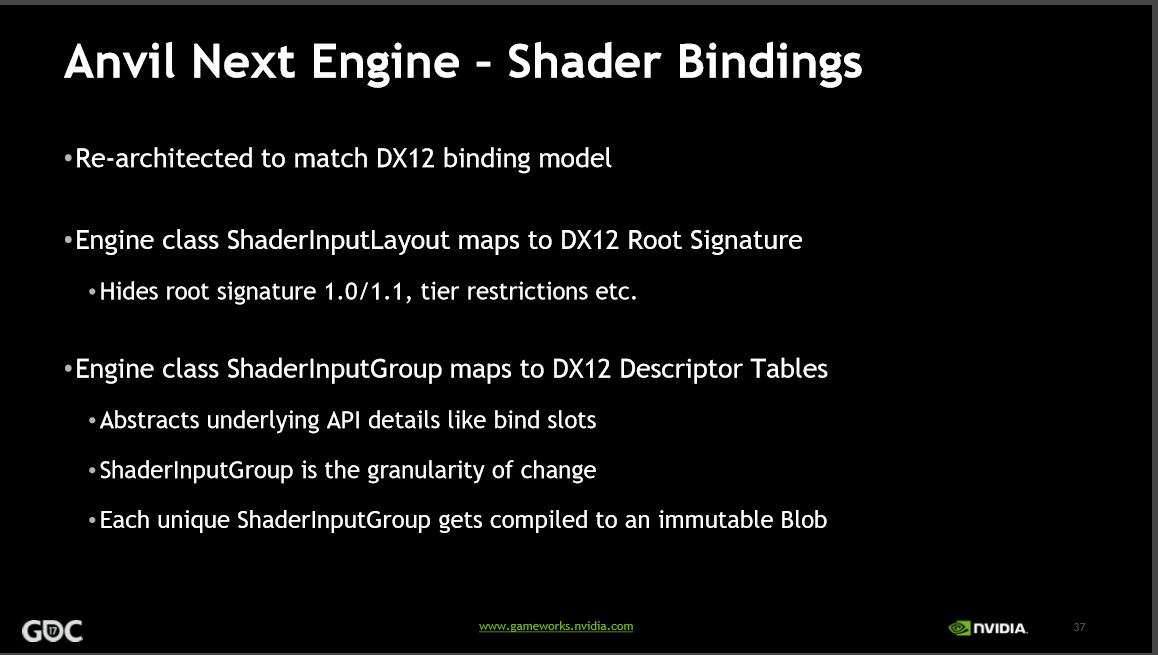

THX for explanation Zlatan

Because Microsoft changed the final resource binding specs too late. There was a lot of talk about what specs would be useful for the future, but the IHVs had to develop an implementation for the release of the D3D12 API. NVIDIA was just unable to react to the final changes, and this opens the door for some emulation. Their hardware is still not fully bindless, but they can emulate TIER_3 support with some overhead on the CPU side. But to do this, they need to write an emulation layer between the driver and the hardware, and this required a lot of code changes to their original implementation.

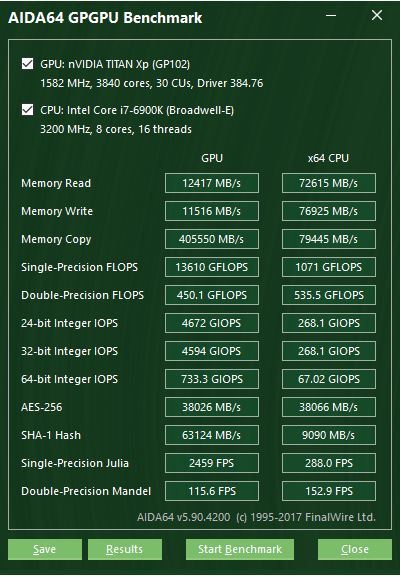

Can you somehow confirm that Paxwell cards have support for resource binding Tier 3 implemented now?