However, if you look at the first quote in post #432 (originally post #338), you will see that SATA SSD performs almost identically (if not identically) to NVMe NAND in most games* (and 4K video editing with an intermediate codec should perform the same on either a SATA SSD or an NVMe NAND SSD).

*Only one game showed a strong difference in performance.

Perhaps you want to revisit your links, because while they don't show as big a gain as synthetic tests do, the differences are still significant.

Here are the links (originally posted in #338):

https://forums.anandtech.com/threads/nvme-adapter.2556316/#post-39641478

https://forums.anandtech.com/threads/nvme-adapter.2556316/#post-39644028

https://forums.anandtech.com/threads/nvme-adapter.2556316/#post-39643985

If you look at the gaming results in those links, there is only one strong exception: in the last link, Call of Duty: Infinite Warfare loads more than twice as fast on the 960 EVO (11 seconds) as on the MX300 (25 seconds). The next-best result for NVMe NAND (also in the last link) has SATA SSD loading 23% slower.
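To make the "more than twice as fast" comparison concrete, here is a small Python sketch. It assumes "percent slower" means the extra SATA load time relative to the NVMe load time, and that load speed is simply the inverse of load time; the function names are illustrative only.

```python
def percent_slower(nvme_seconds: float, sata_seconds: float) -> float:
    # Assumed definition: extra SATA load time as a percentage of the NVMe load time
    return (sata_seconds - nvme_seconds) / nvme_seconds * 100


def relative_speed(nvme_seconds: float, sata_seconds: float) -> float:
    # SATA load speed as a fraction of NVMe load speed (speed = 1 / load time)
    return nvme_seconds / sata_seconds


# Call of Duty: Infinite Warfare load times from the third link
cod_nvme = 11.0  # 960 EVO (NVMe)
cod_sata = 25.0  # MX300 (SATA)

print(f"SATA is {percent_slower(cod_nvme, cod_sata):.0f}% slower")
print(f"SATA runs at {relative_speed(cod_nvme, cod_sata):.2f}x the NVMe speed")
```

With 11 s vs. 25 s, the SATA drive takes about 127% longer, i.e. it loads at 0.44x the NVMe speed, which is why this game counts as "less than half the speed".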

From the first link (the one with charts I can post), note the 850 EVO vs. the 970 EVO:

In two of the games the 850 EVO is identical to the 970 EVO (actually 0.1 seconds faster in one), and in the third game (Shadow of Mordor) the 850 EVO is only 6% slower.

Here are some more games from the first link:

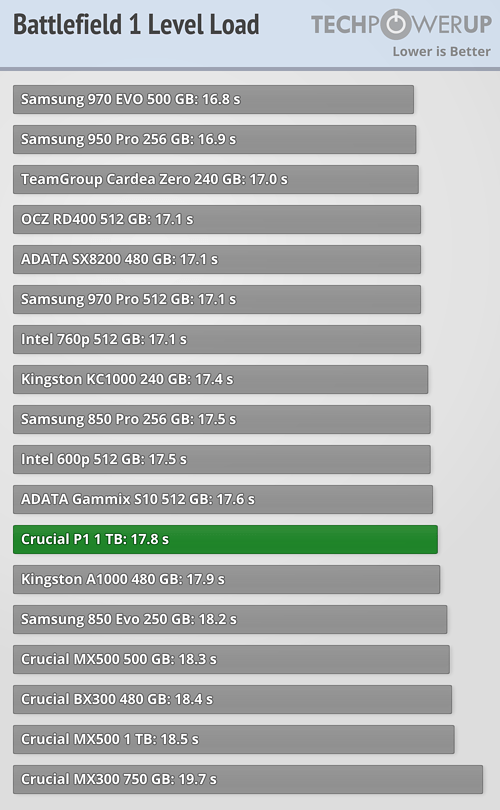

The 850 EVO is 8.3% slower than the 970 EVO in Battlefield 1.

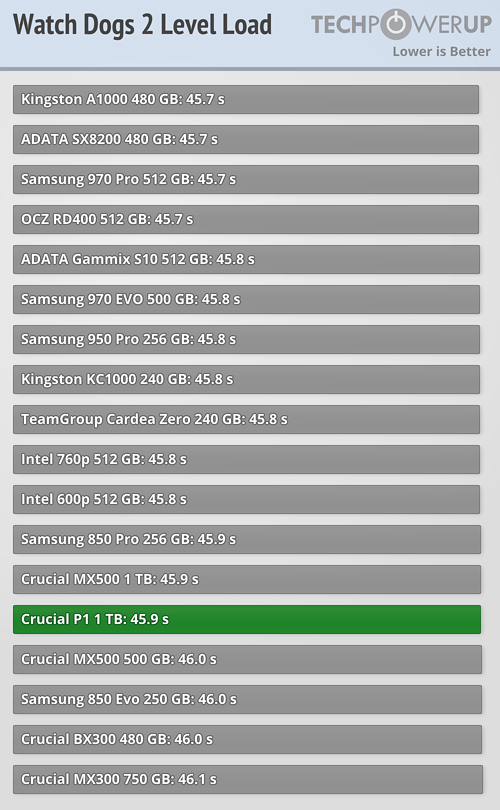

In the game above (Watch Dogs 2), the 850 EVO is only 0.7% slower than the 970 EVO.

And in the last comparison from link one we don't have the 850 EVO, but we do have the MX500 SATA SSD, which is a hair faster than the 970 EVO.

(So for link #1, four games show SATA basically identical to PCIe 3.0 x4 NVMe NAND, and in two games SATA SSD is only 6% and 8.3% slower. This is pretty much what I found in my YouTube video research*, which is in the second link at the beginning of this post.)

*In three of the YouTube videos, NVMe NAND was no faster than SATA SSD (or not by much) in all games tested. In the fourth YouTube video, some games loaded in about the same time on NVMe NAND and SATA SSD, but three results had SATA SSD slower by a larger margin than in the first three videos; even so, that margin was only 18%, 17%, and 9% for those three games.

The following videos showed NAND-based NVMe and SATA SSD either essentially the same or with little difference:

https://www.youtube.com/watch?v=EdF_aerWcW8

The video below had some titles loading in around the same time and a few with a larger difference (e.g., Battlefield 1 took ~46 seconds on NVMe and ~50 seconds on SATA SSD, Hitman ~12 seconds on NVMe and ~14 seconds on SATA SSD, and Rainbow Six Siege 6.11 seconds on NVMe and 7.10 seconds on SATA SSD).

https://www.youtube.com/watch?v=GKv8cAaJgqs
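The percentages for the three titles with a larger gap in that video can be worked out from the quoted load times. This is a minimal Python sketch; it assumes "percent slower" is the extra SATA load time relative to the NVMe load time, and the Battlefield 1 and Hitman times are the approximate (~) values read from the video.

```python
def percent_slower(nvme_seconds: float, sata_seconds: float) -> float:
    # Assumed definition: extra SATA load time as a percentage of the NVMe load time
    return (sata_seconds - nvme_seconds) / nvme_seconds * 100


# Load times in seconds from the video above, as (NVMe, SATA SSD)
load_times = {
    "Battlefield 1": (46.0, 50.0),  # approximate values
    "Hitman": (12.0, 14.0),  # approximate values
    "Rainbow Six Siege": (6.11, 7.10),
}

for game, (nvme, sata) in load_times.items():
    print(f"{game}: SATA {percent_slower(nvme, sata):.1f}% slower")
```

These work out to roughly 8.7%, 16.7%, and 16.2%, i.e. about 9%, 17%, and 16%.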

Final tally on the games in the three provided links:

- 1 game where SATA is less than half the speed of PCIe 3.0 x4 NVMe (found in third link)
- 1 game where SATA is 23% slower than PCIe 3.0 x4 NVMe (found in third link)
- 1 game where SATA is 18% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 17% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 16% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 9% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 8.3% slower than PCIe 3.0 x4 NVMe (found in first link)
- 1 game where SATA is 6% slower than PCIe 3.0 x4 NVMe (found in first link)
- 1 game where SATA is 3.6% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 3.4% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 3.2% slower than PCIe 3.0 x4 NVMe (found in second link)
- 1 game where SATA is 1.6% slower than PCIe 3.0 x4 NVMe (found in second link)

And...

16 games where SATA is essentially identical in speed to PCIe 3.0 x4 NVMe!

So most games really do have almost identical (if not identical) load times on PCIe 3.0 x4 NVMe NAND and SATA SSD.
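As a quick sanity check on "most games", the tally can be summed in a few lines of Python. This is only a sketch: the Call of Duty result is entered as 127% slower (11 s vs. 25 s from the third link), and the 10% cut-off is an arbitrary threshold chosen here for illustration, not something taken from the tests.

```python
# SATA slowdowns vs. PCIe 3.0 x4 NVMe from the final tally, in percent.
# Call of Duty (less than half the speed) is entered as 127%, from 11 s vs. 25 s.
slower = [127, 23, 18, 17, 16, 9, 8.3, 6, 3.6, 3.4, 3.2, 1.6]
identical = 16  # games where SATA was essentially identical

THRESHOLD = 10  # assumed cut-off (percent) for "small difference"

total = len(slower) + identical
within_threshold = identical + sum(1 for p in slower if p < THRESHOLD)

print(f"{total} games in total")
print(f"{identical} essentially identical ({identical / total:.0%})")
print(f"{within_threshold} within {THRESHOLD}% ({within_threshold / total:.0%})")
```

Of the 28 games, 16 (57%) are essentially identical, and 23 (82%) are within 10%.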

P.S. I originally missed one game in which SATA was 16% slower than NVMe, but it is now included in the tally above.