You could remove the graphs from my post to make yours a bit shorter!

He said his budget was up to $350. That's how the discussion of the $400 GTX670 started.

See, that's what you are missing: the differences in architectures and what's happening in modern games today.

I am going to address all of these points below.

(1) Tessellation

For example, Crysis 2 on Ultra + DX11 adds tessellation automatically. Why do you think HD6900 series and HD7800 series get hammered so much? You can't have GTX670 and HD7850 perform the same in a "hypothetical scenario" as you mentioned, since there are 2 fundamental differences in the architecture that will ensure Kepler is faster: tessellation and FP16 textures, both of which are part of modern DX11 games.

Those 2 things are a large part of the reason why GTX670 smokes 7850 by such a large delta in modern games that use tessellation and FP16 textures (there are other reasons too, such as driver optimizations). If you need more evidence, tessellation and FP16 are huge reasons why 670 smokes 7970 in some DX11 games (just as HD7970's superior memory bandwidth allows it to lay waste to the 670 in bandwidth-limited situations, such as high resolutions with AA).

You keep dismissing tessellation as a non-factor, but it's part of DX11 games and is partly WHAT makes GTX600 series so fast in modern games that have it!

Here is the evidence:

How do you explain GTX670 being 39% faster and GTX680 being 51% faster than GTX580 in Crysis 2? It sure isn't related to memory bandwidth or pixel performance, which GTX580 has plenty of vs. 670/680. It also cannot be explained by texture performance, since that wouldn't explain why GTX670 is whupping the HD7970, which has gobs of texture performance.
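For anyone who wants to check "X% faster" figures like these against the charts, here is a minimal sketch of the arithmetic. The FPS values in it are placeholders I made up for illustration, not benchmark results, and `percent_faster` is just a helper name I picked.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than baseline card B, in percent."""
    if fps_b <= 0:
        raise ValueError("baseline FPS must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Placeholder numbers for illustration only (NOT taken from any review).
baseline_fps = 40.0
faster_fps = 55.6

print(f"{percent_faster(faster_fps, baseline_fps):.0f}% faster")
```

Note that the baseline matters: "A is 39% faster than B" is not the same as "B is 39% slower than A".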

The same holds if we revisit an older game such as Lost Planet 2:

So that's Tessellation covered.

(2) FP16 textures (64-bit textures)

I also noted another key advantage of the Kepler architecture: FP16 texture performance. You know which games use FP16 textures? The Dirt games, for example, which are based on the EGO engine:

Again in all 3 of these cases, HD7850 is horrendously outclassed by the 670 because of 2 things that Kepler architecture excels at:

1) Tessellation performance

2) FP16 next generation texture performance.

Now before you call it a fluke, I'll even prove it to you using older cards.

Look at the specs of GTX570 vs. GTX480.

- GTX480 has more memory bandwidth, more pixel & shader performance, and more VRAM, while GTX570 has only a tiny texture fill-rate advantage.

Now can you explain to me how in the world GTX570 can beat GTX480 by a whopping 5 FPS in Dirt 2 at 2560x1600? This should not happen under any circumstances based on their specs.

Do you know why? Because of the FP16 enhancements of GF110 over GF100. GF110 can perform 4 Texels/clock vs. 2 Texels/clock on GF100 with FP16 textures. It says it right here in the GF110 architecture breakdown. Dirt games use the EGO engine, which uses FP16 textures. Dirt 2 also has tessellation, which GF110 performs better at than GF100.
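To put rough numbers on that, here is a back-of-the-envelope sketch of the theoretical FP16 texel rate for both cards. I am assuming the 4 vs. 2 Texels/clock figure is per SM, and I am plugging in reference specs from memory (15 SMs on both cards, 700 MHz core on GTX480, 732 MHz on GTX570), so double-check those before quoting the result. The `Gpu` class and its field names are just something I made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    sm_count: int                      # assumed reference spec
    core_clock_mhz: float              # assumed reference core clock
    fp16_texels_per_clock_per_sm: int  # assumed to be a per-SM figure

    def fp16_texel_rate_gtexels(self) -> float:
        """Theoretical FP16 texel rate in GTexels/s (peak, not measured)."""
        texels_per_clock = self.sm_count * self.fp16_texels_per_clock_per_sm
        return texels_per_clock * self.core_clock_mhz / 1000.0


gtx480 = Gpu("GTX480 (GF100)", sm_count=15, core_clock_mhz=700.0,
             fp16_texels_per_clock_per_sm=2)
gtx570 = Gpu("GTX570 (GF110)", sm_count=15, core_clock_mhz=732.0,
             fp16_texels_per_clock_per_sm=4)

for gpu in (gtx480, gtx570):
    print(f"{gpu.name}: {gpu.fp16_texel_rate_gtexels():.2f} GTexels/s FP16")

ratio = gtx570.fp16_texel_rate_gtexels() / gtx480.fp16_texel_rate_gtexels()
print(f"GTX570 / GTX480 FP16 texel rate: {ratio:.2f}x")
```

Under those assumptions the "tiny" fill-rate advantage on paper turns into roughly double the theoretical throughput once the textures are FP16. This is peak math only; real games will not scale anywhere near that cleanly.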

GF110 has also improved tessellation performance over GF100. "NVIDIA has improved the efficiency of the Z-cull units in their raster engine, allowing them to retire additional pixels that were not caught in the previous iteration of their Z-cull unit. Z-cull unit primarily serves to improve their tessellation performance by allowing NVIDIA to better reject pixels on small triangles." ~ Source

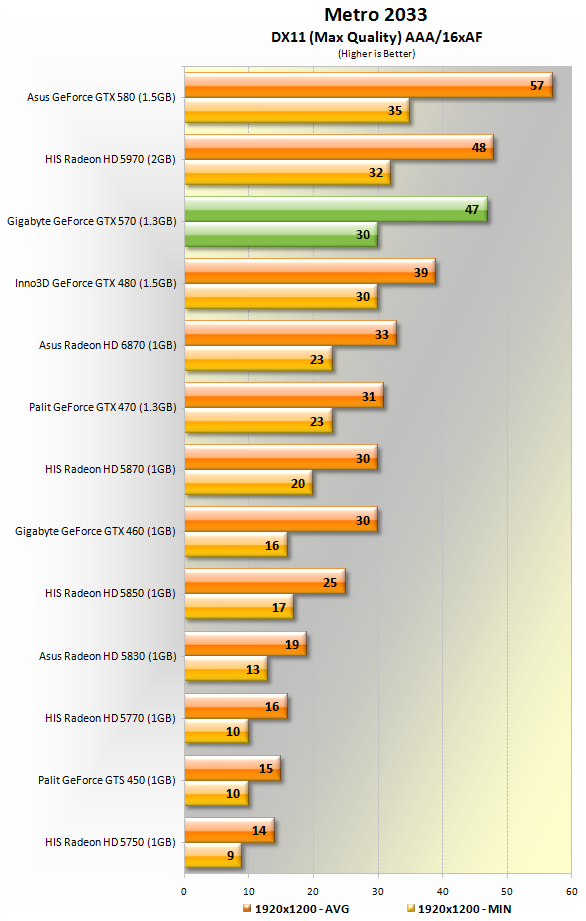

Ok so let's revisit tessellation with older cards in Metro 2033:

It should be impossible for GTX570 to beat GTX480 by that much. The answer?

Improved Tessellation in GF110.

(3) Deferred MSAA (Frostbite 2.0)

Ok so what about BF3? I think there is an explanation for that too. AMD's Cayman and Cypress took a larger performance penalty in deferred MSAA game engines than Fermi architecture did.

This was investigated and proven by Bit-Tech using Battlefield 3. Architecturally, I haven't been able to find an explanation, but it could just be that AMD stopped optimizing for MSAA as much because of MLAA. I don't know for sure. It looks like this hasn't changed much with GCN vs. Kepler, which is why Kepler wins against 7970 in BF3.

In summary, it is my view that Kepler architecture has all 3 facets that are necessary for next generation games covered:

1. Tessellation

2. FP16 textures

3. Deferred MSAA

All 3 of these are going to be trending for next generation games. This is why it's very likely that AMD is working on improving at least points #1 and #2 with GCN 2.0 / Enhanced, because in its current state GCN will fall apart rather quickly in next gen games.

Therefore, if I were betting, I'd say HD7850's performance will get much worse a lot sooner since it is lacking in all 3 of those areas. No amount of overclocking will save 7850. Whether or not that extra performance is worth $150 depends on the person and his/her budget and upgrade frequency.

I guess it comes down to when you think next generation games will have more tessellation and higher resolution FP16 textures. I think it's already happening, which is why I think the performance delta between 7850 and 670 will only grow larger.

HD7850 is already falling apart in Dirt Showdown (EGO engine), while HD7950 can't even outperform the 580. Notice GTX570 again outperforming the 480?

In conclusion,

People on our forum are often quick to jump to the notion of "NV-biased games". However, if you dissect the architecture and look under the hood of the technology, it's not as simple as "NV paid more $ to make this game run faster". It sure appears to me that NV made Kepler architecture a lot more advanced for games than GCN is in its current form.

Theoretical tests even show that the Kepler architecture does better in tessellation and FP16 textures, and in my opinion that's a large part of the reason why it's so fast in modern games despite its 256-bit memory bus.

Just my 2 cents. Feel free to present an opposing case.