These cards are definitely NOT going to be obsolete anytime soon, especially the high-end ones, i.e. the 670/680 and 7950/7970.

We have legit specs on the consoles and they are all going to be crap. We are getting another 8 years of consolitis.

DX11 is here to stay for a long, long time. It just seems GCN is the better DX11 architecture, and I have no doubt GK110 will be heaps better than GK104 in that regard.

They will be, insofar as the older cards are now. 20-25% faster is just a joke after two years.

My 9800 GT plays BF3 just fine. It's unbelievable how much arguing takes place over this awful generation.

It just seems GCN is competing with a mid-range Nvidia design with a 256-bit bus. Whether it's better or not seems an obtuse comment, since it's slower at 1080p and only slightly faster at 1600p, which is a trivial market segment no matter how loud they talk.

Did you notice any staggering IQ differences from the compute shader tax in these recent Gaming Evolved titles? You obviously didn't, and neither did anyone else.

Compute shaders allow programmers to code more efficiently, generally resulting in better image quality through various techniques, as well as improved performance.

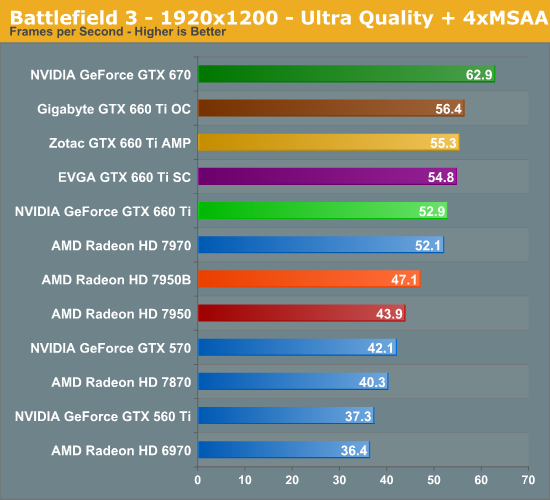

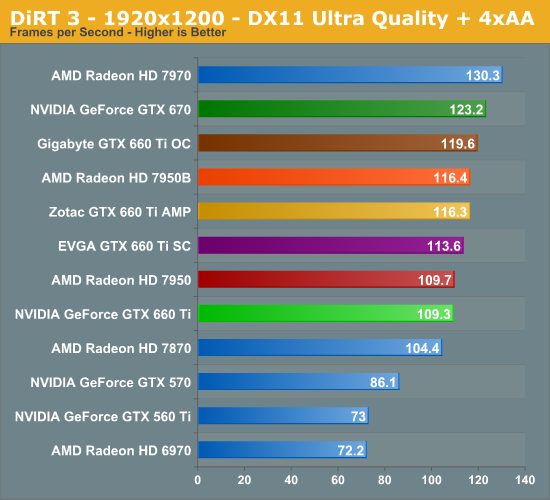

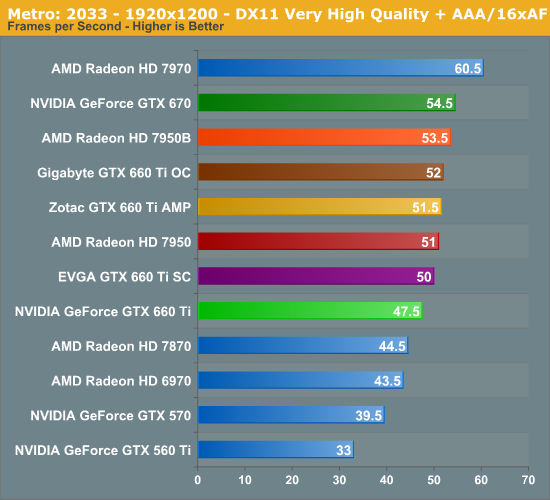

Except in Gaming Evolved titles, where the performance impact is quite large for everyone. Compute shaders are really just DirectCompute, which several titles use, such as Battlefield 3, DiRT 3, Civilization 5 and, of course, Metro 2033.

Now let's look at performance in those titles, because aside from DiRT 3 (which was released before AMD needed to create an advantage for their card), the rest of them aren't actually Gaming Evolved titles.

Nvidia seems to be doing fine in this DirectCompute 5.0 title.

Nothing wrong here either, a Gaming Evolved DC 5.0 title.

I'm noticing a pattern here.

Nothing odd took place here, so what's really going on?

Does GCN represent a vastly superior design against Nvidia's mid-range product? Or are we seeing AMD work closely with developers they're paying to maximize the bandwidth requirement, in a direct attempt that not only reduces their own performance for minimal IQ improvements but also cripples the bandwidth-starved mid-range Kepler cards?
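To put a rough number on "bandwidth-starved", here is a quick back-of-the-envelope sketch of peak memory bandwidth from bus width and effective memory data rate. The function name is made up for illustration, and the data rates (6008 MT/s for a reference GTX 680, 5500 MT/s for a reference HD 7970) and the 7970's 384-bit bus are published reference specs I'm assuming here, not figures from the posts above. Factory-overclocked cards will differ.

```python
def peak_bandwidth_gbs(bus_width_bits, effective_rate_mtps):
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width in bits (e.g. 256)
    effective_rate_mtps: effective GDDR5 data rate in mega-transfers/s
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_mtps * 1e6 / 1e9


# Assumed reference-card specs (not taken from the thread).
gtx_680 = peak_bandwidth_gbs(256, 6008)   # GK104, 256-bit bus
hd_7970 = peak_bandwidth_gbs(384, 5500)   # Tahiti, 384-bit bus

print(f"GTX 680: {gtx_680:.1f} GB/s")
print(f"HD 7970: {hd_7970:.1f} GB/s")
print(f"7970 advantage: {hd_7970 / gtx_680 - 1:.0%}")
```

This is only the theoretical ceiling. It says nothing about how much of it a given game actually uses, which is the part being argued about.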

You might be right, GCN might be the better DX11 design, or it could simply be a comparison of a $550-600 lackluster next-gen product against a mid-range next-gen product. Picture a 6970 vs. a GTX 560 Ti with an additional 30 W of TDP spent on clock rate, if you would. That's a comparable situation to this one.