lolfail9001

Golden Member

- Sep 9, 2016

- 1,056

- 353

- 96

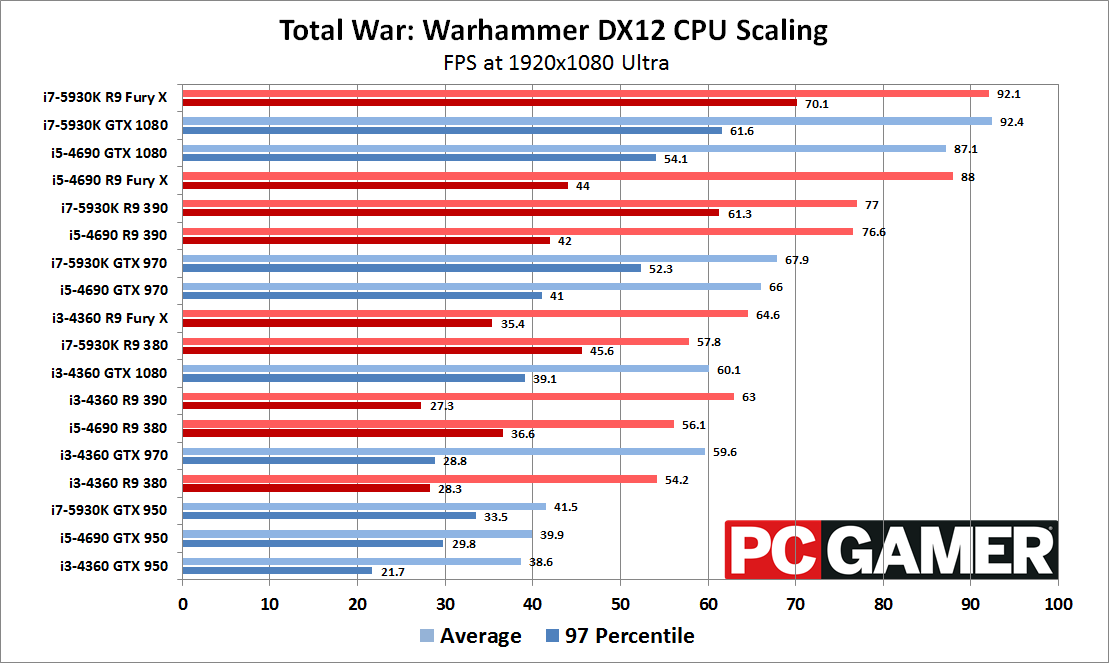

DX12 performance in general is a chaotic mess; trying to make sense of it only leads to lost sanity.

My only question is, if the Nvidia DX12 driver is crippling Ryzen, why doesn't it also cripple the 7700K? No idea.

/me bangs his head against the desk.

There could be something to it, because afaik Nvidia still doesn't really support async compute at the hardware level. Instead, what could be happening is that they have implemented a driver hack to force concurrent draw calls etc. to be serialized.

No, that hack is present for Maxwell only; Pascal does async compute perfectly fine.