Sorry, but you have to be completely daft to think the mirror tricks employed in those game screenshots are comparable to any form of real time ray tracing. The fact that you keep stating "it too reflects pixels" when NO REFLECTION CALCULATION is going on reinforces this baffling ignorance and makes me wonder if you're the one actually trolling here.

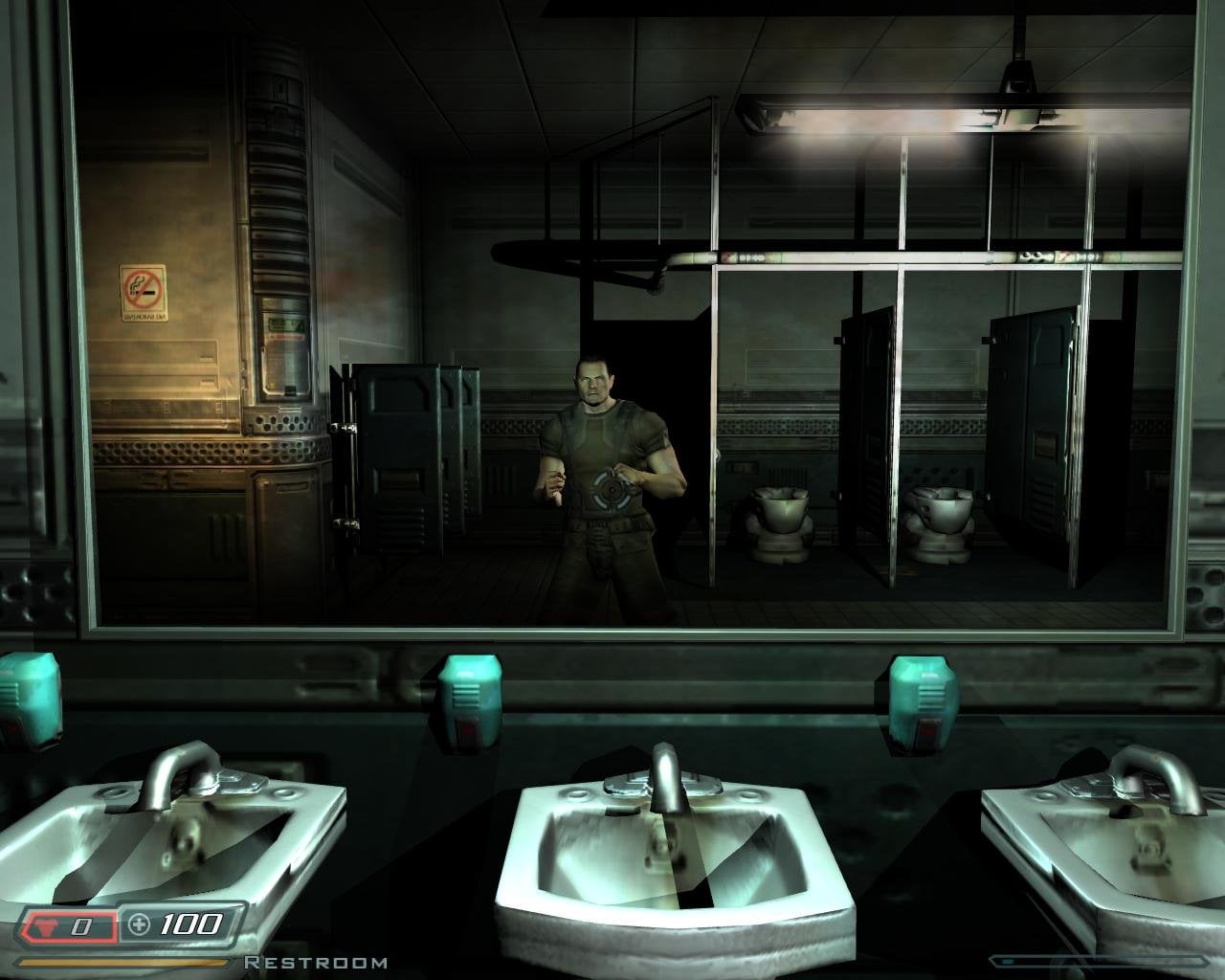

The DNF screenshot reinforces exactly what I said before. That is not a real time reflection, it's a projection. In fact, there is not even any dynamic lighting in that scenario; everything is baked, which makes reprojection for mirrors all the easier. Let me give you a quick lesson on mirrors and Unreal Engine, taken from the documentation itself.

"First you need to make your material, which should look like this:

Now if you try and put this material on an object and toss it in your world, it won't do reflections properly. This is because in video games it would be very costly to try and do reflections by ray-tracing. So instead we use what are called "Reflection Capture" elements. A "Reflection Capture" element is something that captures an image of the screen so that you can use it in your reflections, and allows the game to run much smoother while still using reflections in real time. Unreal Engine 4 has a very simple Reflection Capture system."

Do you understand that? Let me repeat....

Do you understand that? You need to take a static image of the surroundings to use as the basis for the reflection element, since the engine can't actually calculate the reflection in real time (that would be ray tracing).
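To make the difference concrete, here is a toy Python sketch. It is not Unreal's implementation; `reflect`, `hit_sphere`, `trace`, and the one-sphere scene are all names and data I made up for illustration. A real Reflection Capture stores a whole image of the surroundings, while this sketch stores a single baked sample, but the point is the same: the baked value is computed once, whereas the traced value is recomputed against the current scene.

```python
import math

def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the sphere, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(x * y for x, y in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-6 else None

def trace(origin, direction, spheres, background="sky"):
    """Colour of the nearest sphere hit by the ray, else the background."""
    best_t, best_colour = math.inf, background
    for center, radius, colour in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_t, best_colour = t, colour
    return best_colour

# Hypothetical scene: a mirror floor at y = 0 and one red sphere.
mirror_point = (0.0, 0.0, 0.0)
mirror_normal = (0.0, 1.0, 0.0)
s = 1.0 / math.sqrt(2.0)
view_dir = (s, -s, 0.0)            # camera ray arriving at the mirror
out_dir = reflect(view_dir, mirror_normal)

scene = [((5.0, 5.0, 0.0), 1.0, "red")]

# "Capture": evaluated once, up front, then only looked up.
baked = trace(mirror_point, out_dir, scene)

# Ray traced: evaluated against whatever the scene is right now.
traced_before = trace(mirror_point, out_dir, scene)

# Move the sphere out of the reflected ray's path.
scene = [((-5.0, 5.0, 0.0), 1.0, "red")]
traced_after = trace(mirror_point, out_dir, scene)

print(baked, traced_before, traced_after)
```

After the sphere moves, the baked lookup still claims the mirror shows the sphere, while the traced result follows the scene. That staleness is the limitation I'm talking about.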

Better yet, I'm done doing your research. You cite me one reference where actual reflections are calculated in real time on any iteration of Unreal Engine.

Also, I like your Doom 3 reference. Why? Because that cinematic jump scare scene required a complex hidden room build to pull off all of its effects. That's why it is the only section in Doom 3 that has mirrors. In fact, this is a common tactic that various other games, including recent ones, use to mimic a "reflection". Here's an explanation:

"Game developers have used all kinds of wild tricks over the years to simulate stuff like this. In games with mirrors, developers have created entire rooms behind the mirrors, in which a double is rendered and moves with the same inputs that our character does, just flipped, so that it looks like a reflection, but really it’s a mindless double, like some horrifying Twilight Zone horror movie nightmare.

With ray tracing, a lot of this goes away. You tell that table in the room how much light to absorb and reflect. You make the glass a reflective surface. When you move up to the window, you might be able to see your character if there’s enough backlighting."

https://www.technobuffalo.com/2018/09/06/why-ray-tracing-in-games-is-huge/
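For anyone wondering what the "double behind the mirror" trick amounts to, here is a minimal Python sketch, assuming a flat mirror described by a point on it and a unit normal. The function name `mirror_point` and the coordinates are hypothetical, not taken from any engine. Each frame the double is simply placed at the player's position flipped across the mirror plane:

```python
def mirror_point(p, plane_point, normal):
    """Flip point p across the plane through plane_point with unit normal."""
    # Signed distance from p to the plane, measured along the normal.
    dist = sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, normal))
    # Step back twice that distance to land on the other side.
    return tuple(pi - 2.0 * dist * ni for pi, ni in zip(p, normal))

# Hypothetical setup: the mirror is the plane z = 0, facing +z.
mirror_origin = (0.0, 0.0, 0.0)
mirror_normal = (0.0, 0.0, 1.0)

player = (1.0, 0.0, 2.0)
double = mirror_point(player, mirror_origin, mirror_normal)
print(double)  # (1.0, 0.0, -2.0)

# The player walks toward the mirror; the double follows, flipped.
player = (1.5, 0.0, 0.5)
double = mirror_point(player, mirror_origin, mirror_normal)
print(double)  # (1.5, 0.0, -0.5)
```

Notice that no light is followed anywhere in this. It is a second copy of the character standing in a second room, which is why the trick only works for flat mirrors in carefully built scenes.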

Listen, it's one thing to try and dismiss RTX, but using these examples is not the way to do it, because they're fake effects and very limited in their application. None of these projection methods can reflect light off a vase, for example. So yeah, if you bring up "20 year old tech" that "can do it" when it clearly can't, I'm gonna call you out on it.

In fact, go ahead and make a thread on the Unreal Engine, Unity, or any other engine forum and state that these mirror effects are just as good as real time ray tracing and that old Pentium hardware has been doing them for 20 years. Let's see the responses you get.