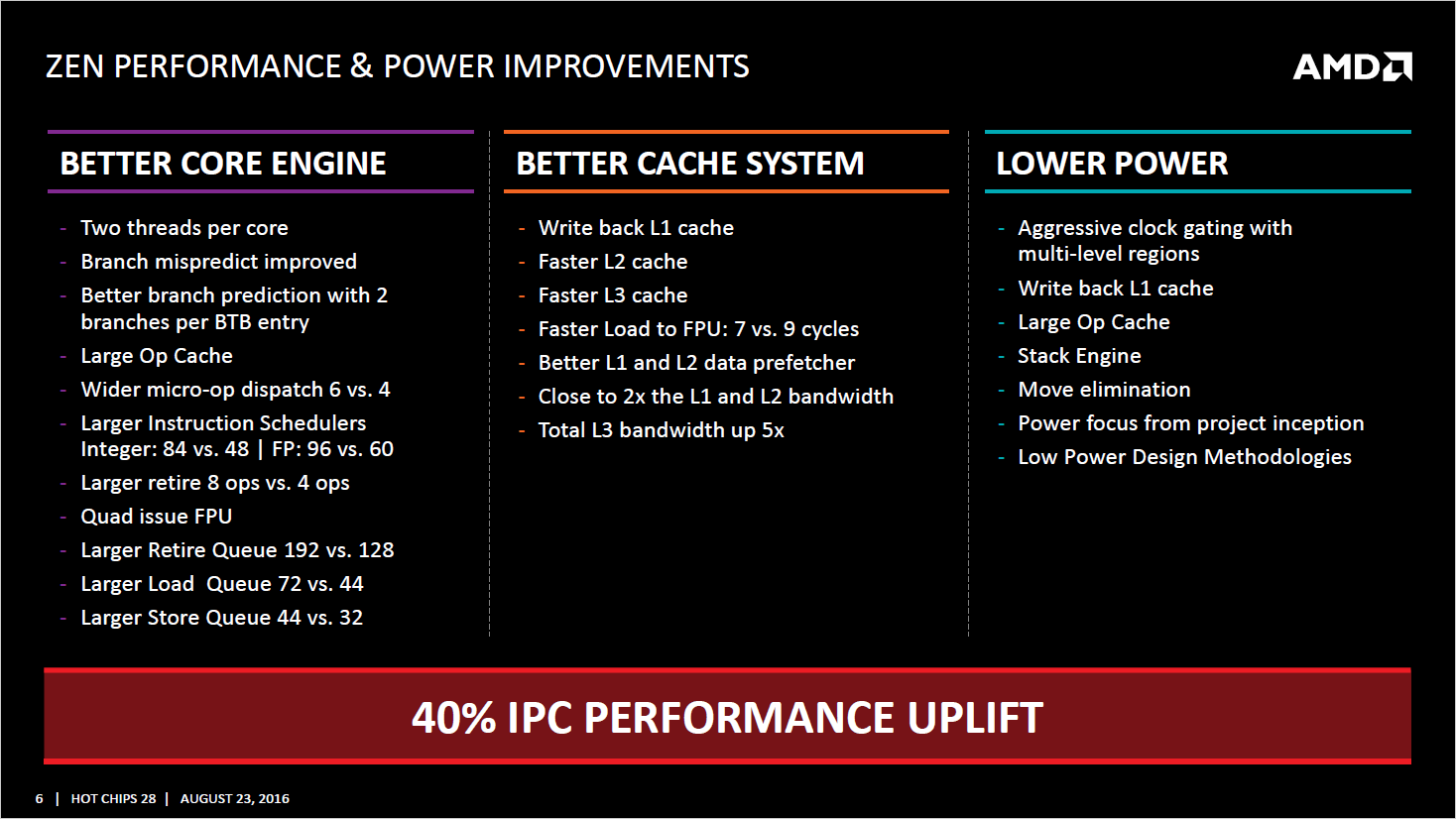

Because you have to SUSTAIN that cycle after cycle for it to make a difference. Both can only decode 4 x86 ops per cycle, and most x86 ops are 1 uop on both. So for all these extra ports to matter you have to be able to feed them, and neither Zen nor Skylake can feed more than ~6 uops per cycle from the uop cache or 4 from decode.

They also both have about the same amount of L/S resources and all the other structures I mentioned. In FP workloads, for example, you will see a very high percentage of ops being loads or stores; with two threads that will bottleneck both Skylake and Zen before port congestion does.

Now you need to find me this workload that is both scalar- and SIMD-heavy concurrently, has a minimum IPC of 2, and is the perfect fit for SMT without bottlenecking the L/S system.

The perfect example of why all this theoretical super-high concurrent port usage doesn't matter is actually 256-bit AVX on SB/IB vs Haswell. Both have the same amount of execution width, but Haswell is significantly faster in those FP-heavy workloads because for 256-bit ops it has twice the load and store bandwidth.

So answer me: how is Zen going to SUSTAIN 8 128-bit reads and 4 128-bit writes a cycle when it can only get 2 reads and 1 write a cycle? It's very common to see FP workloads with >50% of operations being loads or stores.
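To put rough numbers on the load/store argument, here is a minimal Python sketch. It assumes a simple bottleneck model (each resource caps total throughput at its rate divided by the fraction of ops that need it), not a cycle-accurate simulation. The function name `sustained_ops_per_cycle` and the 40%/15% instruction mix are hypothetical; only the 2 reads + 1 write per cycle and the ~6 uops per cycle figures come from the posts above.

```python
def sustained_ops_per_cycle(load_frac, store_frac, loads_per_cycle,
                            stores_per_cycle, front_end_width):
    """Upper bound on sustained ops/cycle under a simple bottleneck model.

    Each resource limits total throughput to (resource rate / fraction of
    ops that use it); the tightest limit wins.
    """
    bounds = [front_end_width]
    if load_frac > 0:
        bounds.append(loads_per_cycle / load_frac)
    if store_frac > 0:
        bounds.append(stores_per_cycle / store_frac)
    return min(bounds)


# Hypothetical FP-heavy mix: 40% loads, 15% stores (>50% memory ops in total).
bound = sustained_ops_per_cycle(0.40, 0.15, loads_per_cycle=2,
                                stores_per_cycle=1, front_end_width=6)
print(f"{bound:.2f} ops/cycle")
```

With that assumed mix the load rate is the tightest limit, so under this model adding execution ports behind it would not raise the bound.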

There are workloads with more operations per piece of data. Example: there are sub-benchmarks of SPEC FP 2006 that reach an IPC of up to 2.4 (one thread). So 2 threads need 4.8 instructions per clock on average. Even not counting conflicts, Intel's pipelines are not enough for the peak, just enough for the mean. Zen's pipelines probably are.

You don't need to tell me how an x86 processor works. You also do not have 10 uops a cycle, you have 6: up to 6 to int and up to 4 to FP.

edit: before you try to claim it's additive to 10 uops, please explain why Michael Clark says they have wider retire than dispatch because it helps to clear out the retire queue and get more instructions in flight. Is he lying?

10 uops because we have 4 ALU, 2 AGU and 4 FP pipes. I was talking about the execute stage. I know that dispatch can be up to 6 uops/cycle, of which max 4 FP. Also, 10 can't be sustained because the retire rate is 8 uops/cycle. But 6 uops/cycle can be sustained. For Intel it depends: because of int/FP pipe conflicts there could be slowdowns that can't happen on Zen.
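The peak-vs-sustained distinction in this post can be sketched in a few lines of Python. The widths are the ones quoted in the post and are taken as given; the dictionary and the helper `sustained_width` are hypothetical names, and the model (steady-state throughput equals the narrowest stage) ignores stalls and instruction mix.

```python
# Per-cycle widths quoted in the post above (Zen); treated here as given.
stage_width = {
    "dispatch": 6,   # up to 6 uops/cycle, of which at most 4 FP
    "execute": 10,   # 4 ALU + 2 AGU + 4 FP pipes
    "retire": 8,     # uops retired per cycle
}


def sustained_width(widths):
    """Return the narrowest stage and its width (the steady-state limit)."""
    stage = min(widths, key=widths.get)
    return stage, widths[stage]


stage, width = sustained_width(stage_width)
print(f"bottleneck: {stage}, sustained <= {width} uops/cycle")
```

Under this simplification the 10-wide execute stage only matters for absorbing bursts; the long-run rate is set by dispatch.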

EDIT: Zen is designed for the peak. SKL will clog near maximum pipeline utilization. Zen will go smoothly. Do you know queueing theory? When approaching max capacity, the response time increases sharply and non-linearly.
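For anyone who wants to see the queueing effect in numbers, here is a small Python sketch using the textbook M/M/1 result, where mean time in the system is the service time divided by (1 - utilization). Treating a CPU pipeline as an M/M/1 queue is a loose analogy and an assumption of this sketch, not something measured on Zen or Skylake; the function name `mm1_time_in_system` is hypothetical.

```python
def mm1_time_in_system(service_time, utilization):
    """Mean time in an M/M/1 queue (waiting + service): S / (1 - rho)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1) for a stable queue")
    return service_time / (1.0 - utilization)


# Normalized service time of 1.0, so results read as multiples of it.
for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
    t = mm1_time_in_system(1.0, rho)
    print(f"utilization {rho:.2f}: {t:6.1f}x service time")
```

In this model the growth is hyperbolic, 1/(1 - utilization), so it stays modest at moderate load and blows up as utilization approaches 1.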