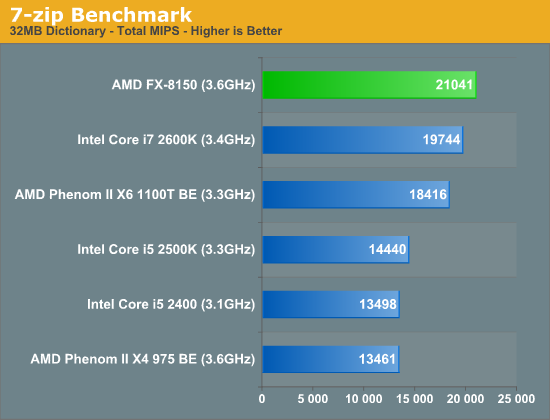

No. It kind of reminds me of this benchmark:

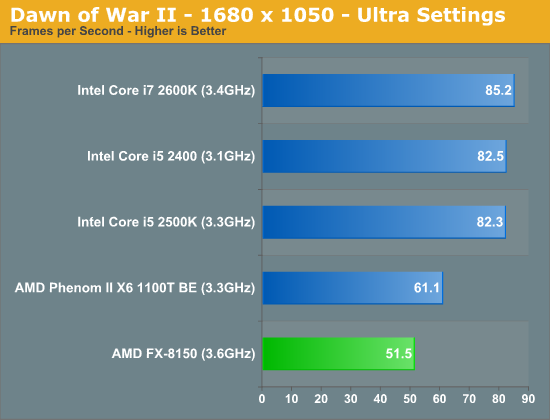

Oh look at that, Bulldozer was competitive with sandy bridge! Until you load up a game, then you get something like this:

Which was obviously a total disaster. A simple 10 second javascript benchmark is all it will take to tell us whether this disaster is going to repeat.

These "disasters" could be due to the substantial differences between core and bulldozer... Starting from the caches (L1 write through.... Brrrrrrrrr...)...

Now Zeh has same things that core, except few (that I will highlight later):

- fast writeback L1

- little (even if is bigger that INTEL's) and dedicated L2 cache, and INCLUSIVE and finally with a 2x256 bit bus...

- Big L3, with the same clock as the cores, victim cache and with big 2x256 bit bus

- uop cache

- SMT

- big buffers and mainly shared resources for SMT

Better than intel there are:

- separate int and FP scheduler: 4+4 vs 4 total for intel

- 2 fmul +2 fadd at 128 bit versus 2 256 bit pipelines: with legacy code this is and advantage

- l0 cache for stack operations

- separate, decoupled and speculative branch prediction

- bigger L2 and L3 with centralized switches versus ring bus: 8c Zen has average hops of 1.5 hops, while 8 core INTEL has at least 2 hops of average hops... If you see the MCC INTEL cores, with 1.5 rings the mean hops increase further. 10 core has at least 2.5 hops. But consumer 10 core are implemented with 1.5 rings and 15 core dices, which have greater mean hops...

Better than AMD:

- 2.5MB/core L3 cache, vs 2mb/core

- 2x256 bit FMAC vs 1x256 fmac. (but par with non fmac code, and lose with 128 bit non fmac code)

- quadchannel for the higher core parts

- 4x256 bit memory ports vs 3x128 bit memory ports

AMD should have better SMT especially in mixed int/fp threads (4+4 vs 4 total int+fp), but INTEL should be better in memory intensive tasks...

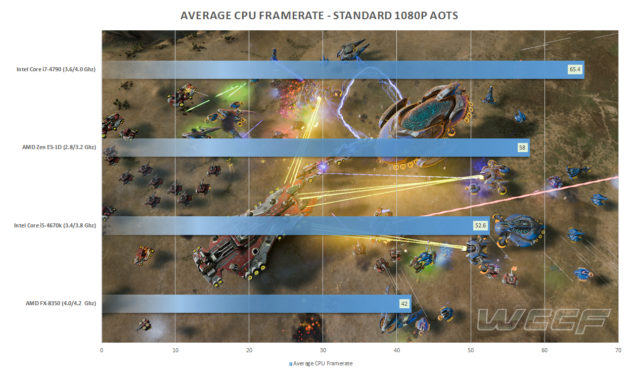

BD sucked in games for the poor L2 and L3 cache performance... Now L2 and L3 are on par or better than INTEL's...