So 3.6 GHz to 4.2 GHz on stock volts? What are the stock volts for that chip, anyway?

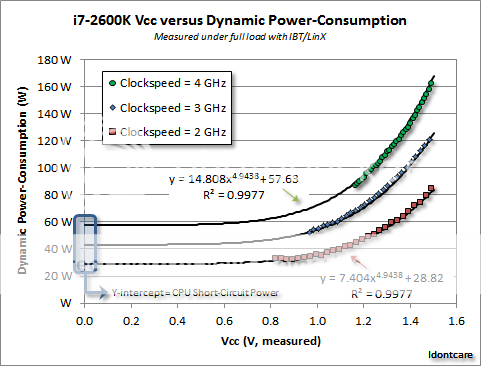

The power consumption is rising on that 2600K. Going from 3 GHz to 4 GHz, power consumption jumped ~30-40 W with an unchanged vcore. Not much, right? Well, the chip has a 95 W TDP, so that's a 30%+ bump in power consumption. The watts consumed go up, and they're absolutely noticeable. I'm not sure that graph is helping your cause here, dude.
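
Rough arithmetic behind that 30%+ figure, treating the 95 W TDP as the baseline the way the post does (TDP is a thermal rating, not a measured draw, so this is only a ballpark). The helper name `pct_of_tdp` is just for illustration:

```python
# Hypothetical helper: express a power increase as a percentage of a chip's rated TDP.
def pct_of_tdp(delta_watts: float, tdp_watts: float = 95.0) -> float:
    """Return delta_watts as a percentage of tdp_watts (95 W for the 2600K)."""
    return 100.0 * delta_watts / tdp_watts


# The ~30-40 W jump from 3 GHz to 4 GHz mentioned above.
for delta in (30.0, 40.0):
    print(f"+{delta:.0f} W -> {pct_of_tdp(delta):.1f}% of TDP")
```

So the low end of the range is already just over 30%, and the high end is past 40%.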

And that graph is linear, like I said. Look at the vcore adjustments and you'll see they're exponential =P
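
A simplified way to see why the vcore side climbs so much faster: in the textbook approximation, dynamic (switching) power goes as capacitance × voltage² × frequency. The sketch below assumes dynamic power dominates and ignores leakage, which also climbs with voltage and temperature, so real chips likely rise even more steeply. In this simple model the voltage term is quadratic rather than strictly exponential, but either way it outruns the linear frequency term. The +10% vcore figure is a hypothetical example, not a measured 2600K setting.

```python
# Simplified CMOS dynamic-power model: P ~ C * V^2 * f.
# Assumption: dynamic power dominates; leakage is ignored.
def relative_power(freq_ratio: float, volt_ratio: float) -> float:
    """Power relative to a baseline, given frequency and voltage ratios to that baseline."""
    return freq_ratio * volt_ratio ** 2


# Frequency alone, vcore unchanged (3 GHz -> 4 GHz): power scales linearly with clock.
print(relative_power(4.0 / 3.0, 1.0))

# Hypothetical +10% vcore on top of that: the voltage ratio gets squared.
print(relative_power(4.0 / 3.0, 1.10))
```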
