
Intel Skylake / Kaby Lake

Page 509


CHADBOGA


Why? The only purpose for an IGP on Skylake-X would be as a power-saving feature, where it puts the GPUs in a low-power mode and runs off the IGP... (oh wait, Nvidia already does that...)

So no... the IGP is pointless on Skylake-X.

Unless you're a virgin purchaser, meaning you have nothing to recycle, you should have a previous-gen GPU to sit on.

I am effectively a virgin purchaser and am using the IGP in my Ivy Bridge as we speak.

If Half Life 3 came out, then I would likely be inspired to buy a discrete GPU.


I just saw the available mobos on my local e-shop, and what the hell is wrong with Gigabyte? They would be my go-to choice, if only the 3 boards available so far (Aorus Gaming 7, 9 and Ultra Gaming) weren't actually the top 3 most expensive!? The Aorus 7 is 456 Euros, when I can have a TUF Mark 2 for slightly less than 250.... what is this?

It's called a harsh markup for early adopters.

Retailers do it to make the most profit at launch, and then prices pretty much settle back down to normal.

The problem with these boards is that I never trust Intel at prelaunch, or boards hastily passing QC, so I bet a lot of them will be plagued with problems. This is why I asked earlier when the mature boards will come out.

My 2 cents: unless you absolutely want it, or you're a blogger/YouTuber who needs the visitor counts from showing off Skylake-X at launch, it's best to wait for the mature stuff to come out. Trust me, board vendors are slow as snails with BIOS updates.

I was looking at these, but the motherboard prices are absolutely crazy. The cheapest mobos are $260!? Most are over $300!

Factoring this into the overall cost, I'm definitely going to wait for Threadripper..

You know it's not really fair to compare launch scalping prices.

An X390 board retailed at 699.99 at some places.

And if you want to get really technical... you can't even buy an RX 580 now without paying 1080 Ti prices, and we know which is the better value unless you're a coin miner.


Well, I don't think Skylake-X is in your prospects then...

I think Coffee Lake would be more up your alley, as it has an IGP and most likely a more acceptable price tag for its performance per dollar vs Skylake-X.

Keep in mind that Coffee Lake should be roughly 2/3 the cost of Skylake-X, if not 1/2.....

Skylake-X is enthusiast, whereas Coffee Lake is mainstream.

If you're after the core count and don't use a dedicated GPU, that tells me you're probably more on the raw enterprise side, and I believe enterprise boards have onboard dedicated graphics to cover the lack of an IGP.

DrMrLordX


So, any guess when mature boards and the 7920 CPU will be out?

Um, October? Maybe? August for the 7920 (supposedly), but I do not think Intel can launch the 14c-18c parts on today's crop of motherboards.

LTC8K6


All of the R chips are BGA (Ball Grid Array) chips soldered onto the motherboard. They are not traditional socketed desktop chips; you can't buy them and build a system.

My bad, I mixed up R and HQ. There are several desktop CPUs with L4, albeit in limited release, but they do exist. I believe you are thinking of unlocked CPUs with L4?

List of "Desktop" CPU's with L4 Cache:

Haswell

- i7-4770R

- i5-4670R

- Xeon 1284LV3

Broadwell

- i7-5775C

- i5-5675C

- i5-5575C

- Xeon 1284Lv4

- Xeon 1278Lv4

Skylake

- i7-6785R

- i5-6685R

- i5-6585R

Skylake differs in that there are no desktop Xeons with Iris Pro? Frankly, I don't care too much about the iGPU past the point that it can drive 4K60 content.

I care more about using the L4 cache as a victim cache for the L3 cache. Skylake also introduced more ways of utilizing the L4 cache. It is prime time to have another "halo" Iris product for mainstream.

Edit: I use my iGPU mainly for OS X. Without my iGPU, upgrading to my 1070 would have been a nightmare, as Pascal support was nonexistent for a year. I hate adding extra drivers to my OS X build for fear of kernel panics (an old fear), so using the built-in HD driver kexts was such an easy fix.

The Xeon chips are low-power OEM server chips, of course.

There is no 5575C chip that I know of.

Those server chips seem to be either expensive, or OEM only, or both.

So you are left with just the two socketed Broadwell chips for desktops, the 5775C and the 5675C, that you could reasonably buy and make a desktop system with.


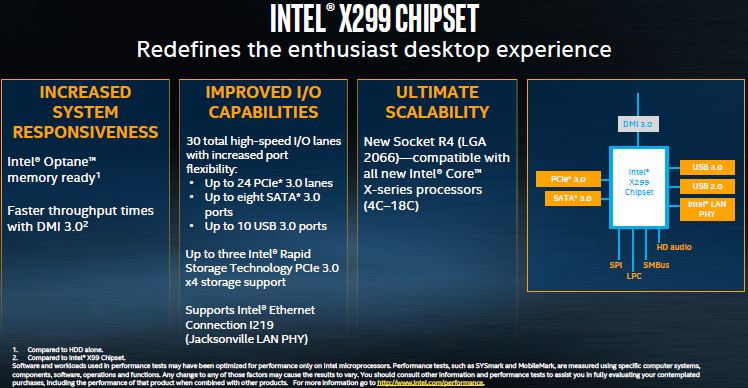

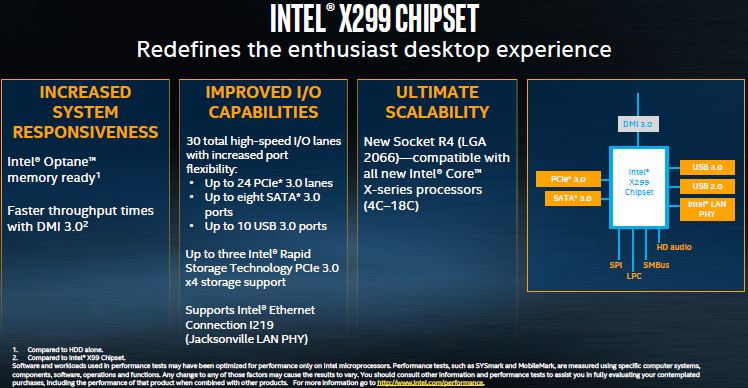

Socket R4 boards are compatible with all new X-series CPUs.

beginner99



Yeah, a 6-core Coffee Lake + L4 cache I would buy even if it cost more than a 7800K. That would be the ultimate gaming chip. I mean, the 5775C punched so far above its weight (GHz) solely due to the L4 cache. No clue why Intel doesn't include it in the i7, or hell, make an i9 desktop part by all means. I don't even care if it only has GT2 or no GPU, just put the L4 there. I wonder if that wouldn't also help tremendously with server chips...

I noticed a result that seems strange to me:

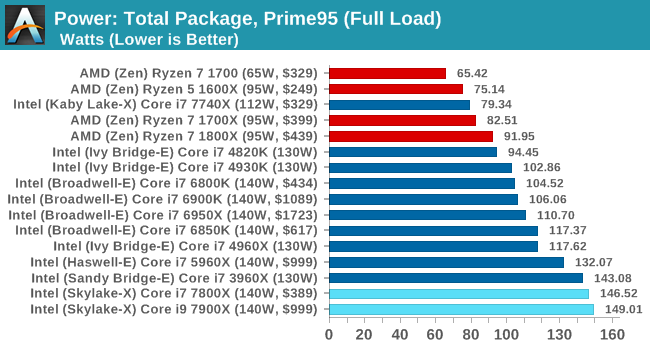

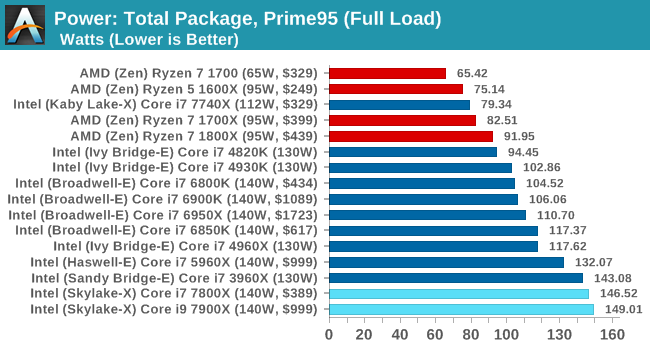

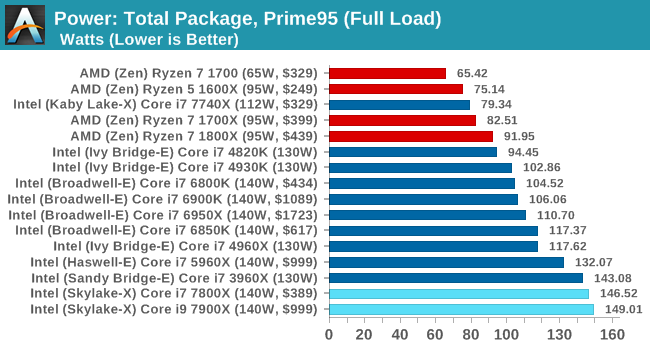

The 6-core Broadwell consumes 104 W, the 8-core 106 W, the 10-core 110 W, and the higher-clocked 6-core even 117 W.

On Skylake-X, the 6-core 7800X consumes 146 W and the 10-core 7900X 149 W.

Does the core count really have any impact on power consumption? The difference seems too small to me. I know the die is the same, but...
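The puzzle can be put as a number: the average extra power per added core, using only the wattages quoted in this post. This is a back-of-the-envelope sketch that assumes the readings are directly comparable and ignores any clock differences between the SKUs.

```python
# Power figures as quoted in the post (watts), keyed by core count.
broadwell_e = {6: 104, 8: 106, 10: 110}
skylake_x = {6: 146, 10: 149}  # 7800X and 7900X

def watts_per_extra_core(readings, low, high):
    """Average increase in measured power per additional core between two SKUs."""
    return (readings[high] - readings[low]) / (high - low)

print(watts_per_extra_core(broadwell_e, 6, 10))  # 1.5
print(watts_per_extra_core(skylake_x, 6, 10))    # 0.75
```

Under 2 W per extra core on both platforms, which is why the replies look for explanations other than core count alone (AVX-512 behavior, turbo and frequency differences).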


LTC8K6


How are we going to fit an 18-core CPU under the 165 W socket power constraint, though? We already seem to be at the limit with the 10-core chips.

Either the 18-core chip is going to run quite slowly, or there's something we don't know?

You don't need to guess the Intel Core i9-7980XE specs; it already exists as the Xeon Gold 6150: 2.7/3.4/3.7 GHz base/all-core/max frequency, 165 W.
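One way to see the concern is to divide the package power limit by the core count. This sketch assumes power splits evenly across cores and ignores uncore/mesh power, which in reality takes its own share of the budget; the 140 W (10-core) and 165 W (18-core) figures are the ones mentioned in this thread.

```python
def watts_per_core(tdp_watts, cores):
    # Even split of the package budget; uncore power is ignored.
    return tdp_watts / cores

print(round(watts_per_core(140, 10), 1))  # 14.0  (10-core at 140 W)
print(round(watts_per_core(165, 18), 1))  # 9.2   (18-core at 165 W)
```

Roughly a third less power per core for the 18-core part, which fits the much lower 2.7 GHz base clock of the Xeon Gold 6150.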


AVX-512

And that's why this means nothing without the load and time-to-complete info.

tamz_msc


Can't tell without knowing the version number.

IntelUser2000


It could be because core-to-core ping times are rather slow.

What's the uncore frequency? As I remember, it's quite low for Skylake-X. It would be directly responsible for the higher latency.

w3rd


So far I have read 4 reviews in full and skimmed another half dozen:

I am not convinced any of the websites or reviews are that overwhelmingly positive. They all give Intel's new platform and chips good reviews and really have nothing bad to say, but all the reviews seem bland, and more speculative about promised features or things to come.

But it seems to me that consumers have taken a back seat once again, as none of the reviews I've read are really reviews... they're previews.


IntelUser2000


With the 7700K we were paying for an IGP we didn't want, but at least we could use it if we wanted to. Now if we bought a 7740K, we'd be paying for an IGP that's disabled and unusable.

Why do people repeat this mantra? You aren't paying for it. The mass of users who need the iGPU pay for the minuscule physical cost difference and the initial R&D.

It costs way more to make another die that lacks the iGPU. It's the same reason they rebrand 6700/7700 chips as Xeon E3s and rebrand low-core-count Xeon E5s as HEDT. Another mistaken assumption is that the iGPU somehow contributes to power usage when disabled. It doesn't; it's power gated.

If it is this bad with the LCC die, I suspect it will be worse on the HCC and XCC dies.

I don't think so. The consumer chips need to run at 4 GHz+ to accommodate the users who need single-thread performance (or at least balanced performance).

The server chips run about 1 GHz lower than the consumer chips. Relative latency in cycles will be a lot better even with the same uncore speeds, because the CPU core frequencies are a lot lower as well.
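The relative-latency point can be made concrete: a latency that is fixed in nanoseconds (set by the uncore) costs more core cycles the faster the core runs. The 20 ns value below is a hypothetical placeholder, not a measured Skylake-X figure; 4.0 GHz and 2.7 GHz are the consumer all-core and Xeon Gold 6150 base clocks mentioned in this thread.

```python
def latency_in_core_cycles(latency_ns, core_ghz):
    # cycles = time (ns) * frequency (GHz, i.e. cycles per ns)
    return latency_ns * core_ghz

UNCORE_LATENCY_NS = 20.0  # hypothetical, for illustration only

print(round(latency_in_core_cycles(UNCORE_LATENCY_NS, 4.0), 1))  # 80.0
print(round(latency_in_core_cycles(UNCORE_LATENCY_NS, 2.7), 1))  # 54.0
```

The same nanoseconds amount to about a third fewer stalled core cycles at the lower clock, which is the sense in which the server parts look better in cycle terms.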

If Intel really gave you an enthusiast-oriented HEDT chip, they'd have made a scaled-up version of the 6700/7700K rather than a lower-core-count Skylake-SP.


Well, I must say I don't get it.

How come the Skylake-X 6-core consumes 146 W and the Skylake-X 10-core 149 W? A 3 W difference for 6 vs 10 cores, at the same frequency?

Each core has its own AVX-512 unit, or not?

IntelUser2000



Per AnandTech's review:

the 6-core and 8-core parts only support one FMA per core, whereas the 10-core supports two FMAs per core.

Given that, it's incorrect to attribute this to AVX-512. It also does not explain why the 10-core consumes relatively less, not more.

The reason for Turbo Boost is to use up your thermal headroom. Because they are all rated at 140 W, it's reasonable to assume the 6-core runs at higher frequencies (and voltage) to get as close to 140 W as possible, while the 10-core runs at lower frequencies and voltage because it can spread the work across more cores.

The Intel Core i7-7800X, i7-7820X, and i9-7900X all run at 4.0 GHz on all cores. Turbo Boost 2.0 does not try to maximize frequency until TDP is reached; it boosts to a predefined frequency based on the number of active cores, if power (irrelevant here because motherboards unlock the power limits) and temperature permit.

Why do the 7800X and 7900X have very similar power? The AVX[-512] offset.


IntelUser2000


We shouldn't forget what happened when Intel moved to the ring bus with Sandy Bridge. Nehalem's interconnect wasn't a mesh but a traditional point-to-point interconnect. Still, the differences were interesting.

(Techreport)

L3 cache latency Westmere: 43

L3 cache latency Sandy Bridge-E: 30

PCPer shows ~25% latency difference between BDW-E and SKL-X. It's easily due to the difference between ring-bus and the mesh.

While mesh/P2P sounds better than a ring, I'd assume that in practice, with low core counts (like in the consumer space) and very high clocks, a ring is much simpler and thus far easier to clock higher.

The idea behind Turbo Boost 2.0 is that there is a time lag between power consumption rising and a heatsink reaching thermal saturation. A heatsink takes some time before it can no longer handle its thermal load, but power consumption goes up instantly. This is easier to explain in a notebook, because it's heavily thermally constrained there, but the same mechanism applies to a desktop chip.

To keep it from going overboard and heating up too much, the system eventually reaches a steady state where power use is essentially identical to the TDP rating.
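The behavior described above can be sketched as a simple power-limit loop: the chip may draw a higher short-term limit (PL2) while an exponentially weighted moving average of package power, with time constant tau, stays below the sustained limit (PL1, normally the TDP). The PL1/PL2/tau names follow Intel's power-limit terminology, but this is a simplified model, and the 140 W / 175 W / 28 s values are illustrative assumptions rather than Skylake-X defaults. It also assumes a workload that always wants maximum power.

```python
def simulate_turbo(pl1, pl2, tau, seconds, dt=1.0, start_avg=0.0):
    """Simplified PL1/PL2 model; returns power drawn per step and the final running average."""
    alpha = dt / tau   # EWMA weight for one time step
    avg = start_avg    # running average of package power (W)
    drawn = []
    for _ in range(int(seconds / dt)):
        # Allow the short-term limit while the running average is under PL1.
        power = pl2 if avg < pl1 else pl1
        drawn.append(power)
        # The average lags instantaneous power, much like a heatsink warming up.
        avg += (power - avg) * alpha
    return drawn, avg

drawn, avg = simulate_turbo(pl1=140, pl2=175, tau=28, seconds=300)
burst = sum(1 for p in drawn if p == 175)
print(burst, drawn[-1], round(avg, 1))  # burst length (s), final power (W), final average (W)
```

After an initial burst lasting tens of seconds, the drawn power settles at PL1 and stays there, which is the steady state the post describes.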



The difference is that the ring bus increased latency with each core (or was it each pair of cores) added. The new mesh should allow stable and predictable latency throughout the whole lineup. This might mean worse latency on the LCC die, but the MCC and HCC dies should see improved latency compared to their Broadwell counterparts.

IntelUser2000



Exactly. Until the LGA115x platform goes to higher core counts, ring will be the better solution. The ring added just 1 cycle per hop, which is insignificant at low core counts considering the overall decrease.
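The scaling argument can be illustrated by counting the average number of hops between stops. This sketch assumes a single bidirectional ring (traffic takes the shorter direction) and a 2D mesh with shortest-path (Manhattan) routing; the 4x6 grid is a hypothetical layout, not Intel's actual floorplan, and per-hop costs are left out.

```python
from itertools import product

def ring_avg_hops(stops):
    """Mean hop count between distinct stops on a bidirectional ring."""
    total = 0
    for a, b in product(range(stops), repeat=2):
        if a != b:
            d = abs(a - b)
            total += min(d, stops - d)  # take the shorter way around
    return total / (stops * (stops - 1))

def mesh_avg_hops(rows, cols):
    """Mean Manhattan distance between distinct nodes of a rows x cols mesh."""
    nodes = list(product(range(rows), range(cols)))
    # Self-pairs contribute 0, so only the denominator needs to exclude them.
    total = sum(abs(r1 - r2) + abs(c1 - c2)
                for (r1, c1), (r2, c2) in product(nodes, repeat=2))
    n = len(nodes)
    return total / (n * (n - 1))

for n in (10, 24):
    print(n, "stop ring:", round(ring_avg_hops(n), 2))
print("4x6 mesh:", round(mesh_avg_hops(4, 6), 2))
```

Under these assumptions the ring's average distance more than doubles going from 10 to 24 stops, while a 24-node mesh needs roughly half as many hops as a 24-stop ring, which is why the ring only stays attractive at low core counts.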

I don't think it'll be a straightforward latency reduction either. In cases where a many-core part needed to "hop" to a different ring through a router, latency would have increased significantly. Within a ring, though, the differences won't be big. You'd still need to coordinate the L3 frequency across the whole chip, which means the mesh fares better in the within-ring case.

Really, this is what is meant by server-optimized versus client.


The question will be EMIB. There is the potential for them to use it on the consumer lineup if they feel they have to compete with AMD. 7nm Zen looks like it will use 12-core dies. If Intel hasn't already decided by then to create a higher-core-count die for consumers, they may resort to using EMIB to keep up. But then you are looking at them probably starting to drop the mesh and move to ring-bus dies connected with EMIB for most of the lineup, which, oddly enough, would be a middle ground between Ryzen and TR. The dies would perform like CCX modules: they would see increased latency between dies, and they'd need twice the dies to catch up, though EMIB is probably going to have a lot better latency than the current IF between MCM dies.

IntelUser2000



Ring bus for off-die communications? I don't know how that would work.

I'd think EMIB for consumers will just be used to connect the cores with the other parts. For example, you'd have 8 cores with the memory controller in one die and a beefy GPU in the other. 8 cores would be small on 10nm.

It'd still be cheaper for them to skip EMIB entirely and fit everything in one die, because the consumer space is very price sensitive. I mean, for a GT2 die they can put everything on one die rather than use EMIB.

Making something like the 5775C would be easier with EMIB, though. Assuming Cannonlake GT2 is 40 EUs, perhaps with Icelake they can have an EMIB version with Die 1: 8 cores + memory controller, Die 2: GT4 with 160 EUs, Die 3: HBM memory.

Or, since there's reasonable evidence to believe they might use AMD GPUs on the high end: Die 2: AMD GPU, Die 3: HBM.


AnandTech is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.