Arachnotronic (Lifer, joined Mar 10, 2006)

And Coffee Lake!

I don't know much about Coffee Lake, not sure if it's just the same SKL cores rehashed again or if it's updated cores in 14nm+.

Yes, for the K series, where you overclock, increasing clockspeed does not mean much if the overclocking frequency stays the same. But for mobile, where you can't overclock, a clockspeed increase is just as good as an IPC increase, assuming it can maintain the higher clockspeed.

I think what he's trying to point out is that if you equalize clocks between SKL and KBL, there's virtually no IPC increase. Which shouldn't be too surprising.
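To make the "equalize clocks" point concrete: to a first approximation, single-thread performance scales as IPC × clock frequency, so dividing a measured speedup by the clock ratio gives the implied per-clock (IPC) change. A minimal sketch, assuming the 3.1 GHz and 3.5 GHz maximum single-core turbo clocks Intel lists for the i7-6500U and i7-7500U, and assuming both chips actually hold those clocks during the run (sustained clocks under load can differ). The 15% input is a hypothetical example picked from the 14-16% Cinebench range quoted later in the thread.

```python
def implied_ipc_change(speedup: float, old_clock_ghz: float, new_clock_ghz: float) -> float:
    """Return the per-clock (IPC) change implied by a measured speedup.

    First-order model only: performance ~ IPC * frequency.
    A result near 0.0 means the clock increase alone explains the speedup.
    """
    clock_ratio = new_clock_ghz / old_clock_ghz
    return speedup / clock_ratio - 1.0


# Assumed max single-core turbo clocks in GHz (sustained clocks may be lower).
OLD_TURBO = 3.1  # i7-6500U
NEW_TURBO = 3.5  # i7-7500U

# Hypothetical input: a 15% single-thread speedup.
change = implied_ipc_change(1.15, OLD_TURBO, NEW_TURBO)
print(f"clock gain: {NEW_TURBO / OLD_TURBO - 1:.1%}")   # 12.9%
print(f"implied IPC change: {change:+.1%}")             # +1.9%
```

Under those assumptions almost all of a ~15% gain is clock speed, which is the "virtually no IPC increase" argument in numbers.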

Intel notes that it has the industry's highest transistor density, and since 14nm+ doesn't involve a lithography shrink, the density metric likely remains unchanged. Instead, Intel is optimizing its transistors by improving their fin profile with taller fins and a wider gate pitch. It's also improving the transistor channel strain.

Of course, Intel does not provide exact measurements for the new fin profile and gate pitch for comparison, but a glance at an IDF 2014 presentation illustrates the company's previous advances and the scale of the problem. Intel hasn't officially named the new process as its next-gen tri-gate, but it is safe to assume that it is.

Finally, there's some question over what it takes at a fab level to produce 14nm+. Though certainly not on the scale of making the jump to 14nm to begin with, Intel has been tight-lipped on whether any retooling is required. At a minimum, as this is a new process (in terms of design specifications), I think it's reasonable to expect that some minor retooling is required to move a line over to 14nm+. In that case, the question becomes which Intel fabs can currently produce chips on the new process. One of the D1 fabs in Oregon is virtually guaranteed; whether Arizona or Ireland is also among these is not.

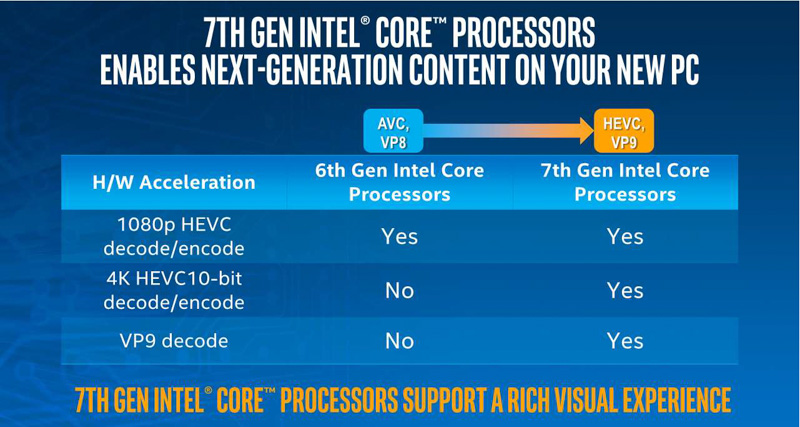

Intel claims the addition of HEVC 10-bit hardware acceleration increases battery life during 4K streaming by 75% (up to 9.5 hours). It also indicates that users can view 360-degree 4K video for up to seven hours on a single battery charge.
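Read together, the two numbers in Intel's claim imply a baseline: if 9.5 hours is a 75% improvement, the comparison system managed about 9.5 / 1.75 hours. A quick check, assuming both figures describe the same 4K streaming test (the claim as quoted doesn't name the comparison system):

```python
def baseline_hours(new_hours: float, improvement: float) -> float:
    """Back out the baseline runtime from a new runtime and a fractional gain.

    new_hours = baseline * (1 + improvement)  =>  baseline = new_hours / (1 + improvement)
    """
    return new_hours / (1.0 + improvement)


base = baseline_hours(9.5, 0.75)
print(f"implied baseline: {base:.2f} h")  # implied baseline: 5.43 h
```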

Kaby’s biggest advertised feature is improved support for 4K. All Kaby Lake integrated GPUs will support hardware-accelerated decoding and encoding of 10-bit HEVC/H.265 video streams and decoding of 8-bit VP9 streams. If you don’t already know, supporting hardware acceleration for certain codecs means that the GPU (usually via a dedicated media block) handles all the processing instead of the CPU. Not only does this use a fraction of the power that a CPU uses to accomplish the same task, but it frees the CPU cores up to do other things.

With Kaby Lake, the hardware control around Speed Shift has improved. Intel isn’t technically giving this a new name, but it is an iterative update, which I prefer to call ‘v2’, if only because the adjustment from v1 to v2 is big enough to note. There is no change in the OS driver, so the same Speed Shift driver works for both v1 and v2, but the improvement means that a CPU can now reach peak frequency in 10-15 milliseconds rather than 30.
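One way to see why cutting the ramp from about 30 ms to 10-15 ms matters for short, bursty work (page loads, UI responses): while the clock is ramping, the core runs below peak, so a short task finishes later than it would at peak clock. The sketch below is a toy model that assumes, purely for illustration, a linear ramp from an idle clock to the peak clock; the 0.8 GHz idle clock, 3.5 GHz peak clock and 20 ms task size are hypothetical values, not Intel figures, and real Speed Shift ramps need not be linear.

```python
import math


def completion_time_ms(work_mcycles: float, idle_ghz: float,
                       peak_ghz: float, ramp_ms: float) -> float:
    """Time (ms) to finish a task that arrives while the core is at its idle clock.

    Toy model (an assumption): the clock rises linearly from idle_ghz to
    peak_ghz over ramp_ms, then holds at peak. Units: GHz * ms = Mcycles.
    """
    if ramp_ms <= 0 or peak_ghz == idle_ghz:
        # No ramp to wait for: the whole task runs at peak clock.
        return work_mcycles / peak_ghz
    # Cycles completed during the ramp = area under the linear ramp.
    ramp_mcycles = (idle_ghz + peak_ghz) / 2 * ramp_ms
    if work_mcycles > ramp_mcycles:
        # Ramp finishes first; the remainder runs at peak clock.
        return ramp_ms + (work_mcycles - ramp_mcycles) / peak_ghz
    # Task ends mid-ramp: solve idle*t + half_slope*t**2 = work for t > 0.
    half_slope = (peak_ghz - idle_ghz) / (2 * ramp_ms)
    disc = idle_ghz ** 2 + 4 * half_slope * work_mcycles
    return (-idle_ghz + math.sqrt(disc)) / (2 * half_slope)


# Hypothetical inputs: a burst that would take 20 ms at peak clock.
IDLE_GHZ, PEAK_GHZ = 0.8, 3.5
WORK = 20 * PEAK_GHZ  # 70 Mcycles
for ramp in (30, 15, 10):
    t = completion_time_ms(WORK, IDLE_GHZ, PEAK_GHZ, ramp)
    print(f"{ramp:>2} ms ramp: task done in {t:.1f} ms (20.0 ms at peak)")
```

With these made-up inputs the task finishes in roughly 31.6 ms with a 30 ms ramp versus 25.8 ms and 23.9 ms with 15 ms and 10 ms ramps; the point is the shape of the effect, not the exact numbers.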

What I wonder with this talk about the larger gate pitch, is:

The performance increase comes from an enhanced 14nm process that allows for higher clocks at a given level of power consumption. Don't downplay this, this is a good achievement from Intel. The process and circuit implementation folks really did a good job here.

We'll see the micro-architects strut their stuff in Cannonlake.

Notebookcheck said: Our demanding 4K trailer (HEVC Main10, 50 Mbps, 60 fps) plays smoothly on the i7-7500U at an average power consumption (CPU package power) of just 3.2 watts, whereas the video visibly stutters on the i7-6600U despite a chip consumption of 16.5 watts.

We'll probably get more information about 14+ and 10nm at the Investor Meeting, I hope. I always liked Bill Holt's presentations. Wait, didn't he retire? So someone else will do them, then, hopefully.

The Skylake hardware was already able to decode H.265/HEVC videos at a low power consumption, but it did not support the Main10 standard for content with a 10-bit color depth. Kaby Lake changes that: Our demanding 4K trailer (HEVC Main10, 50 Mbps, 60 fps) is handled smoothly by the i7-7500U at an average power consumption of just 3.2 watts (CPU package power), while the video stuttered noticeably on the i7-6600U despite a consumption of 16.5 watts.
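The power gap in that test is easier to judge as a ratio and as energy per hour of playback. A small sketch using only the two package-power figures quoted above; it covers CPU package power only (not the display or the rest of the platform), and since the i7-6600U run wasn't even smooth, the gap per delivered frame is arguably larger than this simple ratio suggests.

```python
def package_energy_wh(avg_power_w: float, hours: float) -> float:
    """CPU package energy in watt-hours: average package power * playback time."""
    return avg_power_w * hours


# Average CPU package power from the NotebookCheck test quoted above.
I7_7500U_W = 3.2   # smooth playback with HEVC Main10 hardware decode
I7_6600U_W = 16.5  # visible stuttering, no Main10 hardware decode support

ratio = I7_6600U_W / I7_7500U_W
print(f"i7-6600U draws {ratio:.1f}x the package power of the i7-7500U")
print(f"per hour of playback: {package_energy_wh(I7_7500U_W, 1.0):.1f} Wh "
      f"vs {package_energy_wh(I7_6600U_W, 1.0):.1f} Wh")
```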

WinRAR results suggest that Kaby Lake had faster RAM; is that true? What RAM was used in those tests?

I'd also like to see undervolting results to see how much better KL really is technically. It may be that KL runs much closer to its potential to achieve those gains, which would make the gains seem less impressive. Of course, all of that is largely irrelevant for most buyers, because how many people actually undervolt their CPUs? Nonetheless, I have seen some very impressive gains in graphics performance from UV that were similar to those of KL: UV made the CPU draw less power and greatly increased the power available to the iGPU.

NotebookCheck already has their Kaby Lake review up. The performance improvement on mobile is better than the last Tock(s) (new architecture) from Intel. Compared to i7-6500U, i7-7500U is:

Kaby Lake (Core i7-7500U) tested: Skylake on steroids

- CPU

14-16% faster @ Cinebench 11.5

16% faster @ 3DMark 06 CPU

14-24% faster @ X264

16-17% faster @ TrueCrypt

28% faster @ WinRAR

(...)

Intel has really started to focus on improving its iGPUs in the last five years or so, and it's starting to pay off. I really hope AMD hits a home run with Zen; we need competition.

Question: do we think we will get a Pentium-based Kaby Lake Core M? Not at first, but let's say 3 or 6 months down the road.

I don't get it. If Intel has done a really good job improving performance/features while keeping prices pretty much flat even as AMD has not been all that competitive, why exactly do we need competition? This is a legit question.

NotebookCheck already has their Kaby Lake review up. The performance improvement on mobile is better than the last Tock(s) (new architecture) from Intel. Compared to i7-6500U, i7-7500U is:

Kaby Lake (Core i7-7500U) tested: Skylake on steroids

- CPU

14-16% faster @ Cinebench 11.5

16% faster @ 3DMark 06 CPU

14-24% faster @ X264

16-17% faster @ TrueCrypt

28% faster @ WinRAR

After 5 minutes of load, the i7-7500U can sustain clocks similar to an i7-6600U in 25W mode.

- iGPU / Gaming

31% faster @ 3DMark 11 GPU

39% faster @ 3DMark Fire Strike Graphics

33% faster @ Just Cause 3 1366x768 Medium

39% faster @ Bioshock Infinite 1366x768 High

42% faster @ Battlefield 4 1366x768 High

51% faster @ Star Wars Battlefront 1366x768 Medium

http://www.notebookcheck.com/Kaby-Lake-Core-i7-7500U-im-Test-Skylake-auf-Steroiden.172422.0.html
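To boil the NotebookCheck deltas down to one number per category, a common choice is the geometric mean of the speedup ratios (unlike averaging percentages, it treats a 2x gain and a 0.5x loss symmetrically). A sketch, assuming the midpoint of each quoted range (e.g. 14-24% becomes 19%) and equal weight for every benchmark; both are assumptions for illustration, not NotebookCheck's own method.

```python
from statistics import geometric_mean

# Percent gains of the i7-7500U over the i7-6500U as quoted above;
# ranges are replaced by their midpoints (an assumption).
cpu_gains = {
    "Cinebench 11.5": 15.0,     # 14-16%
    "3DMark 06 CPU": 16.0,
    "x264": 19.0,               # 14-24%
    "TrueCrypt": 16.5,          # 16-17%
    "WinRAR": 28.0,
}
igpu_gains = {
    "3DMark 11 GPU": 31.0,
    "3DMark Fire Strike Graphics": 39.0,
    "Just Cause 3 (1366x768 Medium)": 33.0,
    "Bioshock Infinite (1366x768 High)": 39.0,
    "Battlefield 4 (1366x768 High)": 42.0,
    "Star Wars Battlefront (1366x768 Medium)": 51.0,
}


def summarize(gains: dict[str, float]) -> float:
    """Geometric mean of speedup ratios, returned as a percent gain."""
    ratios = [1.0 + pct / 100.0 for pct in gains.values()]
    return (geometric_mean(ratios) - 1.0) * 100.0


print(f"CPU:  {summarize(cpu_gains):.1f}% (geometric mean)")
print(f"iGPU: {summarize(igpu_gains):.1f}% (geometric mean)")
```

Under these assumptions this works out to roughly +19% CPU and +39% iGPU.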

Now one detail from Intel's slides caught my attention. Kaby Lake-U's HD Graphics 620 scores 339 pts @ 3DMark Time Spy Graphics (DX12). In comparison, a GeForce GTX 950M scores 281 pts according to NotebookCheck.
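For scale, the relative difference between those two Time Spy Graphics scores is a one-line calculation. Keep in mind the two numbers come from different sources (an Intel slide vs. NotebookCheck) and different systems, so this is a loose comparison at best.

```python
def relative_gain(score: float, reference: float) -> float:
    """Percent by which `score` exceeds `reference` (higher-is-better scores)."""
    return (score / reference - 1.0) * 100.0


HD620_TIME_SPY = 339    # Intel slide, 3DMark Time Spy Graphics (DX12)
GTX950M_TIME_SPY = 281  # NotebookCheck
print(f"HD Graphics 620 vs GTX 950M: "
      f"{relative_gain(HD620_TIME_SPY, GTX950M_TIME_SPY):+.1f}%")  # +20.6%
```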

...that clanging sound was my jaw hitting the floor. "Why do we need competition?" Seriously?! Have you just not been paying attention to, oh, the last 6,000 years of human history?

Kaby Lake is a massive one for mobile. An utterly fantastic product in that regard, with large performance benefits.