Tidekilla115

Member

- Feb 28, 2016

- 148

- 0

- 16

I had that board, and a G3258 running nicely at high overclock.

The overclock ability disappeared with updates. I can't overclock with the board anymore.

Is it because of Windows 10 or something else?

Because people want to bring back the fun of budget CPU overclocking to Intel systems. Looks like a more sophisticated solution than before, and I'm glad ASRock is still committed to this.

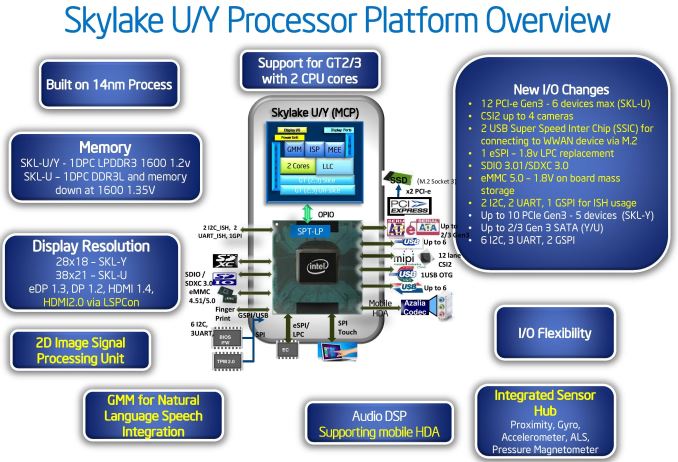

Just buy a mobo with the LSPCon. I don't think you are ever going to see HDMI 2.0 without one. HDMI 1.4 already comes from a DP 1.2 conversion. In other words, I think Intel only supports DP out of the CPU, now and in the future.

Is that one reason why I have so much trouble with HDMI audio handshake issues on my Asus H110M-A board and my i3-6100?

IP6200 is probably faster than a GT730, since it's sometimes close to a GTX750.

http://media.bestofmicro.com/T/J/497431/original/17-IGP-GTA-V.png

I don't really care which way they take to get it there. *If* they want to be competitive with Polaris/Pascal parts that will bring 2x performance across the generation, they need a 2.5 TFLOP, 70 GTexels/s equivalent GPU by that time. Otherwise, forget about it. It's possible that Kaby Lake's highest GPU is coming in mid-2017, meaning it has to go against AMD's Zen APU with HBM. Would be fun to see a monstrous iGPU with lower clocks, probably more efficient than the current ~1GHz Iris Pro models.

GTA 5 was released in April of 2015 for the PC, and the Tom's review of IP6200 was in June of 2015, so I think your criticism of that chart is unfair. Also, these IGPs are meant for low res games, so that's what I expect to see in a comparison.

Intel has been pushing the performance per watt aspect and GPU performance heavily in the last few generations, making each successive NUC generation more attractive than the one before. We have already looked at multiple Broadwell NUCs. The Skylake NUCs currently come in two varieties - one based on the Core i3-6100U and another based on the Core i5-6260U. The i5 version is marketed with the Iris tag, as it sports Intel Iris Graphics 540 with 64MB of eDRAM.

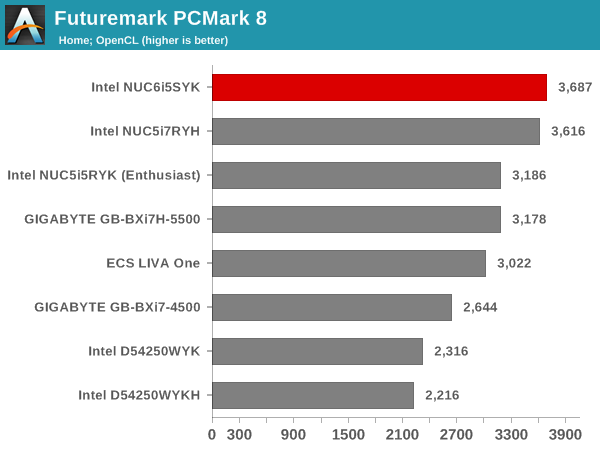

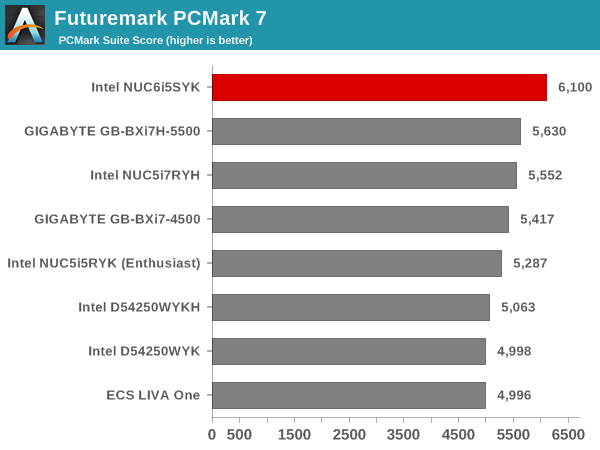

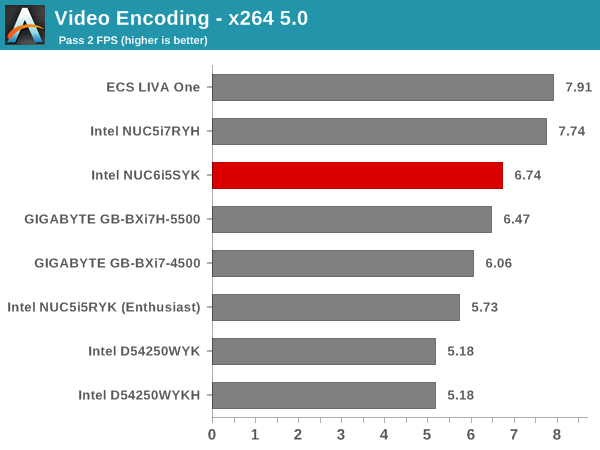

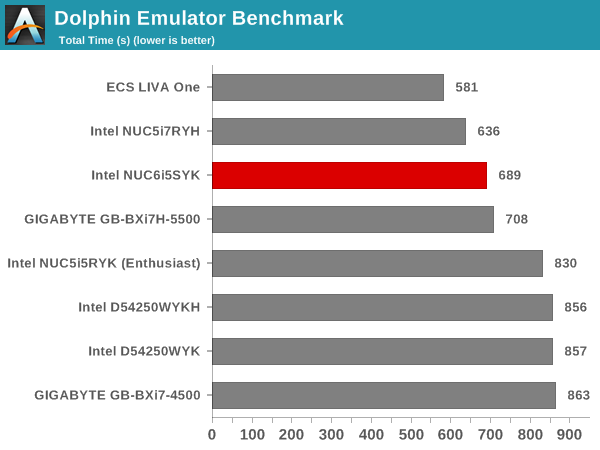

The benchmark numbers show that it is a toss-up between the Broadwell-U Iris Core i7-5557U in the NUC5i7RYH and the Core i5-6260U in the NUC6i5SYK. The former is a 28W TDP part and can sustain higher clocks. Despite that, the performance of the two is comparable for day-to-day usage activities (such as web browsing and spreadsheet editing), as tested by PCMark 8.

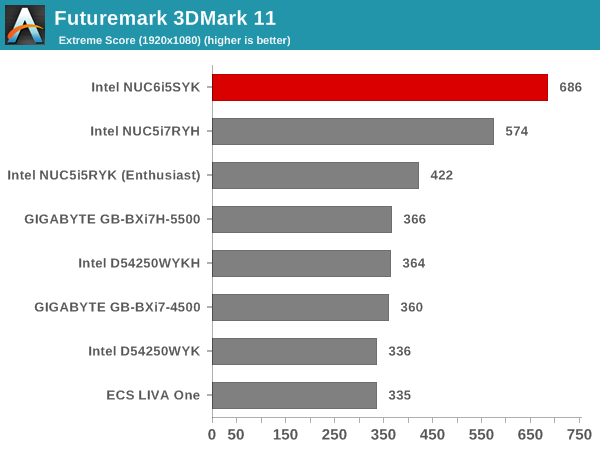

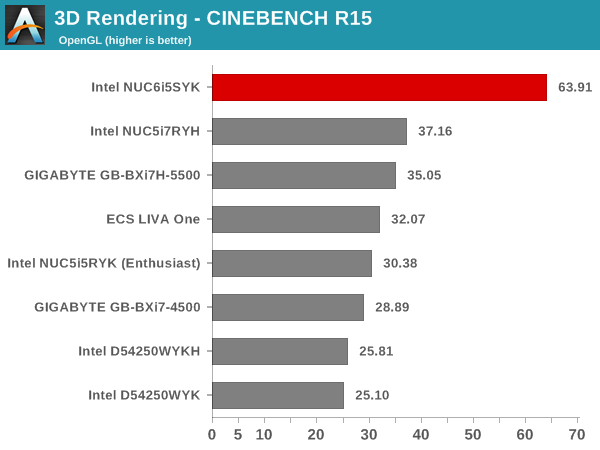

The thermal design continues to be good, and the default BIOS configuration ensures that the Core i5-6260U can sustain higher operational power levels than what is suggested by its TDP of 15W. This is particularly interesting, since the processor doesn't officially have a configurable higher TDP. The Skylake GPU has also shown tremendous improvement compared to Broadwell and previous generations, and this is evident in the 3D benchmarks. The NUC6i5SYK also sports an Iris GPU with 64MB of eDRAM that helps improve performance for various workloads.

On a NUC?! I can understand the criticism, but "massive fail"...

I didn't even know they started using eDRAM in non-"Iris Pro" parts. Congrats Intel, you managed to completely mutilate what was left of your GPU naming scheme.

Well, the NUC is with eDRAM and all. If they also leave out games with the i7 NUC, with both Iris Pro and external GPU support, then it's truly a massive fail.

Massive fail in product naming scheme as well.

Intel will have native HDMI 2.0 eventually. They have to.

Pretty poor from them, no game tests is a massive fail.

The question is how viable HDMI is in the long run. DP is slowly getting into TVs. And I for one wouldn't mind a single standard for everything.

It's a review of NUCs. You can't even put in a discrete graphics card. They are made to replace home computers for people who don't play anything beyond very light casual games.