Gentlemen,

You know it's not as simple as that. There are many parameters involved.

When the first Hyperthreading CPUs came out in the form of Xeon, the performance was uneven and in some cases it was a net loss. It was pretty bad. Then when they introduced it on the consumer parts, it became much better.

But it was with Nehalem that they fixed nearly all the regressions and it became a "free" performance feature.

Details, details, details. We don't know them. It's not just Single thread vs. Embarrassingly Parallel. There are hundreds of in-betweens. It's not simply Hyperthreading versus Gracemont for MT. Hyperthreading is potentially zero gain in some MT cases, because the architecture itself can already be fully utilized and HT just causes contention. In those cases, Gracemont will be an adder. Of course adding Gracemont means there will be overhead due to asymmetrical cores. Potentially they are saying a cluster of 4 Gracemonts is better than two Golden Cove cores, so the maximum performance gain is far above what Hyperthreading can offer, since it rarely exceeds 30%.
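To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The only figures taken from above are the ~30% Hyperthreading ceiling and the "one cluster of 4 is worth about two big cores" guess. The 8 big cores plus 2 clusters configuration and all function names are my own assumptions for illustration, and the model ignores scheduling overhead from asymmetrical cores entirely.

```python
# Toy comparison: throughput added by Hyperthreading vs. by small-core clusters.
# Units are "big-core equivalents". All inputs are assumptions, not measurements.

def ht_added_throughput(big_cores, ht_uplift=0.30):
    """Best-case extra throughput from HT (uplift of 0.30 = the ~30% ceiling)."""
    return big_cores * ht_uplift

def cluster_added_throughput(clusters, big_core_equiv_per_cluster=2.0):
    """Extra throughput from small-core clusters, assuming one cluster of 4
    is worth about two big cores (the optimistic guess from the post)."""
    return clusters * big_core_equiv_per_cluster

def relative_gain(big_cores, added):
    """Added throughput as a fraction of the big cores alone."""
    return added / big_cores

# Hypothetical configuration: 8 big cores, 2 clusters of small cores.
big = 8
ht = ht_added_throughput(big)
clusters = cluster_added_throughput(2)
print(f"HT best case:      +{relative_gain(big, ht):.0%}")
print(f"2 clusters (est.): +{relative_gain(big, clusters):.0%}")
```

In the MT cases where HT gains nothing, set `ht_uplift=0.0` and the cluster contribution is all that's left, which is the "adder" point above.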

By the way, overhead exists for adding extra cores. That's why scaling is not ideal. The Xeons, the EPYCs, the POWERs add a whole bunch of extra circuitry just to transfer data between the cores, basically to minimize the overhead.
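A minimal sketch of why scaling falls short of ideal, assuming an Amdahl-style model with an extra per-core communication cost bolted on. The serial fraction and overhead values are made-up knobs, not data from any real chip, and the linear overhead term is just the simplest thing that shows the effect.

```python
# Toy scaling model: Amdahl's law plus a linear per-core overhead term.
# serial_fraction and overhead_per_core are illustrative assumptions.

def speedup(cores, serial_fraction=0.05, overhead_per_core=0.0):
    """Speedup over one core.

    Normalized run time = serial part + parallel part split across cores
    + a communication cost that grows with each extra core.
    """
    time = (serial_fraction
            + (1.0 - serial_fraction) / cores
            + overhead_per_core * (cores - 1))
    return 1.0 / time

for n in (1, 2, 4, 8, 16, 32):
    ideal = float(n)
    amdahl = speedup(n)
    with_overhead = speedup(n, overhead_per_core=0.002)
    print(f"{n:2d} cores: ideal {ideal:5.1f}x, "
          f"Amdahl {amdahl:5.2f}x, with overhead {with_overhead:5.2f}x")
```

The extra interconnect circuitry in the big server parts is, in this picture, an attempt to push `overhead_per_core` as close to zero as possible.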

We're living in an increasingly complicated world. Look at security. There's a never-ending war between people who hack systems and those who secure them. That's why we went from the simplest passwords to basically hacking a CPU.

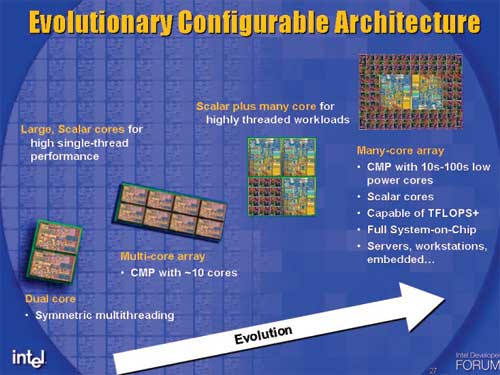

Single cores -- Multiple cores -- CPU + Accelerators -- Asymmetrical cores + Accelerators

Sometimes an idea dies not because it's a wrong one but because it arrived before its time. We'll see where the hybrid approach falls.

This is what the future may hold:

Presentation by then-Intel CTO Justin Rattner at Intel's 2003 IDF.

The ideal for a hybrid CPU is to use a few ridiculously large cores for maximum ST and low-thread-count performance, and many efficient, tiny cores for maximum multi-threaded performance.

We might see the CPUs split as in servers, where some "High speed" SKUs exist to maximize low-thread-count performance, while others exist with many -mont cores alongside them.