At the risk of sounding like a broken record, I'll repeat what Andrei has said many times: testing at a lower fixed frequency artificially inflates IPC by reducing the effective memory latency (measured in core cycles). Thus, IPC testing should always be done at the highest frequency the architecture was designed to operate at. IPC is not independent of the frequency at which it is measured.
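To make the latency argument concrete, here is a minimal first-order sketch. It assumes DRAM latency is fixed in nanoseconds and that every miss stalls the core for the full latency, with no overlap or prefetching. The function `ipc` and all parameter values are hypothetical and illustrative, not measurements of any real CPU. Under these assumptions, the same miss costs fewer core cycles at a lower clock, so the measured IPC rises as frequency falls.

```python
# Hypothetical first-order model: memory latency is fixed in nanoseconds,
# so its cost in core cycles scales with the clock frequency.

def ipc(freq_ghz: float, base_cpi: float, misses_per_instr: float, mem_latency_ns: float) -> float:
    """Return instructions per cycle under a simple additive CPI model."""
    stall_cycles_per_miss = mem_latency_ns * freq_ghz  # ns * (cycles per ns)
    cpi = base_cpi + misses_per_instr * stall_cycles_per_miss
    return 1.0 / cpi

# Assumed, illustrative parameters (not measured on any real CPU).
BASE_CPI = 0.5
MISSES_PER_INSTR = 0.002
MEM_LATENCY_NS = 70.0

for f in (3.0, 4.0, 5.0):
    print(f"{f:.1f} GHz: IPC = {ipc(f, BASE_CPI, MISSES_PER_INSTR, MEM_LATENCY_NS):.3f}")
```

With these assumed values the model gives about 1.087, 0.943 and 0.833 IPC at 3, 4 and 5 GHz. Real cores overlap outstanding misses, so the effect is usually smaller than this model suggests, but the direction is the same.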

OK, but when you do IPC (perf/clock) tests, you do them to find out how the architectures would fare in hypothetical future scenarios.

Stilt didn't run his chips at 2.5GHz or some other oddly low frequency; he ran them at 3.8GHz, which is fairly high. Even in AT's tests, the frequency difference between the 9900K and 3900X is only 7.5%. Fixing frequencies is especially valid because, for whatever reason, it's easy to lose 100MHz or 200MHz when the CPU is left to auto-boost to its max. And of course, when you want to see how the architecture itself does, you want to fix the frequency anyway.
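A rough sketch of the arithmetic behind perf/clock comparisons: divide each score by the clock it ran at, and express gaps relative to a chosen baseline. The helper names and the scores and clocks below are hypothetical. Two things fall out of it: when both chips are locked to the same clock, the per-clock gap equals the raw gap; and a percentage gap depends on which chip is the baseline (4.3 vs 4.0 GHz is 7.5% one way, but about 7.0% the other way).

```python
def per_clock(score: float, freq_ghz: float) -> float:
    """Normalize a benchmark score to points per GHz."""
    return score / freq_ghz

def pct_gap(a: float, b: float) -> float:
    """Percentage by which a exceeds b, measured relative to b."""
    return (a / b - 1.0) * 100.0

# Hypothetical scores and clocks, for illustration only.
score_a, ghz_a = 520.0, 4.3
score_b, ghz_b = 500.0, 4.0

print(f"clock gap (A vs B): {pct_gap(ghz_a, ghz_b):.1f}%")
print(f"clock gap (B vs A): {pct_gap(ghz_b, ghz_a):.1f}%")
print(f"raw score gap: {pct_gap(score_a, score_b):.1f}%")
print(f"per-clock gap: {pct_gap(per_clock(score_a, ghz_a), per_clock(score_b, ghz_b)):.1f}%")
```

In this made-up case chip A is 4.0% ahead on raw score but about 3.3% behind per clock, which is exactly the kind of difference that disappears when both chips are pinned to one frequency.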

Here are links to two more perf/clock tests:

AMD's new Ryzen CPUs can clock as high as 4.5 GHz, a notable bump over previous models, but what about AMD's purported IPC gains? - Page 2

hothardware.com

For many gamers, Ryzen 7 chips are still expensive, and they are considering a processor from the Ryzen 5 line instead. The Ryzen 5 3600 offers six cores and performance comparable to Intel's older Core i7-8700K, but costs half as much. Paired with a cheap older motherboard, it has no competition in terms of price/performance. Unfortunately, it doesn't overclock much, and that...

pctuning.tyden.cz

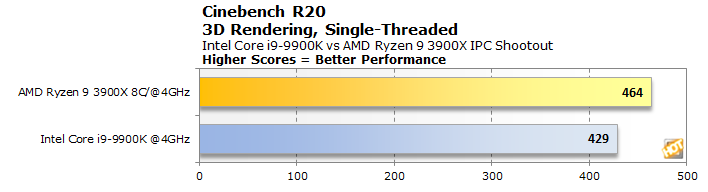

Zen 2 leads in Cinebench and other vector- and SIMD-oriented code, while Intel leads in integer code. You can see this reflected in the SiSoftware Sandra tests, with CFL leading in Dhrystone and Zen 2 leading in Whetstone, and also in AnandTech's tests. This continues the tradition of Intel faring better on integer and AMD on FP.

SpecCPU2006

Int - 1.9% lead for CFL

FP - 6.5% lead for Zen 2

SpecCPU2017 Rate 1*

Int - 5% lead for Zen 2

FP - 7.8% lead for Zen 2
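For context on how leads like these are usually derived: SPEC CPU scores each benchmark as the ratio of a reference machine's run time to the measured run time, and the overall score is the geometric mean of those ratios. Below is a minimal sketch of that arithmetic; the run times are made up, and this is not SPEC's own tooling. Because the reference times cancel in the ratio of two geometric means, the lead of one chip over another depends only on the two chips' own run times.

```python
import statistics

def spec_ratio(reference_s: float, measured_s: float) -> float:
    """Per-benchmark ratio: reference run time divided by measured run time."""
    return reference_s / measured_s

def lead_pct(score_a: float, score_b: float) -> float:
    """Percentage lead of score_a over score_b."""
    return (score_a / score_b - 1.0) * 100.0

# Hypothetical run times in seconds: (reference machine, chip A, chip B).
runs = [
    (1000.0, 250.0, 260.0),
    (2000.0, 400.0, 380.0),
    (1500.0, 300.0, 330.0),
]

# Overall score = geometric mean of the per-benchmark ratios.
score_a = statistics.geometric_mean([spec_ratio(ref, a) for ref, a, _ in runs])
score_b = statistics.geometric_mean([spec_ratio(ref, b) for ref, _, b in runs])

print(f"A: {score_a:.2f}  B: {score_b:.2f}  A vs B: {lead_pct(score_a, score_b):+.1f}%")
```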

(Strictly speaking, integer performance is the better indicator of a faster architecture, since floating-point code generally has higher ILP and you can get easy double-digit gains just by doubling vector-unit resources. As a product, though, you have to consider both.)
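To illustrate what ILP means in this argument, here is a toy sketch: treat a code fragment as a dependency graph and take ILP as the number of operations divided by the longest dependency chain, assuming unit latency and unlimited execution units. The graphs and the `ilp` helper are hypothetical examples, not measurements of real integer or FP code. A serial chain (like a running sum) has an ILP of 1, while independent multiplies feeding a reduction tree expose parallelism that extra execution resources could exploit.

```python
def ilp(deps: dict[str, list[str]]) -> float:
    """Upper-bound ILP: ops / critical path length (unit latency, unlimited units)."""
    depth: dict[str, int] = {}

    def chain(op: str) -> int:
        # Length of the longest dependency chain ending at this op.
        if op not in depth:
            depth[op] = 1 + max((chain(d) for d in deps[op]), default=0)
        return depth[op]

    critical_path = max(chain(op) for op in deps)
    return len(deps) / critical_path

# Hypothetical serial chain: each add depends on the previous one.
serial = {"add1": [], "add2": ["add1"], "add3": ["add2"], "add4": ["add3"]}

# Hypothetical independent multiplies feeding a two-level reduction tree.
tree = {
    "mul0": [], "mul1": [], "mul2": [], "mul3": [],
    "add01": ["mul0", "mul1"], "add23": ["mul2", "mul3"],
    "sum": ["add01", "add23"],
}

print(f"serial chain ILP: {ilp(serial):.2f}")
print(f"reduction tree ILP: {ilp(tree):.2f}")
```

The serial chain gives 1.0 and the tree gives 7/3 (about 2.33): seven operations finish in three dependent steps if enough units are available.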

Based on these tests, SpecCPU2006 seems to be the better indicator of performance for these two architectures.

*Not to mention that, for some bizarre reason, the tester ran SpecCPU2017 as the Rate suite with a single copy (1 thread) rather than the Speed suite, which is the default benchmark for scalar performance.