Well, I have no interest in convincing you, so think what you want. I'll just say that this poster, which turned out to be a fluke, was never the actual evidence for Kaby Lake-G in the first place, so claiming "victory over the rumours" based on it is shortsighted. It was just the impulse for this thread, nothing more, nothing less.

There are three pieces of actual information indicating that the plan exists (or at least used to). Two of them are public and have not been "debunked": the BenchLife leak and the NUC roadmap that matches it.

Anyway, I'm all for dropping this topic and waiting for confirmation next year. In the meantime, something to think about:

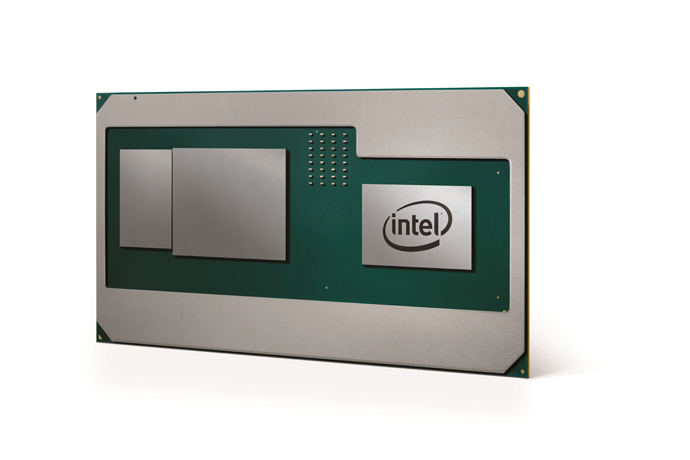

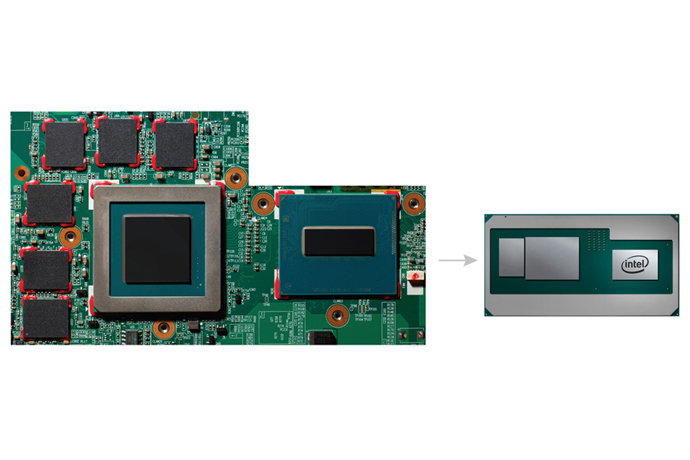

Kaby Lake-H has a package size of 42 × 28 mm, the same as the package used for Skylake-H CPUs with the GT4 GPU and on-package eDRAM (see ARK). According to BenchLife (BL), however, the purported Kaby Lake-G chip comes in a 58.5 × 31.0 mm package. This is not because the PCH moved onto the substrate: there is a note saying "2-chip platform type", which means it is like the current Kaby Lake-H with an external PCH.
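Taking the two listed package sizes at face value (and assuming the BenchLife figures are accurate), the footprint grows by roughly half:

$$
\begin{aligned}
A_{\text{KBL-H}} &= 42 \times 28 = 1176\ \text{mm}^2 \\
A_{\text{KBL-G}} &= 58.5 \times 31.0 = 1813.5\ \text{mm}^2 \\
\frac{A_{\text{KBL-G}}}{A_{\text{KBL-H}}} &\approx 1.54
\end{aligned}
$$

That is about 637.5 mm² of extra substrate, roughly 54% more area, with the PCH still off-package.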

So why the sudden jump in package size? Let's brainstorm: what do you guys think? Should be fun.

For the "denials", see my previous opinion.