For 680 benches they should be running it with boost disabled and with boost enabled, and clearly label which is which. That is all I am saying, nothing more. Any resistance to that idea is either ignorance of stats, general ignorance, or fanboyism for the current Nvidia cards.

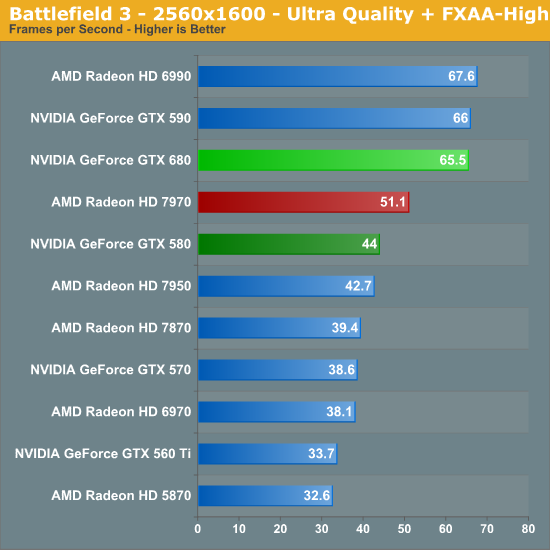

OK look at some of the games where GTX680 leads. Does it look like a 3-4% variation will change the conclusion of how fast GTX680 is in those games?

It doesn't change anything. Where GTX680 leads, the lead is massive. Just like CPUs should be tested with CPU boost, GPUs should be tested with all the features turned on because that's what the average end user will get out of the box.
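To put that in concrete terms, here is a quick Python sketch of the check I have in mind. The function name and the frame rates are made up for illustration; only the 4% figure comes from the 3-4% variation discussed above. It knocks the worst-case variation off the boosted card's result and asks whether the card is still ahead.

```python
def lead_survives(fps_boosted: float, fps_other: float, variation: float = 0.04) -> bool:
    """Return True if the boosted card still leads after losing `variation` of its fps.

    `variation` is the assumed worst-case shortfall from GPU Boost differences
    between individual cards (0.04 means 4%).
    """
    worst_case = fps_boosted * (1.0 - variation)
    return worst_case > fps_other


# Hypothetical frame rates, not taken from any review.
print(lead_survives(60.0, 50.0))  # big lead: still ahead after a 4% haircut -> True
print(lead_survives(51.0, 50.0))  # slim lead: a 4% haircut flips the result -> False
```

So the only games where boost variation could matter are the ones that are already a near tie, and nobody should be drawing big conclusions from those anyway.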

It's no different from the Nissan GTR: the engine is hand assembled, so its output is not exactly the same from car to car out of the factory. No two Nissan GTRs will ever produce the exact same horsepower. Does it change the conclusion that the Nissan GTR is insanely fast? No, it doesn't. If you bought a Nissan GTR and it produced 550 hp but your buddy's GTR put down 540 hp, and Nissan rated the GTR at 540 hp, are you going to complain? NV rates GTX680 at 1058 MHz and everything after is pure bonus.

If HD7970 could automatically scale from 925 MHz to 1.2 GHz, let it scale. Manual overclocking is a far bigger luck of the draw than GPU Boost is, because the % variation among manually overclocked HD7970 cards appears to be far greater than the % variation in GPU Boost among GTX680s. So if anything, GTX680 overclocking is more consistent. Some 7970s crap out at 1125-1175 MHz, while almost all 680s will do 1200 MHz, easy.

There was almost never a baseline performance to begin with. So that statement is a fallacy. Our systems are not identical to those used in reviews. At best we can get an idea of how particular hardware will perform in a particular system, but we cannot expect identical results since there is still some variation.

The variation in GTX680 reviews is not enough to change the conclusion whatsoever.

That's why we often look at average performance: by looking at a large sample of reviews, we reduce the influence of any one outlier result.
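Here is a minimal Python sketch of what that aggregation could look like. The `summarize` helper and the ratios are hypothetical placeholders (GTX680 fps divided by HD7970 fps in the same test), not numbers from actual reviews. It reports both the mean and the median, since the median is the one that barely moves when a single review is way off.

```python
from statistics import mean, median


def summarize(ratios: list[float]) -> dict[str, float]:
    """Summarize per-review performance ratios (card A fps / card B fps)."""
    return {"mean": mean(ratios), "median": median(ratios)}


# Hypothetical ratios from five reviews; the last one is a deliberate outlier.
review_ratios = [1.10, 1.08, 1.12, 1.09, 0.85]
summary = summarize(review_ratios)
print(summary)
```

With these made-up inputs the outlier drags the mean down noticeably, while the median stays with the bulk of the reviews. The more reviews you add, the less any single odd result matters to either number.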

Do you have statistical data to show that the variation among GTX680's GPU Boost materially impacts the conclusion of how GTX680 performs against HD7970? It would only be imperative to disable this feature if there was a material impact on the actual user experience that GTX680 cards bring. However, reviews consistently show that GTX680 easily outperforms a stock HD7970 and that an overclocked HD7970 cannot outperform an overclocked 680.

If you look at 20-30 reviews, nothing changes about the final conclusion. If you are concerned with exact testing methodology, using the scientific method by keeping all variables constant, then you'd have a problem with the GTX680. But we aren't doing a lab experiment here to achieve perfection, isolate variables to look at cause and effect relationships, or derive a formula. If someone asked you what card you'd recommend right now at $500, what would you say? HD7970?

Videocard testing is itself subjective since the testing methodologies, the particular parts of the games tested, and the hardware used all vary among reviewers. At best we can extrapolate how cards stack up to each other in that particular review and then compare those results across many other review samples to derive a broader conclusion.

If anything, GTX680 signals that reviewers should do more manual game benchmarking and rely less on canned benchmarks. So in effect, more than ever, the only way to tell how well a GPU runs is by actually running a 2-10 minute benchmark sequence.

Any slight GPU boost variations will be negated over a prolonged testing sequence.

Given that across 20 or so reviews the conclusion is very much still the same regarding GTX680 vs. HD7970 or OCed 680 vs. OCed 7970, it appears you are more upset about the consistency of benchmarking being YMMV.

I don't think we sit there and scrutinize reviews and say "Oh, in this review Crysis 2 got 67 fps and in this review the card got 63 fps." No one expects exact performance. We look to reviews for guidance, not mathematical certainty and proof. This isn't the Large Hadron Collider where the accuracy of results is paramount.

It's already assumed that all reviews on the Internet carry a margin of error of about +/-5%.
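One way to read that +/-5% figure, sketched in Python: treat each review's number as a range rather than a point, and call two results consistent if the ranges overlap. The function name is mine and this is only one reasonable interpretation of "margin of error", not a formal statistical test. The 67 and 63 fps values are the Crysis 2 example from above.

```python
def results_consistent(fps_a: float, fps_b: float, margin: float = 0.05) -> bool:
    """Return True if two results agree once each is given a +/- `margin` range."""
    low_a, high_a = fps_a * (1.0 - margin), fps_a * (1.0 + margin)
    low_b, high_b = fps_b * (1.0 - margin), fps_b * (1.0 + margin)
    # Two ranges overlap when each one starts before the other one ends.
    return low_a <= high_b and low_b <= high_a


print(results_consistent(67.0, 63.0))  # the 67 vs 63 fps example -> True
print(results_consistent(80.0, 63.0))  # a gap this large is a real difference -> False
```

Read this way, 67 fps in one review and 63 fps in another are the same result, which is the whole point: a few percent of boost variation lives inside the noise we already accept.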

Your dilemma is 2-fold:

- You are bothered by Dynamic OCing of GTX680 since not every GTX680 will Boost the same way

- But at the same time, not every HD7970 can operate at 1280mhz with overclocking either. Since manual overclocking is not guaranteed either, you are still left with a variation in what you'll get as an end user.

Therefore, it's unrealistic to expect the utmost accuracy in these types of reviews. Just use your judgement, read a lot of reviews, and see if in general the card in question is "faster" or "slower".