Which graphics card should you buy for 1080p60 gameplay?

http://www.eurogamer.net/articles/digitalfoundry-2016-lets-build-a-1080p60-gaming-pc

Nvidia GeForce GTX 1060 3GB vs 6GB review

http://www.eurogamer.net/articles/digitalfoundry-2016-nvidia-geforce-gtx-1060-3gb-vs-6gb-review

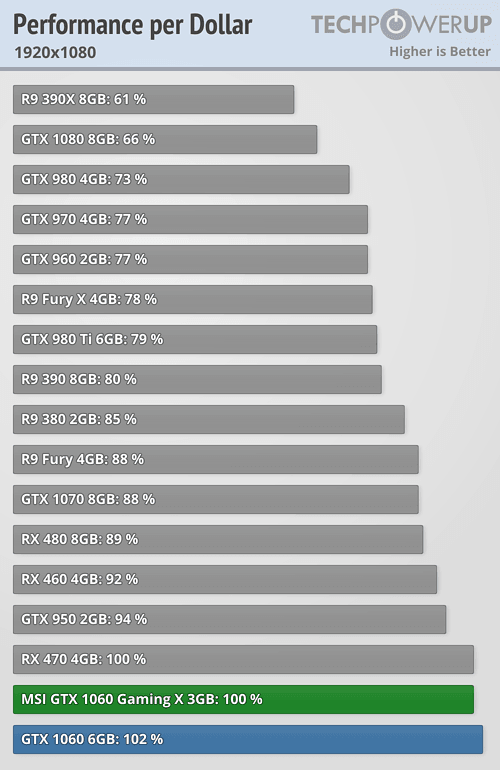

We'd be quite happy with either the Radeon RX 480 8GB or GeForce GTX 1060 6GB for our build, but games such as No Man's Sky still suggest that AMD's single-threaded OpenGL and DX11 drivers can hold back its generally excellent hardware in certain titles. Combine Nvidia's driver advantage here with its small speed bump, and right now we'd opt for the higher-specced GTX 1060 as the GPU of choice.

http://www.eurogamer.net/articles/digitalfoundry-2016-lets-build-a-1080p60-gaming-pc

Nvidia GeForce GTX 1060 3GB vs 6GB review

In almost all cases, the budget Nvidia offering outperforms the RX 470 (and remember, this will be boosted by a couple of frames owing to its factory OC) along with both iterations of the RX 480. Even Ashes of the Singularity - a weak point for Nvidia - sees it offer basic parity across the run of the clip. In all of the benches here, there is no evidence to suggest that the 3GB framebuffer causes issues - except in one title, Hitman. Here, the performance drop-off on GTX 1060 3GB is significant - and we strongly suspect that DX12, where the developer takes over memory management duties, sees the card hit its VRAM limit.

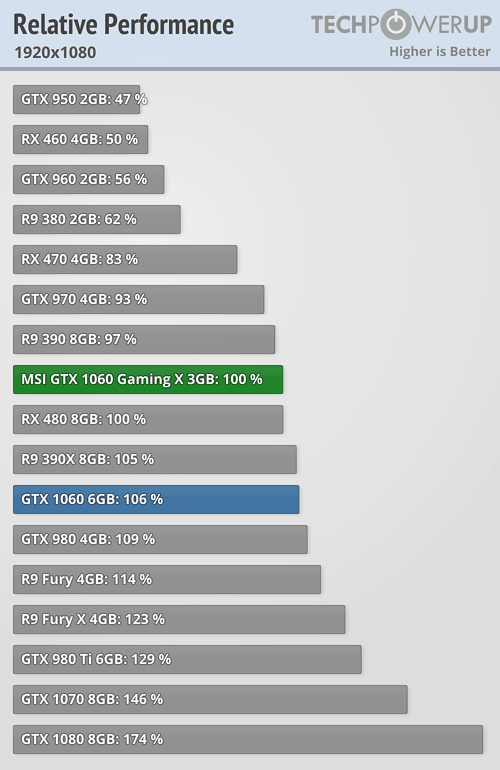

Re-benching a few titles makes the impact of the overclock clear: we're looking at a 12 per cent increase in performance over the GTX 1060 3GB's stock configuration, dropping to eight per cent when compared against the factory OC of the MSI Gaming X. This isn't exactly a revelatory increase overall; the days of 20 per cent overclocks with the last-gen 900-series Maxwell cards are clearly over. However, it is enough to push the cut-down GP106 clear of the fully enabled version when we're not limited by VRAM.
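The two percentages above also imply roughly how much the MSI Gaming X's factory overclock adds on its own. The snippet below is a back-of-envelope sketch, not a figure from the review: it assumes both percentages are measured on the same benchmark runs and that gains compound multiplicatively. The names `OC_VS_STOCK`, `OC_VS_FACTORY` and `implied_factory_gain` are hypothetical, chosen for illustration.

```python
# Figures quoted in the text: the manually overclocked GTX 1060 3GB is
# 12 per cent faster than stock and 8 per cent faster than the factory OC.
# Assumption (not stated in the review): the gains compound multiplicatively,
# so factory_gain = (1 + oc_vs_stock) / (1 + oc_vs_factory) - 1.
OC_VS_STOCK = 1.12    # manual OC relative to reference stock clocks
OC_VS_FACTORY = 1.08  # manual OC relative to the factory-overclocked card


def implied_factory_gain(oc_vs_stock: float, oc_vs_factory: float) -> float:
    """Return the factory OC's inferred speed-up over stock as a fraction."""
    return oc_vs_stock / oc_vs_factory - 1.0


gain = implied_factory_gain(OC_VS_STOCK, OC_VS_FACTORY)
print(f"Implied factory OC gain over stock: {gain * 100:.1f} per cent")
```

Under those assumptions the factory OC accounts for something under four per cent, which fits the earlier note that it adds only a couple of frames.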

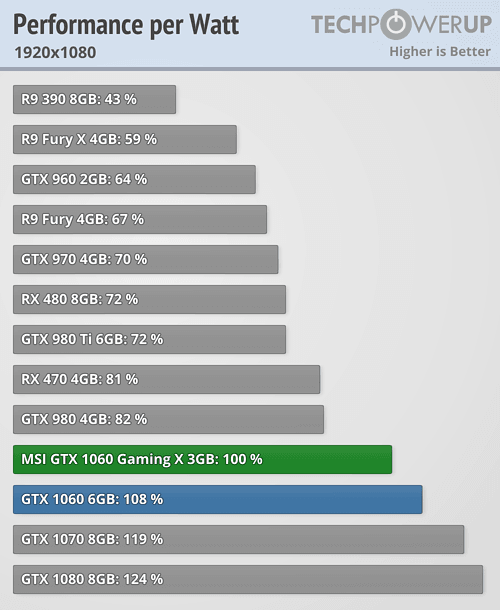

Going back to our GTX 1080 review, we were pleasantly surprised by how well the old GTX 780 Ti held up on our modern benchmarking suite, bearing in mind its 3GB of VRAM. The new GTX 1060 3GB has the same amount of memory but an additional two generations' worth of memory compression optimisations. The end result is that three gigs is indeed enough for top-tier 1080p60 gameplay, as long as you stay away from memory hogs like MSAA (which tends to kill frame-rate) along with 'HQ/HD' texture packs and extreme resolution texture options. By and large, the visual impact of these options at 1080p is rather limited anyway; generally speaking, they're designed for 4K screens.

http://www.eurogamer.net/articles/digitalfoundry-2016-nvidia-geforce-gtx-1060-3gb-vs-6gb-review