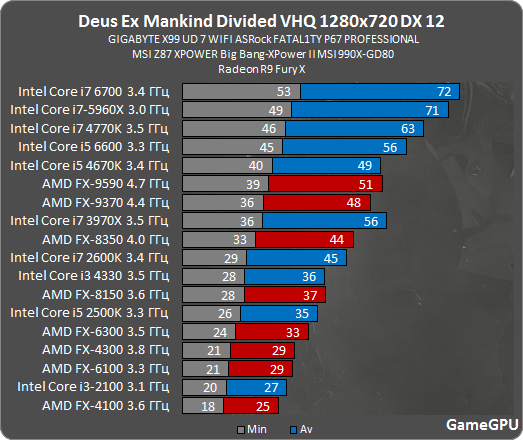

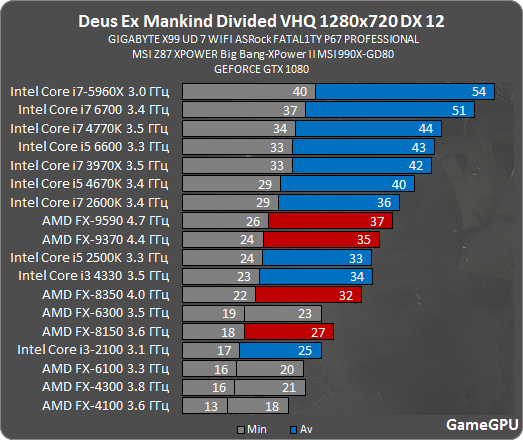

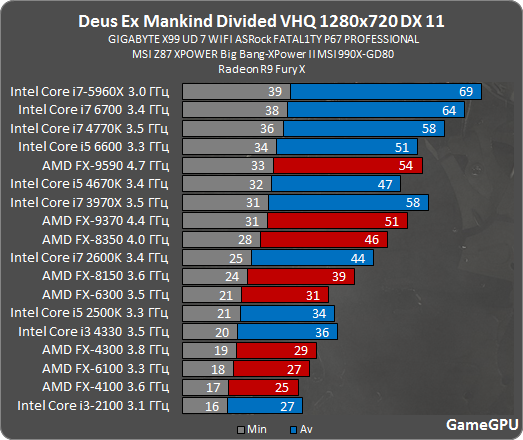

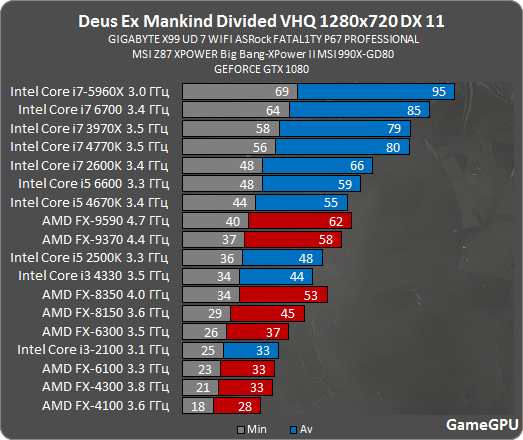

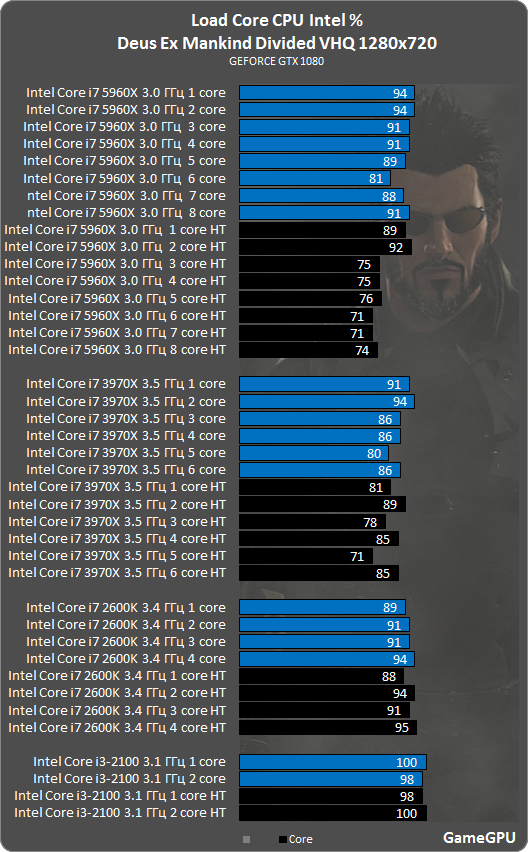

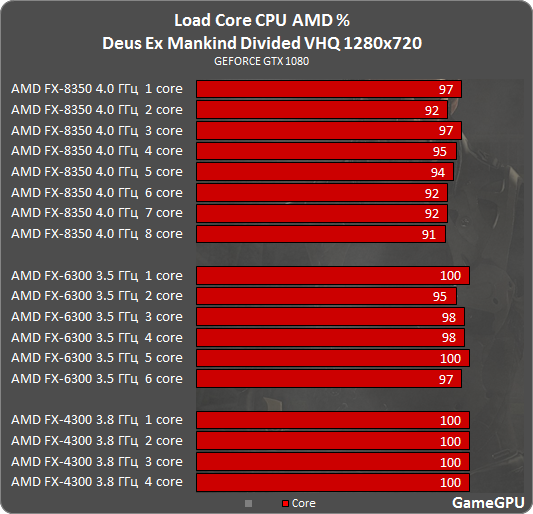

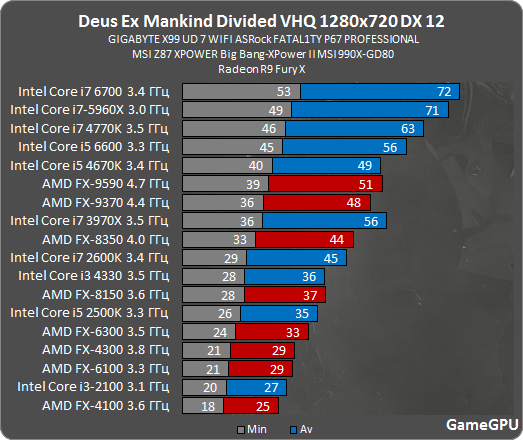

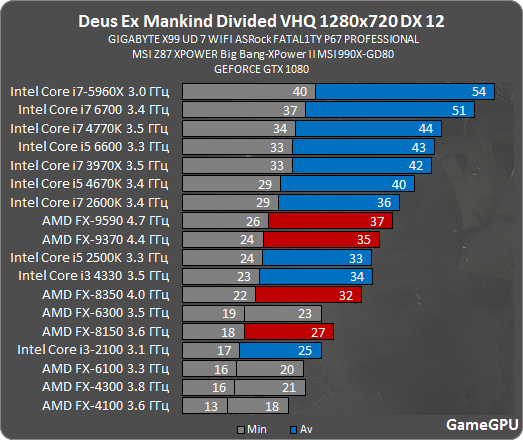

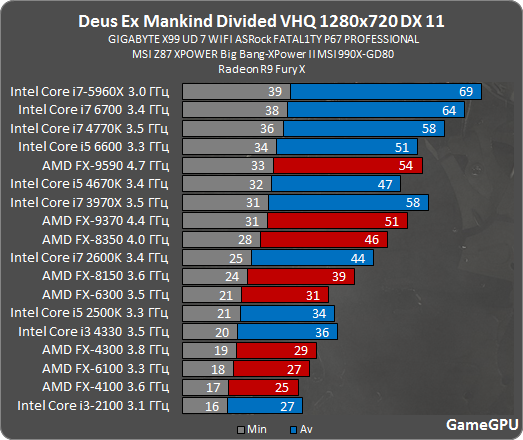

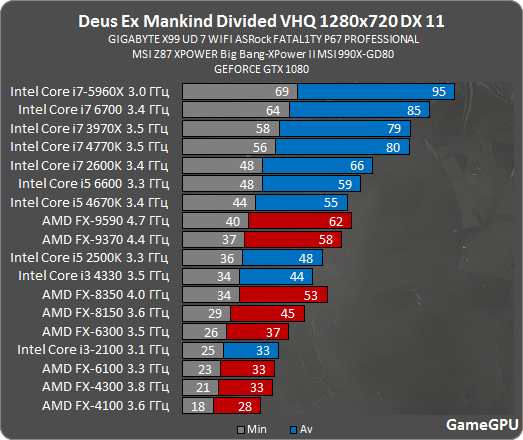

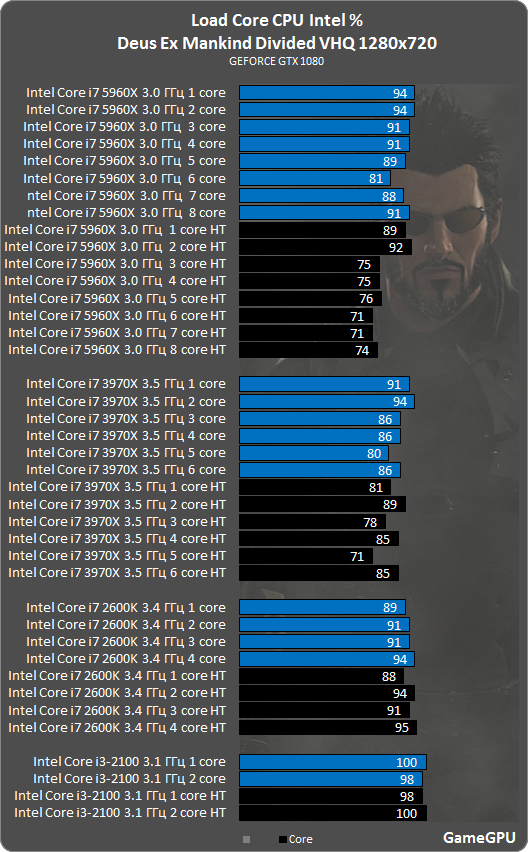

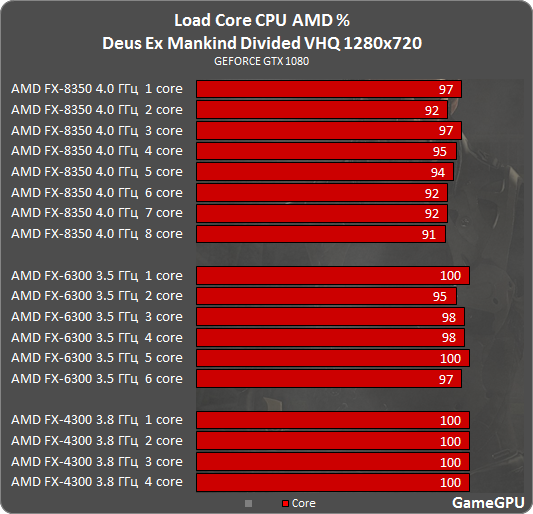

General Deus Ex: Mankind Divided thread for CPU thread scaling and performance.

The first official review is from GameGPU.ru; I will update the OP with every new review as it becomes available.

Just a reminder: official DX12 support for Deus Ex will arrive via a patch in the week of September 5th, so the numbers below may change.

https://steamcommunity.com/games/337000/announcements/detail/930377969893113169

http://gamegpu.com/action-/-fps-/-tps/deus-ex-mankind-divided-test-gpu

Edit: DX-11

This is amazing CPU thread scaling.

The Deus Ex franchise originated on PC, and we’re passionate about continuing to provide the best experience possible to our long-time fans and players on the PC.

Contrary to our previous announcement, Deus Ex: Mankind Divided, which is shipping on August 23rd, will unfortunately not support DirectX 12 at launch. We have some extra work and optimizations to do for DX12, and we need more time to ensure we deliver a compelling experience. Our teams are working hard to complete the final push required here though, and we expect to release DX12 support on the week of September 5th!

We thank you for your patience, passion, and support.

- The Deus Ex team
