RussianSensation

Elite Member

- Sep 5, 2003

> You claimed the "majority" of NVidia Gameworks titles are terribly broken and unoptimized at launch, and yet you focus on only one? And not only do you focus on only one, you focus on only one feature; Hairworks. How is Hairworks any different than AMD's TressFX in Tomb Raider which had terrible performance on NVidia at launch?

TressFX only had terrible performance at launch because at that time NV did not have access to the (direct) source code of the game. Once they actually started optimizing their drivers for Tomb Raider, the game ran like butter on their cards. I am not going to spend a lot of time finding reviews or digging through the data, but it's been proven many times over that TressFX 1.0 was more efficient than HairWorks. By now, AMD already has TressFX 3.0, which is much more efficient than the early implementation in Tomb Raider.

> I'll tell you how it's different. Hairworks can at least justify some of the performance hit, since it's applied to MULTIPLE entities on screen at the same time and it improves IQ tremendously for the fur or hair of animals and monsters. TressFX on the other hand was restricted to Lara Croft, and even then it bombed performance.

Revisionist history, huh? GTX680 managed 44 fps average with 2xSSAA (!) and 7970 managed 50 fps average at 1080p with everything else on Ultra. That was with almost no optimizations around launch.

Here is a modern comparison between The Witcher 3 and Tomb Raider:

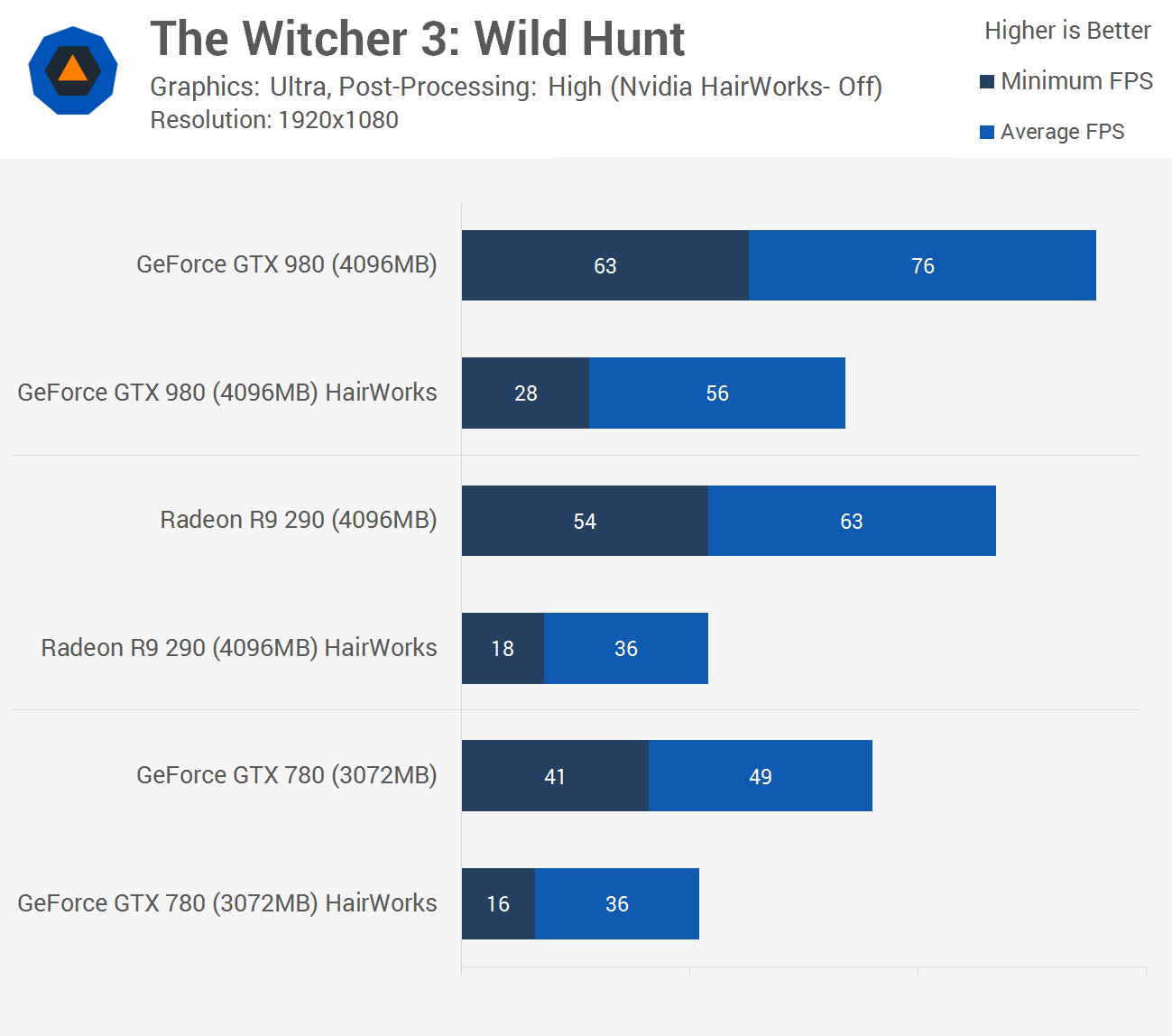

"With HairWorks disabled the minimum frame rate of the R9 290 is 3x greater, while the GTX 780 saw a 2.5x increase and the GTX 980 a 2.2x increase. The average frame rate of the GTX 980 was boosted by 20fps, that's 36% more performance. The GTX 780 saw a 36% increase in average frame rate which was much needed going from just 36fps at 1080p to a much smoother 49fps. The R9 290X enjoyed a massive 75% performance jump with HairWorks disabled, making it 17% slower than the GTX 980 -- it all started to make sense then."

The GTX 780 and R9 290 ran Tomb Raider like butter, but both cards choked in The Witcher 3 with HairWorks maxed out.
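For anyone who wants to check the quoted percentages, here is a small Python sketch of the arithmetic. The only numbers taken from the quote are the GTX 780's 36 fps and 49 fps and the GTX 980's "+20 fps, 36%"; the function names are just illustrative, and the GTX 980 baseline is back-calculated from a rounded percentage, so treat it as an estimate.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Percentage increase in frame rate when going from before_fps to after_fps."""
    return (after_fps - before_fps) / before_fps * 100.0


def implied_baseline(gain_fps: float, gain_percent: float) -> float:
    """Baseline fps implied by an absolute fps gain and a (rounded) percentage gain."""
    return gain_fps / (gain_percent / 100.0)


# GTX 780 at 1080p: 36 fps with HairWorks, 49 fps without (figures from the quote)
print(f"GTX 780: {percent_gain(36, 49):.1f}% faster with HairWorks off")

# GTX 980: "+20 fps" described as "36% more", so the HairWorks-on baseline
# works out to roughly 55-56 fps (estimate, since 36% is rounded)
print(f"GTX 980 implied baseline: {implied_baseline(20, 36):.1f} fps")
```

The 36 to 49 fps jump works out to about 36%, which matches the figure in the quote.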

> That's what I'm getting at you see. At least NVidia's Gameworks stuff improves IQ over the baseline, even if it has a huge performance hit. AMD's Gaming Evolved actually degrades IQ and lowers performance, which is a double whammy. Their CHS in particular is a joke, and so is HDAO:

Sounds like something I'd read in an NV marketing pamphlet. DE:MD may be the best-looking PC game of 2016, and its graphics surpass Crysis 3. The game actually looks way better than in all the trailers leading up to release, the complete opposite of The Witcher 3 and Assassin's Creed Unity.

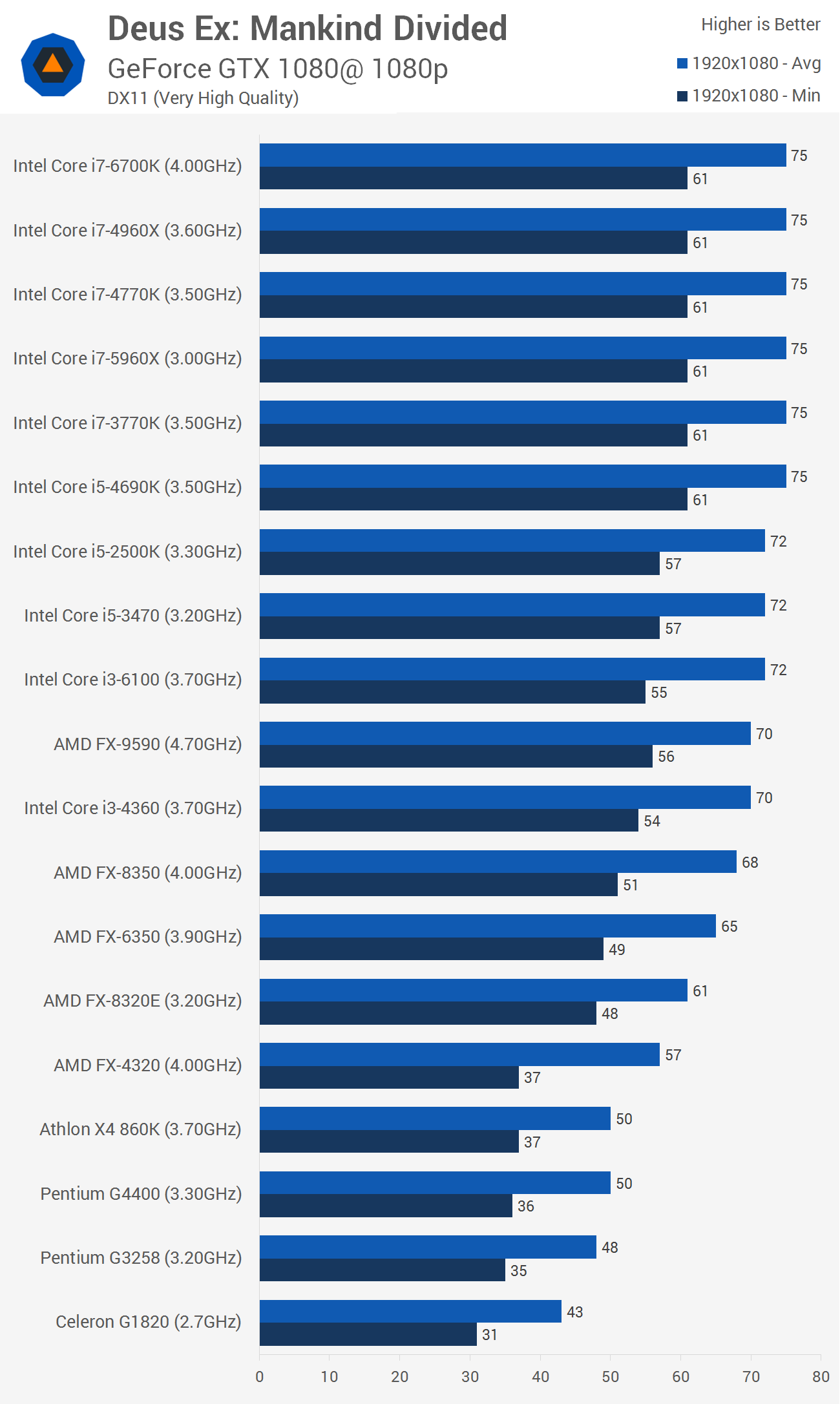

> The joke is that CHS is broken in Deus Ex MD, and so is their temporal ambient occlusion. Both of these settings on max quality actually degrade IQ, whilst still suffering a massive performance hit.

Assassin's Creed Unity, which you hyped on forums for months and then defended for months more, was broken for almost 6 months straight. Some would argue it was never fixed.

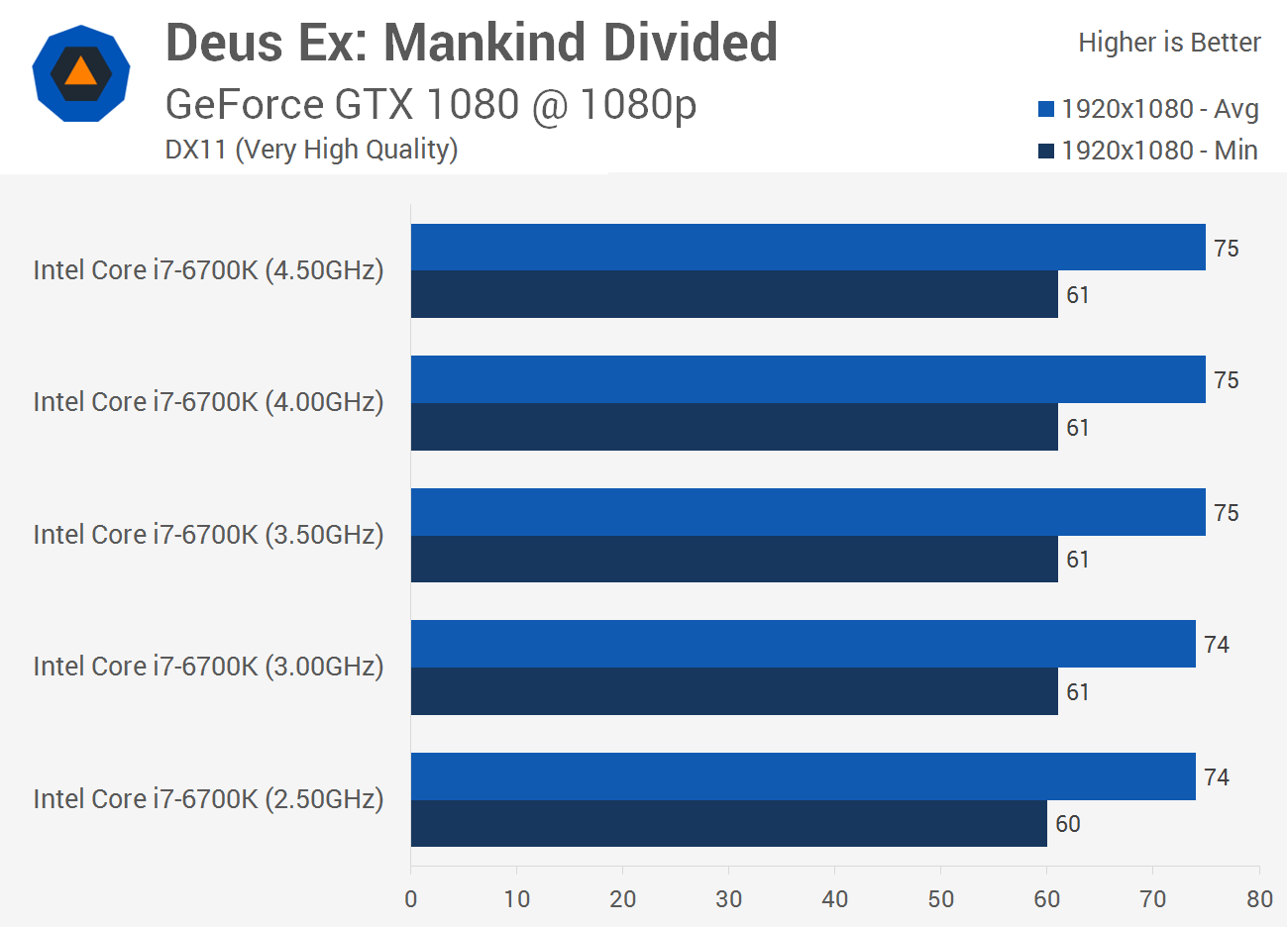

> If Deus Ex MD had used PCSS and HBAO+ instead, it would not only look better but run better.

The interesting part is that you see everything in black and white regarding PCSS vs. CHS. It takes 5 minutes to show that PCSS is not the definitive shadow rendering choice.

NV's PCSS has 3 major issues you failed to address:

1. NVIDIA PCSS fails to accurately portray the amount of detail present in the real world, and the detail it loses is often exaggerated.

2. It blurs the entire shadow around the characters/objects, but that is NOT how soft shadows work. Soft shadows do not mean the entire shadow is a blur.

3. It starts the transparency effect closer to the character, once again incorrect.

This example highlights all of these errors:

-> The shadow details from the leaves/tree are all washed out/degraded

-> The shadow being cast by the main character is rendered the wrong way --> in the real world, a shadow gets lighter and blurrier the farther it is from the object. In this instance it is clear NV's PCSS is rendering incorrectly.

NV's PCSS got it completely wrong: they rendered the shadow as the opposite of real life, with more blur and transparency near the character and more shadow detail away from the character.
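For reference, the real-world behavior described above (crisp near the contact point, a wider and softer penumbra the farther the shadow falls from the occluder) follows from a simple similar-triangles estimate, the same one used in the blocker-search step of percentage-closer soft shadows. A minimal Python sketch, assuming a flat area light of width `light_size` and distances measured from the light; the function name and the numbers in the loop are hypothetical:

```python
def penumbra_width(light_size: float, d_blocker: float, d_receiver: float) -> float:
    """Similar-triangles penumbra estimate for an area light.

    d_blocker:  distance from the light to the occluder (e.g. a character's leg)
    d_receiver: distance from the light to the surface receiving the shadow
    The penumbra is zero where occluder and receiver touch and grows with the gap.
    """
    if d_blocker <= 0 or d_receiver < d_blocker:
        raise ValueError("need 0 < d_blocker <= d_receiver")
    return (d_receiver - d_blocker) * light_size / d_blocker


# Hypothetical scene: a 1-unit-wide light with the occluder 10 units away from it.
light = 1.0
blocker = 10.0
for gap in (0.0, 0.5, 2.0, 5.0):  # distance between occluder and receiving surface
    w = penumbra_width(light, blocker, blocker + gap)
    print(f"gap {gap:>3}: penumbra width {w:.2f}")
```

A zero-width penumbra at the contact point is why a shadow should be sharp right at a character's feet and only soften as it stretches away.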

NVIDIA PCSS also tends to make the shadow lighter in color and more transparent, and in some cases it almost removes the shadowing from view altogether. The end result is that NV's PCSS often appears to mimic shadows in games from 5-7 years ago, where all the detail was completely washed out. If you think those are good shadows, that's your opinion, but I'd pick GTA V's Softest or AMD CHS implementation any day in these instances:

This also highlights all 3 errors I mentioned earlier:

-> transparency and lack of detail around the character's feet - wrong

-> blur for the entire character's shadow - wrong

-> lack of shadow detail from power lines/trees - wrong

HardOCP even commented "Another example of how shadows can seemingly disappear under NVIDIA PCSS is shown above. In the screenshots you can see these power lines are visible in the world and across the back of the legs of our character with Softest and AMD CHS. However, with NVIDIA PCSS the shadows are extremely hard to see. This is why we question the strength of diffusion into the distance of shadows under NVIDIA PCSS."

In fact, shadows are such a complex topic that it's not even clear whether PCSS, CHS or other implementations are superior. "It depends".

In the real world you can have defined shadows like this:

or completely blurry like this:

One could just as easily argue that in certain games/places, any of these implementations "could look like real life".

There are instances where CHS > PCSS, where PCSS > CHS, and where both are flawed and worse than alternative methods, and yet you paint PCSS as conclusively superior to CHS.
