https://www.youtube.com/watch?v=uUsRhZJzsqg

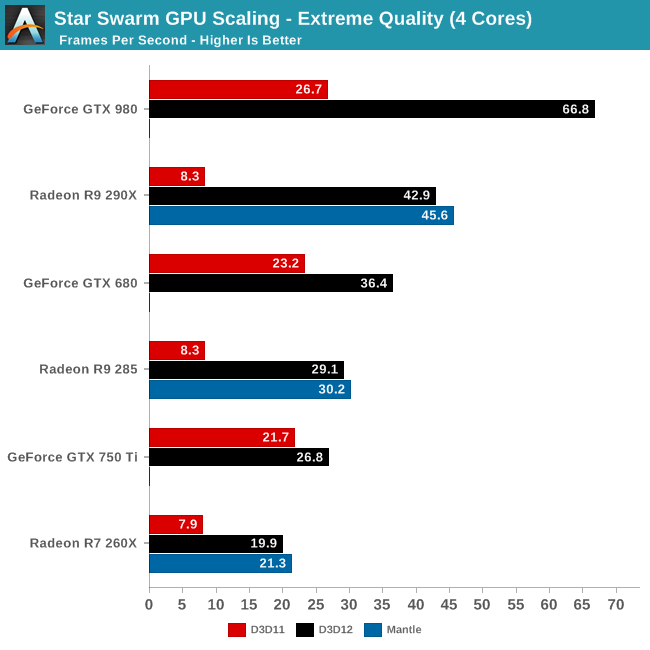

And he has a video of the 980 running DX12 Star Swarm:

http://www.anandtech.com/show/8962/the-directx-12-performance-preview-amd-nvidia-star-swarm
