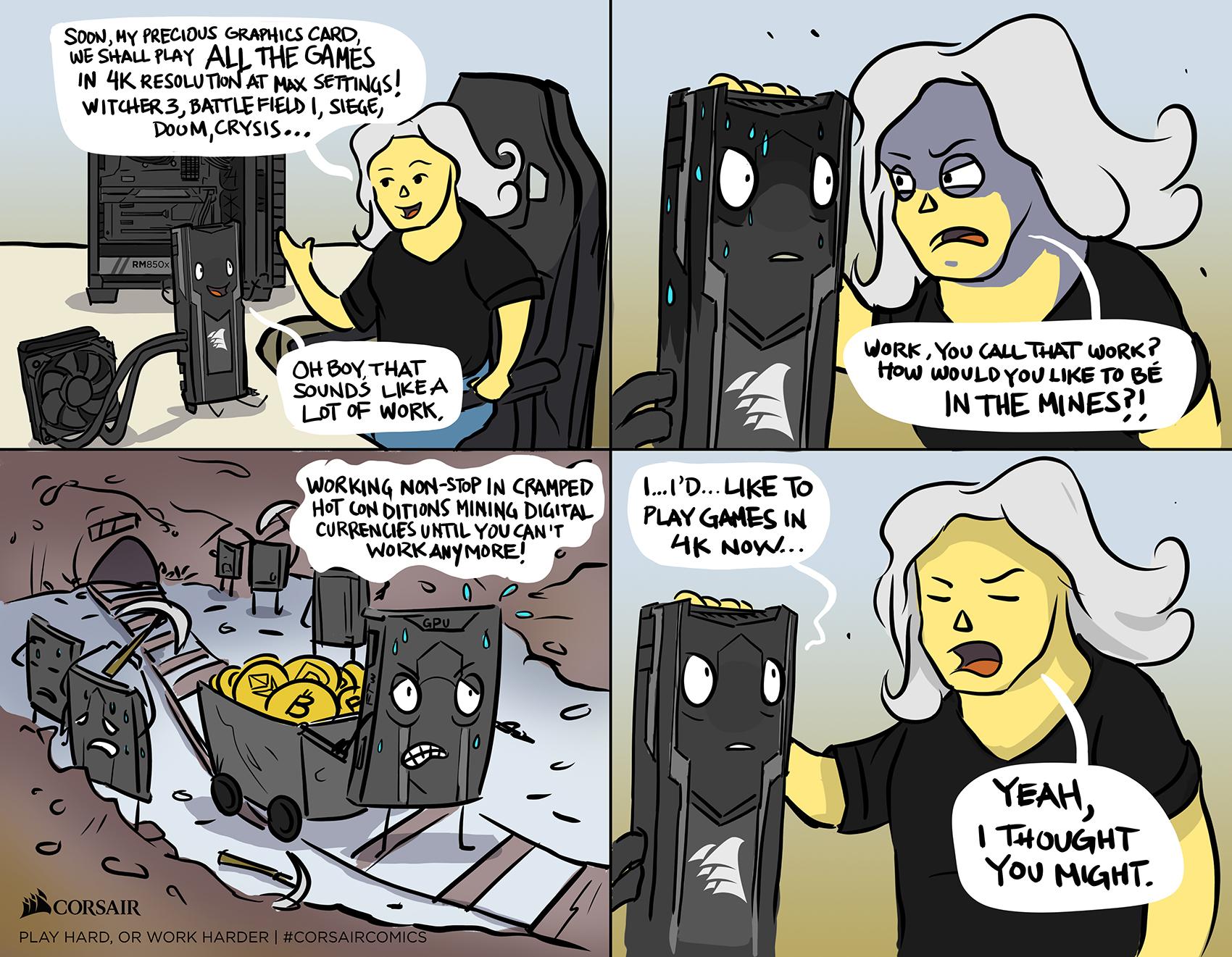

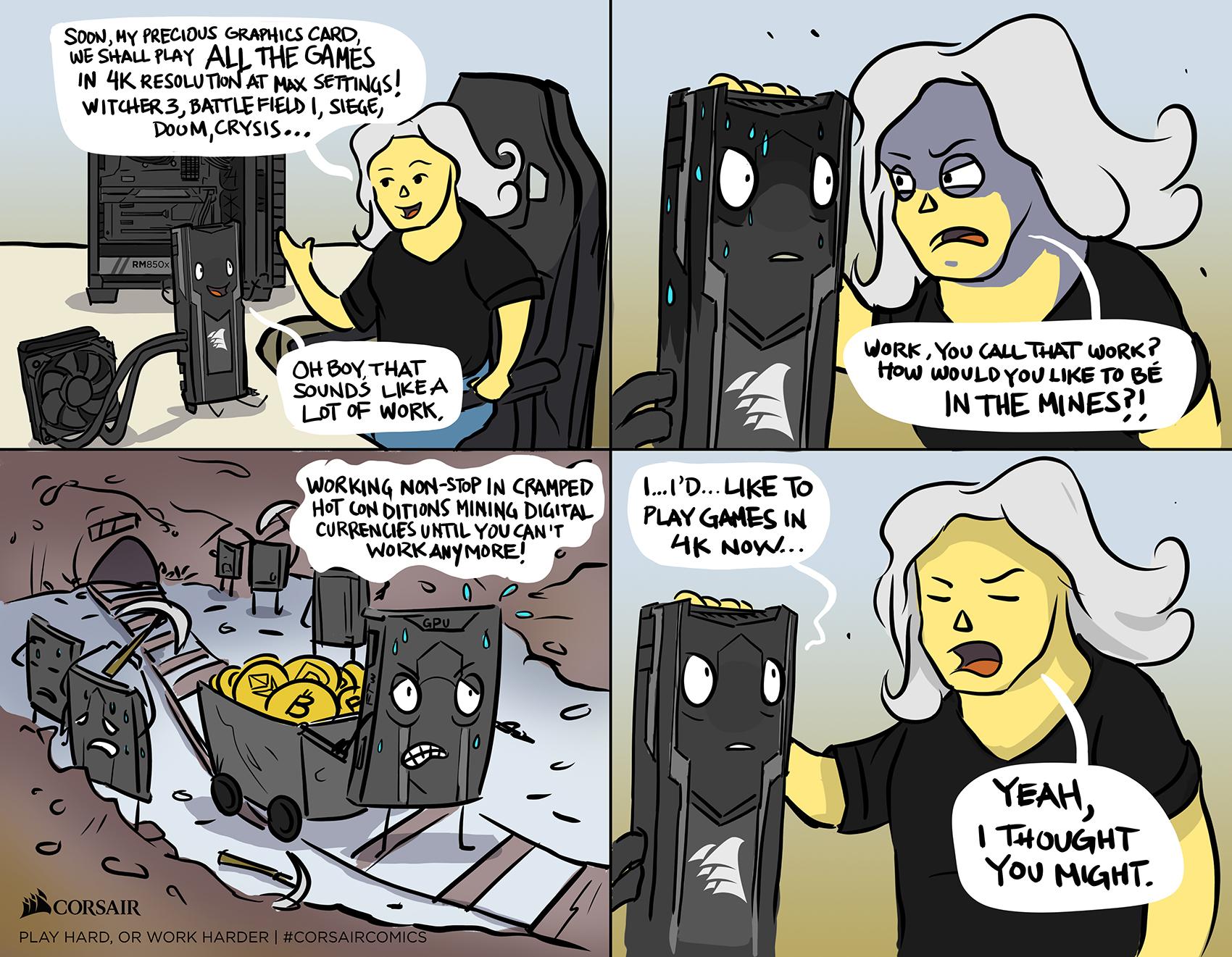

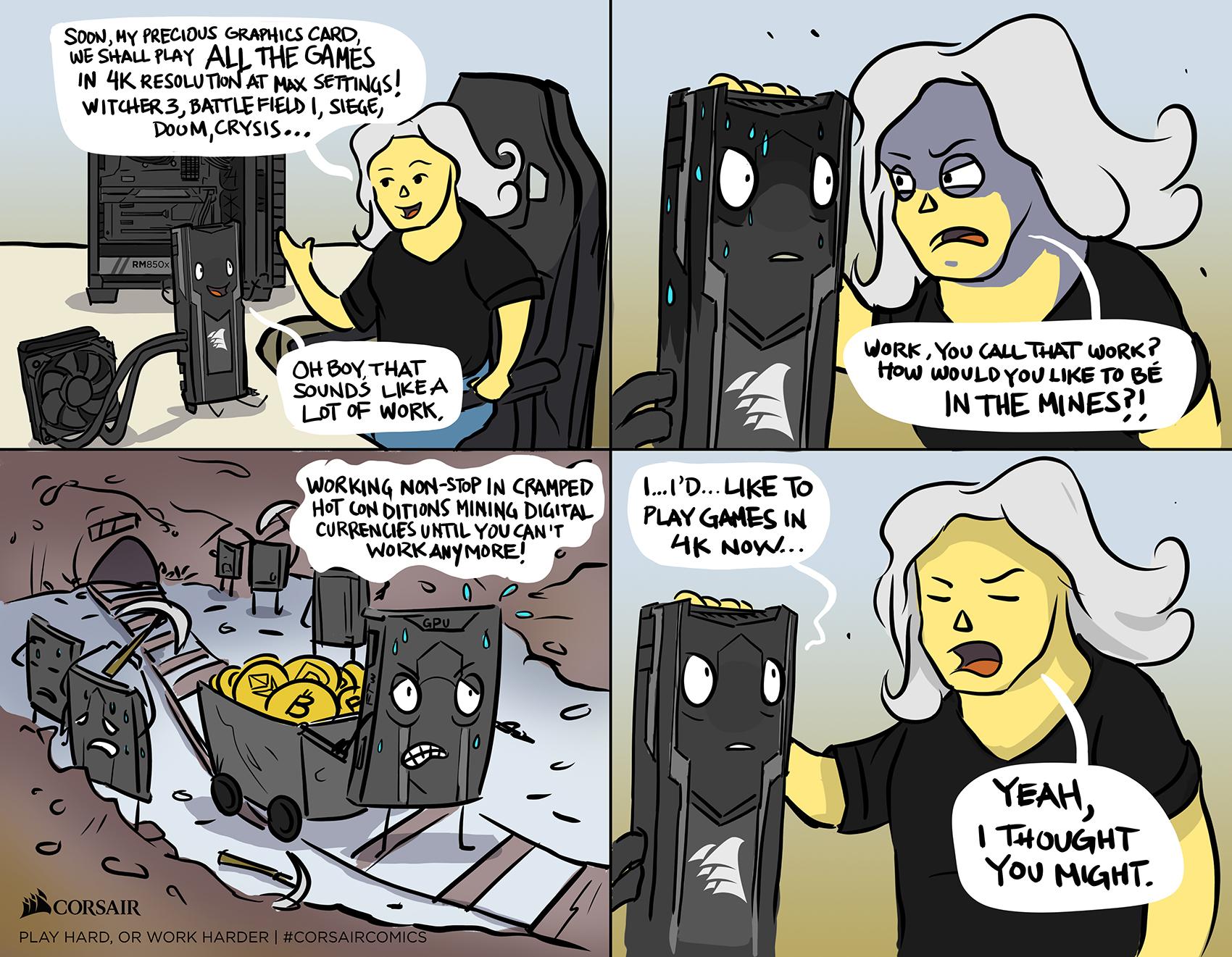

Vega had better be able to do 4K, or else...

Remove if off-topic, but I found it funny and thought it might lighten the mood around Vega.

As far as the desktop market goes, I think so too. Long term, they are going to have APUs and GPGPUs. They are probably just refactoring their GPU into something... That approach may or may not pay off later, but currently it looks like Vega is just a testbed for Infinity Fabric. Probably the whole point of Vega is to make a GPU architecture for their APUs. Or so it seems...

Yes, you can limit the maximum power draw through the drivers, at the cost of performance, but all power gating should already be enabled at the hardware level.

I would consider the 1080 mid-range: there's the 1080 Ti and the Titan above it, so it's two tiers down from the top.

Since when has the GTX 1080 been mid-range?? It's still NVIDIA's 2017 high-end gaming card. Not its top enthusiast card, but it's high-end premium.

And to count as competing, it should be beating it, power consumption aside.

Vega does NOT have professional, signed drivers. Those will come with the Radeon Pro WX 9100. What we see with Vega is just the difference between the throughput of Fiji and the throughput of Vega cores.

Even a W7100, with half the execution units of Fiji, bests it in these "Pro" benchmarks. That makes it blatantly obvious that drivers can unlock massive performance gains in these particular benchmarks.

It is MUCH more likely that all we are seeing here is the difference between Fiji's consumer drivers and the [semi-]Pro drivers on Vega RX.

This is actually just more evidence that the Vega FE is getting higher workstation scores than Fiji because of drivers, not HW advances.

In high-level layout, sure, it is Fiji, at first glance.

On paper it's actually really close to Fiji; just the clock speed is higher. Which leads to another question: how was that achieved? It does have a few improvements that should bring some amount of gain. I think those work well in some professional workloads, but they do not necessarily translate to games (although in this case it's kind of pointless to compare Vega FE to gaming cards in professional workloads, because of the drivers). Also, I think AMD is focusing way too much on compute.

So, did they reduce the IPC to get the clocks up, with the new improvements offsetting the lost IPC so that clock for clock it is more or less the same as Fiji? That could work IF you can get the clock speeds high enough. However, it looks like AMD has issues clocking it high enough, and that's likely the problem they are facing. It can't solely be the process's fault, since the GT 1030 runs surprisingly high clocks out of the box while being really energy efficient (I have a passively cooled one in our HTPC and it boosts well over 1700 MHz at stock). It uses a comparable process (14nm Samsung).

When RX comes out we'll find out for sure.
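To make the clock-versus-IPC question concrete, here is a back-of-the-envelope sketch that treats throughput as simply IPC times clock. The 1050 MHz and 1600 MHz figures are the Fury X and Vega FE clocks quoted elsewhere in this thread; the 0.9 IPC ratio and the 1.5x target are purely hypothetical, and the model deliberately ignores memory bandwidth and drivers.

```python
# Back-of-the-envelope model: relative performance ~ IPC ratio x clock ratio.
# Hypothetical illustration only; real GPU performance also depends on
# memory bandwidth, drivers, and the workload.

FIJI_CLOCK_MHZ = 1050   # Fury X clock as quoted in the thread
VEGA_CLOCK_MHZ = 1600   # Vega FE clock as quoted in the thread


def relative_perf(ipc_ratio: float, clock_ratio: float) -> float:
    """Speedup over the baseline when per-clock work scales by ipc_ratio
    and clock speed scales by clock_ratio."""
    return ipc_ratio * clock_ratio


def clock_needed(target_speedup: float, ipc_ratio: float, base_clock_mhz: float) -> float:
    """Clock (MHz) required to reach target_speedup at a given IPC ratio."""
    return base_clock_mhz * target_speedup / ipc_ratio


clock_ratio = VEGA_CLOCK_MHZ / FIJI_CLOCK_MHZ

# Scenario from the post: IPC losses exactly offset by the new features,
# so clock for clock Vega matches Fiji (ipc_ratio = 1.0).
print(f"same IPC: {relative_perf(1.0, clock_ratio):.2f}x")

# Hypothetical: the improvements only partly offset the IPC loss.
print(f"0.9x IPC: {relative_perf(0.9, clock_ratio):.2f}x")

# Hypothetical: clock needed for a 1.5x speedup if per-clock work is 0.9x Fiji.
print(f"clock for 1.5x at 0.9x IPC: {clock_needed(1.5, 0.9, FIJI_CLOCK_MHZ):.0f} MHz")
```

The point the sketch illustrates is the "IF" in the post: any per-clock loss has to be paid back with extra clock speed, so a design that leans on frequency is only as good as the clocks it actually reaches.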

1600/1050 is a 52% increase. That should be enough to beat the GTX 1080 by 20%. The problem is that Vega is bottlenecked by memory bandwidth, and it's not even close to being 52% faster than the Fury X.

Also, it's still GCN, so it eats power like two tanks. I don't get why they went with 2048-bit. This is another huge mistake by the design team.

If they increase shader throughput by 50%, they need to increase memory bandwidth by the same amount, not cut it to less than what the Fury X has.
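The scaling argument can be put into numbers. This is a rough sketch using the Fury X and Vega clock and bandwidth figures quoted in this post, under the simplifying assumption that shader throughput scales linearly with core clock and nothing else changes.

```python
# Rough check of the "scale bandwidth with shader throughput" argument.
# Assumes shader throughput scales linearly with core clock and that
# everything else is equal; figures are the ones quoted in this post.

FURY_X_CLOCK_MHZ = 1050
VEGA_CLOCK_MHZ = 1600
FURY_X_BW = 512.0   # GB/s
VEGA_BW = 484.0     # GB/s

# Shader throughput gain implied by the clock increase (~1.52x).
shader_scale = VEGA_CLOCK_MHZ / FURY_X_CLOCK_MHZ

# Bandwidth Vega would need to keep Fury X's bandwidth-to-throughput ratio.
needed_bw = FURY_X_BW * shader_scale

# Bandwidth per MHz of core clock on Vega, relative to Fury X.
per_clock_ratio = (VEGA_BW / VEGA_CLOCK_MHZ) / (FURY_X_BW / FURY_X_CLOCK_MHZ)

print(f"shader throughput increase: {100 * (shader_scale - 1):.0f}%")
print(f"bandwidth to keep Fury X's ratio: {needed_bw:.0f} GB/s")
print(f"Vega bandwidth per clock vs Fury X: {per_clock_ratio:.2f}x")
```

Under these assumptions, Vega would need roughly 780 GB/s to feed its shaders the way the Fury X does, and it gets only about 0.62x of Fury X's bandwidth per clock.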

It's as if NVIDIA had made the GP102 Titan Xp with a 256-bit bus and slow 7 GHz memory. That's exactly what Vega is.

Titan Xp: 548 GB/s

980 Ti: 336 GB/s

That's +63%.

Fury X: 512 GB/s

Vega: 484 GB/s

That's -6%.
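For anyone who wants to check the figures above: peak memory bandwidth is the bus width in bytes times the per-pin data rate. This minimal sketch reproduces the percentages from the quoted numbers and the hypothetical 256-bit GP102. Reading "7 GHz" as 7 Gbps effective per pin, and 1 Gbps per pin for the Fury X's 4096-bit HBM, are my assumptions, not figures from the post.

```python
def bandwidth_gbps(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def implied_rate_gbps(bandwidth: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbps) needed to reach a given bandwidth on a bus."""
    return bandwidth / (bus_width_bits / 8)


def pct_change(new: float, old: float) -> float:
    """Percent change from old to new."""
    return 100.0 * (new - old) / old


# Percent changes from the figures quoted in the post.
print(f"Titan Xp vs 980 Ti: {pct_change(548, 336):+.1f}%")
print(f"Vega vs Fury X:     {pct_change(484, 512):+.1f}%")

# The hypothetical GP102 from the post: 256-bit bus, assumed 7 Gbps per pin.
print(f"256-bit @ 7 Gbps:   {bandwidth_gbps(256, 7.0):.0f} GB/s")

# Fury X sanity check, assuming 4096-bit HBM at 1 Gbps per pin.
print(f"4096-bit @ 1 Gbps:  {bandwidth_gbps(4096, 1.0):.0f} GB/s")

# Per-pin rate implied by 484 GB/s on Vega's 2048-bit bus.
print(f"Vega implied rate:  {implied_rate_gbps(484, 2048):.2f} Gbps")
```

The exact changes come out to +63.1% and -5.5%, which the post rounds to +63% and -6%. Halving the bus width to 2048-bit means each pin has to run at nearly twice the Fury X rate just to land slightly below it.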

You folks may have the data, but you lack the objectivity. As for me, I'll hold off until it's out; no rush.

The Titan is based on the same GP102 as the 1080 Ti. Unless every SKU counts as a tier for you, that's one tier down from the top.

And if you count every SKU and the 1080 is mid-range, then what's the 1070? And the 1060? Rinse and repeat... and the 1030? :>

The biggest mouths around here clearly have an agenda to make 'their' company look best.

The 1080 is the third card down the stack any way you look at it.

http://www.tomshardware.com/reviews/nvidia-titan-xp,5066.html

The Titan Xp (2017) is a full GP102 chip; the 1080 Ti is also a GP102 but has fewer shaders and ROPs and a narrower memory interface.

I didn't think a 1080 could ever be called "mid-range". I always thought that was the $250-400 range... but maybe nV knows better.

Probably the two 8-Hi stacks. Doesn't matter that much for professional workloads, but for gaming the results are extremely bad.

PCGH tested the card, and now we at least know where the problem lies:

http://www.pcgameshardware.de/Vega-...elease-AMD-Radeon-Frontier-Edition-1232684/3/

They tested the B3D suite, and you can clearly see that the effective bandwidth at the moment is terrible. Black-texture bandwidth with color compression is at Fury level, and random-texture bandwidth is even way lower than Fury's. Whatever the reason for this low bandwidth might be, it's the reason for the slow performance. It's just starving for bandwidth.