The core count thing can be simplified to "are they counting cores based on FP or Int units?". I'm guessing FP units, as each core showing up as 2 in Task Manager makes more sense that way. So 4/8 would be 4 cores, 8 threads. It's a high-end chip though, so they may do 8 cores (16 threads), but I doubt it.

This is really confusing in terms of definitions, but the building block is the "Bulldozer Module", each one able to run 2 threads thanks to its "mini-cores".

You can read that in the Server Platforms PDF from their Analyst Day, found at:

http://phx.corporate-ir.net/phoenix.zhtml?c=74093&p=irol-analystday

What we currently call a core corresponds to the "Bulldozer Module".

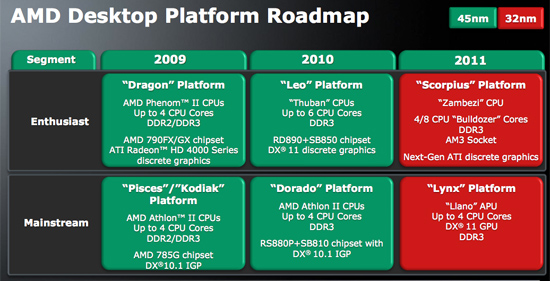

That is the unit they mean when they say 4/8 cores, and in the Client Platform PDF the "Scorpius Platform" is described as having 4-8 (I read this as 4 to 8) 32nm Bulldozer cores.

Now, it gets confusing, because AMD describes their way of doing "hyper threading" as having 1 "mini-core" (as IDC said; if anyone knows of a better word to describe it, please be my guest) per thread.

As AMD told Anand, when they say 1 Bulldozer Core, they are referring to the unit capable of doing 2 threads, not the individual "mini-core".
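To keep the terms straight, here is a quick sketch of the counting as I understand it. It assumes exactly what is said above (1 Bulldozer core = 1 module = 2 "mini-cores" = 2 threads); the function and constant names are made up for illustration and nothing here comes from AMD.

```python
# Assumption taken from the discussion above, not from any AMD spec:
# one Bulldozer core (module) holds 2 "mini-cores", one per thread.
MINI_CORES_PER_MODULE = 2
THREADS_PER_MINI_CORE = 1


def summarize(bulldozer_cores: int) -> dict:
    """Translate a count of Bulldozer cores (modules) into mini-cores and threads."""
    if bulldozer_cores < 0:
        raise ValueError("core count cannot be negative")
    mini_cores = bulldozer_cores * MINI_CORES_PER_MODULE
    return {
        "bulldozer_cores": bulldozer_cores,
        "mini_cores": mini_cores,
        "threads": mini_cores * THREADS_PER_MINI_CORE,
    }


if __name__ == "__main__":
    # The core counts that come up in this thread.
    for n in (4, 8, 16):
        print(summarize(n))
```

Under that reading, a "4 core" part would show up as 8 threads and an "8 core" part as 16.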

From all this, I infer that AMD will release a CPU with 8 of those Bulldozer cores (or modules) per die for the desktop, and up to 16 of them for servers.

What I still don't understand is how AMD will build their dies. Are these quad-cores, with an octo-core being 2 quads MCM'ed? Or can AMD just drop these things in individually, as in "want 12 cores, just put 12 modules; want 16, put 16 modules", instead of having to MCM 3 or 4 quads?

"Valencia" is 6 cores and 8 cores. Does that means 2 MCM'ed quads with 2 disabled cores?