cbn

Here is an example of what I hope doesn't happen with Raven Ridge.

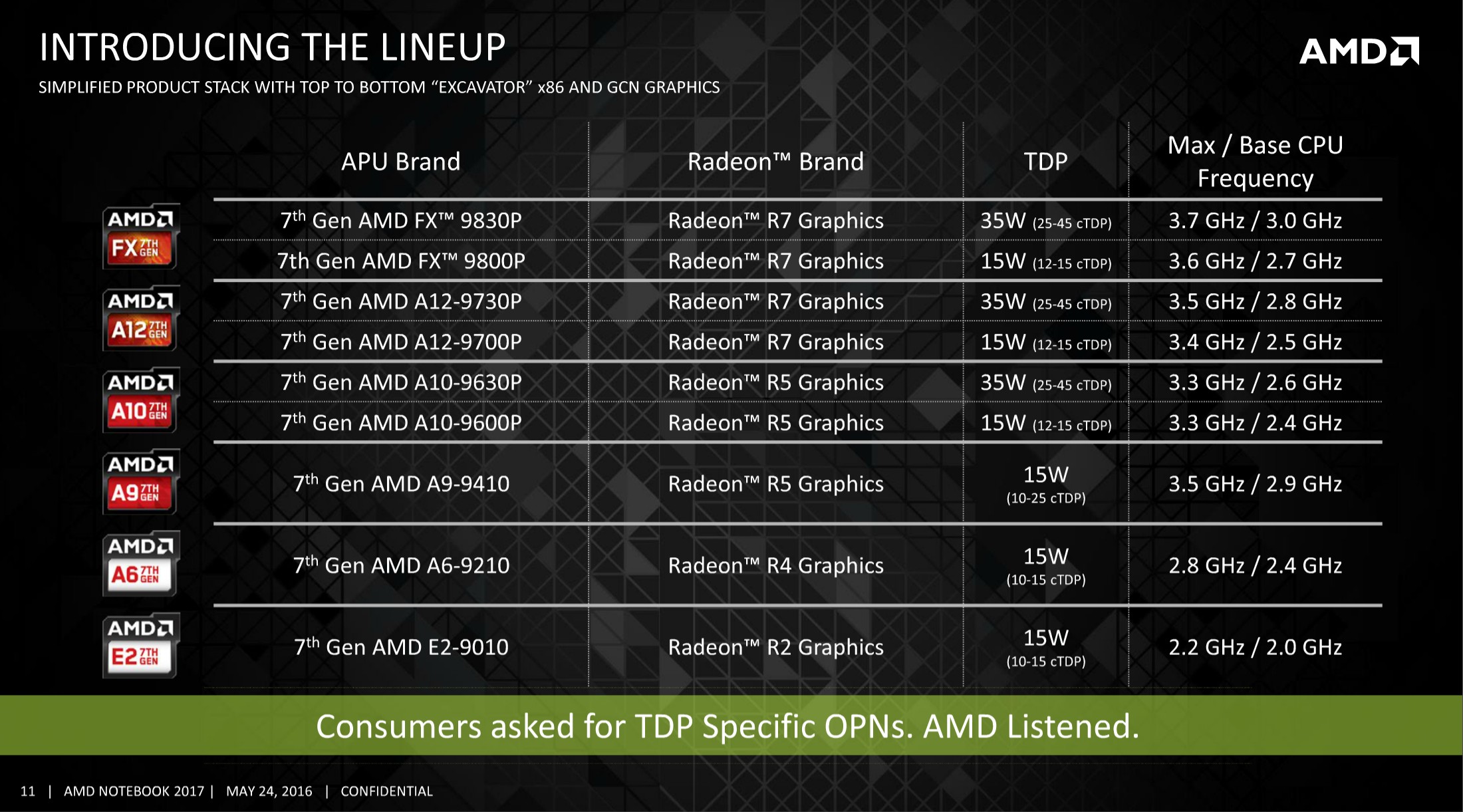

HP uses two DDR4 SO-DIMMs in the reviewed laptop, but chose to pair a 15W Bristol Ridge APU with an Oland Jet dGPU instead of using a 35W Bristol Ridge APU.

Some quotes from the review:

We found the dedicated Radeon R7 M440 GPU of our test laptop relatively disappointing. It rarely performed faster than the integrated Radeon R5 and, when it did, the IGP was suffering performance drops due to its limiting TDP. Instead, HP should have installed the higher-performance A10-9630P (35-watt). This would have boosted CPU and GPU performance. This statement is supported by the fact that the CrossFire setup did not produce higher frame rates in any of the games we tested.

Performance Consistency - Sustaining High CPU and GPU Loads

In games, the TDP limit is temporarily exceeded. However, after a maximum of two minutes (depending on the previous load and temperatures of the laptop), the 15-watt limit strikes back, dropping clock speeds. For example, in “Diablo III”, the CPU and GPU clock speeds start at 1800 and 550 MHz respectively. As the game runs, the speeds drop to 1100 to 1200 (CPU) and 380 to 420 MHz (GPU). The frame rates drop in parallel in our static gaming scene from 72 to 49 fps.

We want to praise the AMD IGP. Once again, the manufacturer steals the performance crown in the 15-watt segment. The only Intel IGP capable of surpassing the AMD competitor is the Iris Graphics 540, which is very expensive. We hope that many manufacturers will install Bristol Ridge without a dedicated graphics card and thus make good use of this strong advantage. Dual Graphics can only create a large lead over the competition in synthetic benchmarks. When running demanding applications, such as games, the setup does not offer much better performance.
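
To put the throttling figures quoted above in perspective, here is a rough sketch that works out the relative drops. The numbers are the ones from the review's "Diablo III" test; the helper name `percent_drop` and the variable names are my own, for illustration only.

```python
def percent_drop(before, after):
    """Return the relative drop from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


# Figures quoted in the review for "Diablo III".
cpu_start_mhz, cpu_throttled_mhz = 1800, (1100, 1200)
gpu_start_mhz, gpu_throttled_mhz = 550, (380, 420)
fps_start, fps_throttled = 72, 49

# The review gives a range for the throttled clocks, so compute both ends.
cpu_drops = [percent_drop(cpu_start_mhz, mhz) for mhz in cpu_throttled_mhz]
gpu_drops = [percent_drop(gpu_start_mhz, mhz) for mhz in gpu_throttled_mhz]
fps_drop = percent_drop(fps_start, fps_throttled)

print(f"CPU clock drop: {min(cpu_drops):.1f}% to {max(cpu_drops):.1f}%")
print(f"GPU clock drop: {min(gpu_drops):.1f}% to {max(gpu_drops):.1f}%")
print(f"Frame rate drop: {fps_drop:.1f}%")
```

By this arithmetic, the frame rate loses roughly a third once the 15-watt limit kicks in, which is in the same ballpark as the clock speed reductions.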
