LOL_Wut_Axel

Diamond Member

- Mar 26, 2011

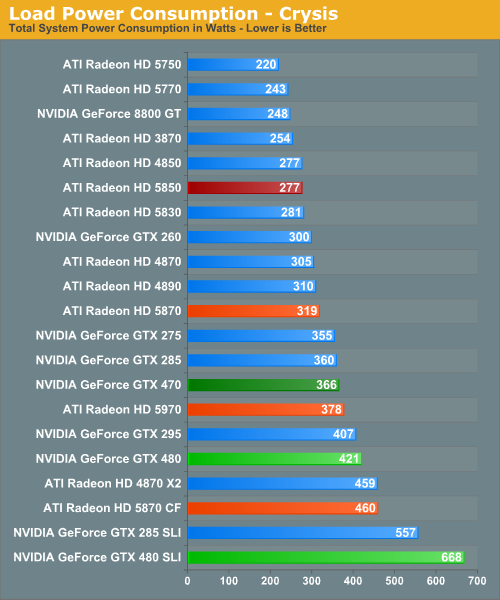

> Ya, pretty much, with some minor changes (HDMI 1.4, UVD 3.0, some reduction in unneeded shaders / TMUs). But my main concern is that AMD is simply looking to shift the VLIW-4 HD 6950/6970 chips to 28nm as HD 7850/7870 chips. Sure, they might get a more than 50% reduction in power consumption, but that means almost no performance improvement. Is that what consumers want from discrete desktop GPUs? I think 20-30% more performance in the mid-range at a 170-180W power envelope would have been far preferable imo. It's good to keep increasing mid-range performance, since a lot of buyers want these cards.

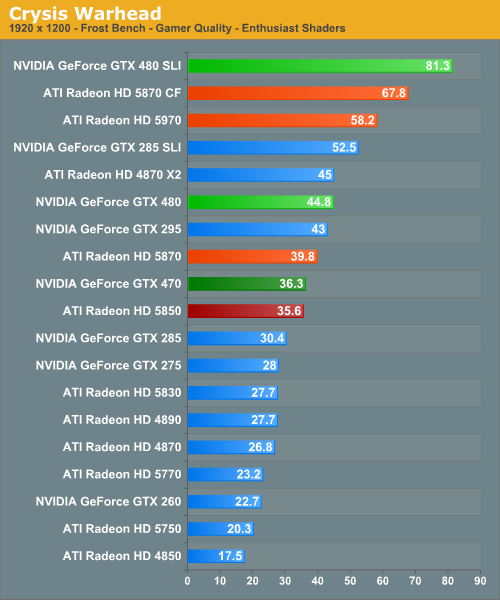

I'm sorry, but what are you ranting on about? They're giving people yesterday's Enthusiast card performance at a Performance card price with Mainstream card power consumption.

The Radeon HD 6970 is 25% faster than the Radeon HD 6870, so it's not "almost no performance improvement". In any case, there's probably still some performance improvement over the 6970. I'd say around 35% faster than the 6870 would be about right.
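To put those numbers side by side, here's a rough sketch. It assumes the HD 6870 is normalized to 1.0, takes the 25% figure from above, and treats the ~35% estimate for the new 28nm part as the guess it is. The variable names are just illustrative. It also shows what a flat-performance, 50%-lower-power chip would mean for perf/W.

```python
def relative_gain(new: float, old: float) -> float:
    """Fractional speedup of `new` over `old` (0.25 means 25% faster)."""
    return new / old - 1.0

# Performance normalized so the HD 6870 = 1.0 (assumption for illustration).
hd6870 = 1.0
hd6970 = hd6870 * 1.25             # "25% faster than the 6870"
new_28nm_estimate = hd6870 * 1.35  # speculative ~35% guess for the new card

# Implied speedup of the new card over the HD 6970.
gain_over_6970 = relative_gain(new_28nm_estimate, hd6970)
print(f"Implied gain over the HD 6970: {gain_over_6970:.1%}")

# If performance stayed flat while power dropped by 50%,
# performance per watt would scale by 1 / (1 - 0.5).
power_cut = 0.50
perf_per_watt_ratio = 1.0 / (1.0 - power_cut)
print(f"Perf/W ratio at equal performance: {perf_per_watt_ratio:.1f}x")
```

So a card ~35% faster than the 6870 would only be about 8% faster than the 6970, while a same-speed chip at half the power would roughly double perf/W.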